drutman

Gawd

- Joined: Jan 4, 2016
- Messages: 622

Problem is, if I hold out for every max spec, the only card will be a $10K AI data center, professional-oriented card.

> Yes, hold out for the 48 GB cards.



I gotta say I'm actually impressed with the power usage on my 4090. Even when I have the slider maxed for 520W, power usage stays solid and never has jumps/spikes. My 3090 was all over the place when gaming.

> First strike = using the RM series

> Second strike = full TDP of the 4090 Ti would be 600W for the FE vs. 450W for the standard 4090

> My PC uses 650-700W with a regular 4090. Potentially adding another 150W pushes you to the edge of an 850W PSU, especially since you need to take transient spikes into account. They're not as bad as they were with Ampere, but they're still there.
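The PSU math in that quote can be sketched quickly in Python. The 700W, 150W and 850W figures come from the post; the 600W GPU share and the 1.3x transient multiplier are hypothetical placeholders, since real spike behaviour depends on the specific card and PSU.

```python
# Rough PSU headroom arithmetic for the figures quoted in the thread.
# The transient model is a hypothetical simplification: it scales only the
# GPU's share of system draw by a fixed multiplier.


def psu_load_fraction(system_watts: float, psu_watts: float) -> float:
    """Steady-state system draw as a fraction of the PSU's rated output."""
    return system_watts / psu_watts


def peak_with_transient(system_watts: float, gpu_watts: float,
                        transient_factor: float) -> float:
    """Estimate a brief peak by multiplying only the GPU's portion of the draw."""
    return (system_watts - gpu_watts) + gpu_watts * transient_factor


if __name__ == "__main__":
    psu = 850.0
    current = 700.0            # upper end of the 650-700W quoted for a 4090 system
    with_ti = current + 150.0  # the extra ~150W for a 600W 4090 Ti
    print(f"4090 build:    {psu_load_fraction(current, psu):.0%} of PSU rating")
    print(f"4090 Ti build: {psu_load_fraction(with_ti, psu):.0%} of PSU rating")
    # Hypothetical: 600W of the total is the GPU, spiking briefly to 1.3x
    peak = peak_with_transient(with_ti, gpu_watts=600.0, transient_factor=1.3)
    print(f"Brief peak estimate: {peak:.0f}W vs {psu:.0f}W rating")
```

At 100% steady load there is no margin left for any transient at all, which is the point the quoted post is making.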

Think of the kids

> I am thinking of either buying a 4070 Ti or a 4080. My kid needs a new GPU, and my 3070 Ti would be a good upgrade from his 980 Classified.

Dammit.

I was looking into new TV technology recently, and the latest and greatest is something called MicroLED. The 110" from Samsung is currently $150k, and you can buy it in a showroom like it's 1988. Kinda makes you laugh at the price tag of the 4090. The luxury market is out of most of our leagues.

> So I think that's going to be the target audience of the 4090 Ti, people who want the best, with no asterisk.

My 4090 is already more than enough at 4K; WTF will I do with a 4090 Ti other than heat my house in the winter with 600W+ of power?

Seriously though, not a single game struggles with this thing, and those that need a bump have DLSS 3.0 Frame Generation and work remarkably well. I generally always try to snag the top of the line at a series release, but this card might give me pause when the 5xxx series launches in late 2024 (if history is any indication).

I can't say I'll ever get excited for a GPU that expensive, either. Back when everyone was fawning over the 8800 GTX, I felt lucky just to be able to afford an 8800 GTS 320MB and get in on the unified shader bandwagon. Luckily I finally got a 2080 Ti (and, not even a month later, by dumb fucking luck, a 3080) after years of wanting an RTX GPU but abstaining because they were (for me) just so prohibitively expensive for someone in my position.

> Can't say I'll ever be excited for a $1000+ GPU. Maybe if PC gaming was my only hobby, or if I had completed a game on PC in the past few years. I just now upgraded my 2080 to a 3080 12GB, just to stay somewhat current and also to hand my 2080 down to my son.

But if they are not your kidneys...!

> I don't see a major draw to the 40 series over the 30 series... I'd rather hold out for the 50 series before selling any kidneys, heh.

If you don't pay your nVIDIA GPU payment, Jensen will send the *Repo Men* to take your kidneys (or heart, lungs...) while you are still awake and conscious, just slicing right into you with gusto like in the movie of the same name. Have fun!

> But if they are not your kidneys...!

No, the 4090 is DP 1.4a. 240Hz at 4K.

> Is it still a DisplayPort 2.0 card with 120Hz 4K max?

I feel like those who complain about the power draw of the 4090 don't even have one (not you specifically, in general). Mine uses less power than my 3090 did, and that's with a power increase to 520W and an overclock on my 4090! At stock it rarely pushes past 450W while gaming, and if you underclock or undervolt, you use way less power with minimal performance loss. The card is a beast.

> Really hoping NV focuses more on efficiency for the 5090, and that these crazy high-wattage cards that burn up are a thing of the past.

If I owned a 4090 the first thing I would do is undervolt it.

Great point. The 4090 is an amazingly power-efficient card. Nvidia just gave it a pretty large power ceiling to play with, but it really doesn't pull all that power. And when tuned, it uses a ridiculously low amount of power for the performance delivered.

> I feel like those who complain about the power draw of the 4090 don't even have one (not you specifically, in general). Mine uses less power than my 3090 did, and that's with a power increase to 520W and an overclock on my 4090! At stock it rarely pushes past 450W while gaming, and if you underclock or undervolt, you use way less power with minimal performance loss. The card is a beast.

Your budget is better than mine; I am waiting for the 5000 series so I can snag an ancient 3090 Ti.

> 3070 has been fine for me.

> I'll get a 4090 when the 5000 series comes out.

Yeah, and this is what I think people want but misread. Just because you CAN pull 600W doesn't mean you are going to or need to. It allows those of us who are [H]ard to push our hardware to the limits for benchmarks or games, gives the average user great default settings, and gives those who like to keep things even cooler with less power the ability to do so as well. I have used my card in all variations and enjoy that freedom.

> Great point. The 4090 is an amazingly power-efficient card. Nvidia just gave it a pretty large power ceiling to play with, but it really doesn't pull all that power. And when tuned, it uses a ridiculously low amount of power for the performance delivered.

Don't do it; I made that mistake earlier this year. I bought the LG OLED to see if I could go back to 1440p. Absolutely not: way too much aliasing and detail loss. Every game looked blurry with post-process AA (TAA/FXAA/DLSS/FSR), and MSAA looked OK but requires forward rendering on modern engines. I ended up refunding it.

> With current business practices by AMD and Nvidia and the ridiculous prices, I'd rather downgrade my monitor from 4K to 1440p.

The 4090 is leaps and bounds better than a 4080, depending on what resolution you game at... at 4K, it is king, no debate.

> I know I said I'd take the 4090 Ti if someone bought it for me, but if all you can afford is the 4090, I'm okay taking that... thanks. ;-)

On a more serious note, has the 4080 come down in price in the USA? Only at Micro Centers, or elsewhere too?

If I had some extra cash, I'd probably get a 4080 or 4090. The most I've spent on a video card is probably my 3060 (about $500 CAD), which I then sold before buying a used 3080. If I sold the 3080, would that be a big chunk towards the next card? But I think their value has gone down: I've seen them advertised on buy-and-sell sites for about $50-$100 less than I paid for mine.

The 4080/4090 are interesting to me because they are what I call all-purpose cards (productivity, gaming, etc.), with lower power consumption and better power efficiency than the 30 series (Ampere). Is the narrower memory bus the only major downgrade with this series? The 4090 isn't cut down as much, though?

Do you use Facebook Marketplace? Some used 4090s on there are the same price as used 4080s, which is peculiar. I dunno if it's a scammer or if the ad is still up even though the card has sold; I thought surely someone would have bought it, since it's under the retail price. Anyway, I was wondering if I could save up and just add the savings to the sale of my 3080. Probably crazy, and if the ads are somehow old and the cards are sold, or it's some sort of scam or false ad, then it's not doable. I'm not paying retail for any of those.

> The 4090 is leaps and bounds better than a 4080, depending on what resolution you game at... at 4K, it is king, no debate.

I just bought a 3090 off Marketplace real cheap, for $550.

> Do you use Facebook Marketplace? Some used 4090s on there are the same price as used 4080s, which is peculiar. I dunno if it's a scammer or if the ad is still up even though the card has sold; I thought surely someone would have bought it, since it's under the retail price. Anyway, I was wondering if I could save up and just add the savings to the sale of my 3080. Probably crazy, and if the ads are somehow old and the cards are sold, or it's some sort of scam or false ad, then it's not doable. I'm not paying retail for any of those.

The 4090, obviously, ticks all the boxes: it's good for gaming AND productivity and has plenty of VRAM. I'm only considering a 3090 (the cheapest upgrade), a 4080, or a 4090. I thought about a 4070 Ti, but the 192-bit bus is really infuriating and I'm worried about its 12 GB of VRAM. The efficiency of Ada is a good selling point for me, though: I require a quiet card, and shouldn't the Ada cards be easier to keep quiet than the AMD 7900 cards or even Ampere?
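To put the bus-width worry in numbers: theoretical peak memory bandwidth is the bus width in bytes times the per-pin memory data rate. A minimal Python sketch, assuming the reference-card memory specs below (partner cards may clock memory differently):

```python
# Theoretical peak memory bandwidth = (bus width in bits / 8) * per-pin data rate.
# Specs are the reference-card figures; partner cards may differ.


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits / 8 * data_rate_gbps


CARDS = {
    # name: (bus width in bits, memory data rate in Gbps per pin, VRAM in GB)
    "RTX 3090": (384, 19.5, 24),
    "RTX 4070 Ti": (192, 21.0, 12),
    "RTX 4080": (256, 22.4, 16),
    "RTX 4090": (384, 21.0, 24),
}

if __name__ == "__main__":
    for name, (bus, rate, vram) in CARDS.items():
        bw = peak_bandwidth_gbs(bus, rate)
        print(f"{name:12s} {bus}-bit  {vram:2d} GB  {bw:7.1f} GB/s")
```

This works out to 504 GB/s for the 4070 Ti's 192-bit bus versus 1008 GB/s for the 4090. Raw bandwidth is not the whole story, though: Ada cards carry a much larger L2 cache than Ampere, which Nvidia uses to offset the narrower buses.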

I did the same because of all the reports that there won't be 5000 series cards until 2025. This way, I can be really pissed when they surprise us all and announce them for release in Q4 of 2023.

> Just bought a 4090 because the pricing finally seems to have stabilized recently. A Ti would be nice, but I think we all know the same thing will happen all over again: the card drops, and you either pay the scalper price or wait another 6+ months for prices to stabilize again, then finally get one at MSRP.

No, 1.4a only supports up to HBR3, which is limited to 120 Hz at UHD 4K using 8-bit color and 165 Hz when DSC is used (98/120 Hz using 10-bit color). You can't hit 240 Hz at UHD 4K without using UHBR 13.5, which is only in the 2.0/2.1 specification.

> No, the 4090 is DP 1.4a. 240Hz at 4K.

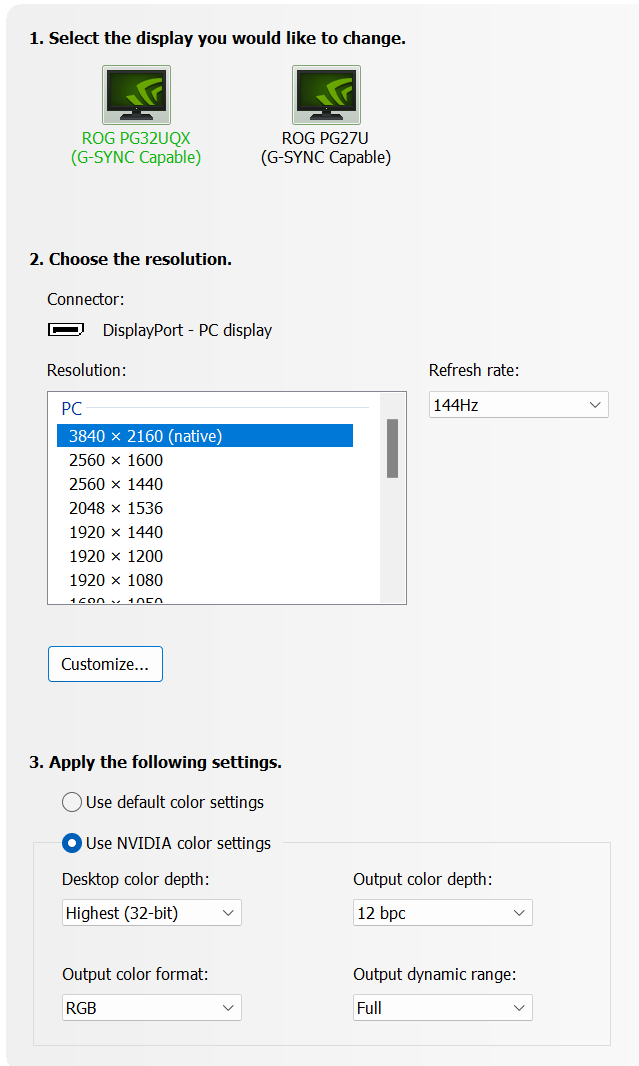

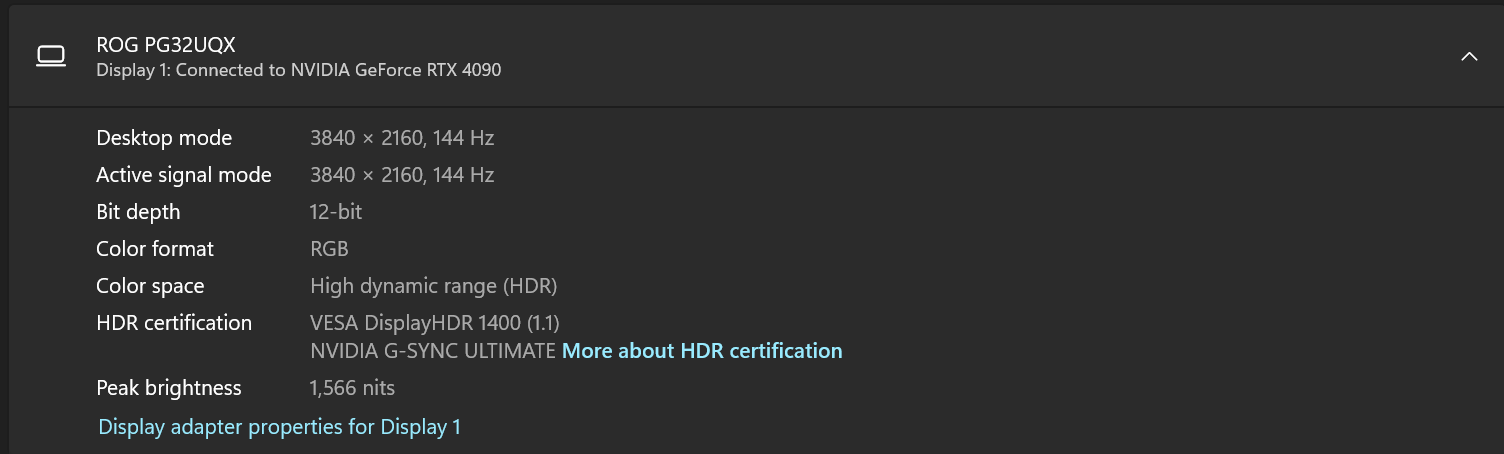
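The bandwidth argument can be sketched in Python. It counts only active pixels (blanking adds more on a real timing), so a mode that exceeds the link payload is conclusive, while one that stays under it might still not fit. The link figures are the standard per-lane rates over four lanes: 8.1 Gbps for HBR3 with 8b/10b coding, and 13.5 Gbps for UHBR13.5 with 128b/132b coding.

```python
# Uncompressed 4K data rates vs. 4-lane DisplayPort payloads.
# Only active pixels are counted, so these rates are lower bounds: real
# timings add horizontal/vertical blanking on top.

HBR3_GBPS = 4 * 8.1 * 8 / 10          # 8b/10b line coding -> 25.92 Gbps
UHBR13_5_GBPS = 4 * 13.5 * 128 / 132  # 128b/132b line coding -> ~52.36 Gbps


def active_rate_gbps(width: int, height: int, refresh_hz: float,
                     bits_per_channel: int, channels: int = 3) -> float:
    """Uncompressed RGB / 4:4:4 data rate of the active pixels, in Gbps."""
    return width * height * refresh_hz * bits_per_channel * channels / 1e9


if __name__ == "__main__":
    for hz, bpc in [(120, 8), (120, 10), (144, 8), (240, 8)]:
        rate = active_rate_gbps(3840, 2160, hz, bpc)
        # Exceeding the payload with active pixels alone means it cannot fit
        # uncompressed; staying under it only means it *might* fit.
        print(f"4K {hz}Hz {bpc}-bit: {rate:5.2f} Gbps | "
              f"exceeds HBR3: {rate > HBR3_GBPS} | "
              f"exceeds UHBR13.5: {rate > UHBR13_5_GBPS}")
```

With these numbers, 4K at 120 Hz 8-bit is the last of the listed modes that stays under the HBR3 payload; 10-bit at 120 Hz and 8-bit at 144 Hz already exceed it uncompressed, which is where DSC or chroma subsampling comes in.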

Uhhhhhhhh.... I'm running a 4090 on a 4K monitor using DSC with 12-bit color at 144Hz.

> No, 1.4a only supports up to HBR3, which is limited to 120 Hz at UHD 4K using 8-bit color and 165 Hz when DSC is used (98/120 Hz using 10-bit color). You can't hit 240 Hz at UHD 4K without using UHBR 13.5, which is only in the 2.0/2.1 specification.


No, you're not, unless you're using chroma subsampling. The PG32UQX is also a 10-bit panel.

> Uhhhhhhhh.... I'm running a 4090 on a 4K monitor using DSC with 12-bit color at 144Hz.

I am absolutely not running chroma subsampling... I had to on my PG27UQ, but I do NOT have to on my PG32UQX... DSC will get you 4K 144Hz 12-bit color easily, and I believe it goes up to 240Hz at 12-bit as well (which is what the G8 OLED uses, although that monitor may be 10-bit only).

> No, you're not, unless you're using chroma subsampling. The PG32UQX is also a 10-bit panel.
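Both sides of this exchange come down to bits per pixel. A Python sketch comparing a few 4K 144Hz pixel formats against the 4-lane HBR3 payload (25.92 Gbps after 8b/10b coding). Assumptions: only active pixels are counted, and the 12 bpp DSC figure is a hypothetical illustration, since the actual compressed rate is negotiated between the GPU and the monitor.

```python
# Bits per pixel for several 4K 144Hz pixel formats vs. the HBR3 payload.
# Assumptions: active pixels only (blanking ignored), and a hypothetical
# DSC target of 12 bpp; real DSC targets are negotiated per display.

HBR3_GBPS = 4 * 8.1 * 8 / 10  # 4-lane HBR3 payload after 8b/10b coding

# Average samples per pixel for each chroma format (luma + chroma).
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def bits_per_pixel(bits_per_channel: int, sampling: str) -> float:
    """Average uncompressed bits per pixel for a bit depth and chroma format."""
    return bits_per_channel * SAMPLES_PER_PIXEL[sampling]


def rate_gbps(bpp: float, width: int = 3840, height: int = 2160,
              refresh_hz: float = 144.0) -> float:
    """Active-pixel data rate in Gbps for a given bits-per-pixel figure."""
    return width * height * refresh_hz * bpp / 1e9


if __name__ == "__main__":
    formats = {
        "12-bit 4:4:4 uncompressed": bits_per_pixel(12, "4:4:4"),
        "10-bit 4:4:4 uncompressed": bits_per_pixel(10, "4:4:4"),
        "10-bit 4:2:2 subsampled": bits_per_pixel(10, "4:2:2"),
        "DSC, assumed 12 bpp target": 12.0,
    }
    for label, bpp in formats.items():
        rate = rate_gbps(bpp)
        print(f"{label:28s} {bpp:4.1f} bpp  {rate:5.2f} Gbps  "
              f"exceeds HBR3: {rate > HBR3_GBPS}")
```

Under these assumptions, uncompressed 10- or 12-bit 4:4:4 overshoots HBR3 at 4K 144Hz, while 4:2:2 and the assumed DSC rate land under the active-pixel payload (4:2:2 only narrowly, so blanking may still push it over).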