Pieter3dnow


I said I'm not familiar with the Blender CPU test, meaning I don't know if it scales well with core count, clock, FP performance...

So I went and looked at the results database? Did you do that? Did you do anything at all? No. Instead you come here and claim AMD presented hard data when they did not. They did not even specify whether quad-channel memory was used on the Intel system.

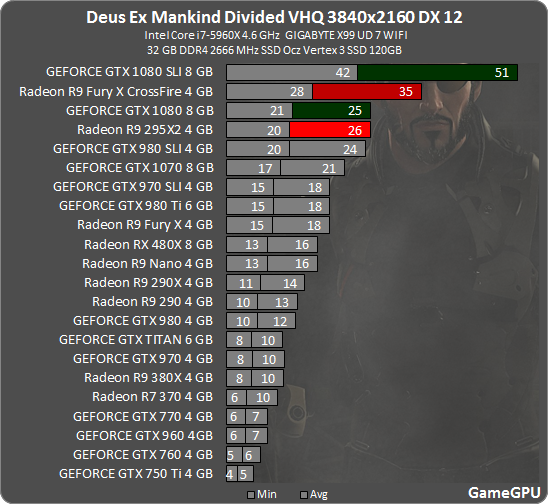

Anyway, here is Deus Ex at 4K

You mean you are not done posting more off-topic messages in the AMD forums? Maybe next time just keep spamming Nvidia crap, the mods don't care...
