AMD ATI Radeon HD 4870 X2 Preview

- Thread starter HardOCP News

- Start date

capable heart

Weaksauce

- Joined

- Apr 15, 2007

- Messages

- 72

This is really sad, people.

Can't we just be objective and let the in-game framerates be the extent of things?

evolucion8

Gawd

- Joined

- Mar 15, 2006

- Messages

- 917

The Crysis driver, in my experience, really didn't pan out. I am not trying to tell a future buyer to go nVidia or ATI. Jimmy, if you read the post by evolucion again you'll see that ATI's card has an advantage in certain games. How can you be so sure that this advantage won't disappear? Sure, I was wrong in saying driver profiles for each game necessitated optimization, but the card has an advantage when ATI can use all 5 of its threads. The real question now is: do you think 64 true shaders will last? The way each cluster handles Crysis poorly shows the card's architectural disadvantage. Do you honestly believe future games like Far Cry 2 won't be the same? Sure, they'll be more efficient on the new engine, but what if shader-intensive games are the future? Right now it's Crysis, but what about later? Right now this card is a preliminary undisputed king. It seems that giving it a true workload of shader-heavy games is a warning of an unpredicted demise of this architecture, since I believe shader-heavy games are the future. Looks like we gotta wait and see.

Games with longer shaders that don't have lots of texture reads will shine on the ATi architecture (especially on the R6XX/RV6XX); games with shorter shaders and lots of texture reads will shine on nVidia hardware. But the Radeon HD 4800 series is far more balanced than its previous incarnation and will benefit from both scenarios, especially the first one (much better ROP/TMU performance). Look at the workstation-level benchmarks, where the heavy shader workload and heavy geometry make the ATI HD cards better for graphics creation than the G8X-based Quadro. For me, the ATI HD architecture is more futureproof. Good example: the HD 2600XT, when it first debuted, was much slower than the X1950PRO in the games released at that time; now, with recent games, it is able to outperform the X1950PRO considerably. Second example: when the HD 2900XT first came out, it was about 30% slower on average than the 8800GTS 640, and sometimes two times slower in some games. Later, when the HD 3870 came out (which is about 3% faster than the HD 2900XT), it was between 15% and 20% slower on average than the 8800GT (which is faster than the 8800GTS 640).

How come the ATi HD architecture suddenly got better and was able to reduce the performance difference vs nVidia, considering their previous performance differences? And now, with newer driver sets, the HD 3870 performs very close to the 8800GT, and the 9600GT, which was supposed to be the direct rival of the HD 3870, is starting to show its limitations in newer games. That was unnoticeable when it debuted and was slightly faster overall, but now in more shader-intensive games it is getting outperformed by the HD card. And now the HD 4800 architecture is here to stay until ATi takes another approach.

DaveBaumann

Makes sense

Hi Dave, I see your name in Outlook, at least I think that's the right one!

DaveBaumann

n00bie

- Joined

- Jun 24, 2003

- Messages

- 39

How come the ATi HD architecture suddenly got better and was able to reduce the performance difference vs nVidia, considering their previous performance differences? And now, with newer driver sets, the HD 3870 performs very close to the 8800GT, and the 9600GT, which was supposed to be the direct rival of the HD 3870, is starting to show its limitations in newer games. That was unnoticeable when it debuted and was slightly faster overall, but now in more shader-intensive games it is getting outperformed by the HD card. And now the HD 4800 architecture is here to stay until ATi takes another approach.

You are quite right to point out that games are becoming more shader intensive - even NVIDIA admit this with the changes to texture:shader ratio in GT200 - which is good for our architecture.

However, an additional factor is that R600 was late to the party. NVIDIA had the G80 architecture ready before us and could spend those 6 months optimizing the software (even before DX10 was officially available). While you can produce software on the simulation devices prior to the hardware hitting silicon, this basically gives you a stable but untuned driver base; for the software team there can never be anything like actually getting the hardware back. So, partly, the ramp-up in performance you've seen is us getting back up to speed with the architecture, DX10 and how the driver software interacts between them.

By the time HD 4800 hit we had got to a point of much greater development with the DX10 driver, and when you combine that with an architecture that's 2.5x more powerful in places the benefits can really be seen!

Hi Dave, I see your name in Outlook, at least I think that's the right one! You mind if I shoot you an email?

Of course not.

evolucion8

Gawd

- Joined

- Mar 15, 2006

- Messages

- 917

By the time HD 4800 hit we had got to a point of much greater development with the DX10 driver, and when you combine that with an architecture that's 2.5x more powerful in places the benefits can really be seen!

And there's more. Since the HD 4800 architecture is based on the RV6X0 and the stream processors are pretty much identical, generic performance improvements through drivers (like the compiler) will also benefit HD 2XXX and HD 3XXX users in most situations!! (Except for GPU-specific situations or issues.)

I was wondering what the power supply requirements are for 4870x2 crossfire setup?

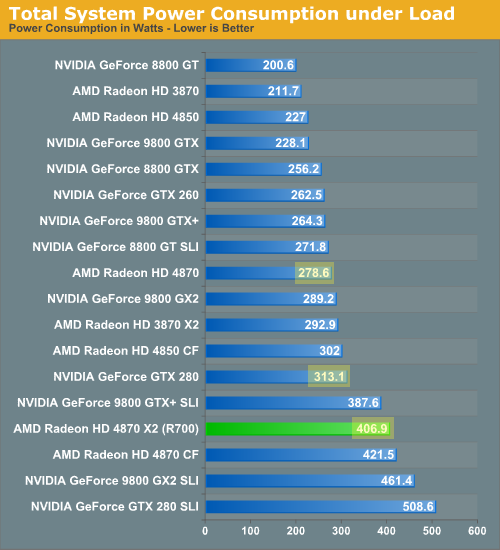

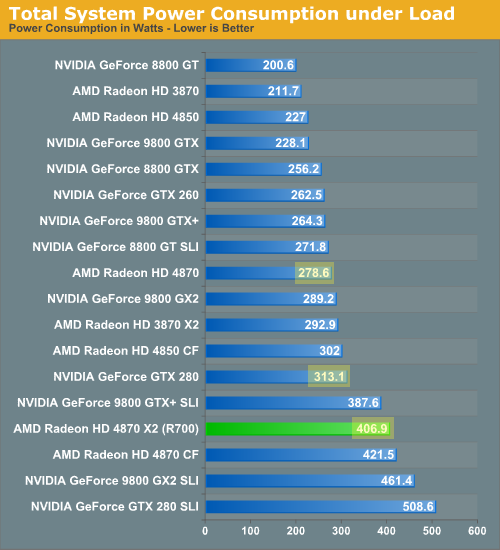

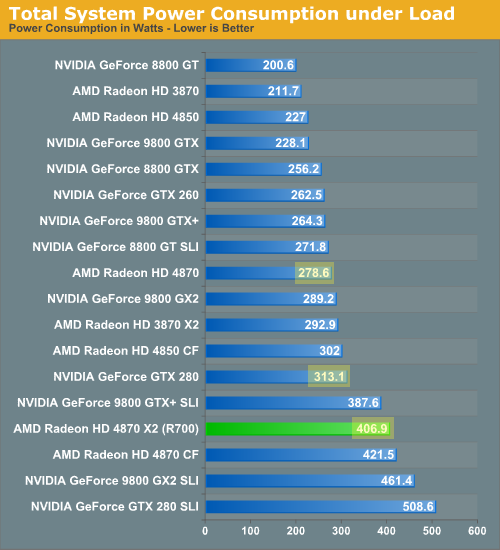

According to the article, each card eats up 218 watts of power under load. What doesn't make sense is that if you work out the base system watts and subtract that from the 8800GTX load figure, the 8800GTX comes out to 75 watts, which I thought should be more. Can anyone explain?

Thanks.

byusinger84

Gawd

- Joined

- Feb 1, 2008

- Messages

- 807

This is really sad, people.

Can't we just be objective and let the in-game frame rates be the extent of things?

I agree. Are we 2 years old? "nuh uh, yeah huh, nuh uh, yeah huh" sheesh.

As for my take on this whole thing: they both have their benefits and their drawbacks. I'm not loyal to any company. The one that gets my money is the one that performs. Hence why I have an Intel processor and an nVidia GPU. That said, I will be buying a 4870 X2. It'll be a great improvement over my 8800GT, especially at higher resolutions. There's nothing wrong with the GTX 280 except that when it was released it was WAY overpriced for what you got, and no one can deny that. ATI really did a great job this time around. Thankfully, there's competition and we all get to benefit!

I agree. Are we 2 years old? "nuh uh, yeah huh, nuh uh, yeah huh" sheesh.

As for my take on this whole thing: they both have their benefits and their drawbacks. I'm not loyal to any company. The one that gets my money is the one that performs. Hence why I have an Intel processor and an nVidia GPU. That said, I will be buying a 4870 X2. It'll be a great improvement over my 8800GT, especially at higher resolutions. There's nothing wrong with the GTX 280 except that when it was released it was WAY overpriced for what you got, and no one can deny that. ATI really did a great job this time around. Thankfully, there's competition and we all get to benefit!

EXACTLY!

Sign me up for 2 of these badboys!

MrWizard6600

Supreme [H]ardness

- Joined

- Jan 15, 2006

- Messages

- 5,791

ok, bump time; once again [H]'s numbers go head to head with everyone else's, although apparently nobody cares about these ones. I mean, we're all expected to own nuclear PSUs today, right?

Who screwed up?

snowysnowcones

[H]ard|Gawd

- Joined

- Apr 2, 2006

- Messages

- 1,104

HardOCP measures power at the wall... I don't think other sites do that.
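To illustrate why wall readings and per-card figures are hard to compare, here is a minimal sketch that turns the difference between two wall measurements into an estimate of what the card draws on the DC side. The function names, the PSU efficiency and the wattages are hypothetical, not figures from either review, and the model ignores that efficiency varies with load and that the CPU also draws more while gaming.

```python
def dc_power(wall_watts: float, psu_efficiency: float) -> float:
    """Power delivered to the components for a given reading at the wall."""
    if not 0 < psu_efficiency <= 1:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return wall_watts * psu_efficiency


def card_draw_estimate(wall_load: float, wall_baseline: float,
                       psu_efficiency: float) -> float:
    """Rough card draw from two wall readings.

    wall_load: wall reading with the card under load (hypothetical input).
    wall_baseline: wall reading of the same system without the card's load.
    The difference is scaled by PSU efficiency because PSU losses are
    included in every wall reading.
    """
    return dc_power(wall_load - wall_baseline, psu_efficiency)


if __name__ == "__main__":
    # Made-up numbers purely for illustration.
    print(card_draw_estimate(wall_load=400.0, wall_baseline=180.0,
                             psu_efficiency=0.80))
```

Two sites with different PSUs, CPUs and baselines can therefore report quite different numbers for the same card without either one being wrong.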

LstBrunnenG

Supreme [H]ardness

- Joined

- Jun 3, 2003

- Messages

- 6,676

I also doubt Anand runs benches with an overclocked CPU.

cannondale06

[H]F Junkie

- Joined

- Nov 27, 2007

- Messages

- 16,180

I also doubt Anand runs benches with an overclocked CPU.

Anandtech used an Intel Core 2 Extreme QX9770 @ 3.20GHz.

http://www.anandtech.com/video/showdoc.aspx?i=3354&p=3

damn the forums are SLOW as hell today...

ok, bump time; once again [H]'s numbers go head to head with everyone else's, although apparently nobody cares about these ones. I mean, we're all expected to own nuclear PSUs today, right?

Who screwed up?

No one screwed up, the rigs are different.

This thing needs too much power :O. I'm planning to buy after the 12th of August, but I want to know: will it run with a 750W CM PSU?

here is my system spec:

E6400

Gigabyte P965 DS3 rev1.1

2x320GB hds, 500GB hds and 750GB hds.

5fans

DVD/RW

Arctic freezer 7 pro

2GB ram OCZ

CM690 case.

Planning to OC after getting the 4870x2 ... (if it's safe)

MrWizard6600

Supreme [H]ardness

- Joined

- Jan 15, 2006

- Messages

- 5,791

No one screwed up, the rigs are different.

Different enough to warrant a swap of losers? I'm not interested in the figures themselves, simply in the ratios they provide. According to HardOCP the HD4870 consumes as much as a GTX280; according to Anand it's more than 25W less.

Who's wrong?

This thing needs too much power :O. I'm planning to buy after the 12th of August, but I want to know: will it run with a 750W CM PSU?

Planning to OC after getting the 4870x2 ... (if it's safe)

yeah you're fine.

Different enough to warrant a swap of losers? I'm not interested in the figures themselves, simply in the ratios they provide. According to HardOCP the HD4870 consumes as much as a GTX280; according to Anand it's more than 25W less.

O damn, didn't look at that. Just compared 4870x2 and 4870 power figures from Anand and [H] at work, didn't bother with the rest. Shouldn't the 4870 consume a lot less, since die-size wise it's more than two times smaller than the GTX 280?

evolucion8

Gawd

- Joined

- Mar 15, 2006

- Messages

- 917

AFAIK, the HD 4870 under load consumes significantly less power than a GTX 280, but at idle it's the opposite. That's because the PowerPlay functionality is not working properly on the HD 48XX series with current drivers; I heard that future drivers should fix this.

yeah you're fine.

What about my E6400 CPU? Will it be a bottleneck with the 4870x2?

cannondale06

[H]F Junkie

- Joined

- Nov 27, 2007

- Messages

- 16,180

What about my E6400 CPU? Will it be a bottleneck with the 4870x2?

simple answer would be yes. to what extent would depend on the game and settings.

I don't understand one thing: why will my processor be the bottleneck? Usually when you are playing a game it all depends on the 3D card; the processor doesn't play much of a role when you game. Suppose I OC my processor to 3GHz, will it still be the bottleneck or will it do fine?

Edit: If it's a bottleneck, is it negligible or noticeable?

Kaldskryke

[H]ard|Gawd

- Joined

- Aug 1, 2004

- Messages

- 1,346

I don't understand one thing: why will my processor be the bottleneck? Usually when you are playing a game it all depends on the 3D card; the processor doesn't play much of a role when you game. Suppose I OC my processor to 3GHz, will it still be the bottleneck or will it do fine?

Edit: If it's a bottleneck, is it negligible or noticeable?

Whether or not a game will be CPU-limited or GPU-limited depends on so many factors. I would say that unless you are playing at low resolutions (1280x1024 or less) you stand a very low chance of being CPU-limited. And if you're playing at that resolution your framerates are probably going to be quite high anyway, so you wouldn't even notice it. Don't worry.

cannondale06

[H]F Junkie

- Joined

- Nov 27, 2007

- Messages

- 16,180

I don't understand one thing: why will my processor be the bottleneck? Usually when you are playing a game it all depends on the 3D card; the processor doesn't play much of a role when you game. Suppose I OC my processor to 3GHz, will it still be the bottleneck or will it do fine?

Edit: If it's a bottleneck, is it negligible or noticeable?

I'm sure someone can explain it to you a lot better than me so I will let them do it. also if the cpu didn't matter then gamers would all buy a Celeron and be done with it.

yes even at 3.0ghz your X2 would hold you back compared to a fast Core 2 cpu. the difference probably would not be noticeable in most situations though.

Whether or not a game will be CPU-limited or GPU-limited depends on so many factors. I would say that unless you are playing at low resolutions (1280x1024 or less) you stand a very low chance of being CPU-limited. And if you're playing at that resolution your framerates are probably going to be quite high anyway, so you wouldn't even notice it. Don't worry.

I won't be playing at 1280x1024. I'm getting this card just to play @ 1900x1200, that's why I'm planning to buy the X2. Otherwise I would have gone for a 4850 or even a 4870.

I'm already getting the 4870x2, which is shit expensive, plus if I change my processor that will put a good dent in my pocket.

I'm just curious: is it worth buying with my current processor, or should I buy a 4850?

evolucion8

Gawd

- Joined

- Mar 15, 2006

- Messages

- 917

It has been shown in many reviews that a C2D running at 2.13GHz and above shows little performance gain across games (a GeForce 8 series card was used). Considering that the X2 is much faster, I would say that the sweet spot for this card is at 3.0GHz or more. A bottleneck may be present, but it would be hard to pinpoint (if it does happen). Even in CPU-dependent games, the performance difference isn't worth the expense of buying a $1K CPU like QX9750 or similar.

Thanks man for such a great reply...this really gives me push to buy 4870x2

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

I agree, with the newer single GPU cards, 3GHz is really the minimum you need.

With a dual GPU / SLI, you may want a bit faster.

MrWizard6600

Supreme [H]ardness

- Joined

- Jan 15, 2006

- Messages

- 5,791

I agree, with the newer single GPU cards, 3GHz is really the minimum you need.

With a dual GPU / SLI, you may want a bit faster.

No. First off, as has been proven, an absolute number like 3GHz means nothing when the Athlon 64 is 30% more efficient clock-for-clock than a Pentium 4 and the Core 2s are 40% more efficient clock-for-clock than the Athlon 64s. There's also the obvious case of dual core vs single core (vs quad core).

Ok, so let's step back to the basics. The GPU can only render so much, right? Let's say in our primitive 3D game that the graphics card can handle 4 shader effects and 10 polygons while maintaining 60FPS. So, on your screen you're looking at 3 shader effects and 8 polygons when, whamo, you look left and there are two more shader effects (for a total of 5). Since your graphics card can't handle that load at 60FPS, the FPS drops to (cue the math wiz) 48 FPS, i.e. 60 x 4/5 if the cost scales linearly with the number of effects. Moral is that your graphics card can do a certain amount of work at 60 FPS.

Same story with a CPU, only with different terms, but of course things get a little more complicated with the OS thrown into the mix. Let's say the CPU can handle 2 players' worth of networking (meaning on-screen you're looking at two other people who are playing with you online) and 2 buildings collapsing (physics being done on the CPU) at the same time. So you're in a game with your friend (who's on screen ahead of you), and you're throwing grenades at buildings. At one moment in time, you've got two buildings collapsing in front of you and your friend on screen when suddenly the enemy team pops up and now 3 people are on screen. Your CPU can't handle the networking load and as such the FPS drops by 25%.

There are millions if not billions of factors which make up a real game, but my example gets the point across. Some engines are CPU intensive: maybe they have lots of physics or a heavy networking load, but the graphics aren't too excellent, so the GPU is just coasting through the load. Most engines are GPU intensive, where the CPU is always able to keep up as it has no trouble handling everything the game engine can throw at it, but the visuals are excellent and as such the GPU gets bogged down. There is a third scenario, one that's often overlooked, and that's the effect of a slow hard drive and/or not enough RAM. Constantly having to move things out of RAM and into the page file because your RAM is full will slow you down considerably, and having an old 5400 RPM drive that just can't keep up with these big texture files is another issue some people have. Now of course the obvious answer is pre-caching, but that can mean long load times and/or a CPU bottleneck!
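The toy example above can be written down as a small sketch. It assumes, purely for illustration, that each component's frame cost grows linearly with its load and that the overall frame rate is set by whichever component is slower; the function names and the "work unit" inputs are hypothetical, and real engines are far messier than this.

```python
def sustainable_fps(capacity: float, load: float,
                    target_fps: float = 60.0) -> float:
    """FPS one component can sustain if frame cost grows linearly with load.

    capacity: work units per frame the component handles at target_fps.
    load: work units per frame the current scene demands.
    """
    if capacity <= 0 or load <= 0:
        raise ValueError("capacity and load must be positive")
    return target_fps * capacity / load


def frame_rate(gpu_capacity: float, gpu_load: float,
               cpu_capacity: float, cpu_load: float,
               target_fps: float = 60.0):
    """Overall FPS is set by the slower component; returns (fps, limiter)."""
    gpu = sustainable_fps(gpu_capacity, gpu_load, target_fps)
    cpu = sustainable_fps(cpu_capacity, cpu_load, target_fps)
    return (gpu, "GPU") if gpu <= cpu else (cpu, "CPU")


if __name__ == "__main__":
    # GPU case from the post: 4 shader effects at 60 FPS, scene needs 5.
    print(sustainable_fps(capacity=4, load=5))
    # CPU keeps up with its load here, so the GPU is the limiter.
    print(frame_rate(gpu_capacity=4, gpu_load=5, cpu_capacity=2, cpu_load=2))
    # A third player on a CPU sized for two makes the CPU the limiter.
    print(frame_rate(gpu_capacity=4, gpu_load=4, cpu_capacity=2, cpu_load=3))
```

Under this model a faster GPU does nothing for the last case, and a faster CPU does nothing for the first two, which is all "bottleneck" really means. The exact percentage drop depends on how the load is counted, so the numbers are only as good as the linear assumption.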

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

Yep, it's easy to overcomplicate the issue.

If you are getting a new gen card you want a C2D of around 3GHz or more.

Maybe I should have said C2D earlier, but it's been said so often it feels like the norm.

keakdasneak

Bad Trader

- Joined

- Sep 25, 2005

- Messages

- 159

That is insane!

As you guys are saying you need a minimum 3GHz processor, why don't I just overclock my processor to 3GHz? Don't you think that will do the trick?

As far as the processor is concerned, I just can't change it right now! My motherboard is holding me back. It only supports 1066MHz processors and that's the max for it.

I'm planning to build a new rig by the end of this year but not right now, as I'm quite happy with my current rig. I just need a card which can play games @ 1900x1200. Otherwise I'm all set.

cannondale06

[H]F Junkie

- Joined

- Nov 27, 2007

- Messages

- 16,180

never mind

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

As you guys are saying you need a minimum 3GHz processor, why don't I just overclock my processor to 3GHz? Don't you think that will do the trick?

Yes, it will be great for most things.

Some games will require more CPU, but that's always the way; there's always something you need to have faster.

Get what you can with what you have, and if it's not enough then upgrade.

Do reviews go up at midnight???

I'd expect to see the article at 8:35 CST.

Treeckcold57

n00b

- Joined

- Apr 15, 2007

- Messages

- 50

cannondale06

[H]F Junkie

- Joined

- Nov 27, 2007

- Messages

- 16,180

so basically it sucks in the one game that a card like this would/should actually be useful for. yes, I'm talking about that craptastic piece of code called Crysis.

LstBrunnenG

Supreme [H]ardness

- Joined

- Jun 3, 2003

- Messages

- 6,676

*facepalm*

Hexus said:

AMD refers to the inter-GPU communication ability as CrossFireX SidePort, and it's a feature that, as the name suggests, offer high-bandwidth - bi-directional 5GB/s - transfers from GPU to GPU, should they be required. We were informed that the feature will not be enabled until a later date, though.
