Because ultimately what do you think Next Gen games are going to run on? They are going to sell every 8GB RTX 3070 they can make for months to come if it really lives up to its performance claims, and Nvidia's flagship 3080 is a beast of a card with 10GB. Devs certainly won't aim higher than that.

I think this comes down to different perspectives. There is a reason Noko said that those who upgrade every 1-2 years will be OK, but that others should be wary.

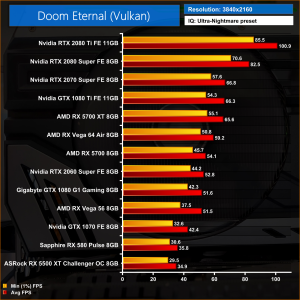

8-10GB will probably be fine until RTX 4000 in 2022. There are 1-2 games now with possible issues (Doom, MSFS); expect maybe a couple more in 2021 and more again in 2022, but going by the usual cycle Nvidia will have RTX 4000 out before the end of that year. Users might be fine with lowering settings purely for VRAM in that handful of games.

Some of us are targeting 4-year cycles. You say that devs won't make the 3070 and 3080 run out of VRAM. But what if 2021 brings a Super series with 16GB+ as standard? What if RDNA 2 picks up significant market share with 12-16GB+ cards? And finally, the 2022 RTX 4000 series will undoubtedly launch with 12GB-16GB as the standard minimum on the RTX 4060 - 4070. So devs will certainly feel free to aim higher than 8-10GB. Running out of VRAM is rare in 2020 and will likely be uncommon in 2021, but for those hoping to make it to the 2024 gen (like many did with Pascal by skipping a gen), I suspect a lot more settings dropping will happen come 2022, and especially 2023.

If I could get a double VRAM option for a reasonable premium, that could easily extend the life of the card by another year or two without having to drop settings only for VRAM. You may say that these cards won't run all Ultra in 2023 games (debatable tbh), but whatever other settings you lower, if the card has the VRAM you can still run max texture settings, and that will make a visual difference.
