Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,880

I'd appreciate any thoughts from those of you who have attempted this.

My pfSense router/firewall based on an Asrock board and consumer hardware went down the other day. I got it up again after some troubleshooting, but having become used to enterprise features like BMC/IPMI in the meantime, I decided it was time to actually use Enterprise-level hardware in this role.

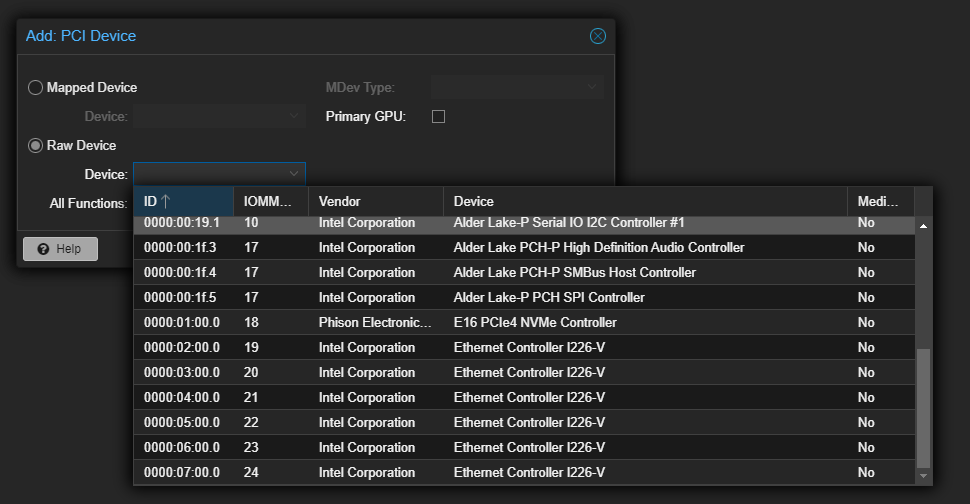

So I am now doing an open bench test on a Supermicro X12STL-F with a Rocket Lake generation E-2314 Xeon (4C/4T, 2.8 GHz base, 4.8 GHz turbo) and 16 GB of DDR4-3200.

Total overkill for a router, I know, but I got some good deals, and I do lean heavily on my router when it comes to OpenVPN at gigabit speeds, which goes a little heavy on the CPU. (Turns out you can't buy DDR4-3200 ECC modules smaller than 8GB, at least not that I could find, and I'm not one to run a dual channel system in single channel mode, no matter how light the load.)

Anyway, once my testing regime is up, it will be time to install the router software. The easy way would be to just export my pfSense config, install pfSense on the new machine, import the config, and edit the NIC names and be done with it.
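For anyone picturing the "edit the NIC names" step, here is a rough sketch of doing it on the exported XML before import. It assumes interface names live in `<if>` and `<vlanif>` elements of the config export, which you should verify against your own file. The `NIC_MAP` names are hypothetical placeholders, not my actual hardware.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical old -> new NIC names; replace with the names on your own hardware.
NIC_MAP = {"em0": "igb0", "em1": "igb1"}


def remap_nics(xml_text: str, nic_map: dict) -> str:
    """Return the config XML with NIC names rewritten.

    Assumption: interface names are stored in <if> and <vlanif> elements.
    """
    root = ET.fromstring(xml_text)
    for elem in root.iter():
        if elem.tag in ("if", "vlanif") and elem.text:
            # Split the base NIC name from any VLAN suffix, e.g. "em1.10" -> "em1" + ".10".
            match = re.match(r"^([A-Za-z]+\d+)(.*)$", elem.text.strip())
            if match and match.group(1) in nic_map:
                elem.text = nic_map[match.group(1)] + match.group(2)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = (
        "<pfsense><interfaces><wan><if>em0</if></wan></interfaces>"
        "<vlans><vlan><if>em1</if><tag>10</tag><vlanif>em1.10</vlanif></vlan></vlans>"
        "</pfsense>"
    )
    print(remap_nics(sample, NIC_MAP))
```

Work on a copy of the export and diff the result against the original before importing anything.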

That said, after all the shit I've heard about pfSense and how they behaved when m0n0wall shut down and OPNsense forked from them, I'm not sure I want to have anything to do with pfSense anymore.

Of course there is more than just my feelings about the pfSense project at play here. There have been some recent security issues that are difficult to ignore, and OPNSense does more frequent security updates.

OPNSense have also been better about implementing stable versions of newer services like WireGuard.

On the flipside, pfBlockerNG is a nice feature in pfSense which is absent from OPNsense. I use it, but I go back and forth regarding keeping it, because it does occasionally break some websites, and some folks in the household have less tolerance for this than I do.

I would also rather deal with a project that is not directly tied to a business model of selling hardware.

I guess the thing is this:

While my system is a home system, it is a somewhat complicated one, with 10 VLANs, rules blocking and allowing routing between them for very specific things, routing through VPN for the entire network, and custom scripts triggered by cron that change settings and bring networks up and down on a set schedule. Migration is going to be a bitch.
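To get a feel for how much there is to migrate, something like the sketch below could count the relevant entries in a config export. The element paths in `SECTIONS` are assumptions about the export layout and may not match your file, so adjust them after checking.

```python
import xml.etree.ElementTree as ET

# Assumed locations of each item type in the export; verify against your own file.
SECTIONS = {
    "vlans": "vlans/vlan",
    "firewall rules": "filter/rule",
    "cron jobs": "cron/item",
}


def inventory(xml_text: str, sections: dict = SECTIONS) -> dict:
    """Count the elements found at each path, relative to the document root."""
    root = ET.fromstring(xml_text)
    return {name: len(root.findall(path)) for name, path in sections.items()}


if __name__ == "__main__":
    sample = (
        "<pfsense>"
        "<vlans><vlan/><vlan/></vlans>"
        "<filter><rule/><rule/><rule/></filter>"
        "<cron><item/></cron>"
        "</pfsense>"
    )
    for name, count in inventory(sample).items():
        print(f"{name}: {count}")
```

The counts only size the job; each rule and script still has to be checked by hand after the move.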

So my question to anyone who has migrated is this:

1.) Did you find it to be difficult?

2.) Was it worth it? Why / why not?

3.) Which would you go with?

I'm open to any other thoughts that might be interesting or relevant, including things I didn't ask about but might want to know about living with OPNsense or the migration process.

Essentially, I appreciate any input.

--Z

Politics, drama & bad blood between the two projects

This has nothing to do with technology, so if you don't enjoy reading about flame wars, move on to the technical section.

It's impossible to not mention the elephant in the room - the bad blood between pfSense and OPNSense. For those who are unaware, it got pretty ugly and very unprofessional on the part of the pfSense team.

In 2016 one of the Netgate/pfSense developers took over the opnsense.com domain to insult the competing project. For a time, opnsense.com displayed a "satirical" video from the movie Downfall (depicting the final days of Adolf Hitler and Nazi Germany). The site was full of vulgar language describing the OPNsense software. This ended after a WIPO ruling:

In November 2017, a World Intellectual Property Organization panel found that Netgate, the copyright holder of pfSense, had been using the domain opnsense.com in bad faith to discredit OPNsense, a competing open source firewall forked from pfSense. It compelled Netgate to transfer the domain to Deciso, the developer of OPNsense.

More information about this incident, including the website snapshot, can be found on https://opnsense.org/opnsense-com/

Before writing this article I spent an entire day reading various forum threads, and it's clear that there's no love between the two projects. There's plenty of antagonism toward OPNsense on the pfSense forum as well as in various subreddits.

I have not seen the same behaviour from the other direction - if you have, let me know so I can add it here for some balance.

The pfSense folks also took over /r/opnsense, refused to give it back to the OPNsense project, and created /r/OPNScammed to badmouth the competition. This drama never ends - if you are interested, start reading here
