Comixbooks

Fully [H]

- Joined: Jun 7, 2008
- Messages: 22,023

Let's not go through this again.

Totally agree the last launch was torture.


| Benchmark | H100 PCIe | A100 80GB PCIe | H100 vs A100 |
|---|---|---|---|
| FP64 (TFLOPS) | 24 | 9.7 | +147% |
| FP64 Tensor Core (TFLOPS) | 48 | 19.5 | +146% |
| FP32 (TFLOPS) | 48 | 19.5 | +146% |
| TF32 (TFLOPS) | 400 | 156 | +156% |
| FP16 Tensor Core (TFLOPS) | 800 | 312 | +156% |
| INT8 Tensor Core (TOPS) | 1600 | 624 | +156% |
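The percentage column is just (H100 / A100 - 1) × 100. A quick check that recomputes it from the table's own values (nothing here beyond the posted spec numbers):

```python
# Peak figures copied from the H100 PCIe vs A100 80GB PCIe table
# (TFLOPS, or TOPS for INT8).
SPECS = {
    "FP64": (24, 9.7),
    "FP64 Tensor Core": (48, 19.5),
    "FP32": (48, 19.5),
    "TF32": (400, 156),
    "FP16 Tensor Core": (800, 312),
    "INT8 Tensor Core": (1600, 624),
}


def percent_increase(new: float, old: float) -> float:
    """Relative uplift of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


if __name__ == "__main__":
    for name, (h100, a100) in SPECS.items():
        print(f"{name:<18} +{percent_increase(h100, a100):.0f}%")
```

Every row reproduces the posted percentage after rounding.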

You could look at the OAM variants of either team’s accelerator cards and claim 600+W is coming. I don’t see it outside of the top-tier, money-is-no-object <insert sponsored-name here> Spooge Edition cards from the big AIBs.

The trend is smaller cases, not bigger, so I agree with you: there are practical limits to board power, especially with power prices going steadily up.

I mean, those kinds of wattages can be expected on the extreme 3090 Ti variants, but that takes a major binning process on some custom PCBs with exotic cooling. Not something remotely suitable for a mainstream product launch.

I’m thinking a more practical 450W at the upper end of the consumer products. Beyond that, you are going to run into some walls because of how PC cases work and how much heat you can realistically dissipate in that format.

I mean, they could decide that AIO triple radiators for GPUs are the new norm, but cases are going to need a redesign to match.

The PCIe version of the H100 is listed at only 350W. That's with 80GB of HBM3 memory. Nvidia's limited benchmark specs show that it runs rings around the A100 80GB, which is listed at 300W.

Sorry to disappoint all of the AMD fanbois, but it looks like MLID's 600+W prediction is going to be hilariously wrong - as usual.
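Putting the listed board power next to the table's FP64 peak gives a rough sense of "runs rings around." This is a sketch using spec-sheet peaks and listed power only; it assumes the cards actually hit those peaks at that draw, which real workloads may not.

```python
# Figures from the thread: listed board power (W) and FP64 peak (TFLOPS).
# These are spec-sheet peaks, not measured throughput or measured draw.
H100_PCIE = {"watts": 350, "fp64_tflops": 24}
A100_PCIE = {"watts": 300, "fp64_tflops": 9.7}


def tflops_per_watt(card: dict) -> float:
    """Peak FP64 TFLOPS per watt of listed board power."""
    return card["fp64_tflops"] / card["watts"]


power_increase_pct = (H100_PCIE["watts"] / A100_PCIE["watts"] - 1) * 100
efficiency_gain = tflops_per_watt(H100_PCIE) / tflops_per_watt(A100_PCIE)

if __name__ == "__main__":
    print(f"Board power: +{power_increase_pct:.0f}%")
    print(f"FP64 per watt: {efficiency_gain:.2f}x")
```

On these numbers, a ~17% bump in listed power comes with roughly 2.1x the peak FP64 per watt.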

H100 is Hopper (probably RTX 5XXX), not Lovelace (RTX 4XXX). "Interestingly, the SXM5 variant features a very large TDP of 700 Watts, while the PCIe card is limited to 350 Watts."

As for Lovelace, it looks like it will go to 600W. AMD will probably be in the same neighborhood for RX 7XXX.

The SXM5 format, like the OAM format, allows for up to 700W while operating at 48V.

350W is the practical limit on 12V for datacenter use, and even with all their massive cooling systems, datacenters are more often than not forced to use liquid cooling on any components exceeding 450W.
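Some rough arithmetic on why the 48V modules can go higher: for a given power, current is I = P / V, and resistive loss in the same conductor scales with I²R. This is generic Ohm's-law math, not anything Nvidia has stated about SXM5 or OAM, and it ignores connector and VRM ratings.

```python
def current_amps(power_w: float, volts: float) -> float:
    """Current drawn for a given power at a given supply voltage (I = P / V)."""
    return power_w / volts


def resistive_loss_ratio(low_v: float, high_v: float) -> float:
    """How many times larger I^2*R loss is at low_v than at high_v, for the
    same power through the same conductor resistance: (high_v / low_v) ** 2."""
    return (high_v / low_v) ** 2


if __name__ == "__main__":
    for watts, volts in [(350, 12), (700, 12), (700, 48)]:
        print(f"{watts} W @ {volts} V -> {current_amps(watts, volts):.1f} A")
    print(f"12 V vs 48 V conduction loss: {resistive_loss_ratio(12, 48):.0f}x")
```

At 48V, a 700W module draws about half the current that a 350W card pulls at 12V.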

The recent Nvidia leak made a number of mentions of Lovelace being too silicon-intensive and of current supply chains not being capable of providing enough materials to make it feasible. There were also reports in there that their margins on it would be unsustainable when competing with AMD and its RDNA3 cards, so they were advising benching Lovelace in favor of their MCM-based Hopper.

Interesting. Do you have a link to the Lovelace benching issue?

Not a good source in any way, but this is what I can find of it. Leaks and leakers are pretty bad sources in general, so I don’t exactly believe it, but their Hopper-based workstation cards have a release date and their Lovelace ones don’t.

Thanks.

Granted, this may only be true within the context of the datacenter cards and not the gaming/workstation cards.

https://www.tweaktown.com/news/8075...ead-of-ada-lovelace-to-beat-rdna-3/index.html

MLID has been very explicit that he's talking about the GeForce lineup, not the OEM-only SXM form-factor enterprise cards.

Good to know, but I don’t know what they are basing their analysis on. I can’t say they’re wrong; God knows the leaked stuff for the 3090 Tis shows them to be thirsty bitches, but I’m not sure whether we can call that the new norm or a top-end outlier. I mean, if it’s the new norm, then I’m going to have to move, because my office isn’t equipped to handle those kinds of thermals.

The 600W is a simple straight-line scaling of watts per core using the rumored higher core count. It ignores the node shrink and the fact that the VRAM is a significant TDP contributor.
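A sketch of the straight-line scaling being criticized, next to a variant that holds VRAM power fixed instead of scaling it with cores. The 350W / 10,496-core baseline is the reference RTX 3090; the 18,432-core count and the 60W VRAM share are assumptions picked for illustration (the latter from the low end of the 60-100W estimate in this thread). Node-shrink gains are still ignored in both.

```python
# Assumed inputs, for illustration only: RTX 3090 reference board power and
# CUDA core count, plus a rumored 18,432-core next-gen part.
BASE_BOARD_W = 350.0
BASE_CORES = 10_496
RUMORED_CORES = 18_432


def naive_scaling(board_w: float, base_cores: int, new_cores: int) -> float:
    """Straight-line watts-per-core scaling of total board power."""
    return board_w * new_cores / base_cores


def vram_aware_scaling(board_w: float, vram_w: float,
                       base_cores: int, new_cores: int) -> float:
    """Scale only the non-VRAM share with core count; hold VRAM power fixed.
    Still ignores any node-shrink efficiency gain."""
    core_w = board_w - vram_w
    return vram_w + core_w * new_cores / base_cores


if __name__ == "__main__":
    naive = naive_scaling(BASE_BOARD_W, BASE_CORES, RUMORED_CORES)
    fixed = vram_aware_scaling(BASE_BOARD_W, 60.0, BASE_CORES, RUMORED_CORES)
    print(f"naive:      {naive:.0f} W")
    print(f"VRAM fixed: {fixed:.0f} W")
```

Under these assumptions, the naive line lands right around the 600W figure, and just not scaling the VRAM share knocks off about 45W before any node-shrink savings are counted.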

Right… So since moving is off the table (I’m not house hunting in this market), I’d better look into a heat pump before my next build. Because if you take 600W, account for a node shrink, add more VRAM, then increase clock speeds, I still come out with a respectable 500W+… so I guess my only hope is that the 4060 / 6700XT series chips aren’t so hungry.

The VRAM is expected to be the same as the 3090's, where it pulls an estimated 60-100W. I'd expect the FE to be 400W and something like a Strix 4090 to be 450W.

It’s not like I get any chance to game anymore anyway, and when I do, the game is like 3 years old. Just getting around to Witcher 3… Wooooh!

450W would be “reasonable” for a high-end card… but not 600W. A 120mm aluminum radiator is good for about 150W of thermal dissipation before it saturates, so 450W could be handled by an AIO running a 360mm radiator, while 600W would require four 120mm sections, and I don’t know how many cases that would fit in unless they split it up. But anything past 450W is incredibly hard to cool on air if you value your eardrums and don’t have the room climate-controlled.
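The radiator rule of thumb in that post, as a tiny helper. It treats ~150W per 120mm aluminum section as a hard ceiling, which is a simplification; real capacity depends on fan speed, ambient temperature, and whether the CPU shares the loop.

```python
import math

# Rule of thumb from the post: one 120 mm aluminum section handles ~150 W.
WATTS_PER_120MM = 150


def radiator_sections(heat_w: float, watts_per_section: float = WATTS_PER_120MM) -> int:
    """Number of 120 mm radiator sections needed to stay under the per-section limit."""
    return math.ceil(heat_w / watts_per_section)


if __name__ == "__main__":
    for watts in (350, 450, 600):
        n = radiator_sections(watts)
        print(f"{watts} W -> {n} x 120 mm ({n * 120} mm radiator)")
```

450W fits exactly in a 360mm radiator, while 600W needs a 480mm one (or a split loop).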

You may want to bookmark this post so you can come back to it and mock me in a few months.

Competition is great! One reason Pascal topped out at 250W (1080 Ti) is that Nvidia didn't have to push the envelope. AMD had nothing to challenge the high end.

No! Nvidia was scared of Vega, so it went near full out, needlessly. 250W was considered top echelon back in the day, noobs. The market got a bonus because of it. Expect similar this next round.

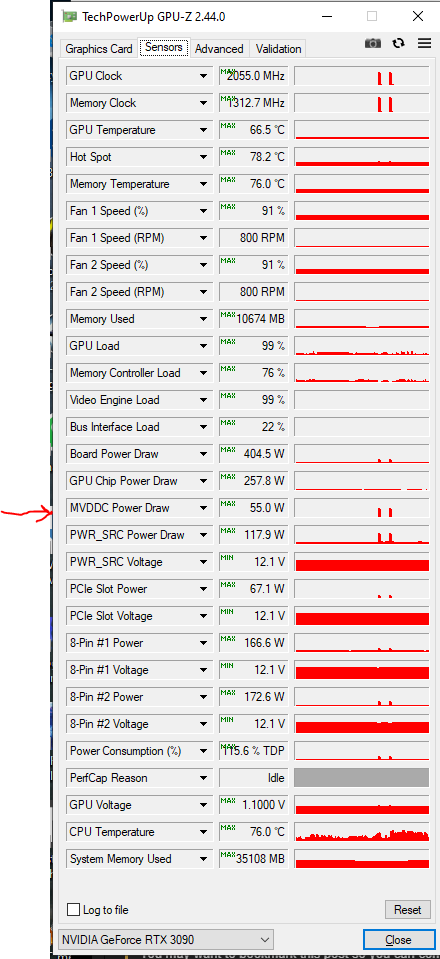

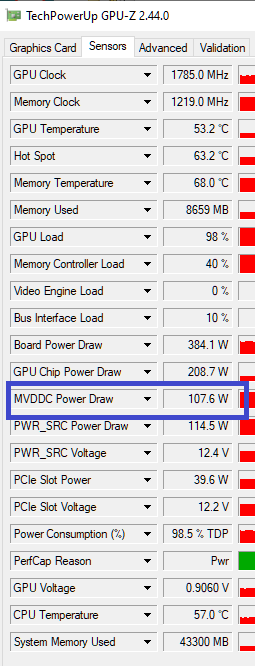

My 3090 pulls ~49W on the RAM; 59W is the max I've recorded when overclocked.

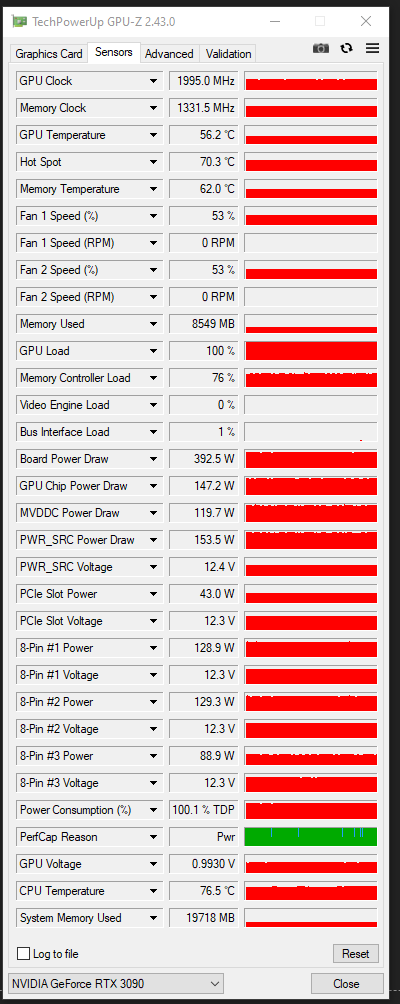

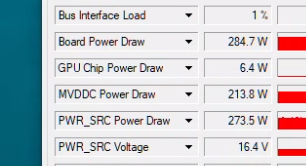

Don't have a screencap from a 3090 handy, but this is from an A6000. 48GB instead of 24GB, but also GDDR6 instead of the more (IIRC) power-hungry GDDR6X of the 3090. If we cut this in half, it's still over 100W.

The difference between an A-series card and a 3090 is that the A series is going to be using all that memory, while on a 3090 most of it is sitting idle. I’m sure the 3090 could consume more than that with memory usage at full load.

Isn't 384.1W the overall board draw? Or is that a reply to something I missed?

They were commenting earlier about memory draw on an RTX 3090 vs an A6000. Somebody commented that the 3090 drew far less and asked why an A6000 was drawing 4x more while only having double the RAM. But their 3090 test was flawed because it was only using a small fraction of its memory, while the above mining test is using all of it and showing its memory usage properly.

My main thing is, do you guys think this will require PCIe 5.0 to use at all? Or will PCIe 4.0 suffice? I just did an upgrade, so that's kind of rough, supposing I even get the opportunity to try to upgrade...

PCIe 3.0 is still fine. Why would you think PCIe 4.0 wouldn't be enough?

PCIe 4.0 will be fine for a while; you have to get into some pretty specific workloads to make use of 5.0 right now.

I just remember the GamersNexus overview for these new GPUs mentioning PCIe 5.0. I figured it was mainly for high-end compute loads or something, because IIRC the latest PCIe specs have usually stayed well ahead of GPU bandwidth requirements, but I wasn't sure. There was some mention of the 5.0 spec supplying more power through the slot, though... had me worried.
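For a sense of the headroom being discussed, here is approximate x16 link bandwidth per PCIe generation. It is standard spec arithmetic (raw transfer rate times 128b/130b encoding), not a measurement, and it says nothing about how much bandwidth any particular GPU actually needs.

```python
# Raw per-lane signaling rate in GT/s. PCIe 3.0, 4.0 and 5.0 all use
# 128b/130b line encoding, so payload is 128/130 of the raw bit rate.
GT_PER_S = {"3.0": 8.0, "4.0": 16.0, "5.0": 32.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(gen: str, lanes: int = 16) -> float:
    """Approximate one-direction bandwidth in GB/s after line encoding.
    Packet/protocol overhead is ignored, so real throughput is somewhat lower."""
    bits_per_s_per_lane = GT_PER_S[gen] * 1e9 * ENCODING_EFFICIENCY
    return bits_per_s_per_lane * lanes / 8 / 1e9


if __name__ == "__main__":
    for gen in GT_PER_S:
        print(f"PCIe {gen} x16: ~{link_bandwidth_gb_s(gen):.1f} GB/s per direction")
```

Each generation doubles the previous one, which is why an x16 4.0 slot still leaves a lot of room.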


My test was only using a portion of the RAM, but it is based on real-world gaming. I've recently played both Cyberpunk 2077 and Siberian Mayhem for roughly 5-hour stretches, and never saw the max recorded value on my FE hit 60W. About 13GB is the max VRAM usage I have seen, and even with data in VRAM, it isn't all being accessed at all times.

The 3090 has more RAM than a gaming GPU needs; even at 4K you're not going to be using much more than 12GB, which is why in practical usage 50-60W will be the norm. A-series workloads or mining applications can tear into RAM like a bag of chips and run it constantly, and in that case you will see your worst-case numbers.

So unless MLID was talking about mining use cases, the power draw is still overstated, IMHO.

A Strix hitting 100 watts playing PUBG... maybe it's just the games I am playing.

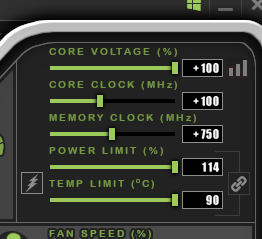

I have flashed the 3090 with the Resizable BAR BIOS, and it's enabled in my mobo BIOS. Maybe that improves VRAM power usage, hell if I know. But no matter what game I've played, I have never seen 60W power usage on my VRAM. And it's OC'd +750.


I've used up to about 14-16GB in a handful of PC games with everything maxed out.

I don’t know where MLID gets their numbers or what they speculate on to land where they do.

But I’m pretty sure most PUBG cheats balloon GPU memory usage, so… I can see a few of those making use of that memory.