Well the H100 SXM5 is a 700w part stock so…I would also agree the new Ryzen is a bit of a power hog as well. Plus we all know a Ti version is coming at some point which likely will use even more power. I think more people are concerned by the amount of heat all that power generates. I know my office is the warmest room in the house due to my computer.


NVIDIA GeForce RTX 4070: up to 30% faster than RTX 3090 in gaming

- Thread starter Krenum

- Start date

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

> I think more people are concerned by the amount of heat all that power generates. I know my office is the warmest room in the house due to my computer.

This is true. People just strawman it into complaining about power draw.

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924

This is true. People just strawman it into complaining about power draw.

I mean it's both heat and power draw for me, seeing as I'm the one who pays my electrical bill for what is drawn from the wall + used to cool the computer room down edit: and one is related to the other - there's a difference between [H]ard OCP and [M]anagingOtherPeople'sFinances OCP

No kidding. When the 4090 reviews leaked and the 4090 was walking all over the 3090ti AND using similar or less power, I was impressed.

And then out of the woodwork come the usual "OMMGGGG POWER USE QQ".

New gen, significantly faster, same or less power. I don't see the issue.

It could be that years of liek omg bros, that 125w cpu is like totally a space heater bro mentality has cheesed off some people.

Gotta save the earth and wallet dudes. Now watch this OC of my 88w cpu hit 150w per hour. You gonna see some serious benchies. To save the earth and make it green.

auntjemima

[H]ard DCOTM x2

- Joined

- Mar 1, 2014

- Messages

- 12,141

> It could be that years of liek omg bros, that 125w cpu is like totally a space heater bro mentality has cheesed off some people.
>
> Gotta save the earth and wallet dudes. Now watch this OC of my 88w cpu hit 150w per hour. You gonna see some serious benchies. To save the earth and make it green.

I think the issue with a 150w cpu in the past (and I'm assuming you're crying about bulldozer here) is that its performance didn't match its power draw or heat. There was the issue.

I think the issue with a 150w cpu in the past (and I'm assuming you're crying about bulldozer here) is that its performance didn't match its power draw or heat. There was the issue.

And yet it was cheaper than its i5 equivalent and likely better in the long run given it had 8 threads.

auntjemima

[H]ard DCOTM x2

- Joined

- Mar 1, 2014

- Messages

- 12,141

> And yet it was cheaper than its i5 equivalent and likely better in the long run given it had 8 threads.

Except it wasn't.

> 600 watts for 1 piece of hardware is new issue. Also very few did SLI and only the tiniest fraction ever had 3 cards or more. You also have to deal with all this heat and electrical demand and I don't feel like redoing my house because I upgraded my computer. Only reason you used more power back in the day was you were stuffing you PC with more hardware, now just 1 piece is sucking down the power. To me it just shows just how much of a wall they are starting to hit to increase the performance now.

You don't have to buy it is the answer. Now how many folks will have 4090's? Very very few except for localized pockets where like minded folks visit. I expected Hardforum members here to have a noticeable buy in on the 4090 but what is the percent of the members going to get the 4090?

As for 1 piece of hardware or many equalling the same power usage, I see as irrelevant, I rather deal with one vice having to have several to get the performance I want. Just my 1 cent.

Thunderdolt

Gawd

- Joined

- Oct 23, 2018

- Messages

- 1,015

> No crime was committed by pointing out it's a power hog, my 6900xt is not exactly thrifty either but it doesn't need to be pushed either to run my stuff at 1440p.

The 6900XT is an electricity burning pig of a card compared to a 4090. A 4090 that is throttled to about 100W can pull off the same frame rates as the 6900XT. Given that having the lowest possible wattage is now the primary selection criteria for GPUs, it seems like you should be pretty eager to trade in that coal-burning clunker for today's new solar-powered EV.

Thunderdolt

Gawd

- Joined

- Oct 23, 2018

- Messages

- 1,015

> What's your explanation for the increase of the cooler size and the resulting comically gigantic models, for a card that uses about or less power than a 3090Ti?

Most likely image-driven marketing, giving OC headroom for early adopters who want to push headlines, and to help fortify the softening epeens of today's aging gamers.

Based on what techtubers have been posting, it seems the FE cooler is overkill even when the TDP slider is turned up to 600. Not only that, but they're also seeing fairly minimal performance gains when they do this. Go figure.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,541

The 6900XT is an electricity burning pig of a card compared to a 4090. A 4090 that is throttled to about 100W can pull off the same frame rates as the 6900XT. Given that having the lowest possible wattage is now the primary selection criteria for GPUs, it seems like you should be pretty eager to trade in that coal-burning clunker for today's new solar-powered EV.

That is literally the dumbest thing I have read on this forum. Why the heck would I spend 1600 dollars to notice 0 difference in how my games play at 1440p. I also tend to skip generations, 290x, 1080 and 6900xt as my current card run everything I want perfectly and I don't upgrade just to impress people on a forum with benchmark numbers. Even better you can turn on DLSS 3 and enjoy higher latency and fake frames that cause artifacting as well, but you get larger meaningless numbers from a benchmark to brag about while image quality goes down the drain. Make sure you buy two as well for completely useless SLI, plus they need money as I am not buying along with many others, oh and enjoy the heat it causes in the room as well.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,541

You don't have to buy it is the answer. Now how many folks will have 4090's? Very very few except for localized pockets where like minded folks visit. I expected Hardforum members here to have a noticeable buy in on the 4090 but what is the percent of the members going to get the 4090?

As for 1 piece of hardware or many equalling the same power usage, I see as irrelevant, I rather deal with one vice having to have several to get the performance I want. Just my 1 cent.

Go ahead if you want to, were just not all going to agree it's a good thing. I am sure Nvidia is going to need lots of people that agree with you as well to keep their stock anywhere near where it is right now. I am curious to see how the AMD cards do, even though I am not buying this generation, my Dad however is in the market for a new computer build.

Thunderdolt

Gawd

- Joined

- Oct 23, 2018

- Messages

- 1,015

> Why the heck would I spend 1600 dollars to notice 0 difference in how my games play at 1440p.

Wait - performance matters again? I thought wattage was the only concern?

Certainly you'd notice how ice cold your office was if you were able to play games at a lower wattage, right?

Is this not HardUCP?

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,541

Wait - performance matters again? I thought wattage was the only concern?

Certainly you'd notice how ice cold your office was if you were able to play games at a lower wattage, right?

Cool story bro, show me a quote where I say power draw is the only thing I care about.

I noticed the difference from the 1080 to the 6900xt, so yeah it's noticeable.

can't wait until the next witty retort you have.

> Go ahead if you want to, were just not all going to agree it's a good thing. I am sure Nvidia is going to need lots of people that agree with you as well to keep their stock anywhere near where it is right now. I am curious to see how the AMD cards do, even though I am not buying this generation, my Dad however is in the market for a new computer build.

ahmmm, kinda in same boat, don't see the need for the added performance, do think it is prudent to at least see RDNA 3 solutions, DLSS 3 so far is lol. As for power, people have been using 850w PS in their systems for over a decade and that is all that is required for most 4090 systems -> This is nothing new. Have to also look at performance/watt which the 4090 does not look too shabby for an Enthusiast card at this time compared to older generations, AMD may change that, hopefully so. Plus, no DP2? or DP2.1? makes the card much less desirable. I usually keep cards 3+ years and more like 5 years doing something, which in that time, proper monitors for that kind of GPU performance should be out.

Still own my Vega 64 LC (no longer used), stock 345w, +50% power I was pushing over 500w from that card -> now talking about a waste of power, that was it and that was over 5 years ago. Now if one does not need what 450w on the 4090 delivers but could use what 350w or 400w can, people can choose to do that with minimal amount of effort, up the power as needed. Some may prefer 450w or above, each their own.

> Most likely image-driven marketing, giving OC headroom for early adopters who want to push headlines, and to help fortify the softening epeens of today's aging gamers.

Ah, yes, the "I crave for the card that barely fits my case, blocks expansion slots, needs an anti-sag bracket, for massive 6% OC gains" market.

auntjemima

[H]ard DCOTM x2

- Joined

- Mar 1, 2014

- Messages

- 12,141

> Cool story bro, show me a quote where I say power draw is the only thing I care about.
>
> I noticed the difference from the 1080 to the 6900xt, so yeah it's noticeable.
>
> can't wait until the next witty retort you have.

The reason people are mentioning power to you, and you already know this, is you brought up the power hog it is.

Unfortunately for you the card can use significantly less power than your current card and perform the same. It's also significantly faster at stock than your card.

Seems pretty silly for you to want a card that performs worse at the same wattage after moaning about Nvidia's power. That's all. And to move the goal posts from wattage to Nvidia stock price when it was pointed out? Mega eye roll.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,541

The reason people are mentioning power to you, and you already know this, is you brought up the power hog it is.

Unfortunately for you the card can use significantly less power than your current card and perform the same. It's also significantly faster at stock than your card.

Seems pretty silly for you to want a card that performs worse at the same wattage after moaning about Nvidia's power. That's all. And to move the goal posts from wattage to Nvidia stock price when it was pointed out? Mega eye roll.

It is a power hog and it was built with the idea of it pulling 600 watts and it peaks at around 450 watts under stock conditions. Only plus is at least it performs at 4K for all that power it uses.

At 1440p at which I run the 4090 is not that much faster on some games, unless were going to talk about ray tracing. Seems like Nvidia drivers need some tuning on some games.

6900 XT uses 300 watts or so which is less then the 4090 and most times it's around 250 watts or less when playing games for me and I get to keep 1,600 dollars in my wallet. Only moaning seems to be coming from you that I think it's a power hog and I am not impressed with it. I just think it's funny how defensive you two are, your welcome to buy as many as you want despite my opinion.

> It is a power hog and it was built with the idea of it pulling 600 watts and it peaks at around 450 watts under stock conditions. Only plus is at least it performs at 4K for all that power it uses.
>
> At 1440p at which I run the 4090 is not that much faster on some games, unless were going to talk about ray tracing. Seems like Nvidia drivers need some tuning on some games.
>
> 6900 XT uses 300 watts or so which is less then the 4090 and most times it's around 250 watts or less when playing games for me and I get to keep 1,600 dollars in my wallet. Only moaning seems to be coming from you that I think it's a power hog and I am not impressed with it. I just think it's funny how defensive you two are, your welcome to buy as many as you want despite my opinion.

I don't know what you are trying to say here but it sounds like you already own a 6900XT and you play at 1440p, and you are saying that you don't see the need to upgrade to a 4090.

In which case I agree at 1440p with a 4090 you will always be CPU limited with the current offerings out there the 4090 is not for you.

But in terms of power usage, the 4000 series is more power efficient than both AMD and Nvidia's previous architectures.

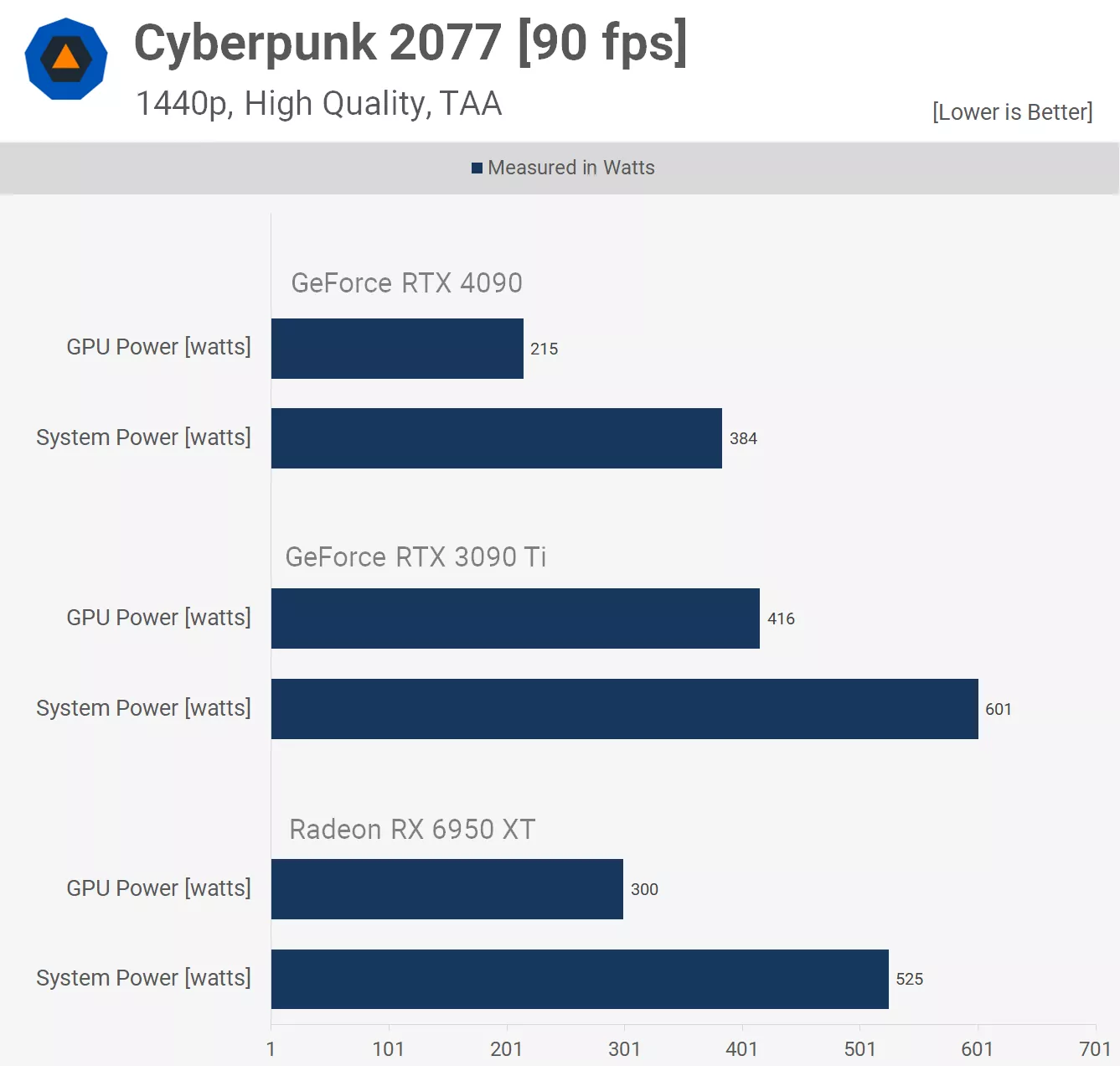

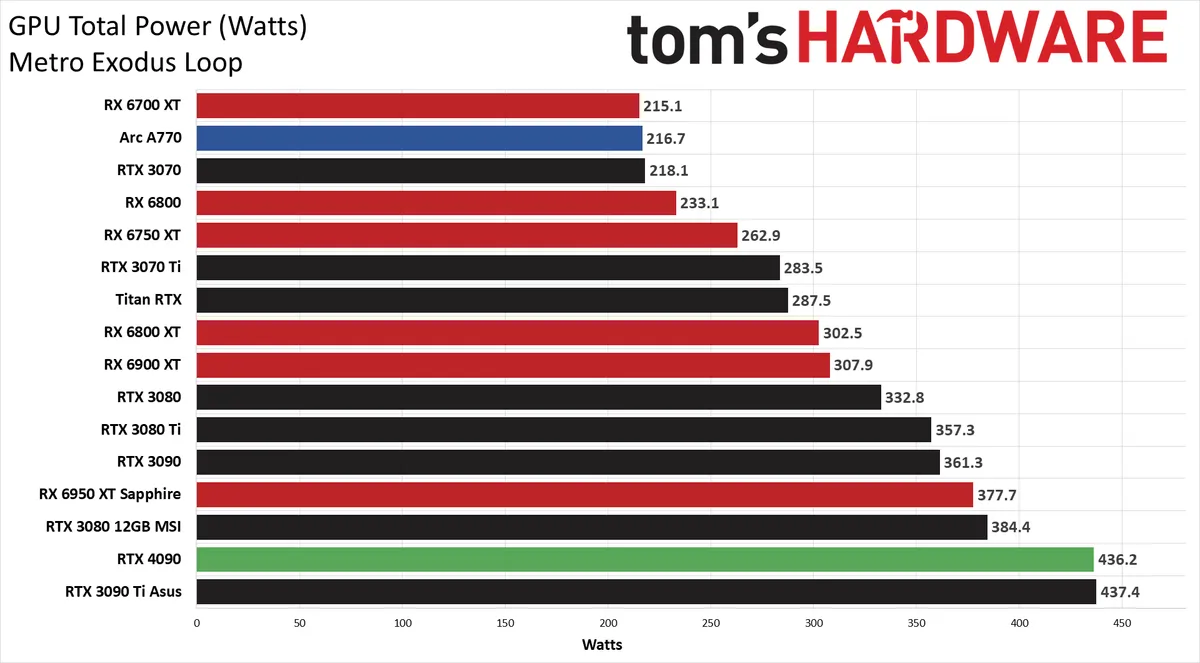

Below is one slide of one game but there are lots of reviews out there that have made this comparison and it's pretty unanimous.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,541

I don't know what you are trying to say here but it sounds like you already own a 6900XT and you play at 1440p, and you are saying that you don't see the need to upgrade to a 4090.

In which case I agree at 1440p with a 4090 you will always be CPU limited with the current offerings out there the 4090 is not for you.

But in terms of power usage, the 4000 series is more power efficient than both AMD and Nvidia's previous architectures.

Below is one slide of one game but there are lots of reviews out there that have made this comparison and it's pretty unanimous.

View attachment 520097

Hmm odd chart

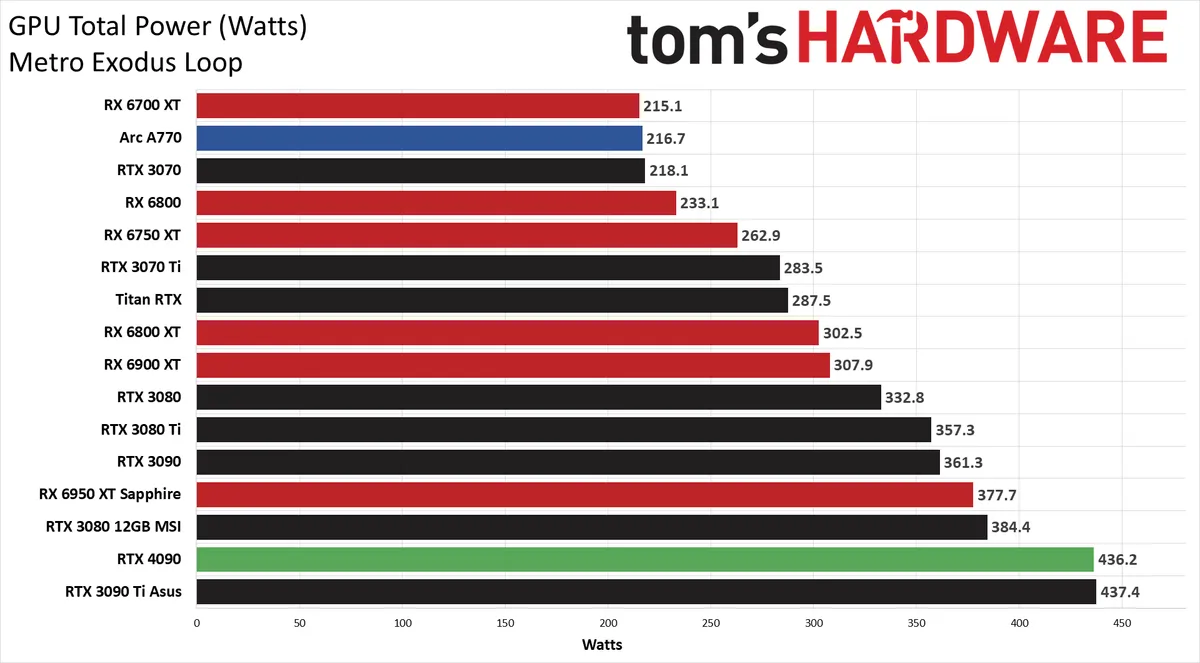

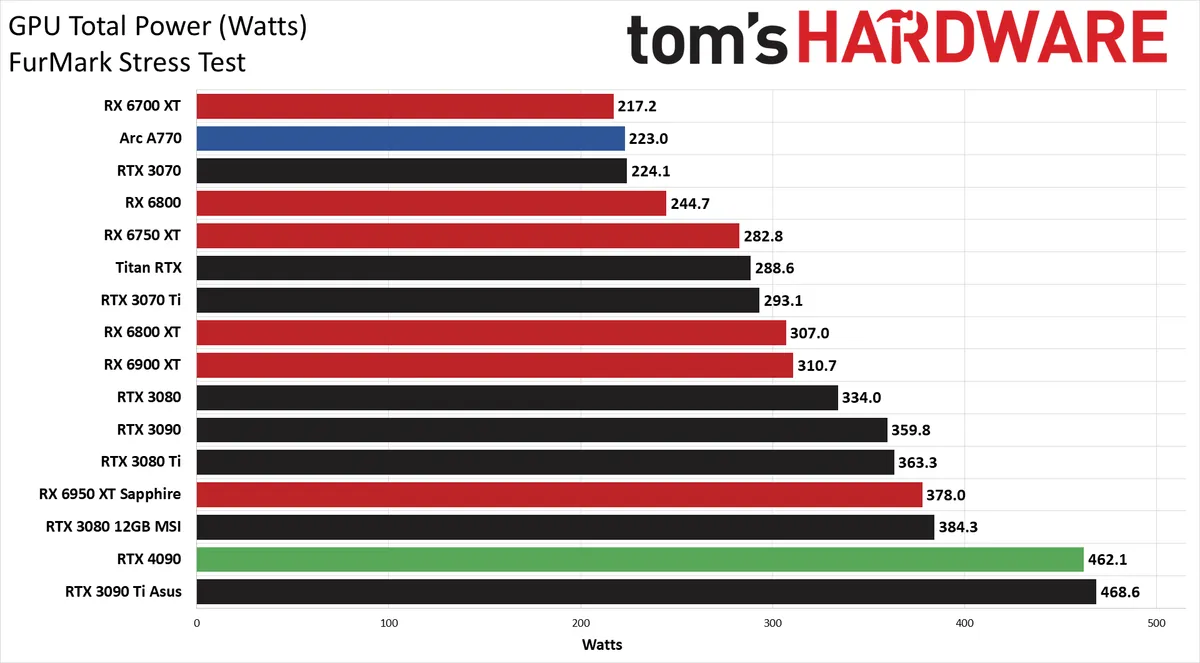

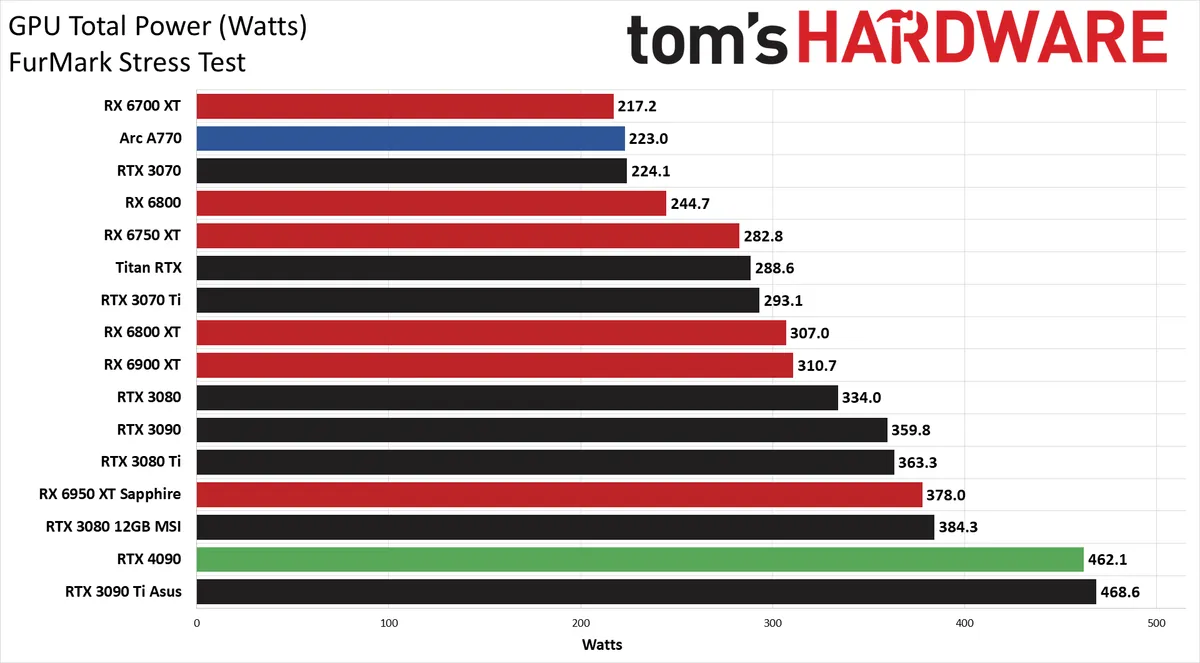

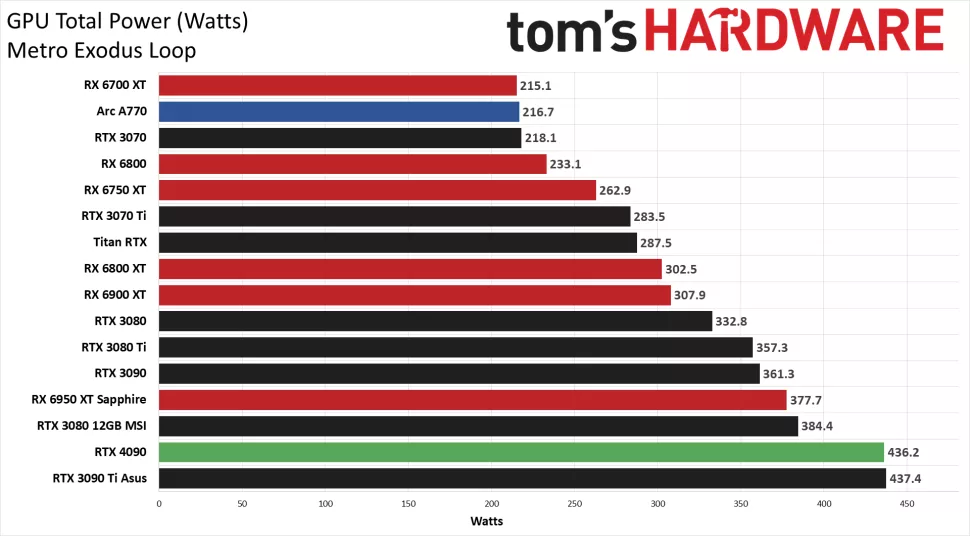

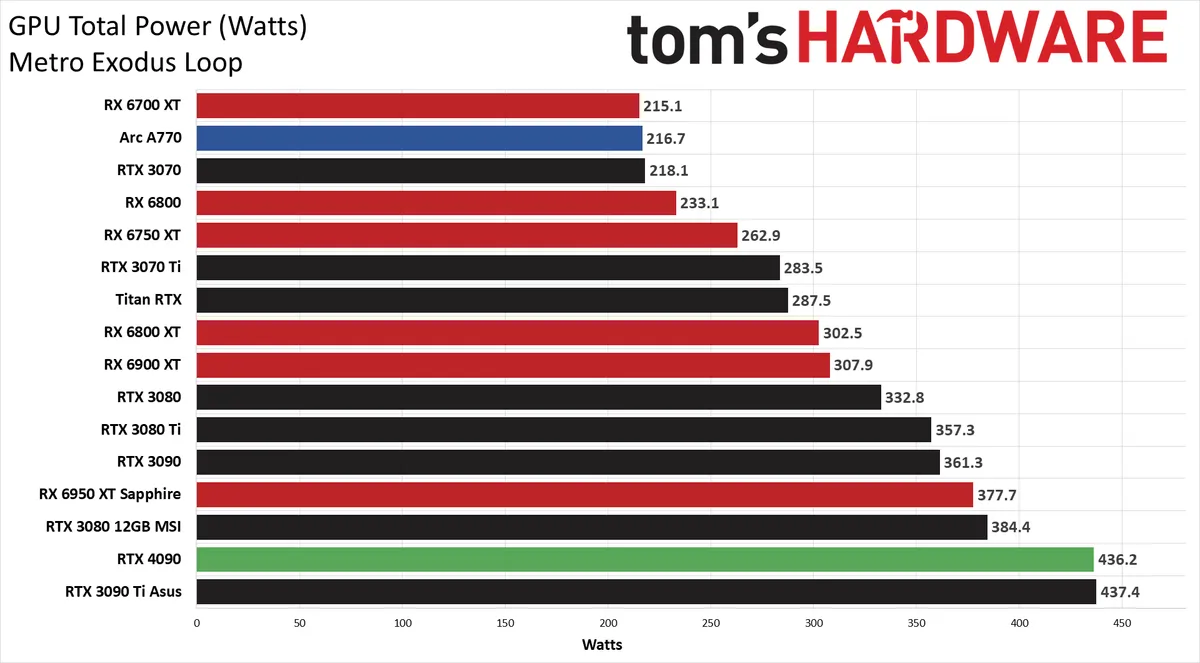

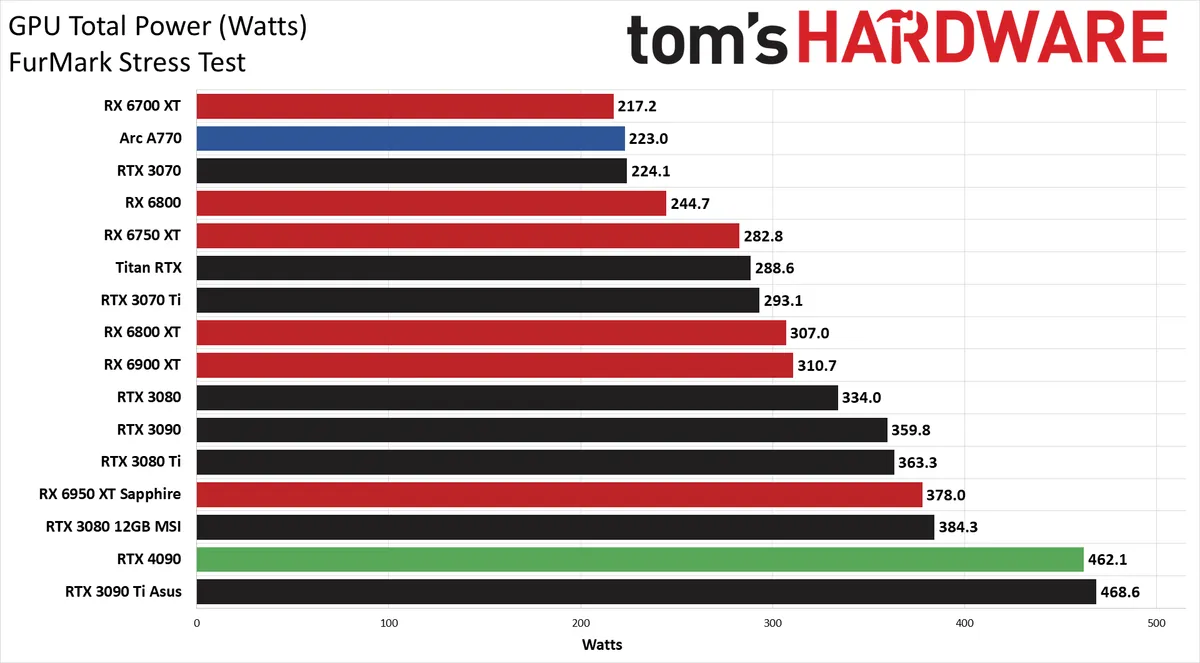

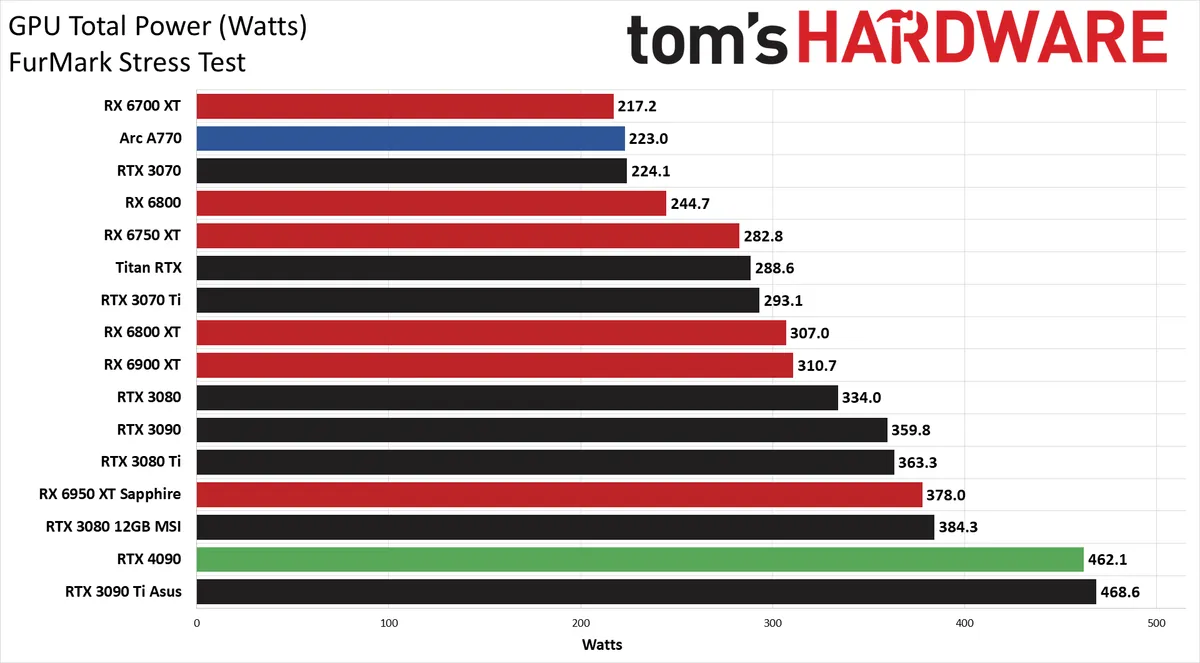

> Hmm odd chart

The test done by Toms Hardware shows the 4090 pulling 462.1 watts while doing 116.3 fps average in Metro Exodus, while the 6950XT pulling 378.0 watts is only putting out 57.6 fps.

So if you are looking for watts per frame the 4090 is well ahead.

4090 3.97w per frame

6950XT 6.56w per frame

https://www.tomshardware.com/reviews/nvidia-geforce-rtx-4090-review/4
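For anyone who wants to redo the math with other cards, here is a minimal Python sketch of the watts-per-frame arithmetic. The function names `watts_per_frame` and `power_to_match` are made up for illustration, and the second one assumes perfect linear scaling of frame rate with power, which real cards do not achieve, so treat its result as a rough estimate only.

```python
def watts_per_frame(watts: float, fps: float) -> float:
    """Average board power divided by average frame rate (lower is better)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return watts / fps


def power_to_match(watts: float, fps: float, target_fps: float) -> float:
    """Power a card would need to hit target_fps.

    ASSUMPTION: frame rate scales perfectly linearly with power.
    Real hardware does not behave this way; this is only a ballpark.
    """
    return watts_per_frame(watts, fps) * target_fps


# Figures quoted in this thread from the Tom's Hardware Metro Exodus test:
# (average watts, average fps)
cards = {
    "4090": (462.1, 116.3),
    "6950XT": (378.0, 57.6),
}

for name, (watts, fps) in cards.items():
    print(f"{name}: {watts_per_frame(watts, fps):.2f} W per frame")
```

Swapping in numbers from a different review or resolution only means changing the `cards` dictionary.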

LodeRunner

Gawd

- Joined

- Sep 8, 2006

- Messages

- 661

That wattage chart is only a somewhat useful comparison tool without the performance numbers.

I wonder how well it will take to undervolting. My 2070S overclocks better when I set it to 85-90% power limit instead of allowing it to go up to 120%.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 935

> That wattage chart is only a somewhat useful comparison tool without the performance numbers.
>
> I wonder how well it will take to undervolting. My 2070S overclocks better when I set it to 85-90% power limit instead of allowing it to go up to 120%.

Well the 4090 is voltage limited, not power limited, so not sure that would be productive like it was with Ampere.

auntjemima

[H]ard DCOTM x2

- Joined

- Mar 1, 2014

- Messages

- 12,141

> The test done by Toms Hardware shows the 4090 pulling 462.1 watts while doing 116.3 fps average in Metro Exodus, the 6900XT while pulling 310.7w is only putting out 57.6 fps.
>
> So you would need a 6900XT pulling 627.33 watts to match the framerate of the 4090, assuming perfect scaling.
>
> https://www.tomshardware.com/reviews/nvidia-geforce-rtx-4090-review/4

Well, now WE are moving goalposts. While its perf/watt is better, it still uses more wattage at stock.

> Well, now WE are moving goalposts. While its perf/watt is better, it still uses more wattage at stock.

I've edited it for clarity since you quoted it, but yes. Given that the person I responded to was calling BS on the post I had put up showing the 4090 drawing significantly less than both the 3090ti and the 6950xt when frame locked, in context it is a perfectly reasonable response.

But in context, it's not even that much more you are looking at just under 440 watts under a normal gaming load, compared to just under 380 for the 6950 xt. So we are talking a difference of 60 watts on the outside which is less than the difference between the 6950 and the 6900.

So again from the toms hardware charts for Metro Exodus which the person I was responding to was using to prove his point.

The 4090 is a 450w part, just like the 3090 Ti before it. The card's power delivery may be capable of up to 600w, and the cooler may even be able to dissipate it, but at the end of the day, running stock it is still just a 450w part.

Thunderdolt

Gawd

- Joined

- Oct 23, 2018

- Messages

- 1,015

> Hmm odd chart

1) The Metro loop is done at 4K. At your 1440p, the 4090 power draw goes down because it spends half the time idling waiting for the CPU to catch up.

2) Do you find yourself playing a lot of Furmark?

From Tom's:

For the RTX 4090 Founders Edition, our previous settings of 2560x1440 ultra in Metro and 1600x900 in FurMark clearly weren't pushing the GPU hard enough. Power draw was well under the rated 450W TBP

auntjemima

[H]ard DCOTM x2

- Joined

- Mar 1, 2014

- Messages

- 12,141

> 1) The Metro loop is done at 4K. At your 1440p, the 4090 power draw goes down because it spends half the time idling waiting for the CPU to catch up.
>
> 2) Do you find yourself playing a lot of Furmark?
>
> From Tom's:
>
> For the RTX 4090 Founders Edition, our previous settings of 2560x1440 ultra in Metro and 1600x900 in FurMark clearly weren't pushing the GPU hard enough. Power draw was well under the rated 450W TBP

Shhh you'll scare him!

> Hmm odd chart

ye olde project plowshare I see, the ultimate doubling down https://st.llnl.gov/news/look-back/plowshare-program

Hmm odd chart

Lmao. Dude... You gotta stop.

Watts/frame is what matters.
