Hi guys, could you please help me understand PCIe lanes? Here are the points I'm currently "struggling" with:

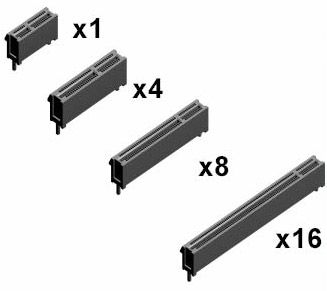

- A "full-bandwidth" slot is one whose number of contacts (e.g. x8) equals the maximum number of lanes (i.e. 8) the slot can connect to. Is it right that the number of lanes available to full-bandwidth slots is limited by the MoBo and the CPU (whichever has fewer lanes), while the number of lanes available to secondary, non-full-bandwidth slots is limited by the MoBo and the chipset (whichever has fewer lanes)? In other words, if the CPU has 16 lanes, the MoBo has 18 and the chipset has 20, will there end up being 16 lanes (theoretically) available to full-bandwidth slots and 18 lanes to non-full-bandwidth slots? Is that correct?

- Why doesn't the logic from point #1 (if it's correct, of course!) hold for an AMD X570 chipset paired with an AMD Ryzen 3000 (Zen 2) CPU? Ryzen 3000 has 24 PCIe lanes (4 to connect to the chipset and the remaining 20 for full-bandwidth slots, e.g. an x16 GPU and an x4 NVMe M.2 SSD), while the X570 chipset has 16 lanes, all available to non-full-bandwidth slots. So, at the end of the day, all 40 (24 + 16) lanes coexist and work, which doesn't seem to fit the logic described in point #1 (the chipset should have reduced the CPU's lanes to 16, but it didn't!).

- How would you finish this sentence: "the total number of lanes needed for all current and future devices to run at their maximum performance shall... [be not less than..., be equal to..., etc.]"?

- What happens if a device gets fewer lanes than the maximum it supports? Does an SSD start to read/write data more slowly, or does a GPU start to generate fewer fps?

- Is it correct that different MoBos built on the same chipset may have a different number of available lanes, because each MoBo manufacturer routes/hardwires lanes as they wish? Should fewer lanes result only in fewer slots, or also in slower port speeds?
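Points #1 to #3 mostly come down to bookkeeping per lane pool. Here is a minimal Python sketch of that bookkeeping, assuming the figures quoted in point #2 (24 CPU lanes, 4 of them reserved for the CPU-to-chipset link, and 16 chipset lanes). The device list and the names `Device`, `lane_budget` and `fits` are hypothetical. It treats "every device runs at full width" as "no pool is asked for more lanes than it has"; it does not model how a particular MoBo wires its slots, nor the fact that everything hanging off the chipset shares that 4-lane uplink to the CPU.

```python
# Minimal lane-budget sketch. Pool sizes are the figures quoted in the
# question (Ryzen 3000 + X570); the device list is hypothetical.
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    lanes: int  # lanes the device needs to run at full link width
    pool: str   # "cpu" or "chipset": which lane pool its slot is wired to


# Usable lanes per pool (assumption: 24 CPU lanes minus 4 for the chipset link)
POOLS = {"cpu": 24 - 4, "chipset": 16}


def lane_budget(devices):
    """Return {pool: (lanes requested, lanes available)}."""
    used = {pool: 0 for pool in POOLS}
    for d in devices:
        used[d.pool] += d.lanes
    return {pool: (used[pool], POOLS[pool]) for pool in POOLS}


def fits(devices):
    """True if no pool is over-subscribed, so every device can get full width."""
    return all(req <= avail for req, avail in lane_budget(devices).values())


devices = [
    Device("GPU", 16, "cpu"),
    Device("NVMe SSD #1", 4, "cpu"),
    Device("NVMe SSD #2", 4, "chipset"),
    Device("10GbE NIC", 4, "chipset"),
]

print(lane_budget(devices))  # {'cpu': (20, 20), 'chipset': (8, 16)}
print(fits(devices))         # True
```

With this hypothetical device list the CPU pool is exactly full (20 of 20) and the chipset pool is half used (8 of 16); adding even one more CPU-attached lane makes `fits` return `False`.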
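For point #4, the theoretical ceiling of a PCIe link scales linearly with its lane count. A rough Python sketch, using the per-lane transfer rates and line encodings of PCIe 1.0 to 4.0 and ignoring protocol overhead, so real throughput is somewhat lower. The `GEN` table and `link_bandwidth_gb_s` name are illustrative. It only computes the ceiling; whether an SSD or a GPU actually slows down depends on how much of that bandwidth the device really uses.

```python
# Theoretical one-direction bandwidth of a PCIe link.
# Protocol overhead (packet headers, flow control) is ignored,
# so real-world throughput is lower than these figures.
GEN = {
    # generation: (transfer rate in GT/s per lane, encoding efficiency)
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Theoretical bandwidth in GB/s per direction for a PCIe link."""
    rate_gt, efficiency = GEN[gen]
    # one transfer carries one bit per lane; divide by 8 to get bytes
    return rate_gt * efficiency / 8 * lanes


for lanes in (4, 2, 1):
    print(f"PCIe 3.0 x{lanes}: {link_bandwidth_gb_s(3, lanes):.2f} GB/s")
# PCIe 3.0 x4: 3.94 GB/s
# PCIe 3.0 x2: 1.97 GB/s
# PCIe 3.0 x1: 0.98 GB/s
```

Halving the lanes halves the ceiling, and each newer generation doubles it per lane, so a PCIe 4.0 x2 link has roughly the same ceiling as a PCIe 3.0 x4 link.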
