I would really, really not advise investing too much attention to 'features' on AMD cards. Going back through their lineage even to the first ATi GPUs, there is a long history of AMD / ATi introducing 'features' that do not get used until they make their way into DirectX and are supported by other vendors, and in a form that is not compatible with what is originally released. Navi could always buck the trend, but it's a strong trend.

GCN supported DX 12 which happened way beyond 7970 launch and supported it much better than Maxwell and even Pascal for that matter. Probably the best example in favor of AMD - not too many others. Nvidia tends to put a lot of money, effort and support into new features. While at times it turns out to be a power play that fails (PhysX, GameWorks, GSync). I like that Nvidia took the chance and pushed out RTX - very cool! I do not like the price tag that came with it, and at present, at least for me, it is not enough of an improvement to bother with for the cost and performance hit. I don't know, will have to see what Nvidia does with Super.

Navi discussion thread

- Thread starter TheRookie

- Start date

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

GCN supported DX 12 which happened way beyond 7970 launch and supported it much better than Maxwell and even Pascal for that matter.

Sure, but they got 'features' into DX12 that then weren't used for more than a single game, and Nvidia didn't bother offering support (not sure about Intel). Even if they get ahead of the DirectX release- and even if the features are used on consoles!- AMD / ATi has a long record of not getting software support for them. They really haven't had a technology leadership success since the ATi 9700 Pro.

While at times it turns out to be a power play that fails (PhysX, GameWorks, GSync)

These are all successes- PhysX is the most used physics engine, GameWorks is implemented in DirectX and gets the hardware actually used, and G-Sync still sets the standard for VRR. PhysX and G-Sync don't appear to have gone the way Nvidia likely wanted them to go, but they have still had a massive impact. GameWorks is perhaps one of the better things they've done- Nvidia has always led in terms of supporting developers, which is understandable given that it's the software that sells the hardware (see Xbox One sales versus PS4...).

crazycrave

[H]ard|Gawd

- Joined

- Mar 31, 2016

- Messages

- 1,878

I would really, really not advise investing too much attention to 'features' on AMD cards. Going back through their lineage even to the first ATi GPUs, there is a long history of AMD / ATi introducing 'features' that do not get used until they make their way into DirectX and are supported by other vendors, and in a form that is not compatible with what is originally released. Navi could always buck the trend, but it's a strong trend.

FreeSync released with the 290X, and I use it at times.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

FreeSync released with the 290X, and I use it at times.

Why is this relevant? Freesync released well after G-Sync.

Point is the hardware had this capability and was supported, while Nvidia did not support it until it became obvious they should, even though their hardware was fully capable of supporting the VESA Adaptive-Sync standard (Nvidia power grab failure again). Navi's new features such as lower lag and sharpening will work right out of the gate. The wave32 programming, which will increase performance significantly, will happen since next generation consoles will have this same ability.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

Point is the hardware had this capability

You are making a massive omission here- it wasn't the GPU makers that were the holdup, it was the display makers. Nvidia forged ahead by solving the whole problem and then producing appropriate hardware that wound up in displays, before AMD even had a proof of concept to show. They threw one together last minute.

That's why it's irrelevant.

AMD was working with VESA on the displayport 1.2a standard for an open standard. Nvidia did not participate, nor did it solve any problem for VESA. They came up with a proprietary, costly hardware standard restricted to their own hardware, which cost manufacturers extra to implement. Anyway, the winner was Adaptive Sync (FreeSync), open standards, AMD, VESA and everyone else who can now have HDTVs with Adaptive Sync, gaming consoles, cheaper higher quality monitors etc. Nvidia's power grab once again failed. In this case a feature AMD helped forge, which Nvidia could use (pulling teeth in this case for Nvidia to benefit gamers), happened and won. AMD worked with the display manufacturers to give a better solution which has now been accepted even by Nvidia. No one really cares about Gsync anymore and Navi fully supports FreeSync II, HDR plus adaptive sync, HDMI Adaptive Sync (Nvidia?) etc.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

AMD was working with VESA on the displayport 1.2a standard for an open standard.

Remember that Nvidia used the base standard for mobile G-Sync. They were quite aware that it was available, in mobile panels, and completely unavailable on the desktop. They had to do that themselves. You remember AMD's first hacked-together laptop demo after Nvidia demoed G-Sync on a monitor with a desktop GPU, right?

The relevant DP standard is used for power saving. That's why it exists in the first place, and why it was available on mobile. Yeah, everyone was aware of it. Even Intel. Even phone SoC makers which use similar technology. Not a new thing, and definitely not 'invented' by AMD.

I will concede that now, years later, Freesync 2 has more or less reached experience parity with G-Sync. That is, where G-Sync was when it released. We'll probably have to wait for Freesync 3 before AMD actually forces display vendors to adhere to the level of quality that Nvidia requires for G-Sync monitors though.

No one really cares about Gsync anymore

Some actually care about getting the best VRR experience. Even Samsung is shipping G-Sync monitors for the first time, and there's stuff coming out of LG that's extremely promising. As usual, Nvidia is pushing the innovation, and AMD is lagging to catch up.

Let's just get back to Navi, all that effort Nvidia did was for naught in the end. Navi's competition with Super will be interesting, and hopefully AMD just reduces the price outright, because what they are pitting Navi against is a moving target and will be at a lower price. Don't know what Nvidia will strike Navi with, but Nvidia is good at responding when they have a whole frinking lineup all ready to let loose. Everything AMD put out in E3 will be wrong shortly dealing with performance/price/competition. lol

5700 $349

5700XT $399

5700XT Anniversary Edition $449

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

all that effort Nvidia did was for naught in the end.

I'd point out that consumer awareness of VRR and demand for quality solutions (i.e., not what Freesync was when it was introduced) has been heightened. Perhaps if you only view G-Sync as a 'power play', you won't see that, but I call that 'mission accomplished'.

Everything AMD put out in E3 will be wrong shortly dealing with performance/price/competition. lol

They're going to have to undercut Nvidia on price / performance just as a practicality. If Nvidia offers a similar performing part with RT, AMD has to be cheaper. That's how the marketing will play out.

So, good for those looking in the resulting price brackets for something new

Navi competition as well as Vega Vii pricing considerations

https://videocardz.com/81046/nvidia-geforce-rtx-20-super-series-to-launch-mid-july

If the above information is accurate:

2080 - 2080 Super has a 4% increase in CUDA cores - not much to write home about unless clock speeds are increased, which one could OC anyways.

2070 - 2070 Super has an 11% increase in CUDA cores, which should make this faster than the Navi 5700 XT, sorry AMD you will be limping out of the gate

2060 - 2060 Super has a 13% increase in CUDA cores, 8 GB memory, and a wider memory bus meaning much larger memory bandwidth - this looks to be a very good part but I do not think it will overtake the Navi 5700, but then the regular 2070 pricing will be interesting.

It really comes down to pricing in the end. Vega Vii pricing may look high if the regular 2080 price is cheaper. Not to mention that the 1650, 1660 and 1660 Ti prices will be lowered making the Rx 5xx series pointless.

I just don't see AMD competing here well unless they further lower their pricing on Navi and also come out with more viable products.
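For anyone who wants to check the core-count percentages quoted above, here is a minimal Python sketch. The core counts are an assumption: they come from the rumored and later published spec sheets, not from anything stated in this thread, so treat the numbers as illustrative.

```python
# CUDA core counts as (base card, Super card).
# ASSUMPTION: values taken from public spec sheets, not from this thread.
CUDA_CORES = {
    "RTX 2060": (1920, 2176),
    "RTX 2070": (2304, 2560),
    "RTX 2080": (2944, 3072),
}


def percent_increase(base: int, refreshed: int) -> float:
    """Relative increase of `refreshed` over `base`, in percent."""
    return (refreshed - base) / base * 100.0


# Percentage gain in CUDA cores for each Super refresh.
increases = {
    card: percent_increase(base, refreshed)
    for card, (base, refreshed) in CUDA_CORES.items()
}

for card, pct in increases.items():
    print(f"{card} -> {card} Super: +{pct:.1f}% CUDA cores")
```

Core count alone says nothing about clocks or memory bandwidth, so this only covers the part of the comparison the post is actually quoting.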

Pieter3dnow

Supreme [H]ardness

- Joined

- Jul 29, 2009

- Messages

- 6,784

It really comes down to pricing in the end. Vega Vii pricing may look high if the regular 2080 price is cheaper. Not to mention that the 1650, 1660 and 1660 Ti prices will be lowered making the Rx 5xx series pointless.

I just don't see AMD competing here well unless they further lower their pricing on Navi and also come out with more viable products.

AMD marketing left for Intel it seems

AMD might have just given up, since even if their offering is faster than the Nvidia counterpart it still won't outsell it or even draw level.

Devoid of any smart ideas on marketing, this might be the end result of what others decided for you.

from https://hardforum.com/threads/navi-discussion-thread.1982708/page-3#post-1044231206

The idea that it is $100 too expensive still makes sense, but how it will impact pricing as things go along is hard to measure.

misterbobby

2[H]4U

- Joined

- Mar 18, 2014

- Messages

- 3,814

Any guesses as to what Navi 20 would be like?

384-bit bus is probably a safe assumption

maybe even the return of the 512-bit bus

How in the hell could they possibly do a 512 bit big Navi when even the little Navi with only 40 compute units and a 256 bit bus is at 225 watts?

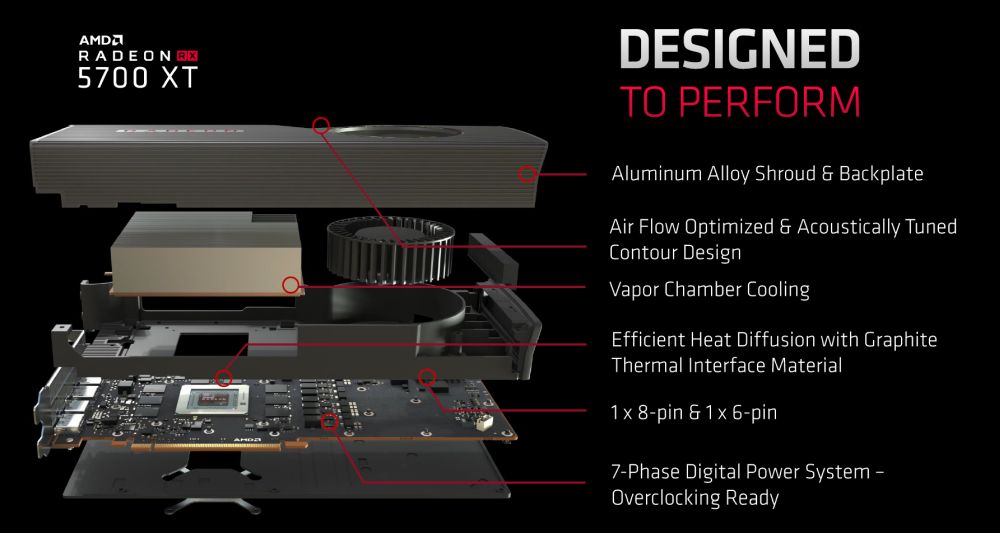

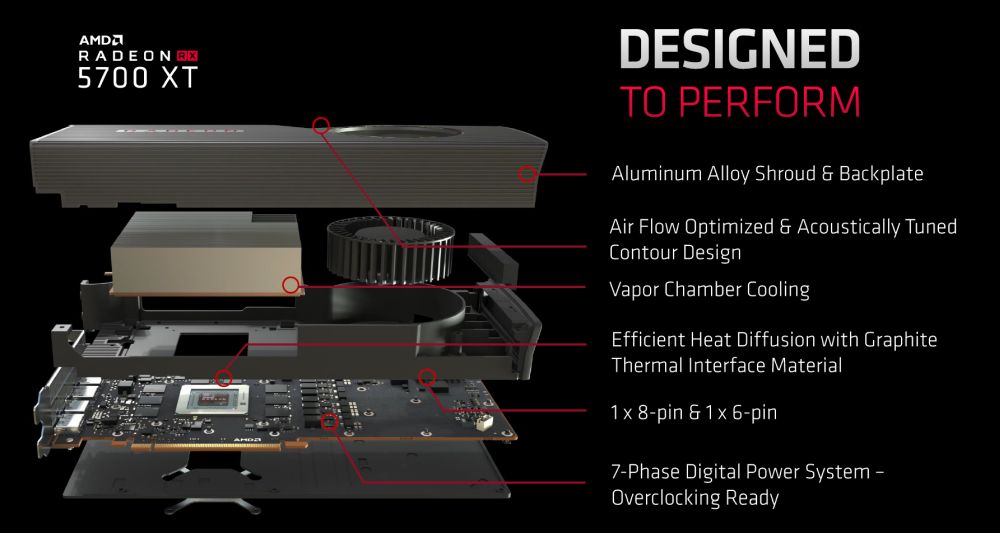

And I really just do not get the 5700 series as they are very power hungry for what they are and cost quite a lot. Who the hell are these cards for? Someone with a Vega 56 or 64 will not see a big upgrade and someone with a 570 or 580 is not the type of person to spend 400-450 bucks on a gpu. And anybody that was maybe looking at a 2070 but wanted to wait for Navi hoping for lower prices essentially waited for nothing. There have already been occasional sales on the 2070 for well under 450 bucks. And that was on cards with good coolers, not the blower crap that Navi will come with at its MSRP.

Pieter3dnow

Supreme [H]ardness

- Joined

- Jul 29, 2009

- Messages

- 6,784

And I really just do not get the 5700 series as they are very power hungry for what they are and cost quite a lot. Who the hell are these cards for? Someone with a Vega 56 or 64 will not see a big upgrade and someone with a 570 or 580 is not the type of person to spend 400-450 bucks on a gpu. And anybody that was maybe looking at a 2070 but wanted to wait for Navi hoping for lower prices essentially waited for nothing. There have already been occasional sales on the 2070 for well under 450 bucks. And that was on cards with good coolers, not the blower crap that Navi will come with at its MSRP.

In the previously linked video AdoredTV calculates how much Polaris costs to make, since Navi is the replacement for it. It ends up that with the RAM, Navi is about $50 more expensive to make than Polaris.

When you put those things together with the MSRP for the Polaris RX 480, you can come to only one conclusion: AMD marketing is not going to work any more, because they cannot make the difference when selling products in certain slots.

The other thing is that Intel is expected to join the GPU market, and the time they can get some extra money for their GPUs is now rather than when the market has 3 players.

People that wanted to buy the 2070 have no other option than to go with Nvidia; no one that buys Nvidia will wait for AMD. That is reflected in most of the sales data. There is no benefit in choosing the RTX 2070, because the segmented performance makes the card a no-no for the RTX feature. That leaves DLSS, which in my opinion is a worse option than the AMD counterpart, FidelityFX.

And yes, the blower is somewhat puzzling, but this time around they limited the blower RPM, so now instead of thinking someone is doing chores in the house, you will hit the thermal ceiling and the card just slows down.

Pieter3dnow

Supreme [H]ardness

- Joined

- Jul 29, 2009

- Messages

- 6,784

https://fudzilla.com/news/graphics/48893-custom-radeon-rx-5700-cards-come-in-mid-august

According to our sources, custom Radeon RX 5700 won't be available before mid-August.

It appears that the Radeon RX 5700 series will only be available with reference design when it launches on July 7th as custom versions from usual AMD AIB partners won't be available before mid-August, in best case scenario.

I am still flummoxed by the price of Navi

If Nvidia with such a large chip size still has room to cut prices of 2060 & 2070

Then why can't Navi, with only half the chip size, come at a lesser price

Is it because of more expensive VRM & cooling or latent mining demand for Navi cards ?

funkydmunky

2[H]4U

- Joined

- Aug 28, 2008

- Messages

- 3,866

I am still flummoxed by the price of Navi

If Nvidia with such a large chip size still has room to cut prices of 2060 & 2070

Then why can't Navi, with only half the chip size, come at a lesser price

Is it because of more expensive VRM & cooling or latent mining demand for Navi cards ?

They will and are, but they do not need to be very aggressive yet. They are targeting a nice profit margin on these chips, and as long as they sell well they will be happy to slightly undercut Nvidia and keep the $$. This isn't the bankrupt AMD to all our chagrin.

I am still flummoxed by the price of Navi

If Nvidia with such a large chip size still has room to cut prices of 2060 & 2070

Then why can't Navi, with only half the chip size, come at a lesser price

Is it because of more expensive VRM & cooling or latent mining demand for Navi cards ?

NVidia hasn't cut prices, and really isn't expected to, except maybe to clear some models for the Super Refresh.

7nm is much denser, but more expensive than 12nm (really 16nm), so price per transistor is very similar, as are the transistor counts: 10.8 billion for the 2070 vs 10.3 billion for the 5700.

5700 is cheaper to produce than 2070, but not by a lot.
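To put rough numbers on the density argument, here is a small Python sketch. The transistor counts are the ones quoted above; the die areas are an assumption taken from public spec listings rather than from this thread, and yield is ignored entirely, so this is a back-of-the-envelope illustration only.

```python
# Transistor counts are the ones quoted in the post above.
# ASSUMPTION: die areas come from public spec listings, not from this thread.
CHIPS = {
    "RTX 2070": {"transistors_bn": 10.8, "die_mm2": 445.0},  # 12nm
    "RX 5700": {"transistors_bn": 10.3, "die_mm2": 251.0},   # 7nm
}


def density_mtr_per_mm2(transistors_bn: float, die_mm2: float) -> float:
    """Millions of transistors per square millimetre."""
    return transistors_bn * 1000.0 / die_mm2


densities = {
    name: density_mtr_per_mm2(chip["transistors_bn"], chip["die_mm2"])
    for name, chip in CHIPS.items()
}

# If 7nm silicon costs X times as much per mm2 as 12nm silicon, the two dies
# cost the same when X equals the area ratio (yield is ignored here).
break_even_cost_ratio = CHIPS["RTX 2070"]["die_mm2"] / CHIPS["RX 5700"]["die_mm2"]

for name, value in densities.items():
    print(f"{name}: {value:.1f} MTr/mm2")
print(f"Break-even 7nm/12nm cost per mm2: {break_even_cost_ratio:.2f}x")
```

Under these assumed die sizes, the smaller die only comes out cheaper if 7nm costs less than roughly 1.8 times as much per mm2 as 12nm, which is the sense in which "half the chip size" does not automatically mean half the cost.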

According to our sources, custom Radeon RX 5700 won't be available before mid-August.

Why do they always do this? It's pretty much equivalent to saying the real release date will be mid August at the soonest.

Not really. It's just saying that initially there will only be reference blower designs.

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

According to our sources, custom Radeon RX 5700 won't be available before mid-August.

Why do they always do this? It's pretty much equivalent to saying the real release date will be mid August at the soonest.

No clue what took so long, now it's pretty much a year gone by since the 2070 launch before AMD managed to counter. /shrug

Can't help but feel like AMD really doesn't care that much about the enthusiast GPU market anymore and has settled for a small share just to keep the brand in the segment for visibility.

Much like the R VII was/is around just so they can say "Hey, we're still here".

How in the hell could they possibly do a 512 bit big Navi when even the little Navi with only 40 compute units and a 256 bit bus is at 225 watts?

AMD hasn't been shy about making much more power hungry video cards

Radeon RX Vega 64 is 295W

Radeon RX Vega 64 Liquid Edition is 345W

And I really just do not get the 5700 series as they are very power hungry for what they are and cost quite a lot. Who the hell are these cards for? Someone with a Vega 56 or 64 will not see a big upgrade and someone with a 570 or 580 is not the type of person to spend 400-450 bucks on a gpu. And anybody that was maybe looking at a 2070 but wanted to wait for Navi hoping for lower prices essentially waited for nothing. There have already been occasional sales on the 2070 for well under 450 bucks. And that was on cards with good coolers, not the blower crap that Navi will come with at its MSRP.

It's obviously for people on the Radeon RX 570/580, GeForce GTX 1060 and older video cards.

I am still flummoxed by the price of Navi

If Nvidia with such a large chip size still has room to cut prices of 2060 & 2070

Then why can't Navi, with only half the chip size, come at a lesser price

Is it because of more expensive VRM & cooling or latent mining demand for Navi cards ?

Turing is on 12nm and Navi is 7nm

7nm is much more expensive than 12nm and you can't compare die size alone without considering the different fabrication process.

misterbobby

2[H]4U

- Joined

- Mar 18, 2014

- Messages

- 3,814

AMD hasn't been shy about making much more power hungry video cards

Radeon RX Vega 64 is 295W

Radeon RX Vega 64 Liquid Edition is 345W

It's obviously for people on the Radeon RX 570/580, GeForce GTX 1060 and older video cards.

As I just said, people that buy 570 and 580 cards do not spend 400 to 450 bucks on a video card so it can't possibly be for them.

As I just said, people that buy 570 and 580 cards do not spend 400 to 450 bucks on a video card so it can't possibly be for them.

Really?

So are you saying that I don't exist?

misterbobby

2[H]4U

- Joined

- Mar 18, 2014

- Messages

- 3,814

Really?

So are you saying that I don't exist?

MOST people that buy 570/580 cards do NOT spend 400-450 bucks on a video card. If that was their typical budget then they would already be on a faster more expensive video card.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

As I just said, people that buy 570 and 580 cards do not spend 400 to 450 bucks on a video card so it can't possibly be for them.

Should put some caveats in there. In general I agree that people that are shopping in the Polaris range of cards are not Navi customers, however, every generation there are those that decide they want something from a faster bracket, and perhaps less so, those that own Polaris-level cards for other systems that will purchase Navi for those systems or for something else.

I bought a 570 not long ago for my wife's new machine. It was a great deal... and it's going to see light gaming. Also Linux users so NV is out.

I am building a new machine for me once ryzen 2 is out. I am not as heavy a gamer as I used to be... and frankly nothing on the high end makes me believe I am going to see $800 worth of difference over a 580 (or vega 56). I will probably go with a vega56 as there are some great deals locally and it won't cost me much more than a new 580. I for sure will be looking at pricing on the 5700... probably the vega is going to be the better buy. Still if the price is somewhat close I might splurge a bit from where I was planning to go.

Considering what has been going on between mining and, in general, crap upgrade cycles, I would say it's fair to say a lot of people have been buying lower end GPUs while waiting for something worth spending a bit more money on. For the last 3-4 years 4k gaming hasn't really been an option unless you were willing to jump up to $800+. That seems to still pretty much be the case.

So with something actually exciting like Ryzen 2 launching, it is very possible a lot of people that have been waiting to upgrade will just bite the bullet and spend 400-600 bucks on a video card rather than put a <2060 <vega in their shiny new systems. I have to say if I build a new 3800/3900 system it will be very painful putting another cheapo 1080p card in it. lol

I think that is why AMD has sort of missed a great opportunity with Navi. A lot of returning and first-time AMD users are going to jump on Ryzen 2. It's a bit too bad they didn't price the 5700xt and 5700 just a bit lower to make the choice a non choice. As it is NV is probably going to see a bigger bump than AMD will in GPU sales tied to their Ryzen launch.

misterbobby

2[H]4U

- Joined

- Mar 18, 2014

- Messages

- 3,814

I get really skeptical when someone makes a statement that includes "most people".

Obviously, a statement such as "most people don't want to be murdered" is generally accepted as true, but not statements like yours.

For all you know, someone bought a $200 video card to get into PC gaming and then decided to upgrade to a ~$500 video card for better FPS and/or higher resolution.

This is my last comment to you as you are beyond clueless and not worth wasting any more time on. MOST people typically buy cards in the same price range as they have a budget. Every goddamn company knows this and that is the reason they have different price segments. Exceptions can always happen but AGAIN what you are saying is not the norm as MOST people that bought sub $200 cards are not going to spend 400-450 bucks on a gpu upgrade.

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

I think that is why AMD has sort of missed a great opportunity with Navi. A lot of returning and first-time AMD users are going to jump on Ryzen 2. It's a bit too bad they didn't price the 5700xt and 5700 just a bit lower to make the choice a non choice. As it is NV is probably going to see a bigger bump than AMD will in GPU sales tied to their Ryzen launch.

The biggest possible bump for Nvidia would be 20% more of the market share and that would put them at 100%.

Yet AMD is pushing neither price nor performance and not so sure about innovating either.

I guess sometimes staying afloat is a win too.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

I guess sometimes staying afloat is a win too.

That's all AMD can do until they decide to invest in GPU development again.

That's all AMD can do until they decide to invest in GPU development again.

...and what makes you think AMD hasn't?

Ironic how you think I am clueless when you are the one making an unsupported claim.

According to you:

People who bought $200 video card in the past will buy $200 video card in the future.

People who bought $500 video card in the past will buy $500 video card in the future.

People who bought $700 video card in the past will buy $700 video card in the future.

etc.

Except he isn't really saying that, he is just saying that budget gamers will not go out of their way to spend more than what they budgeted for. I suspect people who tend to spend in a certain price range for a GPU will not go out of their way to spend outside it, but of course, it is hard to determine that since I don't have data for it. The problem for AMD at the moment is that there is no replacement for the RX 500 series in the 200-300 range, as the 5700 and 5700XT are replacements for Vega 56 and Vega 64.

Except he isn't really saying that, he is just saying that budget gamers will not go out of their way to spend more than what they budgeted for. I suspect people who tend to spend in a certain price range for a GPU will not go out of their way to spend outside it, but of course, it is hard to determine that since I don't have data for it. The problem for AMD at the moment is that there is no replacement for the RX 500 series in the 200-300 range, as the 5700 and 5700XT are replacements for Vega 56 and Vega 64.

So what you are saying is that AMD is missing a competitor to the GeForce GTX 1660 Ti.

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

I wonder how well Navi mines?

Hmm may have to buy several of them maybe.

Wave 32 apparently is of use for this.

I would really, really not advise investing too much attention to 'features' on AMD cards. Going back through their lineage even to the first ATi GPUs, there is a long history of AMD / ATi introducing 'features' that do not get used until they make their way into DirectX and are supported by other vendors, and in a form that is not compatible with what is originally released.

That sounds just like DXR.

No custom coolers from day #1, if that is the case and i will have to wait yet another month, then i might very well take my money elsewhere.

Ref cards are usually better quality than aftermarket and they are not binned to fit the 55 different models. Never seen aftermarket clock any higher; often most hardcore OC guys go for reference on AMD for a reason, as all you are waiting for is a different cooler. If it undervolts ok you won't even hear the blower anyway on most setups.

I am sorry that you lack even the most basic of common sense. Maybe get through your head that it is not normal for people that typically spend around 150-200 bucks on a gpu to all of a sudden move to the 400-450 buck price range. Of course there will always be exceptions but what you are describing is NOT the norm.

Actually I personally see people reasonably often making jumps to mid-high end cards from mid-lower end cards once they get the bug or want to hand a PC down and upgrade. I would say it's a pretty poor assumption to make. That said, while someone with a long history of cheaper cards is unlikely to do this, I would certainly not say it's rare or unheard of. I do however agree pricing is $50 too high. That said it gives room to price drop after the STI cards come out.

But I guess my friend is an alien then, went from a 1k shitbox laptop to a 3k full water cooled 100% AMD gaming rig.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

...and what makes you think AMD hasn't?

They're way behind in performance and specs. Years behind.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

That sounds just like DXR.

Which works quite well, is in games now, and is implemented in hardware now. This is the kind of thing that AMD is known for not pulling off, thus the basis for caution.
