erek

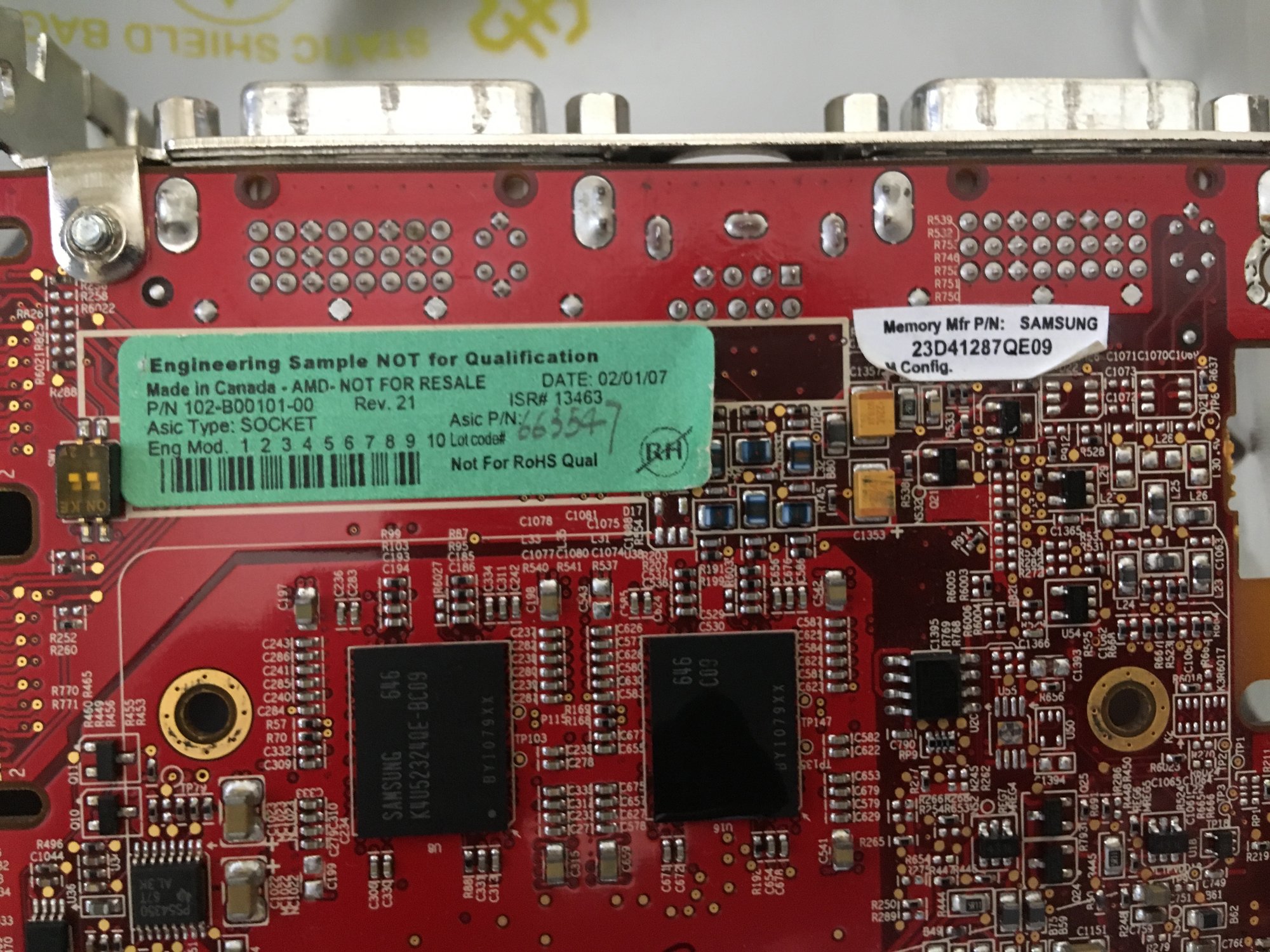

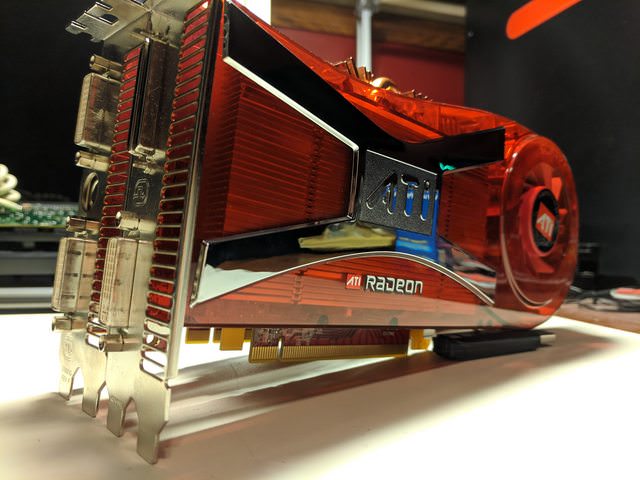

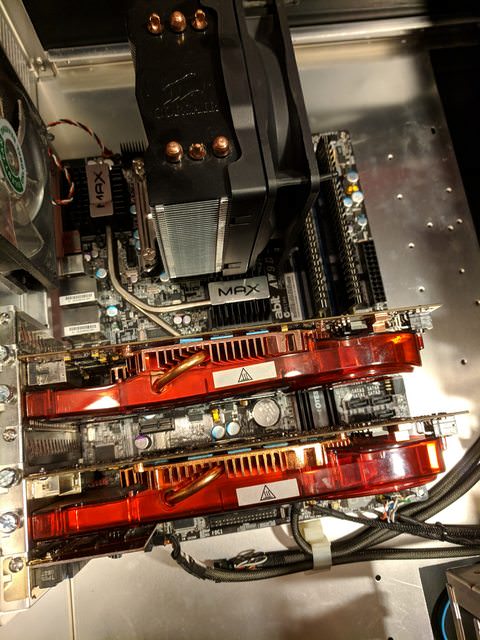

Is this the 2900 XTX?

https://www.ebay.com/itm/183524453672

When I Google "102-b00101-00", I find references suggesting it might be...

GPU Device Id: 0x1002 0x9400 113-B00101-19e 102-B00101-00 R600XTX BIOS GDDR4 796e/1009m (C) 1988-2005, ATI Technologies Inc. ATOMBIOSBK-ATI VER010.039.000.000.023973
