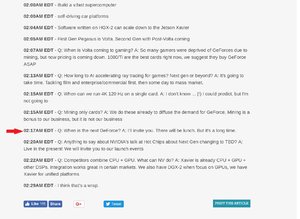

This is from Anand's feed over at Computex, when they asked nV when the next GeForce is going to be released.

This is simply a low-information thread and nothing more. All we have to go off is "it will be a long time," but what defines a long time?

What do you guys/gals think about this very ambiguous exchange between AnandTech's reporter and the nV representative, specifically the line marked by the red arrow in the screenshot?

They indicated the following:
