
Vega Rumors

- Thread starter grtitan

- Start date

Anarchist4000

[H]ard|Gawd

- Joined

- Jun 10, 2001

- Messages

- 1,659

> 33%? Why not just buy a 1080ti and be done with it?
>
> Any cost savings (assuming that we are choosing between panels that offer a choice between FS and GS) of buying FS would have been easily destroyed by the fact that you have to buy 2 Vega GPUs.

That's assuming a single Vega isn't already faster than the 1080Ti. A dual would be well beyond any current Nvidia offerings and not have to use any more power.

> Now that it is official that AMD uses Synopsys IP, that aggregate number lining up with test results suggests that it is indeed the real bandwidth of the card and not whatever they claim it is based on chips being clocked sky high.

Wouldn't that be funny? Only a single or half stack of HBM2 enabled on all the current benchmarks. Second stack just waiting to be enabled for another 307GB/s. Almost like they've been emulating Vega11 this whole time with a single stack of HBM2.

lolfail9001

[H]ard|Gawd

- Joined

- May 31, 2016

- Messages

- 1,496

> Wouldn't that be funny? Only a single or half stack of HBM2 enabled on all the current benchmarks. Second stack just waiting to be enabled for another 307GB/s. Almost like they've been emulating Vega11 this whole time with a single stack of HBM2.

I see you have left the denial stage and gone straight into dreamland, eh.

P.S. There is only 1 controller on the Vega die, just saying.

Anarchist4000

[H]ard|Gawd

- Joined

- Jun 10, 2001

- Messages

- 1,659

> LOL! Does that sound technical to you? A 3-year-old can't come up with excuses this bad.

Because two controllers offering over 300GB/s each is somehow an excuse for measuring only 300GB/s?

> Man, hearing your excuses after RX Vega's bad performance is revealed in reviews is going to be worth it. Vega faster than 1080Ti? Why didn't AMD show it then? Dual won't use more power? Are they gonna feed on the energy of the universe?

No, it's just simple physics and a pattern observed by every processor in recent memory. Power scales with the square of voltage (closer to the cube once leakage is included), and the voltage a chip needs rises with frequency. Lowering clocks obviously results in a large decrease in power. It's the same reason larger GPUs are more power efficient at similar performance.

In the case of a dual GPU, lowering clocks a bit can easily halve the power draw. Nothing complicated about that.
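The power argument above can be sketched with the textbook dynamic-power relation P ≈ C·V²·f. This is a minimal sketch, not a Vega model: it ignores leakage (which, per the post, pushes the relationship toward a cube), assumes performance tracks clock speed, and the 80% clock at 80% voltage operating point and the `scaling_efficiency` knob are illustrative assumptions rather than measured figures.

```python
from typing import Tuple


def relative_dynamic_power(freq_scale: float, volt_scale: float) -> float:
    """Dynamic power relative to stock, from P ~ C * V^2 * f (leakage ignored)."""
    return volt_scale ** 2 * freq_scale


def dual_gpu_estimate(freq_scale: float, volt_scale: float,
                      scaling_efficiency: float = 1.0) -> Tuple[float, float]:
    """(relative performance, relative power) of two downclocked GPUs vs. one stock GPU.

    Assumes performance tracks clock speed and that the second GPU contributes
    `scaling_efficiency` of its throughput (1.0 = perfect multi-GPU scaling,
    0.0 = a game that doesn't scale at all).
    """
    perf = freq_scale * (1.0 + scaling_efficiency)
    power = 2 * relative_dynamic_power(freq_scale, volt_scale)
    return perf, power


if __name__ == "__main__":
    # Hypothetical operating point: 80% clock at 80% voltage on both GPUs.
    for eff in (1.0, 0.7, 0.0):
        perf, power = dual_gpu_estimate(0.8, 0.8, eff)
        print(f"scaling {eff:.0%}: performance x{perf:.2f}, power x{power:.2f}")
```

At that assumed point each GPU draws roughly half its stock dynamic power, so the pair lands near one stock card's power budget; whether that buys 1.6x or 0.8x the performance depends entirely on how well a game scales, which is what the replies in this thread dispute.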

> P.S. There is only 1 controller on the Vega die, just saying.

One controller per HBM2 stack. The pictures of Vega I've seen have two stacks. A single stack of HBM2 is 256GB/s, so a 300GB/s controller for everything doesn't make a whole lot of sense.
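The per-stack figures traded in this exchange follow from HBM2's 1024-bit interface per stack: peak bandwidth = 1024 pins × per-pin data rate ÷ 8 bits per byte. A minimal sketch; the 2.0 and 2.4 Gbps inputs are chosen to reproduce the 256GB/s and 307GB/s numbers quoted in the thread, and the two-stack 1.89 Gbps case is an assumed memory clock for illustration, not a confirmed spec.

```python
HBM2_PINS_PER_STACK = 1024  # each HBM2 stack exposes a 1024-bit interface


def hbm2_peak_bandwidth_gbs(gbps_per_pin: float, stacks: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s for `stacks` HBM2 stacks."""
    return HBM2_PINS_PER_STACK * gbps_per_pin / 8 * stacks


if __name__ == "__main__":
    print(hbm2_peak_bandwidth_gbs(2.0))      # 256.0
    print(hbm2_peak_bandwidth_gbs(2.4))      # 307.2
    print(hbm2_peak_bandwidth_gbs(1.89, 2))  # 483.84
```

These are peak numbers only; bandwidth measured in benchmarks always comes in lower, and how much lower is exactly what this exchange is arguing about.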

lolfail9001

[H]ard|Gawd

- Joined

- May 31, 2016

- Messages

- 1,496

> so a 300GB/s controller for everything doesn't make a whole lot of sense.

Numbers do, though, just saying.


Deleted member 93354

Guest

Kyle, can you comment on an RX Vega x2-type SKU? (i.e., was that RX2 an oops?)

(People without 1,200-watt PSUs who are trying to min/max their buying power need not apply.)

A 750-watt PSU would do the job:

(400 W GPU + 200 W system) / 80% load = 750 W PSU
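The rule of thumb above, written out as a small function. The 400 W GPU and 200 W system figures are the post's own estimates, and the 80% load ceiling is a common headroom convention, not a requirement of any spec.

```python
def recommended_psu_watts(gpu_watts: float, system_watts: float,
                          max_load_fraction: float = 0.8) -> float:
    """PSU rating that keeps the estimated total draw at `max_load_fraction` of capacity."""
    if not 0 < max_load_fraction <= 1:
        raise ValueError("max_load_fraction must be in (0, 1]")
    return (gpu_watts + system_watts) / max_load_fraction


if __name__ == "__main__":
    print(recommended_psu_watts(400, 200))  # 750.0
```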

I mentioned this earlier, but there are many people spouting facetious epitaphs over Vega's demise who are not even concerned with facts.

Additionally, Vega is not only the name of the GPU uArch but also the brand. Dr. Su said AMD felt the name was so kewl that they planned to keep the code name "Vega" as the brand name as well. I am making note of this because I do not think people here (and elsewhere) have a grasp of RX Vega's entire product stack, or of what RX's scope actually is. AMD has Polaris for the mainstream and Vega for the high end. How far upmarket AMD plans on going is still speculation, but it is beyond what Pascal brings.

We know for a fact that RX is different from FE in some way, because Raja noted that certain SKUs within the RX product stack will feature AMD "Infinity Fabric". So we know (using logic) that there are going to be RX Vega cards released that feature a Vega x2 design of some sort. And given what we know about the Zen uArch in Ryzen and AMD using its fabric to make Threadripper, we can form a highly speculative (yet still logical) opinion that Vega's uArch can also be placed on the fabric to get some sort of "TitanRipper" RX Vega card.

Lastly, the people touting the idea that Vega cannot compete with the 1080 Ti or Titan Xp are not clued in to what is going on and don't have a full grasp of AMD's direction. Again, the idea that AMD can't compete with Nvidia is not based on anything (except overzealous Nvidia fan kidz), because Wall Street is saying yes, AMD can. Mocking a product because it makes you feel good only hurts you; your words become meaningless. AMD has traditionally released an x2 card for each uArch, so why would Vega be any different? Why ignore the "Infinity Fabric" comments?

I suspect that RX Vega (single die) will perform between the GTX 1080 and the GTX 1080 Ti, with a killer x2 SKU coming around Xmas. This looks really good for AMD, because Nvidia has nothing to offer gamers for the next 10 months.

AMD's mindshare is about to explode!

~ sine wave ~


The only thing AMD is going to make explode is your PSU with that.

lolfail9001

[H]ard|Gawd

- Joined

- May 31, 2016

- Messages

- 1,496

> AMD's mindshare is about to explode!

Nah, someone's PSU is.

> I mentioned this earlier, but there are many people spouting facetious epitaphs over Vega's demise, that are not even concerned with facts.

I mean, Vega's epitaph won't be very interesting if there is one. "22".

> I am making note of this, because I do not think people here (and elsewhere) have a grasp on RX Vega's entire product stack

Here is RX Vega's entire product stack: air-cooled Vega 64, liquid-cooled Vega 64, and (mostly) air-cooled Vega 56. Fiji 2.0, now with less memory bandwidth. Hell, even the product stack sounds the same: air-cooled Fiji XT (Nano), liquid-cooled Fiji XT (Fury X), and an air-cooled cut SKU (Furies).

> because Raja noted that certain SKU within the RX product stack will feature AMD "infinity Fabric".

Look man, if you are going to accuse anyone of spouting facetious epitaphs, at least bring sources for your own claims. And since "IF" is basically a buzzword, even if you do, it does not translate into anything just yet. Technically they could call the memory controller "Infinity Fabric connecting GPU and 2 stacks of brand new Samsung HBM2!" and it would be correct. Hm, I should apply for their marketing vacancy, because it looks like I did a better job of using their buzzwords than they usually do.

> Again, the idea that AMD can't compete with Nvidia is not based on anything (except overzealous NVidia fan kidz), because Wall Street is saying yes AMD can.

[citation needed]

- Joined

- May 18, 1997

- Messages

- 55,701

> A 750 Watt PSU would do the job
>
> (400 GPU + 200 System) / 80% duty = 750 Watt PSU

I did check wall loads while gaming. A 500 W PSU would be just fine for gaming. That said, the 1800X was not loaded up or overclocked. This information is still under NDA, so I can't be any more specific. But I would suggest that a good-quality 750 W unit would be fine for a full-blown, enthusiast-clocked system.

> That's assuming a single Vega isn't already faster than the 1080Ti. A dual would be well beyond any current Nvidia offerings and not have to use any more power.

OK, let's not argue about the 1080 Ti bit, since neither of us has any concrete data right now.

Why on earth would you want to downclock 2 GPUs to below the threshold of "worth it to Crossfire or SLI" just to save some power? It makes NO sense to me.

Two GPUs running only 33% faster than a single GPU is absolutely shoddy scaling (we are comparing two downclocked cards vs. one at normal clocks).

By downclocking the pair of Vegas just to run them cold, you have just eliminated the very reason to run them as a pair.

If I were in that situation (let us, for the sake of argument, assume that Vega IS at 1080 Ti level in performance, though I am VERY skeptical of it), I'd just run a custom loop on both of them rather than run them cooler by running them slower. As a bonus, it would also eliminate the need to mount 2 radiators in the case.

Your method simply makes NO sense.
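Part of the disagreement is what "33% faster" means without a power figure attached. A small helper makes the comparison explicit; the three scenarios are hypothetical inputs, not benchmark results.

```python
def perf_per_watt_change(perf_ratio: float, power_ratio: float) -> float:
    """Fractional change in performance per watt relative to a baseline card.

    perf_ratio:  setup performance / baseline performance (1.33 means "33% faster")
    power_ratio: setup power draw / baseline power draw (1.0 means "no more power")
    """
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return perf_ratio / power_ratio - 1.0


if __name__ == "__main__":
    # Hypothetical scenarios for two downclocked GPUs vs. one stock GPU.
    scenarios = [
        ("33% faster, same total power", 1.33, 1.0),
        ("33% faster, 10% less power", 1.33, 0.9),
        ("33% faster, double the power", 1.33, 2.0),
    ]
    for label, perf, power in scenarios:
        print(f"{label}: {perf_per_watt_change(perf, power):+.0%} perf/W")
```

Read this way, "33% faster" is poor scaling if the pair draws twice the power, but a clear efficiency gain if it draws the same or less, which is the distinction argued over in the replies that follow.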

> Look man, if you are going to accuse anyone of spouting facetious epitaphs, at least bring sources for your own claims.

Anecdotal remarks like yours are exactly what set your post apart from an educated one.

I am sorry, but you have nearly 1,500 posts; I highly doubt you need me to explain "Infinity Fabric" to you. What you do need to explain is why YOU happen to think there is not a Vega x2 in the works, given all we know from AMD's slides and Raja's own remarks. What makes YOU think it is all a lie? What is YOUR source? You have to prove to the community that Raja is lying, or give strong evidence that he doesn't know what he is talking about. Otherwise it's anecdotal threadcrapping!

I am strictly speaking about facts, ones that you seem not to want to acknowledge (or are simply and truly ignorant of). Sorry, you cannot expect anyone here to believe you. If AMD could place two GPUs on one card without Infinity Fabric in years past, are you saying they have zero chance of figuring out how to do it with Infinity Fabric? It is a technology AMD has been designing for many years in pursuit of HSA, and what people call AMD's "secret sauce". You are pretending (facetiously so) that an AMD x2 card is not possible and would not work... cuz "epitaph".

My god, Threadripper releases in just 2 weeks using AMD's Infinity Fabric. Why are you acting so "surprised" if AMD decides to spread some of their secret sauce all over Vega too??

Infinity Fabric is not a buzzword; it is an actual AMD technology.

You need to get educated:

So before you go on and threadcrap a conversation, please defend your position, because attacking AMD isn't working for you!

~ sine wave ~

> Anecdotal remarks like yours are exactly what set your post apart from an educated one.

You want AMD to put two Vega GPUs on a single card? That would consume over 880 watts when overclocked. Is that even possible? What kind of power connectors would it need? I guess they could undervolt them a bit and maybe get the thing down to 500 watts, but that's still a ton of power for that level of performance, which I assume would fall short of GTX 1070 SLI and perhaps match a single GTX 1080 Ti in games that scale well. Not exactly a "win" for AMD, IMO.
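On the "what kind of power connectors would it need?" question: the PCI Express specifications budget 75 W from an x16 slot and 150 W per 8-pin auxiliary connector. The sketch below counts 8-pin connectors for the 500 W and 880 W figures in the post, assuming a card stays within those ratings. Dual cards have exceeded them before; the R9 295X2, for example, was rated around 500 W on two 8-pin connectors.

```python
import math

SLOT_WATTS = 75        # PCIe x16 slot budget
EIGHT_PIN_WATTS = 150  # one 8-pin PCIe auxiliary power connector


def eight_pin_connectors_needed(board_watts: float) -> int:
    """Fewest 8-pin connectors that keep `board_watts` within slot + connector ratings."""
    remaining = max(0.0, board_watts - SLOT_WATTS)
    return math.ceil(remaining / EIGHT_PIN_WATTS)


if __name__ == "__main__":
    for watts in (500, 880):
        print(f"{watts} W board: slot + {eight_pin_connectors_needed(watts)} x 8-pin")
```

Within spec, that works out to three 8-pin connectors at 500 W and six at 880 W.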

- Joined

- Aug 12, 2004

- Messages

- 8,377

I keep seeing this thread pop up and for an instant thinking Chevy is bringing it back (probably in some very lame manner). lol

> You want AMD to put two Vega GPUs on a single card? That would consume over 880 watts when overclocked.

Your comments are anecdotal.

AMD has put two GPUs on one card in nearly every generation of uArch they have sold. I am not asking AMD to do this; we know this is going to happen. The question should be how.

Like I said, given what we know, they will do this using "Infinity Fabric" and two GPUs within the same SoC. The benefits of this are incredible, and it is entirely plausible, given Threadripper.

Secondly, perhaps you don't know about underclocking and massively parallel designs, and how that works?

Just understand that an X2 design would bring other advantages besides computational performance. Additionally, I don't think an RX Vega 64 will use as much energy as people are assuming. They are basing their speculation on Vega FE and its driver/BIOS, and AMD warned people not to do this.

But who are we?

Anarchist4000

[H]ard|Gawd

- Joined

- Jun 10, 2001

- Messages

- 1,659

> 2 GPUs running at 33% faster than 1 single GPU is absolutely shoddy scaling (we are comparing running 2 downclocked vs 1 normal clocked).

WHILE using less power, which was the point I was making. Scaling would likely exceed that, but I was being rather conservative there.

> Why on earth would you want to downclock 2 GPUs to below the threshold of "worth it to Crossfire or SLI" just to save some power? It makes NO sense to me.

I'm saying AMD would do this on a dual part, but it could apply to Crossfire. The point being that the margins on that part would be high enough to throw more silicon at the problem.

The main point I'm making here is that doubling GPUs can deliver more performance with less power consumption! Getting more performance while cutting power in half wouldn't be unreasonable.

> That would consume over 880 watts when overclocked. Is that even possible?

Not necessarily. If you choose to overclock, sure, but power consumption isn't a fixed value. As I mentioned above, you can double cards while using less power and still have better performance.

Dan

Supreme [H]ardness

- Joined

- May 23, 2012

- Messages

- 8,023

I might sidegrade when it comes out; I have a 75 Hz 34" FreeSync monitor. Swap the 1080 for a Vega.

ecmaster76

[H]ard|Gawd

- Joined

- Feb 6, 2007

- Messages

- 1,150

> You want AMD to put two Vega GPUs on a single card? That would consume over 880 watts when overclocked.

I would suggest that anyone overvolting a dual-GPU card should be prepared for the consequences.

> AMD has put two GPUs on one card in nearly every generation of uArch they have sold. I am not asking AMD to do this; we know this is going to happen.

Perhaps you haven't seen the current lack of Crossfire support in games. Crossfire and SLI are both dying. Nvidia has come out and said that they can no longer support SLI the way they once did. Fewer than 1% of gamers use SLI, and I'm sure the same can be said of Crossfire. You say AMD will release a dual Vega card. My question is, what happened to Polaris? Why no dual Polaris card?

If AMD wants to release a dual Vega card with even moderately reasonable power consumption and heat output, they will have to underclock it to the point that it will struggle to beat a single GTX 1080 Ti, which would largely defeat the purpose.

I have never been a fan of dual cards, and I don't think it's fair to call them the world's most powerful GPU if and when they beat the fastest single card.

Araxie

Supreme [H]ardness

- Joined

- Feb 11, 2013

- Messages

- 6,463

> The main point I'm making here is that doubling GPUs can deliver more performance with less power consumption! Getting more performance while cutting power in half wouldn't be unreasonable.

Then you add Xfire to the mix... and most of the time you will be gaming on only a single card. Yeah, yeah, I know about your flawless Xfire experience playing only selected AMD titles and very old games; however, a lot of people who want to play the latest and greatest with the best settings possible will find it performing even worse than a single Vega. That's far from ideal. Yes, in a world where multi-GPU worked in every title on the market as soon as it launched, you would be right, but until then I stay away from multi-GPU setups, and this is from a guy who was an SLI user for years.

> Not necessarily. If you choose to overclock, sure, but power consumption isn't a fixed value. As I mentioned above, you can double cards while using less power and still have better performance.

While that is true, it is far from a good solution. The problem is the drivers: a lot of games aren't supporting SLI and Crossfire any more. You are correct in stating that it can be effective on a hardware level. I'm running GTX 1070 SLI, and with a mild undervolt I can have each card consume only 100 W under load, or 200 W for both cards. That's less than a single GTX 1080 Ti, and it can give me better performance in the games that scale well. However, on average I would say that my setup is 20% slower than a GTX 1080 Ti, all things considered. There are games that don't scale at all, and there are others that don't scale well.

I was at PDXLAN last weekend and took the Vega challenge... I knew which was which immediately, simply by which monitors they used.

They were both ultrawide 100 Hz panels.

The issue is that there are no 100 Hz FreeSync IPS panels and no 100 Hz G-Sync VA panels... So if you knew what to look for in panel quality, it was pretty easy to see which setup was which. VA panels have color shift, and IPS panels have "IPS glow" and more noticeable backlight bleed in the corners.

For reference, I believe the panels they used were both Asus: the PG348Q for G-Sync and the MX34VQ for FreeSync.


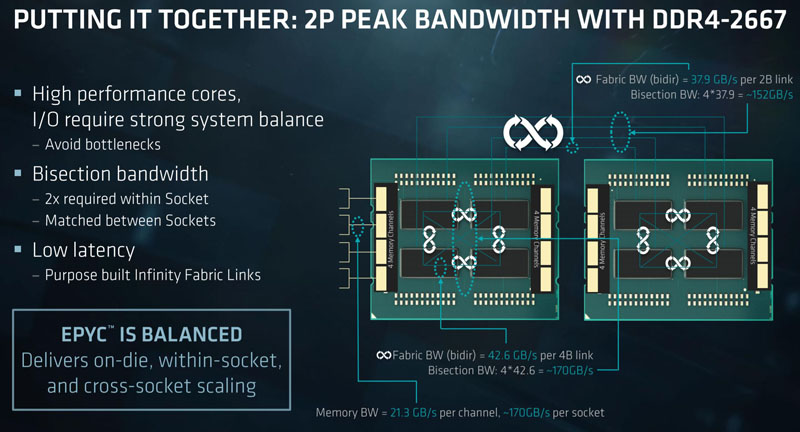

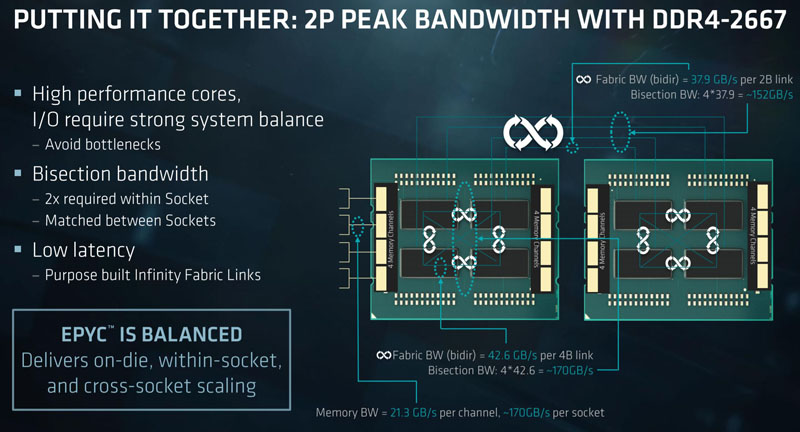

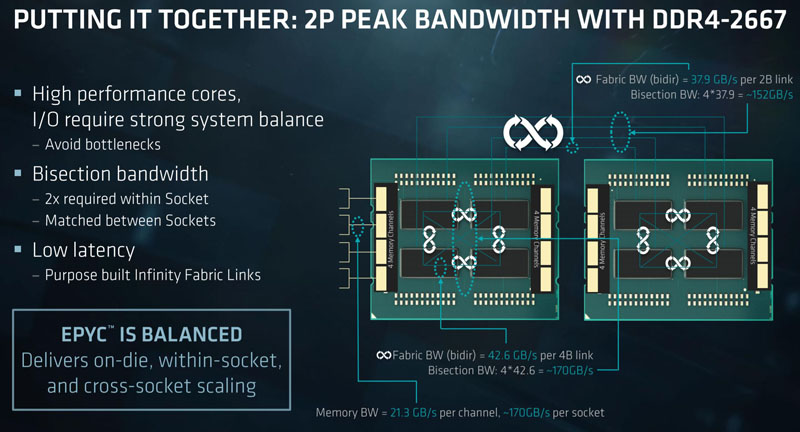

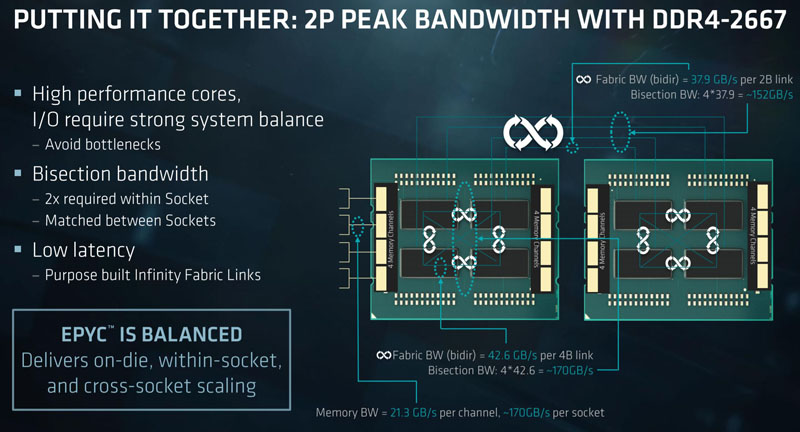

I like visuals.

Here is a good idea of what Infinity Fabric can do in the CPU world.

Now, just ponder what Crossfire/SLI was. Then ask yourself how many pins are on a PCIe x16 bus. And why do we need a second card when we can just place a second 484 mm² die right next to the first in an X2 design?

Wouldn't that be pretty close to the size of Volta?
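The PCIe x16 question is really about bandwidth between GPUs. A rough comparison of a PCIe 3.0 x16 link (8 GT/s per lane, 128b/130b encoding) against local HBM2, assuming two stacks at about 1.89 Gbps per pin; both are raw line rates, and real PCIe throughput is lower once protocol overhead is included.

```python
def pcie_link_gbs(gt_per_s: float, lanes: int, payload_bits: int, coded_bits: int) -> float:
    """Raw one-direction PCIe bandwidth in GB/s after line-encoding overhead."""
    return gt_per_s * lanes * payload_bits / coded_bits / 8


def hbm2_peak_gbs(gbps_per_pin: float, stacks: int) -> float:
    """Peak HBM2 bandwidth in GB/s (1024-bit interface per stack)."""
    return 1024 * gbps_per_pin * stacks / 8


if __name__ == "__main__":
    link = pcie_link_gbs(8.0, 16, 128, 130)  # PCIe 3.0 x16
    local = hbm2_peak_gbs(1.89, 2)           # assumed two-stack memory setup
    print(f"PCIe 3.0 x16: {link:.2f} GB/s per direction")
    print(f"Local HBM2:   {local:.0f} GB/s")
    print(f"Ratio:        ~{local / link:.0f}x")
```

With these inputs local memory is roughly 30x faster than the slot-to-slot link, so the open question on both sides of the argument is how much data actually has to cross between GPUs.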


> Like I said, given what we know, they will do this using "Infinity Fabric" and two GPUs within the same SoC.

Infinity Fabric will not help Vega in any way, shape, or form, at least not for gaming. If we are talking about Crossfire or multi-card solutions for pro apps, it functions the same way current tech does, and the speed difference is negligible because the old tech hasn't even hit its limits yet. So let's get off the hype train about Infinity Fabric.

Vega 64 uses 280+ watts at stock when air cooled; that is a given. No amount of other BS is going to change that.

> And why do we need a second card when we can just place a second 484 mm² die right next to the first in an X2 design?

That is going to happen in future generations of GPUs, after Volta and probably after Navi. They are going to have to come up with a way to support it properly within the drivers. It's not the same as SLI/Crossfire. Once everyone is using GPUs like that, it will be different: the games will all scale, because the developers will not have any choice.

> Now, just ponder what Crossfire/SLI was. Then ask yourself how many pins are on a PCIe x16 bus.

Dude, have you ever used an Xfire system and tested the amount of bandwidth current applications need to maintain performance?

You don't need to put up AMD marketing slides to know it's NOT going to make a difference in today's graphics cards. As for all the other pro stuff, nV has its own fabric tech, and the companies that need that speed will fork over the money to get it.

It's not like they are short on money when the projects being targeted cost millions of bucks, man.

> WHILE using less power, which was the point I was making. Scaling would likely exceed that, but I was being rather conservative there.

How would scaling exceed that? Please give me examples, because if you drop frequency on, let's say, a dual-GPU part, your scaling is still based on that drop plus the benefit of the lower voltage it needs. Performance scaling, though, is still based on frequency, which won't be linear in AMD's case. But there is nothing there to exceed anything; it's understood those things will happen.

Right now dual Vega doesn't look likely; if that were the plan, they wouldn't have pushed single Vega cards to such a degree. There'd be no need to.

Yes, you are correct, but you are not going to get double the GPU performance over a single card either, because to cut power consumption you have to drop frequency and thus voltage.

Also, AMD hasn't done well with dual products in the past. Will they do it again? I don't see them doing a dual Vega without using at least 400 watts, which is a butt load, and its performance would be like 1070 x2? That doesn't seem very promising next to a 1080 Ti or Titan, which would be what, around a 20% performance difference, for a Vega product that would only use both GPUs in a limited set of games and apps?

Anarchist4000

[H]ard|Gawd

- Joined

- Jun 10, 2001

- Messages

- 1,659

> Then you add Xfire to the mix... and most of the time you will be gaming on only a single card.

My argument would definitely be that AMD got the work distribution working. The resource management was the hard part, and HBCC addresses that by paging data in as it's needed. So give up on AFR in favor of split screen, then use draw stream binning to separate work by screen space. Top that off with ample bandwidth between cards for whatever data does need to move. Without that, I'd agree Crossfire/SLI aren't worth it. The tech seems to be falling in line, though, and a 3-way Threadripper may not be unreasonable. Really cool would be a 12- or 16-way Epyc.
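For readers unfamiliar with the split-screen idea: instead of alternating whole frames between GPUs (AFR), each GPU owns part of the screen. The sketch below is a generic checkerboard-style split-frame assignment, not AMD's HBCC or draw stream binning; the 3440x1440 ultrawide resolution and the 64-pixel tile size are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Tuple


def assign_tiles(width: int, height: int, tile: int, num_gpus: int) -> Dict[Tuple[int, int], int]:
    """Map each screen-space tile (tx, ty) to the GPU that renders it.

    Adjacent tiles in a row or column go to different GPUs (checkerboard-style
    interleave), which tends to spread expensive screen regions more evenly
    than a simple top/bottom split.
    """
    tiles_x = -(-width // tile)   # ceiling division so partial edge tiles are covered
    tiles_y = -(-height // tile)
    return {(tx, ty): (tx + ty) % num_gpus
            for ty in range(tiles_y) for tx in range(tiles_x)}


if __name__ == "__main__":
    owners = assign_tiles(3440, 1440, 64, 2)
    print(Counter(owners.values()))  # 621 tiles per GPU
```

Equal tile counts do not guarantee equal work, since some regions of a frame cost far more to shade than others; that load-balancing problem is part of why split-frame rendering has historically been harder to get right than AFR.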

Agreed, but as I said above, this would be different from Crossfire/SLI, as it should be completely transparent.

While that is true, it is far from a good solution. The problem is the drivers. A lot of games don't support SLI and Crossfire anymore. You are correct in stating that it can be effective on a hardware level. I'm running GTX 1070 SLI, and with a mild undervolt I can have each card consume only 100 W under load, or 200 W for both cards. That's less than a single GTX 1080 Ti, and it can give me better performance in the games that scale well. However, on average I would say my setup is 20% slower than a GTX 1080 Ti, all things considered. There are games that don't scale at all, and others that don't scale well.
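The "wins where it scales, loses on average" effect described in the GTX 1070 SLI post can be shown with a geometric mean, the usual way to average performance ratios across games. This is a minimal sketch; the per-game scaling values and the 1.6x big-card ratio are hypothetical placeholders, not benchmark results.

```python
import math

# Hypothetical: how much faster one big card is than one small card.
BIG_CARD_VS_SMALL = 1.6

# Hypothetical per-game scaling: the fraction of the second card's throughput
# that the game actually uses (0.0 = no SLI/Crossfire profile at all).
PER_GAME_SCALING = {
    "well_supported_a": 0.9,
    "well_supported_b": 0.8,
    "partial_support": 0.4,
    "no_profile_a": 0.0,
    "no_profile_b": 0.0,
}


def dual_vs_big(scaling: float) -> float:
    """Two small cards relative to one big card, for a single game."""
    return (1.0 + scaling) / BIG_CARD_VS_SMALL


def geometric_mean(values) -> float:
    """Geometric mean, the usual way to average performance ratios."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


ratios = {game: dual_vs_big(s) for game, s in PER_GAME_SCALING.items()}
for game, ratio in ratios.items():
    print(f"{game:17s} {ratio:.2f}x the big card")
print(f"geometric mean    {geometric_mean(ratios.values()):.2f}x")
```

With these numbers the dual setup beats the big card by up to about 19% in well-supported games, yet averages roughly 0.85x overall, because titles without a multi-GPU profile fall back to single-card speed.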

Like I said before, I don't think 880 W power consumption on a single card is feasible. The power consumption with Vega is simply too high. It reminds me of Hawaii.

Worse than Hawaii, more like Fiji, but in reality probably somewhere between the two. Since Fiji had water cooling, it's hard to determine where Vega will land.

Well, we have seen dual GPUs many times before, and it has never been transparent. It always requires SLI/Crossfire support in the drivers. So barring something revolutionary, this is not going to happen, IMO.

Yeah, the tech doesn't have the speed yet for full transparency. The latency penalty is too great when sending data from the master to a slave GPU, even on the same PCB, and this is why Xfire and SLI were created to begin with: so that data doesn't need to be transferred over. How fast these fabrics work is the determining factor. When the fabric is fast enough bidirectionally, from master to slave and vice versa, SLI and Xfire will be dropped, but until then some sort of mGPU solution has to be used.
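To put the master/slave transfer cost in perspective, here is a minimal sketch of link time versus frame budget, modeled as fixed latency plus size divided by bandwidth. The link bandwidths and the 5 µs latency are hypothetical placeholders; substitute measured figures for a real interconnect. It assumes only one 4K RGBA8 render target crosses the link per frame, which likely understates what a split-frame renderer would need to share.

```python
def transfer_ms(num_bytes: int, bandwidth_gb_s: float, latency_us: float = 0.0) -> float:
    """Link time in milliseconds: fixed latency plus size / bandwidth."""
    seconds = latency_us * 1e-6 + num_bytes / (bandwidth_gb_s * 1e9)
    return seconds * 1e3


def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame at the given frame rate."""
    return 1000.0 / fps


# One 3840x2160 render target at 4 bytes per pixel (RGBA8).
RENDER_TARGET_BYTES = 3840 * 2160 * 4

# Hypothetical link bandwidths in GB/s; substitute measured figures.
LINKS = {"slow_link": 8.0, "faster_link": 32.0, "on_package_class": 400.0}

budget = frame_budget_ms(60)
for name, bandwidth in LINKS.items():
    t = transfer_ms(RENDER_TARGET_BYTES, bandwidth, latency_us=5.0)
    print(f"{name:17s} {t:6.3f} ms = {100 * t / budget:5.1f}% of a 60 fps frame")
```

At 8 GB/s that single buffer already takes about a quarter of a 16.7 ms frame; at 32 GB/s it drops to about 6%. Every extra buffer that has to be shared adds to that, which is why link speed is the deciding factor.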

lolfail9001

[H]ard|Gawd

- Joined

- May 31, 2016

- Messages

- 1,496

I don't think about AMD's actions; it hurts one's ability to deduce. If they do it, great (/sarcasm, in case); someone may even buy one. If they don't, good for them: they managed to successfully cut their losses on Vega.

What you do need to explain is why YOU happen to think there is not a Vega x2 in the works?

Considering that Raja said exactly jackshit about a dual Vega card as far as anyone is aware, the one who needs to bring evidence here is you.

Given all we know, AMD's slides and Raja's own remarks, what makes YOU think it is all a lie? What is YOUR source? You have to prove to the community that Raja is lying, or give strong evidence he doesn't know what he is talking about. Or it's anecdotal threadcrapping!

100% chance, actually. They will just call the PLX bridge "Infinity Fabric". Boom!

If AMD could place two GPUs on one card without Infinity Fabric in years past, are you saying they have zero chance of figuring out how to do it with Infinity Fabric?

It refers to something like 3 different technologies in Zeppelin alone and another 2 in Vega, as far as I can count. As an actual AMD technology, it's basically a chimera.

Infinity Fabric is not a buzzword; it is an actual AMD technology.

Attacking your points, however, works out perfectly well.

So before you go on and threadcrap a conversation, please defend your position, because attacking AMD isn't working for you!

Here is a good idea of what it does in the CPU world: https://www.servethehome.com/amd-epyc-infinity-fabric-latency-ddr4-2400-v-2666-a-snapshot/

Here is a good idea of what infinity fabric can do in the CPU world.

Now try to use that bandwidth and latency for a video card, let alone two that need to communicate on a regular basis. Xfire may just look amazing in comparison.

That said, I do post a lot, because I like arguments.

Yeah, the impact of Infinity Fabric on CPUs is that great; in the world of GPUs it's going to be even bigger, by a factor of 10 at least.

It's a tech for the future, but right now it's not fast enough to equal or supersede the traditional monolithic approach.

(25) You are allowed ONE SIGNATURE PER POST, do not put your signature in the body of your post. A signature section is supplied for that usage. No one wants to see your name on your posts multiple times as it is annoying.

Nobody here is talking about Crossfire. It seems that you and others here are unfamiliar with massive parallelism and downclocking, so I understand why you keep trying to troll using 800 watts. The simple answer is that your assumption about wattage is wrong. AMD can design any power envelope they want.

i.e., SoC

@lolfail9001

You have no credibility: again you facetiously downplay Infinity Fabric and assume it is a simple PLX chip, which illustrates that you don't know or understand the subject. There is a control side to the fabric that you are not addressing, or are simply not aware of. And what you are overlooking and don't seem to get, even in the most rudimentary fashion: even if AMD just created Crossfire on a chip, how is that bad for us high-end gamers?

Perhaps you should wait a week before claiming to know what AMD can't do. It seems awfully rude to keep badmouthing AMD for no reason; RX is not even out.

Infinity Fabric exists for Vega. Raja said so. So you are not arguing with me, but with yourself. I don't have to defend anything, but you do have to defend why you pretend it doesn't exist, even after being told what Raja said.

~ sine wave ~

Epyc is going to have a butt load of problems with certain types of apps. For that same reason, if a dual Vega uses Infinity Fabric between its ROPs and control silicon, those problems will be even worse. The ROPs' L2 cache and the control silicon in GPUs need data locality to run at appropriate speeds without latency causing throughput issues. This is why you CAN'T get by with Infinity Fabric alone to remove mGPU techniques.
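The "latency causing throughput issues" point is essentially Little's law: sustained bandwidth equals the data in flight divided by latency, so with a fixed number of outstanding requests, doubling latency halves bandwidth. This minimal sketch uses hypothetical request sizes and latencies, not measured Vega or Epyc figures.

```python
def sustained_bandwidth_gb_s(in_flight: int, bytes_per_request: int, latency_ns: float) -> float:
    """Little's law: throughput = data in flight / latency.

    One byte per nanosecond is 1e9 bytes per second, i.e. 1 GB/s.
    """
    return in_flight * bytes_per_request / latency_ns


def requests_needed(target_gb_s: float, bytes_per_request: int, latency_ns: float) -> float:
    """Outstanding requests required to sustain `target_gb_s` at a given latency."""
    return target_gb_s * latency_ns / bytes_per_request


LINE_BYTES = 64  # hypothetical request size (one cache line)

# Hypothetical latencies: a local access vs. one that must cross a die-to-die link.
for label, latency in {"local": 300.0, "cross_die": 600.0}.items():
    bw = sustained_bandwidth_gb_s(256, LINE_BYTES, latency)
    need = requests_needed(200.0, LINE_BYTES, latency)
    print(f"{label:9s} {bw:5.1f} GB/s with 256 in flight; {need:.0f} in flight for 200 GB/s")
```

The takeaway is the second number: to keep the same bandwidth across a slower path, the hardware has to keep twice as many requests in flight, which costs buffering and on-chip state.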

The thing is, even if AMD does use Infinity Fabric to essentially stitch two Vega GPUs into one massive GPU, the die size will simply be too big. IMO it will be beyond the limits of current technology, the current manufacturing process, and the laws of physics. Vega already has a very similar die size to a GTX 1080 Ti. I just don't think AMD can realistically double that using current technology on the current node. And I don't think a dual-GPU Vega using the more traditional methods will be useful, for the reasons I have already stated in this thread.

I would love to be wrong about this. A double-sized Vega chip would certainly be interesting. I just don't think we're there yet. It is coming, but not for a couple more generations. Nvidia is doing this too.

Well, if they stitch two together, die size isn't an issue, because it's still two dies. The main problem right now is that the Vega architecture was designed to give its ROPs direct access to the L2 cache. Why was this done? Because it stops cache thrashing and thus reduces bandwidth and power consumption, and the end result is increased performance too. But the main reason these results happen is data locality. If that doesn't happen, and it won't happen when stitching two dies together, that benefit is lost. Worse yet, the data locality of the L1 cache and register space needed for shader operations via the control silicon won't happen either, at least not across the two dies. That is the crux of the problem. Infinity Fabric, or any mesh technology for that matter, solves only part of the problem, not the whole thing.

And as you stated, it might happen in a couple of generations, but even that is pretty aggressive IMO, at least for gaming. I can see it for HPC or DL, though.
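The cache-thrashing versus data-locality argument above can be illustrated with a toy direct-mapped cache simulator. This is a generic textbook model, not Vega's actual L2 organization: the sizes, the direct mapping, and the access patterns are all hypothetical. It only shows how the same kind of access stream can be almost all hits with a local working set, or all misses when two streams keep evicting each other.

```python
def simulate_direct_mapped(addresses, num_lines: int = 64, line_bytes: int = 64):
    """Return (hits, misses) for a toy direct-mapped cache that tracks tags only."""
    tags = [None] * num_lines
    hits = misses = 0
    for addr in addresses:
        line_addr = addr // line_bytes
        index = line_addr % num_lines   # which cache line this address maps to
        tag = line_addr // num_lines    # identifies which memory block is cached
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag           # evict whatever was there
    return hits, misses


CACHE_BYTES = 64 * 64  # num_lines * line_bytes for the defaults above

# Good locality: 4 passes over a 2 KiB working set that fits in the cache.
local_pattern = [i for _ in range(4) for i in range(0, 2048, 4)]

# Thrashing: two buffers exactly CACHE_BYTES apart map to the same lines,
# so interleaving them makes each access evict the other buffer's line.
thrash_pattern = [base + i
                  for _ in range(4)
                  for i in range(0, 2048, 4)
                  for base in (0, CACHE_BYTES)]

print("local :", simulate_direct_mapped(local_pattern))
print("thrash:", simulate_direct_mapped(thrash_pattern))
```

Real GPU caches are set-associative and far larger, so the exact numbers mean nothing; the contrast between the two patterns is the point.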

Bandalo

2[H]4U

- Joined

- Dec 15, 2010

- Messages

- 2,660

I don't think it's impossible, but it's going to be VERY, VERY expensive to make it work. Someone will have to justify that cost with hard performance numbers before they're going to dump the cash into R&D.

It's impossible right now, and extremely expensive, with the way current chip architectures are. Pretty much the L1 cache data and register space would have to be shared across both dies. Currently there is just no way to do this without introducing a global cache (L2), which increases latency, and then the latency of the communication lanes comes on top of that.

Now, if that latency can be hidden by software, then yeah, it will work, but that is a lot of work and a totally different programming methodology. Raja stated this with the introduction of the Fury Pro: it was meant to push devs to start thinking differently in their approach to making game engines. It's going to take a long time to do that, though.

Just some general info on L1 and L2 cache usage in CPUs:

https://www.extremetech.com/extreme...-why-theyre-an-essential-part-of-modern-chips

This is why that problem will show up with Infinity Fabric: it has to go through the L2 cache.

Now, you may say this is for CPUs, but in effect GPU and CPU caches work similarly when it comes to latency. The moment the L2 cache is used, the processor is losing just under half its performance. That latency can be hidden by drivers, but what is going to hide the interconnect latency? The GPUs don't know what is coming in; there is nothing like prefetching and analyzing the scene. This must be done on the fly or, depending on the renderer, planned out on the application side.
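The L2-latency argument in the last two posts can be framed with the standard average-memory-access-time (AMAT) formula. The sketch below adds a hypothetical "remote" term for L1 misses that must cross a die-to-die link before the L2 lookup completes; all cycle counts and miss rates are made up for illustration and are not Vega or Zen measurements.

```python
def amat(t_l1: float, miss_l1: float, t_l2: float, miss_l2: float, t_mem: float,
         remote_fraction: float = 0.0, t_link: float = 0.0) -> float:
    """Average memory access time for a two-level cache hierarchy.

    Classic form: t_l1 + miss_l1 * (t_l2 + miss_l2 * t_mem).
    Hypothetical extension: a `remote_fraction` of L1 misses must cross an
    inter-die link, adding `t_link` on top of the L2 lookup.
    """
    l2_path = t_l2 + remote_fraction * t_link
    return t_l1 + miss_l1 * (l2_path + miss_l2 * t_mem)


# Hypothetical cycle counts and miss rates, not measured GPU figures.
params = dict(t_l1=30, miss_l1=0.3, t_l2=150, miss_l2=0.4, t_mem=400)

monolithic = amat(**params)
for remote in (0.25, 0.5):
    split = amat(**params, remote_fraction=remote, t_link=300)
    print(f"remote={remote:.2f}: {split:.0f} cycles vs {monolithic:.0f} "
          f"({split / monolithic:.2f}x)")
```

With these placeholder numbers, sending half of the L1 misses across the link raises the average access time from 123 to 168 cycles, about 1.37x, and that is the extra latency software or the renderer would then have to hide.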
