Well, one thing Ryzen's release did was show us that everyone who has a high-end video card is CPU-bound. I seem to recall that most people who dislike AMD thought the current rendering APIs only benefited the crap CPUs AMD made, as if it were some sort of conspiracy bankrolled by AMD for the benefit of its CPU division. Why were we never shown all of this CPU bottleneck before now?


Ryzen with 3600MHz RAM Benchmark

- Thread starter CAD4466HK


Pieter3dnow

Supreme [H]ardness

- Joined

- Jul 29, 2009

- Messages

- 6,784

It makes perfect sense if you don't have an AMD-branded erection lasting longer than 4 hours.

He shows the math and tells you some programs respond better to Infinity Fabric clock, and some to memory latency. Not rocket surgery.

1+1=2, so now all my babbling is valid because I showed you mathematics! When the BIOS differs so much between platforms, what he suggests "might" work for some boards, but certainly not for everything out there on AM4.

When you start off promising people that cheaper RAM can be the way to go, without any verification on several different vendors' products, it is obvious that this is just someone rambling. What makes it worse is that he throws in several disclaimers: might work, certain benchmarks, a little bit of gain. All of those are clear warning signs that it is a waste of time.

He proves it himself when he mentions the RAM that did give him his Firestrike record.

Cheap RAM + math = not much; expensive RAM + Firestrike = record. The video says cheap RAM, but that's not how it works out, now is it?

Floor_of_late

n00b

- Joined

- Apr 8, 2017

- Messages

- 62

Changing the timings from 17 to 22 in that video lost me on the spot. Are we trying to game the numbers or gain performance??

LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,639


I'll just accept that you're ignoring the fact that the video is about how memory speed is tied to the Infinity Fabric, and move on.

Floor_of_late


That is the most important point, yes, but achieving 3200 with good timings (CL14 or 15) is not the same as doing it with CL22 timings lol

The main point remains the same: what that video should be telling you is to buy RAM rated at 3000+ MHz AND with timings around 14 to 15.

What the video failed to mention is that you might not see any benefit whatsoever from taking 2400 RAM with 22 timings and bumping it to 3200 or so. So you might as well just ignore it. To me, it is more than a bit misleading.


Floor_of_late


Question for the RAM experts: generally speaking, which would be faster for gaming or benchmarks: 3400 C16, 3200 C15, or 3733 C17?
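On paper, one rough way to compare these kits is first-word latency (CL multiplied by the length of one memory clock) alongside theoretical peak bandwidth. The Python sketch below does that for the three kits in the question. It is a simplification under stated assumptions: it assumes dual-channel operation, ignores tRCD/tRP and subtimings, and says nothing about whether a given Ryzen board will actually run 3733 stably. The function names are hypothetical helpers for illustration.

```python
"""Paper comparison of three DDR4 kits: first-word latency and
theoretical peak bandwidth. Ignores subtimings and stability."""


def first_word_latency_ns(data_rate_mts: int, cl: int) -> float:
    # DDR makes two transfers per memory clock, so one clock lasts
    # 2000 / data_rate nanoseconds; CL is counted in memory clocks.
    return cl * 2000.0 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts: int, channels: int = 2) -> float:
    # Each DDR4 channel is 64 bits (8 bytes) wide; assumes dual channel.
    return data_rate_mts * 8 * channels / 1000.0


# (data rate in MT/s, CAS latency) for the kits asked about
KITS = [(3400, 16), (3200, 15), (3733, 17)]

if __name__ == "__main__":
    # Print kits from lowest to highest first-word latency.
    for rate, cl in sorted(KITS, key=lambda k: first_word_latency_ns(*k)):
        print(f"DDR4-{rate} C{cl}: {first_word_latency_ns(rate, cl):.2f} ns, "
              f"{peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

On these simplified numbers the 3733 C17 kit leads on both latency and bandwidth, but the gaps are small, and the whole comparison is moot if the board can't run that speed.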

LigTasm


Again, you're not getting the point. You guys are tunnel-visioned on expensive kits with tight timings, which a lot of people didn't buy for Ryzen because they weren't initially supported. This is simply an option showing that you can get more performance in certain types of programs by understanding how the Infinity Fabric works. People have been doing all sorts of shit like turning off HPET just to get a handful more frames in games; here is another option. Simple. Stop getting bogged down with the $200 memory kits. Of COURSE the 3200 CAS14 kit is going to be better if it runs; nobody is debating that. If you DIDN'T buy it, though, this gives you something to play with, and it will likely be pretty important when the lower-cost chips come out and budget builds get popular.

Floor_of_late


No, we got exactly that point. What we don't see is benchmarks showing any sort of gain (gaming or otherwise) with that 2400 kit running at 3200 with 22 timings! It might benefit the Infinity Fabric and all, but the much looser CL22 timings might leave any "gains" flat or even negative at the end of the day. Any scientific claim should be supported by proof; before-and-after benchmarks with the same memory kit are what I want to see.

RAM being expensive has nothing to do with this discussion; that is just supply and demand. I'd much rather have a "real" kit running at 3200 with CL15 timings than some 2400 C17 kit running at 22 timings at what seems to be an artificial 3200. Get it?

LigTasm


I don't understand why this is so hard for you to comprehend. He isn't benchmarking anything. It's simply an option to try if you have a slow kit. If you already have a fast kit, bravo! No need to worry about it; move on with your life. If you bought a 2133 kit at launch, maybe see if this helps you in the program you use. I don't know how much more barney style I can break this down for you, get it?

Floor_of_late


Because he is increasing the timings by... a lot, so you essentially end up where you started, as if you'd done nothing. So what's special about that video? If someone makes that claim and can back it up with numbers, then it's YouTube-worthy. At that point you're better off OCing your CPU another 100 MHz and leaving your RAM alone than loosening memory timings from 17 to 22.

I see 3 ways of getting more performance out of your memory: increase the frequency with the same timings, keep the same frequency with tighter timings, or raise the frequency and tighten the timings.

Can you do some testing and confirm whether you gain any performance?


lolfail9001

[H]ard|Gawd

- Joined

- May 31, 2016

- Messages

- 1,496

Because he is decreasing the timing by... a lot.

Just saying, the only sticks that can presently run above 3000 on Ryzen are, by their very existence, fine at 3200 C14 or even better.

Floor_of_late


Meant to say increasing the timings

LigTasm


The timings increase, but so does the RAM speed. Overall latency stays about the same, hence the math. Since the RAM's effective speed is theoretically about the same overall, but you've raised the MHz it runs at, you've also raised the MHz the Infinity Fabric runs at. This can lead to a performance increase the memory wasn't capable of on its own, because binning keeps it from running the faster speed with tighter timings. This is not a way to increase RAM performance but a way to keep it the same while using it to increase CPU performance. Like he said, memory-sensitive applications may not benefit, but others, like games that benefit from faster inter-core communication, might.
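The "math" here is usually the first-word latency calculation: CL × (2000 / data rate in MT/s) gives nanoseconds, because DDR4 makes two transfers per memory clock. Below is a minimal Python sketch comparing the stock 2400 CL17 kit with the same kit loosened to CL22 at 3200, plus 3200 CL14 for reference. It assumes first-generation Ryzen behavior, where the Infinity Fabric clock equals the memory clock (half the data rate). The helper names are hypothetical, and real latency also depends on tRCD, tRP and subtimings, which this ignores.

```python
"""Sketch: first-word CAS latency and (assumed) Infinity Fabric clock
for DDR4 configurations discussed in this thread."""


def cas_latency_ns(data_rate_mts: int, cl: int) -> float:
    """First-word latency in nanoseconds.

    The memory clock in MHz is data_rate / 2, so one clock period is
    2000 / data_rate ns, and CL is counted in those clocks.
    """
    return cl * 2000.0 / data_rate_mts


def fabric_clock_mhz(data_rate_mts: int) -> float:
    """Assumption: on first-gen Ryzen the Infinity Fabric runs at the
    memory clock, i.e. half the DDR4 data rate (DDR4-3200 -> 1600 MHz)."""
    return data_rate_mts / 2


CONFIGS = [
    ("DDR4-2400 CL17 (stock)", 2400, 17),
    ("DDR4-3200 CL22 (loosened)", 3200, 22),
    ("DDR4-3200 CL14", 3200, 14),
]

if __name__ == "__main__":
    for name, rate, cl in CONFIGS:
        print(f"{name:28s} latency {cas_latency_ns(rate, cl):6.2f} ns  "
              f"fabric {fabric_clock_mhz(rate):6.0f} MHz")
```

Under these assumptions the loosened 3200 CL22 setting lands at roughly the same first-word latency as stock 2400 CL17 while the fabric clock rises from 1200 to 1600 MHz, which is the trade-off described above. Whether that turns into real gains is what the RealBench runs later in the thread try to measure.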

I would love to test this, but since this AGESA update I'm stuck at 2133 MHz, where I used to run 3200.

Crucial talks about it here, minus the Ryzen stuff: http://pics.crucial.com/wcsstore/CrucialSAS/pdf/en-us-c3-whitepaper-speed-vs-latency-letter.pdf

Floor_of_late


We need benchmarks!

Formula.350

[H]ard|Gawd

- Joined

- Sep 30, 2011

- Messages

- 1,102

I'm not going to try to determine whether you understand how the Infinity Fabric on Ryzen functions, but I will say that, based just on the stuff I've skimmed, you may not.

As such, I highly recommend you read at least the first 10 pages of this thread. If you won't, then at least the first page. TheStilt offers tons of good info.

https://forums.anandtech.com/threads/ryzen-strictly-technical.2500572

Floor_of_late


I totally get it, but I also think that if you want to talk about the Fabric, we should keep the RAM at 3000 MHz+ WITH 14-15 timings. Sacrificing memory timings for better fabric interconnect speed is just a wild theory at this point.

Floor_of_late


And if you are trying to squeeze every ounce of performance out of a fast Infinity Fabric, I would say 2400 RAM is not for you in the first place. Trident Z 3200 CL14 is what you need, with an X370 motherboard, and then OC the CPU to 3.8 to 3.99 GHz. Let's not waste time arguing about theories that so far aren't based on benchmarks.

LigTasm


You clearly don't get it. I don't think I've ever seen someone so hung up on timings (while simultaneously not understanding overall latency), nor anyone that thinks literally every person that bought Ryzen is going to have the money for a $200 kit of RAM.

Floor_of_late


Fine, let's do a before and after with a DDR4-2400 kit: one test at stock timings and frequency, and the other at 3200 with 22 timings. I would like to see RealBench used, or something similar that covers various real-life tests. Till then, let's not argue over nothing, please.

Pieter3dnow


To be honest, the person in the video doesn't get it. Either you have a valid point that can be reproduced (across the whole AM4 platform, with multiple 2400 MHz DDR4 kits tested), or you are just suggesting that 2400 MHz DDR4 will bail you out of buying expensive RAM. Given the compatibility issues between RAM ICs and so on, that suggestion alone is the worst advice you can give people, even if they lack the funds for more expensive RAM.

It lacks specifics, and it ignores that if you buy 2400 MHz DDR4, there is a good chance it won't deliver anything more than 2400-level performance, even if you are able to overclock it to 3000 MHz, due to BIOS and compatibility issues.

Floor_of_late


Yep, that is another way of saying what I said before. In the end it could be more of a disappointment than anything.

My point is, let's not use the crazy-high DDR4 prices as an excuse to buy into unbenchmarked claims. I'd still rather buy less RAM (3200 at CL14 or 15) now and add more later than be misled into buying 2400 and drool over unknown numbers.

Magic Hate Ball

Limp Gawd

- Joined

- Jul 28, 2016

- Messages

- 366


I bought my TridentZ 2x8GB 3200 CL14 kit for $140 sometime in mid-March.

Just because prices are wildly high right now doesn't mean we shouldn't discuss more reasonable kits like mine, which will be affordable again in the future, probably for less than what I paid, to boot.

Formula.350


I got my TridentZ 2x8GB 3200 CL15 kit on Feb 20 for $125 on sale at Newegg ($135 after tax, damn TN 9.5%...). But until I have subtimings to mess with, I've just left it at 14-15-15-35 (silly Ryzen bug with odd-numbered CLs at higher speeds; it runs CL15 at 2133 though *shrug*).

Fine. *sigh* I'll entertain your request.

HOWEVER, I'm not downloading a 300+ MB file to do so; that's just ridiculous. Sorry, but I'm not fortunate enough to even have the option of a landline where I am, so I'm stuck with cellular. My speeds are in the 0.20 Mbit range, and I have a monthly data limit on top of that, so I wouldn't be grabbing it anyhow.

I have AIDA64, and there are also older versions of MaxxPi that I can get.

Otherwise, hopefully this: https://panthema.net/2013/pmbw/

or maybe even this: http://zsmith.co/bandwidth.html (Never mind, it wasn't a compiled EXE.)

will be sufficient?

The first is old (2013) and hasn't been updated as far as I can see (though it might not need to be), and the other at least seems to be current. I grabbed both. The latter has the option to run one instance per core, and then you can total one of the outputs across instances to get the max transfer speed.

Other than your OK on an alternative program, I'll also need to know what timings you want run at 2400. I assume we're talking JEDEC defaults, since you mentioned wanting the 3200 kit run at CL22; if that's the case, my kit shows a JEDEC-compliant profile at 2133 of 15-15-15-36. So would this profile in the Thaiphoon database for 2400 with 16-16-16-39 timings be acceptable? There are a few others: one is 15-15-15-36 (same as 2133), another is 17-17-17-39, and the last is 18-17-17-39. (Note: I only have experience with 2133, 2933 and 3200 on my motherboard, and only at 2133 can I guarantee odd-CL timings, so at 2400 I don't know if 17-17-17 will work; hence asking whether 16-16-16 would be OK.)

Also, since JEDEC doesn't have official support for DDR4-3200, I'm finding three generic (i.e., non-performance) profiles available for 3200: 20-20-20-52, 22-22-22-52 and 24-24-24-52, all presumably rated to function at 1.2 V.

EDIT: Struck comments above. Also, I'm running PMBW.... *sigh* I'm not liking this program... lol It runs through a complete loop FOR EVERY THREAD COUNT AVAILABLE!

Run 1, 1 thread... Run 2, 2 threads... Run 3, 3 threads... Over 10 mins now and it's halfway. Reading the page, it looks like there's a switch I can use to specify thread counts.

I'm thinking of 'standardizing' a test sequence that runs 1, 4, 8 and 16 threads, to save time...

EDIT2: Mmmkay... Maybe I'm an idiot, but I seriously have NO idea where stats2gnuplot.exe drops the plots PDF file it creates o_0 It's not in C:\, the Documents folder, the folder it's all in, my desktop, the Downloads folder, my User folder, OR the Temp folder in AppData.

It was by sheer luck that -h was a real switch and my entry for it didn't jive, which spat out a bit of "help" (/help, /h, /?, -help and -? do not work). So if anyone can make sense of it:

Usage: C:\pmbw-0.6.2-win64\stats2gnuplot.exe [-v] [-h hostname] [files...]

So on his page, the usage "stats2gnuplot stats.txt | gnuplot" gets interpreted as stats.txt as a file, | as a file, and gnuplot as a file, so it errors on the latter two. The 'hostname' is just your computer name, so no help there. As for -v, I don't know what it does (in case the folder has a password?).

This page seems to indicate the PDF should show up in the same folder as stats.txt, but I can assure you it doesn't lol. Guess I'll try putting the stats file and the exe in the C:\ root folder and see if that helps. If not, you'll be stuck with a fairly unhelpful txt file full of nigh-incomprehensible data.

.... and that did nothing either. Mkay then. A system search for *.pdf doesn't turn up a file with the reported output name, either.

EDIT3: Blah. So -v = verbose. I found an undocumented -o switch that overrides the output, which has to be placed BEFORE the input file or it is treated as an input file :| Nevertheless, even with the output overridden to c:\ it still fails. Even if set to c:\plot.pdf it still fails. Then I figured, ok... maybe there's a program called gnuplot that this is trying to call... Well, there is, so I installed it, and still wasn't able to get anything different to happen *sigh* I'm beginning to think that PMBW isn't going to be a viable choice either.

EDIT4: Holy.

Epic.

Facepalm.

Batman.

So for starters... Windows 10's "Search" feature? Junk. Not sure what M$ was thinking there. Give me back Win7's search, please and thank you.

Second... That stats2gnuplot program is dumb as a truck. When you run the program, which calls up a command prompt, you would think it would execute from its own directory. Or, at the very least, would recognize that "Oh, hey, I am in this directory. The input file is also in this directory. I should, like, output the file to this same directory!". PFFFT NOPE! It outputs the file into... wait for it... the directory that the command prompt has loaded from.

IN SHORT... I found the damn file. It was in the bloody System32 folder

We'll just pretend all of this didn't take over an hour and a half, mkay?

EDIT5: One last update, as I'm off to bed. I searched endlessly for some other program(s) and ultimately came up with nothing. PMBW takes eons to complete all its runs on JUST 1 thread (roughly 30 mins), so running it 4 times per memory speed would eat up 4 hrs total (two memory speeds), UNLESS it was only run at one thread count. That wouldn't be so painful.

I'm running a really old version of the STREAM benchmark (anything newer requires compiling, which I can't do). 1 run went quick. 10 runs went quick. 100 runs went quick. 100000 runs.... not so much! lol Still running after a couple of mins :\

I also grabbed the last version of RightMark Memory Test, but that's from 2008... so how effective it'll be at measuring what you want is completely up in the air.

The ball is in your court. I've put in way more effort in this than I should've.


Floor_of_late


Wait, how can you be a serious OCer without having RealBench... come on! Are you going to charge us for posting on here too?? lol

I want an original 2400 kit running at stock timings (whatever it comes with) --> benchmark it with Cinebench, RealBench or Geekbench.

Then, on that same system with the same kit, I would like to run the memory at 3200 with any timing above CL22 (24-24-24-52 is fine).

Compare the results with a bench test that takes various applications into account. Not sure what you got yourself involved in there...


I'm on my way home from work... I'll run RealBench when I get there at 24-24-24-52, and I guess I could do 2400 at 14-14-14-34? Not sure what timings usually are on DDR4 at those speeds.

I have a 3600 CL16 Trident Z kit running at 3200 CL14 at the moment, so it should be fine to test with. It'll be on a Gigabyte AB350 Gaming 3, and I'll leave my 1700 at stock; depending on how long it takes, I might OC it to 3.9 GHz or so to see how the scores scale with clocks.

*edit* Sorry for the delay, but I'm running into a weird issue since I changed RAM settings, as you'll soon see.

For some reason the Heavy Multitasking test is taking way too long to complete, and I don't know what kind of load it's putting on the system, but I'll find out in a second. Image editing and encoding both show gains with the faster RAM and OpenCL is a wash, but I wouldn't trust the numbers until I know what's going on in that last test.

*edit 2* After a quick restart RealBench began to work correctly; this is the run I got, with Resource Monitor open to confirm a proper load. I'll do a few more runs to make sure they match, then switch back to 3200, run that a few more times and check the load there too.

*edit 3* Just noticed it was at 22 instead of 24; running it now and will update.

Also ran another at 2400 for this result.

And here is 3200 CL24 (bah, didn't have CPU-Z on the Memory tab, but RealBench shows the timings anyway).

So you can see there were small gains with the much looser timings and higher RAM speed. Note, though, that worse sticks of RAM will probably need a 2T command rate, but I don't have access to the lower timings on my board/BIOS. Others can test further, or I can later if I'm up and not being lazy. Let me know if you want me to change anything.

2400 CL12: 41.977 / 39.6939 / 18788 / 66.4024 / 119,472

3200 CL24: 38.5964 / 39.598 / 19058 / 62.5187 / 124,569

3200 CL14: 39.9626 (slower than CL24 for some reason, maybe the CPU was parked or something, idk) / 35.296 (way faster) / 19024 (slightly slower than CL24) / 58.0028 (a good amount faster than the two previous) / 129,814 (a 5k boost from CL24 to CL14)

I'll rerun stuff later for my own curiosity and post it when I can.

*edit 3* About to start playing FFXIV, so I rolled back my timings, ran a 3200 CL14 pass on a whim, and added those results above this.
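To put the RealBench runs above side by side, here is a small Python sketch that turns them into percentage changes. It assumes, as the poster's comments suggest, that image editing, encoding and heavy multitasking are completion times (lower is better) and that OpenCL and the system score are higher-is-better scores; `RESULTS` and `improvement_pct` are hypothetical names used only for illustration.

```python
# Percentage change between the RealBench runs posted above.
# Assumption: image editing, encoding and heavy multitasking are times
# (lower is better); OpenCL and the system score are scores (higher is better).
RESULTS = {
    "2400 CL12": {"image_editing": 41.977, "encoding": 39.6939, "opencl": 18788,
                  "heavy_multitasking": 66.4024, "system_score": 119472},
    "3200 CL24": {"image_editing": 38.5964, "encoding": 39.598, "opencl": 19058,
                  "heavy_multitasking": 62.5187, "system_score": 124569},
    "3200 CL14": {"image_editing": 39.9626, "encoding": 35.296, "opencl": 19024,
                  "heavy_multitasking": 58.0028, "system_score": 129814},
}
LOWER_IS_BETTER = {"image_editing", "encoding", "heavy_multitasking"}


def improvement_pct(baseline: str, candidate: str, test: str) -> float:
    """Positive means `candidate` did better than `baseline` on `test`."""
    old = RESULTS[baseline][test]
    new = RESULTS[candidate][test]
    if test in LOWER_IS_BETTER:
        return (old - new) / old * 100  # percentage of time saved
    return (new - old) / old * 100      # percentage of score gained


if __name__ == "__main__":
    for cfg in ("3200 CL24", "3200 CL14"):
        for test in RESULTS[cfg]:
            pct = improvement_pct("2400 CL12", cfg, test)
            print(f"{cfg} vs 2400 CL12, {test}: {pct:+.1f}%")
```

On these numbers the system score rises about 4.3% for 3200 CL24 and about 8.7% for 3200 CL14 over 2400 CL12. With a single run per setting, small differences such as the OpenCL one are hard to separate from run-to-run noise.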

Formula.350

[H]ard|Gawd

- Joined

- Sep 30, 2011

- Messages

- 1,102

lol I never claimed to be a "serious overclocker"

Wait, how can you be a serious OCer without having RealBench... come on! Are you going to charge us for posting on here too?? lol

I want an original 2400 kit running at its stock timings (whatever it comes with) --> benchmark it with Cinebench, RealBench or Geekbench.

Then, on that same system with the same kit, I would like to run the memory at 3200 with any timing over CL22 (24-24-24-52 is fine).

Compare the results with a bench test that takes various applications into account. Not sure what you got yourself into there...

Also, a 300MB program does not an extreme-overclocker make! Pretty sure you just insulted about 90% of the overclocking community by claiming that lol

Nevertheless, I'll still run something, just for completeness' sake, since mine is a 1700X on an X370 board. However, since you swear by RealBench, JunkBoy's results are already enough for us to ask how your foot tastes lol. He even ran them with tighter-than-JEDEC timings, and 3200 STILL handily trounced 2400! Imagine how much better Ryzen would've been with the Infinity Fabric running 1:1! (The Stilt says that with the right debug hardware the internal switch to trigger 1:1 is still there, but for any consumer it's impossible to enable. EDIT: there are portions of the Infinity Fabric that do run 1:1, but I was referring to those tied to the memory side, which run at 1/2 of the memory clock.)

At any rate, I believe I have Geekbench, and if not I can get it. I'll also be running it at the JEDEC timings for DDR4-2400 of 16-16-16-39, since 15-15-15 is "Optional"; as such, it's fair to assume that CAS 20 is similarly the "Optional" timing set for 3200, so I'll be using 22-22-22-52 for that speed (as per the Micron datasheet, and calculated* based on page 5 of my Samsung IC datasheet). I'm just being thorough and as analytical as I can be. No bias imposed.

*Calculated on the basis that CAS increases by 2 for each speed step; therefore 2933 would be CL21 and 3200 would be CL23, but with odd CLs being out on Ryzen, I'll be dropping it down to 22 across the board.
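Since the whole argument hinges on frequency versus timings, it helps to convert CAS latency into nanoseconds. Below is a minimal Python sketch using the standard conversion: DDR4 transfers twice per I/O clock, so one clock lasts 2000 / data rate nanoseconds. `cas_latency_ns` is an illustrative helper, and CAS is only one of several timings (tRCD, tRP, etc.) that affect real-world latency.

```python
def cas_latency_ns(data_rate_mts: float, cl: int) -> float:
    """First-word CAS latency in nanoseconds.

    The I/O clock is half the data rate (two transfers per clock), so one
    clock period is 1000 / (data_rate / 2) = 2000 / data_rate nanoseconds.
    """
    return cl * 2000 / data_rate_mts


# Settings mentioned in this thread: (data rate in MT/s, CAS latency)
SETTINGS = [(2400, 12), (2400, 16), (3200, 14), (3200, 22), (3200, 24)]

if __name__ == "__main__":
    for rate, cl in SETTINGS:
        print(f"DDR4-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns")
```

By this measure 3200 CL22 (13.75 ns) is roughly on par with 2400 CL16 (about 13.33 ns), so whatever 3200 CL22 gains over 2400 CL16 would presumably come from the higher transfer rate and fabric clock rather than from a lower CAS latency.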

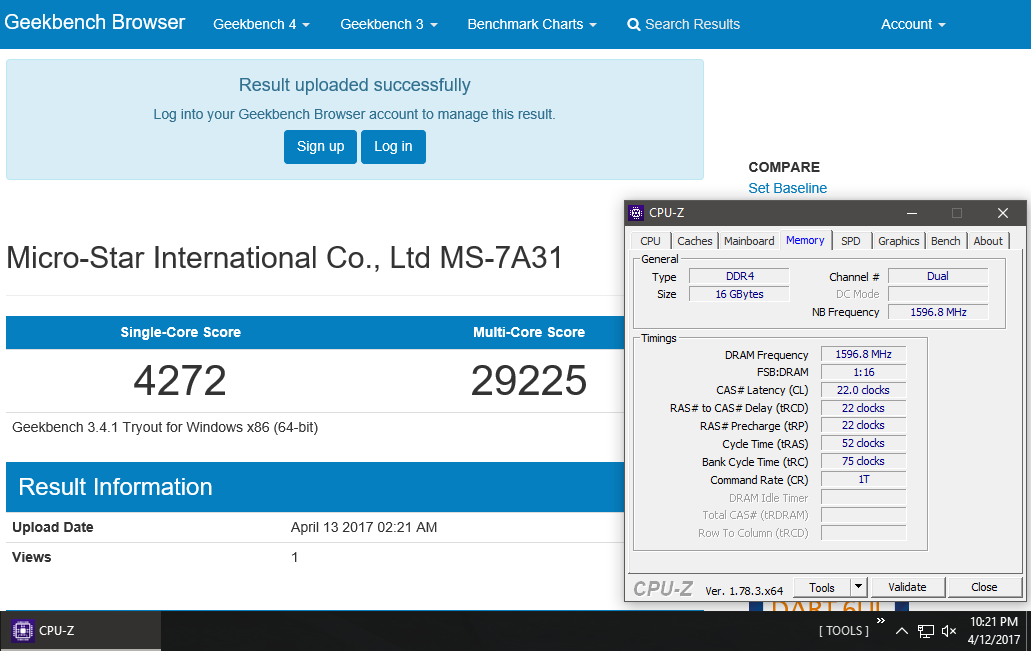

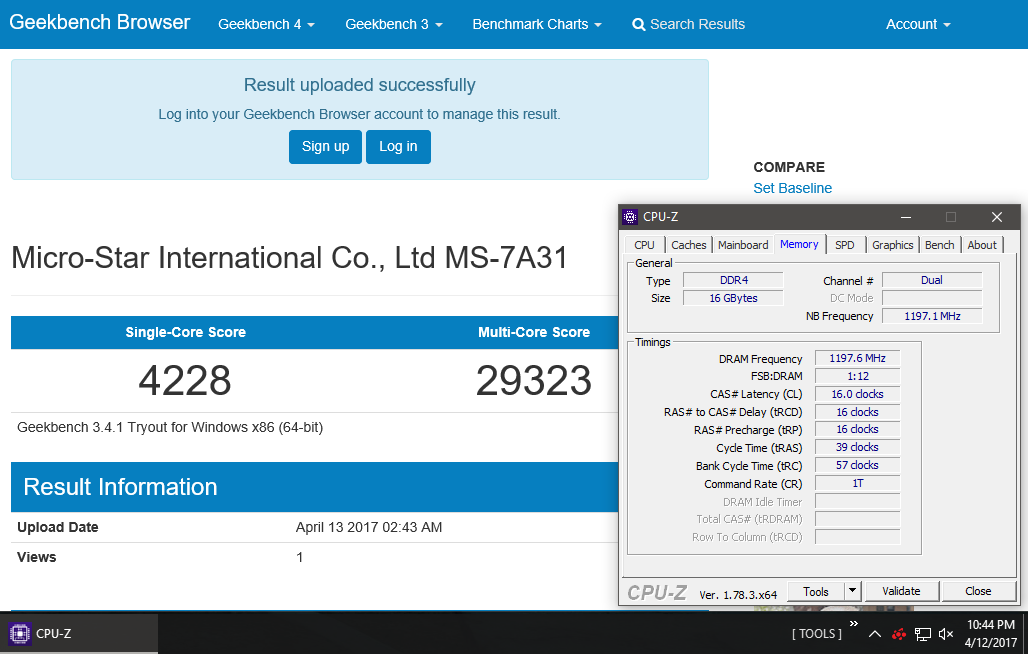

EDIT: 3200 22-22-22-52 Geekbench run.

http://browser.primatelabs.com/geekbench3/8322831

And the 2400 16-16-16-39 Geekbench run.

http://browser.primatelabs.com/geekbench3/8322836

It seems to be too CPU-bound a test to show as night-and-day a difference as RealBench does; 3200 and 2400 are basically equal as far as Geekbench is concerned. Some of the standouts:

| Multi-core workload | 3200 (22-22-22-52) | 2400 (16-16-16-39) |
|---|---|---|
| JPEG Decompress | 38068 (941.0 Mpixels/sec) | 31661 (782.7 Mpixels/sec) |
| Mandelbrot | 37314 (38.2 Gflops) | 55774 (57.2 Gflops) |
| SGEMM | 42022 (117.7 Gflops) | 40074 (112.2 Gflops) |
| Ray Trace | 43583 (51.4 Mpixels/sec) | 44643 (52.6 Mpixels/sec) |

I dare say the Mandelbrot result is a blip, based on the single-core score.

Memory bandwidth favors the 3200, no surprise.

AIDA64 Latency: 86.0ns | 93.7ns

(I have the screenshots if you need them. The memory speeds again mirror Geekbench but are far more exaggerated; the cache results don't change, since the CPU clock didn't change.)
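The bandwidth advantage for 3200 is expected from simple arithmetic: each DDR4 channel is 64 bits (8 bytes) wide and AM4 Ryzen runs two channels. A short Python sketch of the theoretical ceiling follows; `peak_bandwidth_gbs` is an illustrative name, and measured figures from AIDA64 or Geekbench will land below these numbers.

```python
BYTES_PER_TRANSFER = 8  # one 64-bit DDR4 channel moves 8 bytes per transfer
CHANNELS = 2            # AM4 Ryzen is dual-channel


def peak_bandwidth_gbs(data_rate_mts: float, channels: int = CHANNELS) -> float:
    """Theoretical peak in GB/s (decimal): MT/s x bytes per transfer x channels / 1000."""
    return data_rate_mts * BYTES_PER_TRANSFER * channels / 1000


if __name__ == "__main__":
    for rate in (2400, 3200):
        print(f"DDR4-{rate} dual channel: {peak_bandwidth_gbs(rate):.1f} GB/s")
```

That works out to 38.4 GB/s for DDR4-2400 versus 51.2 GB/s for DDR4-3200, a one-third higher ceiling.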

Floor_of_late

n00b

- Joined

- Apr 8, 2017

- Messages

- 62

JunkBoy & Formula.350: are you guys testing with original 2400 sticks, or just lowering the frequency / latency on an original 3200 kit?

I used this kit and then downclocked the speed and timings, but it's a 3600 C16 Samsung B-die kit.

Formula.350

[H]ard|Gawd

- Joined

- Sep 30, 2011

- Messages

- 1,102

Downclocked and re-timed.

There is no difference between a 2400 Samsung B-die kit and a 3200, or a 3600. There's nothing magical that's going to happen on a 2400 kit at 16-16-16-39 compared to a 3200 kit running at 2400 16-16-16-39. IF anything, the 3200 kit will result in better 2400 performance due to the ICs being higher quality.

But to answer your question, like Junkboy, I'm using my 3200 kit. I'm not going to go out and buy another kit just to do this, as that'd be pointless. lol

I finally updated my earlier post. I had gotten sidetracked and forgot to hit "Save Changes" until just now.

Floor_of_late

n00b

- Joined

- Apr 8, 2017

- Messages

- 62

Thanks for the results, gonna look them over later today, have not had time yet!

I was hoping that someone with a real 2400 kit would do the tests for us, exactly like in the video. Right now we are downclocking from 3200 to 2400 and changing the timings (which is obviously possible), but the real trick is going from a 2400 kit to 3200 at CL22. The doubt is in that direction. I'm no expert, but I have a feeling it would be much harder to take a real 2400 kit to 3200 at CL22, and even then it's unclear whether any benefits would show.

I will post back when I go through your results in detail


LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,639

My 2666 kit, which is closer to 2400, does 3200 CL15 with zero problems, and I suspect it is Hynix, not Samsung (I honestly never tried CL14; the board it's on doesn't have enough memory options for me to really work on it). Or it did, before the AGESA update on my B350 board. Now I'm stuck at 2133. I managed to run 2133 10-10-10-24 at 1.250V, but I can't go 1MHz higher or I get a POST loop. I have my new CHVI on the desk, but I won't have time to slam it into the system until the weekend.

Formula.350

[H]ard|Gawd

- Joined

- Sep 30, 2011

- Messages

- 1,102

The thing is, really, that as far as I know JEDEC has not certified unbuffered, non-registered memory that fast (aka desktop memory). So the REAL trick is finding modules specced for 3200 AND with a CL of 20, 22 or 24.

Your best bet would be to look on eBay for really old modules from 2015, when it had started to roll out. All I'm really finding, though, are Micron ICs from back then that were rated at 3200 CL14. But even then, that's the problem: what they're rated for in their datasheets and what they CAN run (which is why we have DDR4-3733 modules at CL14) are two different things, and the latter is what you'll find on retail sticks. Memory slated for, say, HP, Dell, etc. is what adheres to the JEDEC/IC maker's specs, because they need to ensure that at the end of the day the stuff works at the stated speeds and timings. That's why we're all able to at LEAST run 2133.

Nevertheless, it's already been shown in various places that increasing the speed of the portions of the Infinity Fabric tied in some way to the memory clock will help performance in those areas (I think the slowest part only runs at 1/2; I don't think there are any 1/4-speed areas). I've got so many other, more pertinent things to do that I haven't gone back over those details, is all. They're in The Stilt's thread at AnandTech that I linked above, but not all of those details are on the main page, and there are (last I looked) around 50 pages of posts. BUT you could whittle them down by searching that thread and filtering it to show ONLY posts by TheStilt.

I'm not going to try to determine whether you understand how the Infinity Fabric on Ryzen functions, but I will say that, just based on the stuff I've skimmed, you may not.

As such I highly recommend you read at least the first 10 pages of this thread. If you won't, then at least the first page. TheStilt offers tons of good info.

https://forums.anandtech.com/threads/ryzen-strictly-technical.2500572

With the release of the additional SKUs, it is now easier to see just how Ryzen behaves in terms of the cache structure, Data Fabric and CCX, their relationship to faster clocks, and how much this influences performance relative to Intel.

First, it can now be said that increased memory speed only influences the latency of cross-CCX communication for data and thread dependencies; it has no influence on performance when threads and data are on the same CCX, apart from the ordinary memory sensitivity (e.g. in games) also seen on Intel.

This is important because AMD is in the process of convincing developers to organise critical thread and data dependencies onto the same CCX, and Microsoft's 'Game Mode' is also meant to help by keeping game-related threads together while general and OS threads are separated from them.

The result: once such optimisation happens, you get less benefit from a higher Data Fabric speed, as the caches/cores themselves are not influenced by it, nor really by core clock speeds.

So that is something to consider: the benefit is larger when games/apps are poorly distributed across CCXs, and minimal when games/applications have well-structured threads and data organised onto the same CCX.

In the latter situation it comes more down to game memory sensitivity that can be noticed on both AMD and Intel.

This is now clearer, with some reviews investigating this behaviour further using the latest SKUs.

This shows that the only improvement comes when the thread/data dependency is on the other CCX, and the trends in this analysis align with a comparable test that Tom's Hardware also did.

Top lines are across CCX, next is same CCX, fastest is same core.

https://www.pcper.com/reviews/Proce...Core-i5/CCX-Latency-Testing-Pinging-between-t

Regarding cache performance, it also has the potential to suffer depending on the data size and how the cache is used. Importantly, simulating other SKUs by disabling cores on, say, an 1800X will not match real 6C or 4C parts, so little weight can be put on tests of the 8C/16T chip configured as a reduced-core part.

In the more recent hardware.fr cache test, they show yet again something strange about the way the L3 cache is partitioned for each CCX.

The 4C/8T part suffers a jump at 3MB while the other SKUs suffer a jump at 6MB; logically, the jumps should have come just after 4MB, and just after 8MB for the products with more L3.

But they have now captured this behaviour as if the L3 is further split again for every SKU apart from the 1600X.

Something unusual is going on as the data size approaches the L3 cache limit, and it may be:

- a bug or quirk in how apps use the L3 cache, which AMD needs to clarify

- some kind of further partitioning that can kick in, related either to the core mesh structure or to a 2x1MB-per-core cache design

- AMD's description of the L3 as a 'mostly victim cache', meaning the L3 is at times not fully usable as expected, causing it to seem reduced or further partitioned/'reserved'.

http://www.hardware.fr/articles/959-5/nouvel-agesa-diminuer-latence-ram.html

The good news, though, is that the 1600X follows the expected trend almost perfectly, so this should be something AMD can clarify and maybe resolve, but to date they have not responded to any queries from the publications that have looked into these behaviours and captured them (PCPer/Tom's/Hardware.fr).

Cheers

Formula.350

[H]ard|Gawd

- Joined

- Sep 30, 2011

- Messages

- 1,102

EDIT: Just to clarify for everyone, the fabric speed is only 1:2 (half of the memory's speed) when factoring in the double data rate of the memory. In other words, DDR4-3200 actually runs at 1600MHz, which is the fabric speed. If you're interested, this is the post in question that lays most of that out, but I figured it was worth clarifying, since when talking about memory, actual speed and effective speed can start to muddy things up a bit.
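The clock relationship in that EDIT can be written out as a short Python sketch. It assumes first-generation Ryzen, where the Data Fabric clock equals the memory's actual clock (MEMCLK), i.e. half the DDR4 data rate; the function names are illustrative only.

```python
def memclk_mhz(data_rate_mts: float) -> float:
    """Actual memory clock: DDR moves two transfers per clock."""
    return data_rate_mts / 2


def fabric_clock_mhz(data_rate_mts: float) -> float:
    """First-gen Ryzen assumption: the Data Fabric runs 1:1 with MEMCLK."""
    return memclk_mhz(data_rate_mts)


def data_rate_for_fabric(fabric_mhz: float) -> float:
    """Inverse: the DDR4 data rate (MT/s) needed for a given fabric clock."""
    return fabric_mhz * 2


if __name__ == "__main__":
    for rate in (2400, 3200, 3600):
        print(f"DDR4-{rate}: MEMCLK = fabric = {fabric_clock_mhz(rate):.0f} MHz")
```

So going from DDR4-2400 to DDR4-3200 raises the fabric clock from 1200 to 1600 MHz, about a third higher.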

-----------

CSI_PC Nice post

Yea I had seen those cross-core latency spike graphs from the initial Ryzen launch.

Others' findings and my own thoughts that may be of interest: Chew at the XS forums had this to say today...

"The biggest kicker is the new win 10 update that everyone thinks is the shiznit with gaming mode.......just makes things even worse.

Latest agesas are losing 6-12 secs in 32m pi.......new win 10 build 1703 ( gaming mode ) losing another 30-40 seconds in 32m pi......no love lost really RTC makes 2d benching win 10 pointless anyway......."

Whether or not that is anything to worry about in general for non-3D programs (Photoshop is an immediate example that comes to mind) will have to be determined in the weeks to come. Whether it also affects gaming on Ryzen with the Game Mode update will be interesting to find out, but let's hope not.

As for the CCX latency being memory-speed dependent (as a quick side thought, I can't help but wonder if it was intended to run at full speed like in the Zen ES [engineering samples], and perhaps that was keeping memory clocks from hitting higher speeds, as had been reported, so they decided it was best to dial that back until they can sort it out): it won't make a big impact right now if they can indeed convince folks to specifically program for it. However, let's say that the specific area of the Infinity Fabric remains clocked at RAMclk/2. What will that do to performance once software finally DOES have enough thread awareness to utilize Ryzen to its fullest? Won't that create a very real pinch point again, which programming won't solve (meaning higher memory speeds will actually matter)?

Lastly, from what I had seen (unfortunately I can't recall if it was the Anand forums, XS, or even here), word was that all the Ryzen SKUs are based on the 8C package and the only thing happening is cut cores, with the full cache left in play; that even the 4-core versions are a 2+2, instead of a unique die with only a 4C module. I don't know how that all plays into things, though, especially since your info appears to say otherwise, based on your summary. Part of me would think (armchair engineering alert) that since they are just cut-down 4+4 chips, they'd leave the L3 fully intact in order to preserve all the CCX interconnects. However, I also would've thought they'd lay out the core modules in a stacked fashion lol

Nevertheless, Ryzen's outlook is positive in my opinion. Bulldozer wasn't so great to start with, but comparing its starting performance to its final iteration's, Excavator came a long way (again, only comparing it to itself, not Intel heh). So imagining where Ryzen will go from here is kinda exciting.

Thanks

Just to add.

Well, technically the Fabric is running at the same 1:1 clock speed as the DDR4 memory, because the memory's actual fundamental clock is half the DDR rate; DDR just pulls another trick to fit double the data into each original clock cycle, just like GDDR5X quadruples the data into its original clock.

Having the fabric run at double the DDR4 memory's actual clock could get very messy, as it would then be synced to the data rather than to the actual framing-signal clock generated by the hardware.

It sounds simple to just use the data-rate 'clock' as the actual clock source, but this is incredibly complex, as can be seen from the headache of getting memory to work at higher clock speeds on the Ryzen platform right now, even without doing something like that.

It sort of goes against all concepts of transmission theory to try that when talking about a direct transmission/framework between two endpoints (the Data Fabric and memory), and doing it would also go against the JEDEC standards, unless the Data Fabric can run both clocks, the original to the memory and double to the CCX (which so far it looks like it cannot). Maybe this is part of a future Ryzen product with an improved Data Fabric *shrug*.

Cheers

Floor_of_late

n00b

- Joined

- Apr 8, 2017

- Messages

- 62

Thanks for the link; there is actually TONS of info in there. It would take someone weeks to go through it all.

What I would like to know is... well, let me explain, and I'm throwing out some theoretical numbers here. If 2400 DDR4 vs 3200 DDR4 gets us (let's say) a 20% performance boost, the bigger question becomes: to what extent can higher memory frequency give us more performance? In other words, would going from 2400 DDR4 to, say, 4000 DDR4 give a 30% or 40% increase in performance?

I think to answer these questions, we first need to know the maximum practical Fabric frequency, then multiply it by 2 to get the maximum practical DDR4 speed that can be used (since the fabric runs at the memory's actual clock, which is half the DDR4 data rate).
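One way to reason about the 2400 to 4000 question is an Amdahl's-law style model: assume only a fraction `f` of the runtime scales with the memory/fabric clock and the rest doesn't move at all. The Python sketch below uses hypothetical helper names and a deliberately simplified model (real workloads also have latency components that don't scale linearly with clock); it backs `f` out of the hypothetical 20% figure above and extrapolates.

```python
def speedup(f: float, old_rate: float, new_rate: float) -> float:
    """Amdahl's law: the clock-bound fraction f shrinks by old_rate/new_rate."""
    return 1 / ((1 - f) + f * old_rate / new_rate)


def fraction_from_observed(observed: float, old_rate: float, new_rate: float) -> float:
    """Invert speedup(): 1/S = 1 - f * (1 - old/new), solved for f."""
    return (1 - 1 / observed) / (1 - old_rate / new_rate)


# Hypothetical numbers from the question: 2400 -> 3200 gives a 20% boost.
f = fraction_from_observed(1.20, 2400, 3200)

if __name__ == "__main__":
    print(f"clock-bound fraction f = {f:.3f}")
    print(f"2400 -> 4000: {speedup(f, 2400, 4000):.3f}x")
    print(f"upper limit as the clock goes to infinity: {1 / (1 - f):.1f}x")
```

Under these assumptions f comes out at 2/3 and 2400 to 4000 gives about 1.36x: the second 800 MT/s step adds roughly 14% on top of the first step's 20%, so returns diminish even before any fabric or memory-controller stability limits come into play.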

**Anything below this point is my own understanding; if anything is wrong, please correct me:**

But really, the Data Fabric matters mostly for inter-CCX speed. Since Ryzen 7 uses 2 CCXs (each consisting of 4 cores), it is mostly useful for multi-threaded applications that can actually utilize more than 4 cores (8 threads) at once.

So for games that use 4 cores (8 threads) or fewer, theoretically, having faster RAM would not help the Data Fabric, as it would not even be used.

HOWEVER, at the same time, and this is where things might get confusing, faster RAM and lower latency do help each core individually within the same CCX, because lower latency (thus faster data fetching beyond the L1, L2 and L3 caches the cores routinely use) means better performance.

So in conclusion, faster RAM and lower latency can benefit each core (and therefore each individual CCX) to some extent; however, the real benefit comes when the 2 CCXs communicate with each other for anything beyond 4 cores or 8 threads.

JustReason

razor1 is my Lover

- Joined

- Oct 31, 2015

- Messages

- 2,483

To the last part: as long as 2 CCXs exist, the data fabric will come into play to varying degrees, of course. Even on the R7 8C/16T, using only 4 threads is no guarantee of staying on one CCX; the Windows scheduler loves moving threads around. Some games do tend to stay on the same cores, though; for me, Fallout does this for the most part.

Floor_of_late

n00b

- Joined

- Apr 8, 2017

- Messages

- 62

Not sure if core affinity can be manually assigned to individual cores in Windows. I know it can be done in Linux and other OSes for various appliances and uses, but I have no idea if Windows goes as far as allowing that. I also don't know to what extent Ryzen itself manages its CCXs and core distribution. It might spread the load evenly (across physically separate cores) in order to distribute heat properly, but this is just a wild, unproven assumption. I do know that the first core (core 0) almost always gets hammered, as it's the default used for most processes. But then, Ryzen could be different. There is a lot that is still unknown, or known only to a few and still to be discovered.
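For what it's worth, Windows does let you set per-process affinity: Task Manager's "Set affinity", `start /affinity` in cmd, or the `SetProcessAffinityMask` Win32 API. These take a bitmask where bit N allows logical CPU N. The Python sketch below builds such a mask. It assumes, hypothetically, that on an 8-core/16-thread Ryzen 7 Windows numbers logical CPUs 0-7 on the first CCX and 8-15 on the second, which you should verify on your own system (e.g. with Sysinternals Coreinfo) before relying on it; `game.exe` is a placeholder.

```python
def affinity_mask(logical_cpus) -> int:
    """Set bit N for every logical CPU N the process may run on."""
    mask = 0
    for cpu in logical_cpus:
        mask |= 1 << cpu
    return mask


# Hypothetical topology for an 8C/16T Ryzen 7: verify before using.
CCX0 = range(0, 8)   # assumed: cores 0-3 and their SMT siblings
CCX1 = range(8, 16)  # assumed: cores 4-7 and their SMT siblings

if __name__ == "__main__":
    # `start /affinity` takes the mask as a hexadecimal number.
    print(f"start /affinity {affinity_mask(CCX0):X} game.exe")  # first CCX only
    print(f"start /affinity {affinity_mask(CCX1):X} game.exe")  # second CCX only
```

Pinning a game to one CCX this way is one way to test whether keeping its threads off the cross-CCX path changes performance, under the topology assumption above.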

JustReason

razor1 is my Lover

- Joined

- Oct 31, 2015

- Messages

- 2,483

On my 8350, core 0 is usually the main core, but maybe 30% of the time it can be another one, again usually an odd core, since Windows treats the even cores as HT.

Floor_of_late

n00b

- Joined

- Apr 8, 2017

- Messages

- 62

Check this site for info; read the conclusion if you don't want to read the entire thing. It does seem that, as far as Windows is concerned, and I'm quoting, "the CCX design of 8-core Ryzen CPUs appears to more closely emulate a 2-socket system". So if I understand that correctly, all cores in one CCX will be utilized before the 4 other cores from the second CCX are.

But I'm also reading in that post that even inter-core communication within the same CCX goes through the Infinity Fabric. Anyway, I will read up more and update.
