Adding new info. like a video is pretty big. And you completely rewrote post #78 which was only 1 sentence when I replied too.

Err there were two reviews there, and I just added to my original post with the links and added one sentence. So if you think that is substantial, geez.

1st Ryzen CPU Review Leaked

- Thread starter SixFootDuo

- Start date

freeloader1969

2[H]4U

- Joined

- Dec 13, 2003

- Messages

- 2,238

Should change the title of the thread to "preview of Zen". No one reviews an ES.

Adding new info. like a video is pretty big. And you completely rewrote post #78 which was only 1 sentence when I replied too.

Not arguing with you about my post, you replied to a post that had the first half of the post there, before the links lol, so what ever.

The info is there now, so there ya go.

Should change the title of the thread to "preview of Zen". No one reviews an ES.

Eh that sorta happened with the original K7 I believe.

Someone benched an ES K7 before it launched back in 1999 or so, and it did pretty poorly on the tests, which prompted AMD to make some public comments about it being an ES and not representative of the final product. That was back when Sanders was still running things, I think, so a very different AMD.

you replied to a post that had the first half of the post there, before the links lol, so what ever.

You're supposed to finish your post then hit reply, especially since you seem to care about context and keeping things straight in a thread right?

The info is there now, so there ya go.

I already addressed it in a post you quoted on the previous page.

sorry typo lol, yeah you are right

Well, my tablet countered with a Skylark, so we're square.

You're supposed to finish your post then hit reply, especially since you seem to care about context and keeping things straight in a thread right?

I already addressed it in a post you quoted on the previous page.

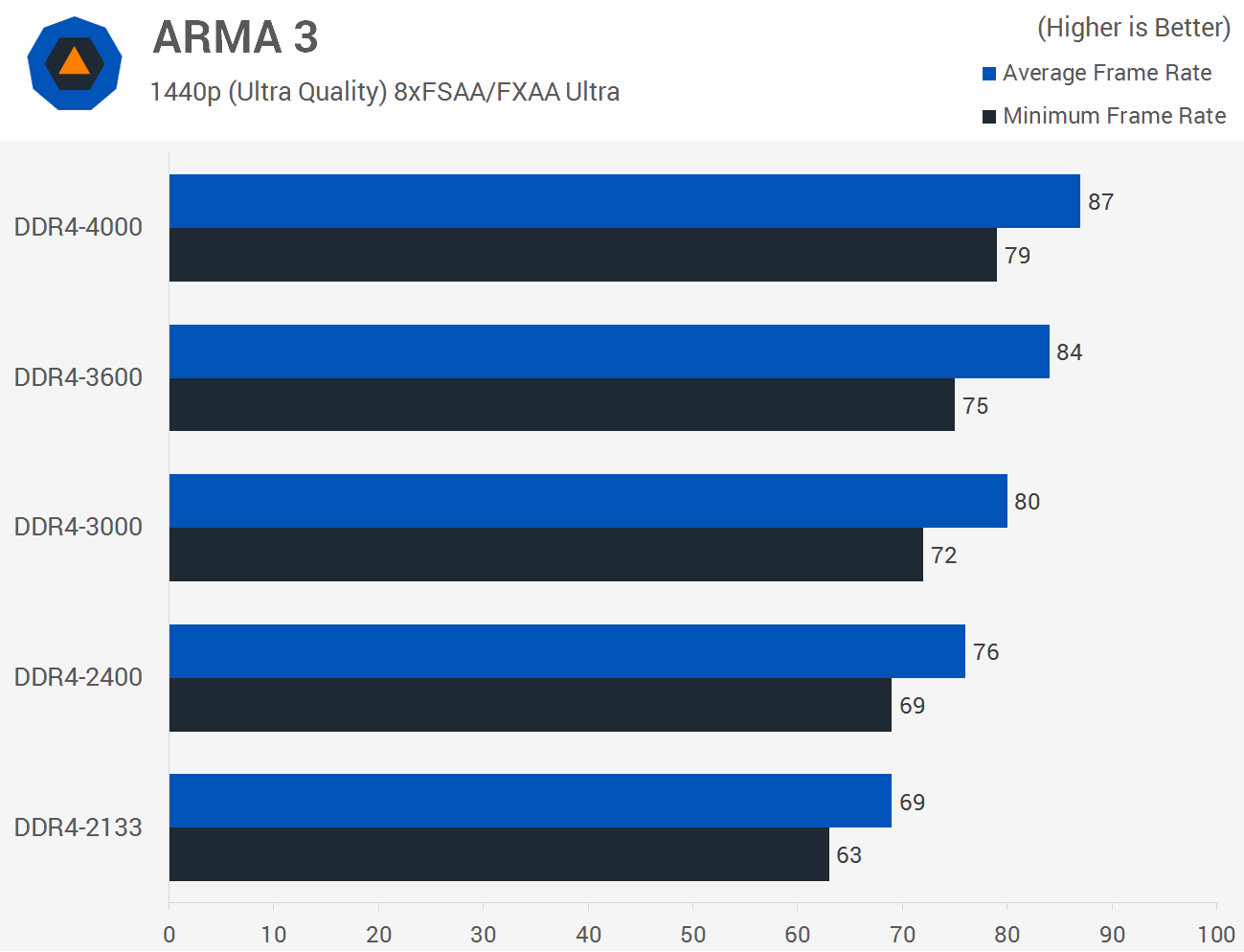

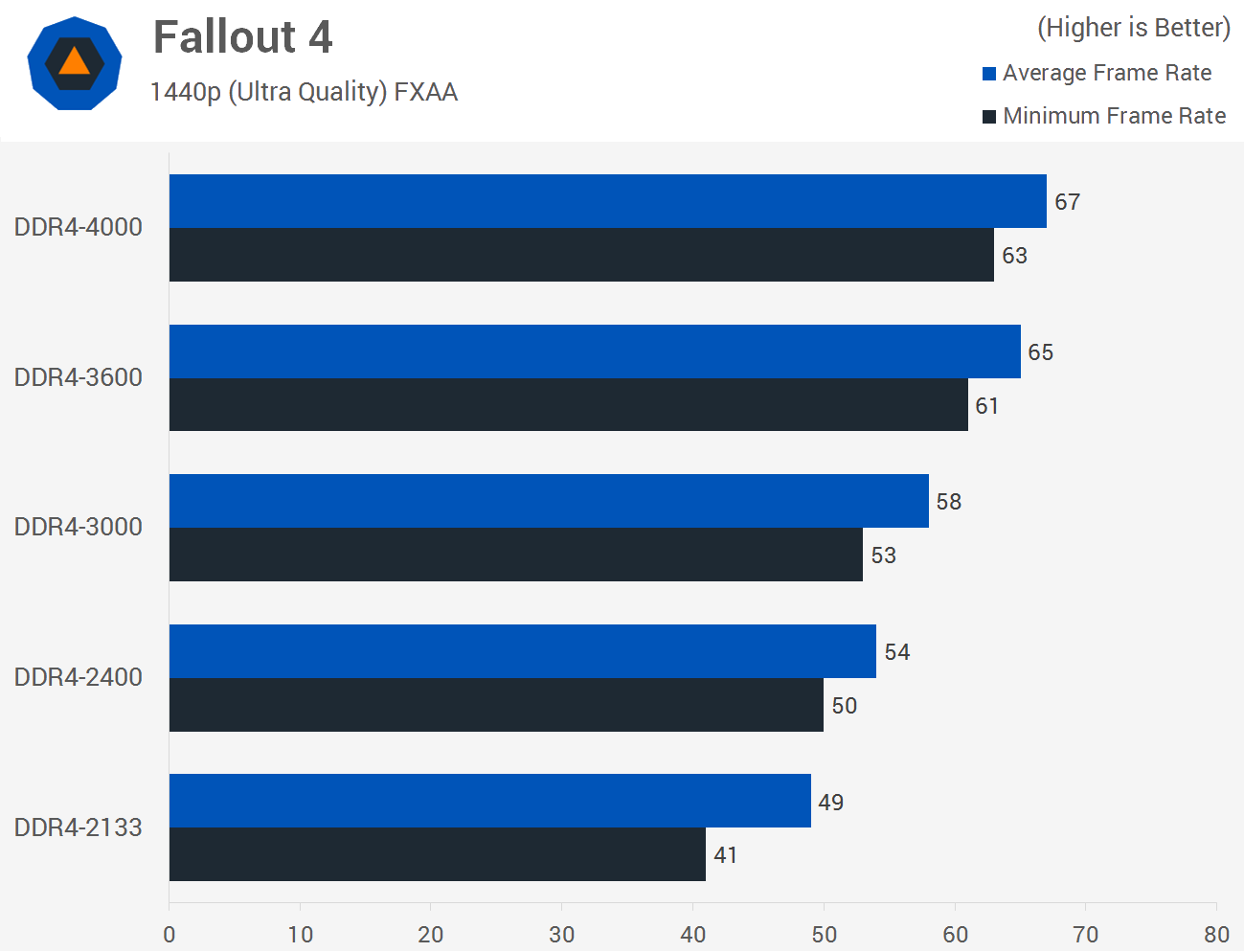

And you totally missed the link. The video was only part of it. Not only that, if you watched the full video you would know the min frame times were better with the higher-speed memory in more than 2 of the games tested......

and you totally missed the link. The video was only part of it not only that if you watched the full video you would know the min frame times were better for the higher memory in more than 2 of the games tested......

No, I skimmed the link. I didn't mention it because it's shit and does things like play up a .3 second increase in Excel as if it's significant. It's not so much a real article as it is a memory ad. And min. frame rates hardly budged, dude. Keep reaching.

Until you can show me large performance increases in line with large increases of memory bandwidth across the board in nearly all apps you've got nothing as far as I'm concerned. That is what happens when bottlenecks are fixed.

Factum

2[H]4U

- Joined

- Dec 24, 2014

- Messages

- 2,455

New poster appears, spreads a lot of AMD PR, doesn't like facts... timed PR or fanboy?

No I skimmed the link. I didn't mention it because its shit and does things like play up a .3 second increase in Excel as if its significant. Its not so much a real article as it is a memory ad. And min. frame rates hardly budged dude. Keep reaching.

Until you can show me large performance increases in line with large increases of memory bandwidth across the board in nearly all apps you've got nothing as far as I'm concerned. That is what happens when bottlenecks are fixed.

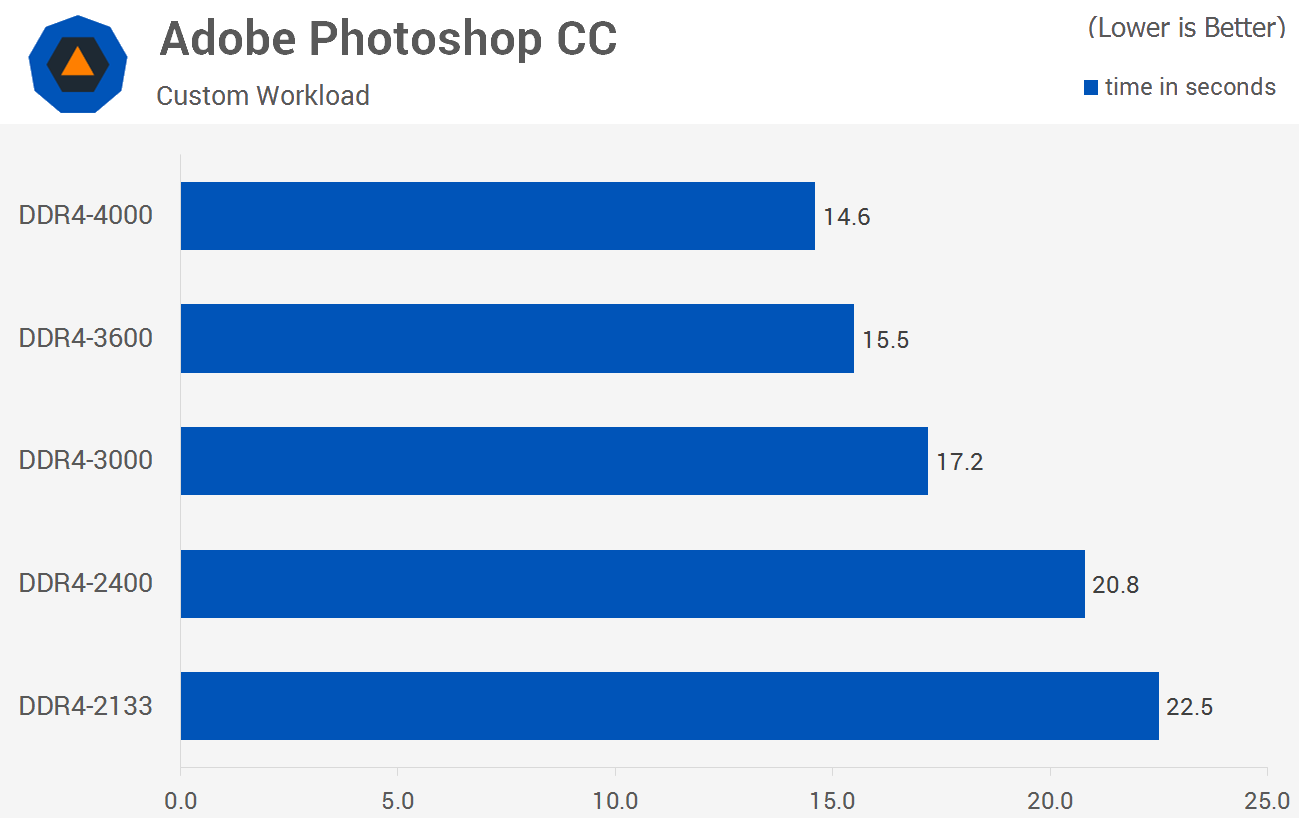

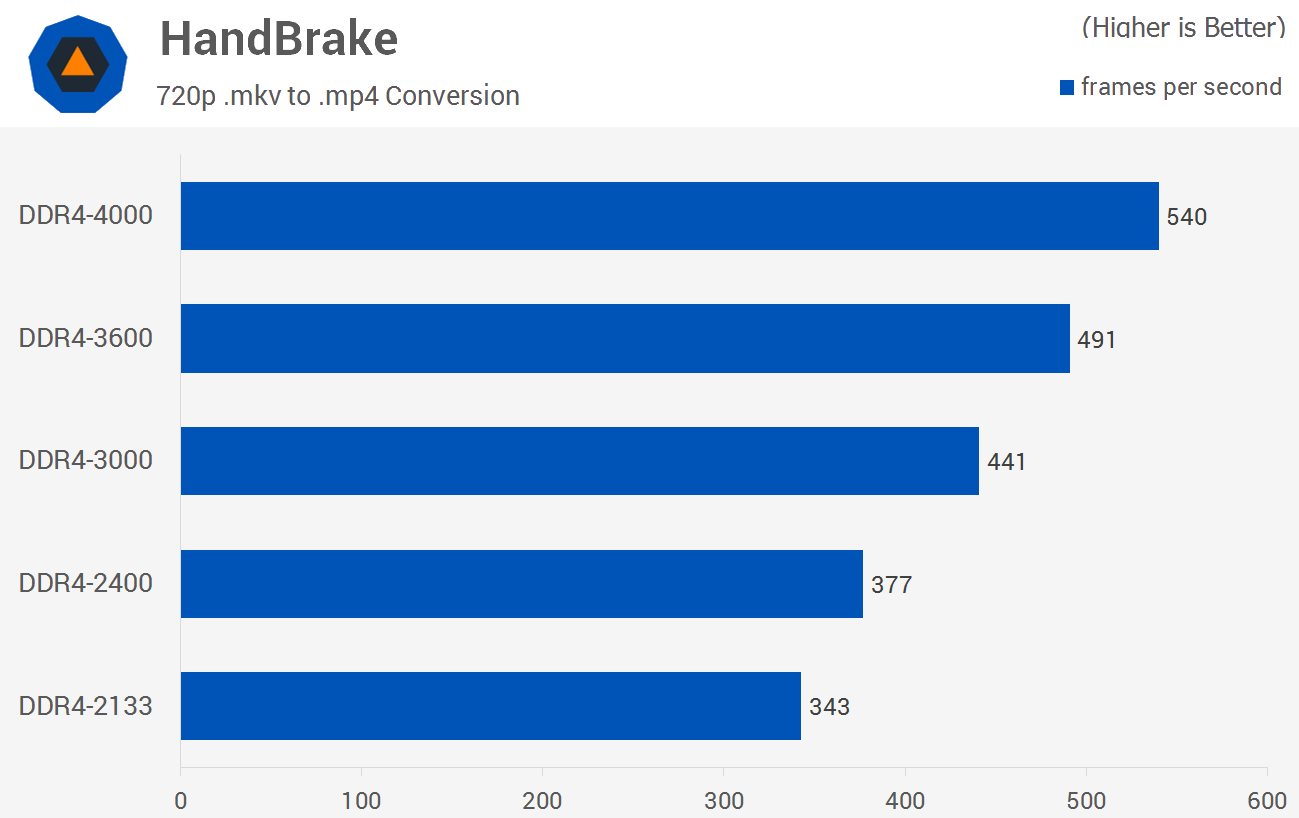

so 54% increase in app performance when doing something isn't big for you?

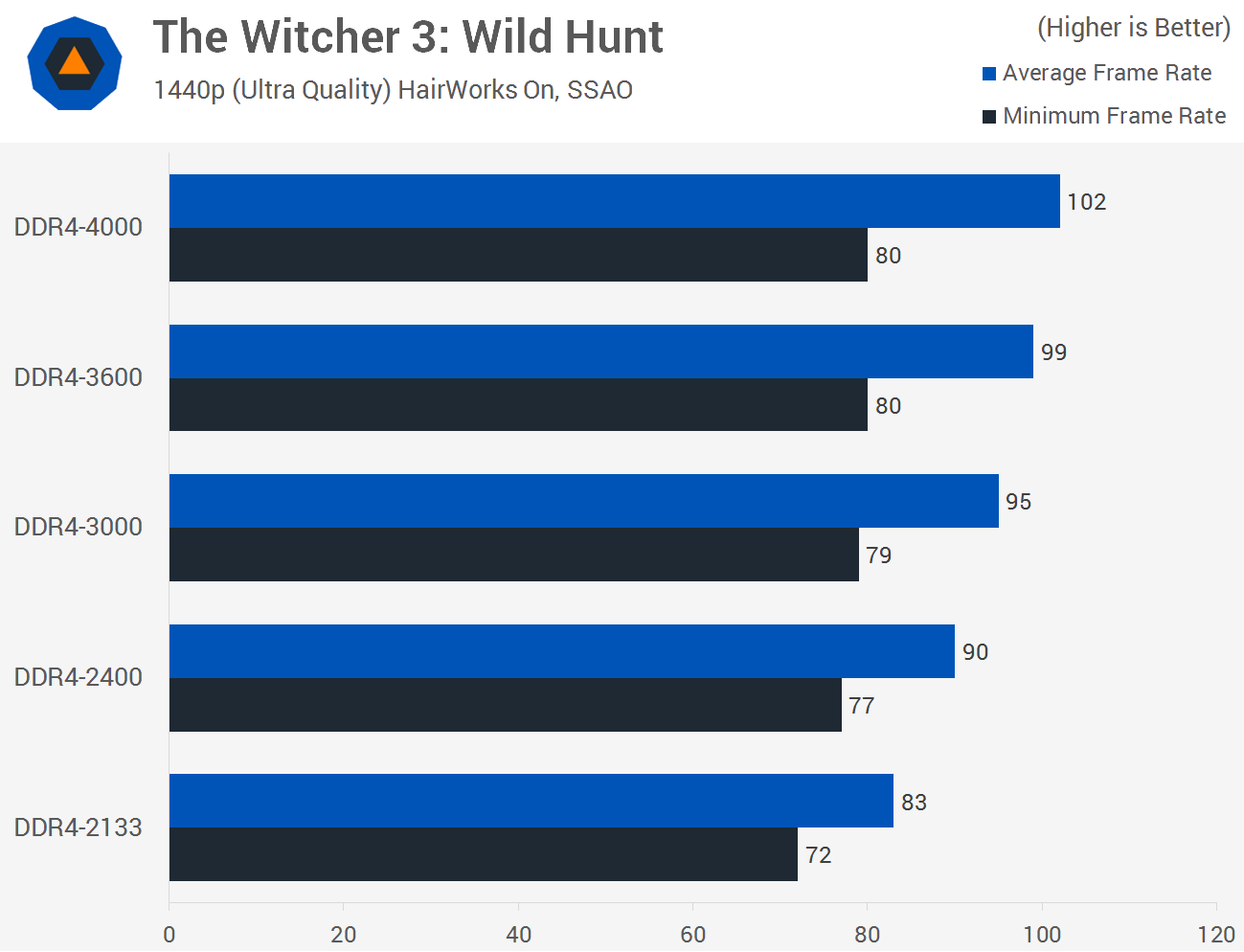

a 19% increase in frame rates in a game isn't big for you,

Going to link the graphs

Sorry but its not .3 seconds I'm looking at here

How many FPS difference is there?

That's a pretty big increase in FPS; it's nowhere near the 5%, it's double that.

Well, this one is 19%.

Highly app dependent, but they all show the same thing: Skylake is bandwidth bound.

Now this is the question I have for you: how can anyone extrapolate data when the CPU is boosting differently under different loads, and when the memory frequency and how it affects performance are unknowns too?

Orange + GRAPEFRUIT = UGLI FRUIT

That is what ya get when someone tries to extrapolate data like this.

so 54% increase in app performance when doing something isn't big for you?

Is it across the board? You know it isn't. Its application specific. We have the links on the previous page showing it frequently does nothing. So you know my answer. Stop being facetious.

Is it across the board? You know it isn't. Its application specific. We have the links on the previous page showing it frequently does nothing. So you know my answer. Stop being facetious.

Wow do you want me to quote myself when I stated that, it was well before you even started posting in this thread

What are you trying to show here? Memory bandwidth, IPC changes, what? What are you trying to baseline before you make your extrapolation? Because it all changes based on which programs you are looking at and what you are trying to equalize before you extrapolate. Pointing to different reviews and trying to show something doesn't show only one thing.

I'm not being facetious at all. I was very clear on what I was talking about and tried to clear up the mess the other guy started with.

So after showing you what I stated was on the money, now I'm acting silly, and it all doesn't matter?

Wow do you want me to quote myself when I stated that, it was well before you even started posting in this thread

That quote has nothing to do with what I've said, nor with your other claims about Skylake or Purley being bandwidth bottlenecked. It's just a vague platitude at best, and at worst it comes off to me like you're trying to discredit other sources of information.

I'm not being facetious at all. I was very clear on what I was talking about

Nope on both. The constant after-the-fact re-editing of posts, the constantly re-edited snark/insults, and the way you misattributed something to me show otherwise.

So after showing you what I stated was on the money, now I'm acting silly, and it all doesn't matter?

Silly? What?? You come off as something else to me. And it's not too hard to show other sources of information that demonstrate you're nowhere near being on the money here: http://www.silentpcreview.com/article1478-page4.html. Next page has general performance scaling with various apps with DDR4 2133 vs DDR4 3000.

Just like the previous links it shows hardly any difference. That would not be true if Skylake had a memory bandwidth bottleneck.

dude did you just show me a benchmark suite with a gt 640 with games that probably are GPU bound on that particular card?

But the next page is quite interesting, totally different than the ones I linked to, so.......

Well maybe something different with the motherboards being tested?

dude did you just show me a benchmark suite with a gt 640 with games that probably are GPU bound on that particular card?

At the resolutions they're using it wouldn't matter. Notice how they're all relatively low. There is only 1 game with fps much below 60. Improvements due to a system memory bandwidth bottleneck being alleviated would still be apparent across the board (edit) in a clear and large fashion too.

At the resolutions they're using it wouldn't matter. Notice how they're all relatively low. There is only 1 game with fps much below 60. Improvements due to a system memory bandwidth bottleneck being alleviated would still be apparent across the board.

Can't make assumptions like that. There is no way to. The GT 640 is a damn slow card; it's pretty much close to integrated graphics for last gen or maybe even 2 gens ago CPUs...... It's like 2 or 3 times slower than a 750 Ti if I'm not mistaken.

Can't make assumptions like that. There is no way to.

With a bottleneck? Yes you can. Fixing bottlenecks allows you to get large performance increases, once you fix it the difference is dramatic and obvious.

The gt 640 is a damn slow card its pretty much close to integrated graphics for last gen or maybe even 2 gens ago

Its slow but that doesn't matter for what they're trying to test. They don't need it to do 100fps at 1440p to see if there is any large improvement in performance. Especially for bottlenecks.

edit: they do have IGPU only performance tests in that same article. More bandwidth is something that will indeed help the IGPU a whole lot.

With a bottleneck? Yes you can. Fixing bottlenecks allows you to get large performance increases, once you fix it the difference is dramatic and obvious.

Its slow but that doesn't matter for what they're trying to test. They don't need it to do 100fps at 1440p to see if there is any large improvement in performance. Especially for bottlenecks.

edit: they do have IGPU only performance tests in that same article. More bandwidth is something that will indeed help the IGPU a whole lot.

If you can identify the bottlenecks and the % variation, yes, but from those results you can't.

TheLAWNoob

Limp Gawd

- Joined

- Jan 10, 2016

- Messages

- 330

Would quad channel 2133Mhz match dual channel 4000Mhz RAM?

I will be getting a Xeon E5 for my gaming rig soon and it probably doesn't support XMP.

I'm not sure why so many people are looking at the gaming results, comparing Ryzen to the 6700k, and declaring problems. Intel's own 8 core gets beaten soundly by the 6700k at gaming as well. Assuming the base clock of the retail chip is 3.4GHz (which they stated it would be), the 8 core Zen is only going to be a bit behind Intel's similarly clocked (6/8 core chips) at gaming. I don't see that as a bad result.

If gaming is all you do, then yes, the 8 core Zen chip is probably a bad buy. But by the same token, so is the 6900k. It doesn't mean they're bad chips. It means 8 core chips tend to sacrifice clock speed and that doesn't make for top of the line gaming in most games. We'll have to see if the 4/6 core Zen offerings have enough clock speed to compete. I'm personally suspecting they won't and the 6700/7700k will still be the top end for gaming, but let's not burn an 8 core chip because its gaming isn't top end.

That's a really good point, but in the graph it shows Ryzen performing significantly worse than a 6900K in gaming. I think a higher base clock should help, but I doubt it will make up the difference.

Price will be key, but the fact that people were expecting Ryzen to cost less than half as much as the 6900K (even if the performance was superior) means AMD is in a lose-lose situation.

Who can afford to produce a superior product and charge half the market price?

Palladium@SG

Limp Gawd

- Joined

- Feb 8, 2015

- Messages

- 283

Looking at the overall MT bench, Zen is at most SB IPC at 3.3GHz all core turbo. Coupled with the fact Intel is rumored to price the i3-K at an absurd $170 pretty much means AMD is again forced to significantly undercut Intel which is good or bad depending on where you stand.

That's a really good point, but in the graph it shows Ryzen performing significantly worse than a 6900K in gaming. I think a higher base clock should help, but I doubt it will make up the difference.

Price will be key, but the fact that people were expecting Ryzen to cost more than half as much less than the 6900K (even if the performance was superior) means AMD is in a lose-lose situation.

Who can afford to produce a superior product and charge half the market price?

Frank Lucas?

Given that it's a lower-clocked ES on a beta mobo, those are surprisingly good results. It will probably be much closer to the 6700k (and 7700k). Now it's all about pricing. If the top Ryzen is about 300-350 euro, it will hit Intel's 6+ core lineup and pricing hard, and will blow up their mainstream platforms.

Though, for people like me, who got a 4790k or newer chip, until games start running on more than 4 cores, there is still no need to upgrade. Maybe in 2 gens, when Intel tweaks Coffee Lake and AMD tweaks a new gen of Ryzen, I'll consider a change.

But it's good to see AMD coming back. It will force innovation, generation upgrades might finally be meaningful, and we might get a modern version of Core 2 Duo in response.

SixFootDuo

Supreme [H]ardness

- Joined

- Oct 5, 2004

- Messages

- 5,825

One thing that comes along with age is the fact, none of this really matters at the end of the day. There is a lot of spit and fire in here over a few ms of performance one way or the other. You guys do realize that right? lol

If they can deliver the Ryzen, their highest tier part, for $375 to $425, in that range, then they are going to capture the widest part of the interest spectrum for builders / upgrades. I would be one of them as I'm wanting to retire my 5820K after 2 years.

I've been pretty vocal against AMD recently. I'm hoping their new GPU and CPU get priced to where I feel comfortable moving to an all AMD platform. Hell, they even have branded Memory and SSD's. I'll go all in if the money is right. Too close to Intel and man, that's going to be a tough tough call.

Shintai

Supreme [H]ardness

- Joined

- Jul 1, 2016

- Messages

- 5,678

The article's data gives the exact picture opposite of what you're saying it does + the articles conclusion is AGAINST buying the highest speed memory possible (in fact they don't even recommend above DDR4 2666) since its effectively a waste of money. Its quite blunt about this.

edit: I never said the above + even when overclocked to 5Ghz+ Skylake doesn't see much if any benefit from more bandwidth. Its not bandwidth limited for desktop apps at all. Servers and HPC are a different story but that market buys Xeons and eventually whatever AMD calls server Zen, not HEDT chips.

Anyone should know that Skylake likes 3000Mhz+ memory to really give what it got.

Shintai

Supreme [H]ardness

- Joined

- Jul 1, 2016

- Messages

- 5,678

The Intel quotes probably came from the horse shit often posted at glassdoor. Lots of disgruntled or former employees post things there.

I wouldn't be surprised. There is always someone burning bridges when they think they are better than they are.

How many in here haven't experienced someone who didn't exactly take it well when they got laid off?

Shintai

Supreme [H]ardness

- Joined

- Jul 1, 2016

- Messages

- 5,678

Should change the title of the thread to "preview of Zen". No one reviews an ES.

Most official reviews are actually ES samples.

Shintai

Supreme [H]ardness

- Joined

- Jul 1, 2016

- Messages

- 5,678

Would quad channel 2133Mhz match dual channel 4000Mhz RAM?

I will be getting a Xeon E5 for my gaming rig soon and it probably doesn't support XMP.

Yes and no. Bandwidth yes, latency no. However with E5 etc you have a much bigger cache that gives a great benefit there. So the result would be yes and then some.
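As a rough sanity check on the bandwidth half of that answer, here is a minimal sketch of theoretical peak bandwidth. It assumes a standard 64-bit DDR4 channel and ideal back-to-back transfers; the function name is made up for illustration, and real-world throughput will be lower.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (decimal), ignoring latency and efficiency."""
    bytes_per_transfer = bus_width_bits // 8  # 64-bit channel moves 8 bytes per transfer
    return channels * bytes_per_transfer * mega_transfers_per_s / 1000

# Quad channel DDR4-2133 vs dual channel DDR4-4000 (the case asked about above)
quad_2133 = peak_bandwidth_gbs(channels=4, mega_transfers_per_s=2133)
dual_4000 = peak_bandwidth_gbs(channels=2, mega_transfers_per_s=4000)

print(f"quad channel 2133: {quad_2133:.1f} GB/s")
print(f"dual channel 4000: {dual_4000:.1f} GB/s")
```

On paper quad channel 2133 comes out slightly ahead of dual channel 4000 on bandwidth. The sketch says nothing about latency, which is the "no" part of the answer above.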

Reality

[H]ard|Gawd

- Joined

- Feb 16, 2003

- Messages

- 1,937

One thing that comes along with age is the fact, none of this really matters at the end of the day. There is a lot of spit and fire in here over a few ms of performance one way or the other. You guys do realize that right? lol

If they can deliver the Ryzon, their highest tier part for $375 to $425, in that range, then they are going to capture the widest part of the interest spectrum for builders / upgrades. I would be one of them as I'm wanting to retire my 5820K after 2 years.

I've been pretty vocal against AMD recently. I'm hoping their new GPU and CPU get priced to where I feel comfortable moving to an all AMD platform. Hell, they even have branded Memory and SSD's. I'll go all in if the money is right. Too close to Intel and man, that's going to be a tough tough call.

AMD isn't going to price 8/16 Ryzen at less than half of what intel is offering in that bracket, you people are crazy. top end Ryzen will be at least $500 USD, if not more.

Tup3x

[H]ard|Gawd

- Joined

- Jun 8, 2011

- Messages

- 1,942

AMD isn't going to price 8/16 Ryzen at less than half of what intel is offering in that bracket, you people are crazy. top end Ryzen will be at least $500 USD, if not more.

Good luck to AMD trying to sell those chips then. Personally I think it should cost less than Intel's 6 core CPUs because the AM4 platform is much simpler. Octa core Zen should be comparable in size to a mainstream quad core i7 and the platform is similar, so I don't see why it should cost more. Also 6 core i7s aren't >500 € chips and I'm pretty sure that AMD wants to undercut those.

SixFootDuo

Supreme [H]ardness

- Joined

- Oct 5, 2004

- Messages

- 5,825

Don't let ES / Beta this or that warp your expectations. These numbers are going to be pretty close to retail.

edit: Ok damn I can't find the article I was just reading. I was going to link it here to make my point.

Some web site took ES chips and their retail counterparts and benched them. The difference was very very slight. There were about 4 or 5 chips.

If someone knows the article I am talking about please link it.

edit: Never mind they were Intel chips

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,567

Thanks to the OP at /r/AMD_Stock, we have all the pages on the Zen preview,

The album of photos: http://imgur.com/a/KOXPd

Also included are comments summarizing CanardPC's article on Intel's outlook, including this:

It does jibe with a few things I heard from people I know in the industry. The current CEO of Intel seems a lot like Hector Ruiz was for AMD. But from what little I know I think there is some truth to that posting, take it for what it's worth.

From a performance standpoint it boils down to max attainable frequency; at 4GHz it would trade blows with a stock 6900K in threaded applications (and Broadwell has virtually no clock headroom), while more or less matching the 6700K in games. If it's stuck at 3.4GHz (which isn't a stretch, remember how poorly Broadwell clocked?) it better be $349.

At the very least it looks like we're back to the Phenom II vs i7-9xx days, AMD will give you significantly better multithreaded performance for less while not being awful at single threaded applications (unlike the disaster that was Bulldozer); I'd probably still buy Intel as my primary gaming rig, but Ryzen will put a cap on how much Intel can charge.

SixFootDuo

Supreme [H]ardness

- Joined

- Oct 5, 2004

- Messages

- 5,825

I don't care about the cores as much but I do love my 6 core 12 threaded 5820K.

Unless this chip is around $350 then I will stick with Intel. Anyone can buy a 5820k at Microcenter for $299 or less on eBay used.

You can get 4.6ghz out of a 5820k @ 1.35v and good cooling.

@ 4.6ghz and 3000mhz DDR4 I just really don't need anything.

Lisa implied the boost wasn't working, and the probable reason she showed 16 thread tests rather than 1 thread tests is that the boost indeed wasn't working.

So there's no use to these (leaked?) game tests. The real product could be 400MHz different, which is a lot.
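To put that 400MHz in perspective, here is a quick sketch of the relative clock difference, assuming the 3.4GHz base clock mentioned earlier in the thread. Treating performance as scaling linearly with clock is an assumption, and at best an upper bound for CPU-limited workloads.

```python
def clock_uplift_percent(base_mhz: float, delta_mhz: float) -> float:
    """Relative clock change in percent; not a measured performance figure."""
    return 100.0 * delta_mhz / base_mhz

# Assumed values: 3400 MHz base clock, 400 MHz of possible boost on retail parts
uplift = clock_uplift_percent(base_mhz=3400, delta_mhz=400)
print(f"{uplift:.1f}% higher clock")
```

That works out to a bit under 12% more clock, which is in the same range as the gaps being argued over in the leaked game results.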

Shintai

Supreme [H]ardness

- Joined

- Jul 1, 2016

- Messages

- 5,678

lisa implied the boost wasn't working. and the probable reason she showed 16 thread tests rather than 1 thread tests is because the boost indeed wasn't working.

so there's no use to these (leaked?) game tests. the real product could be 400mhz different, which is a lot

The reason could also be that there wasn't room for it. Power consumption was up at the TDP limit. And we got plenty of hints that boost will depend on cooling solutions and temperature. So from the sound of it, boost will be quite random from user to user.

High power consumption and poor game performance. Yep it's another AMD chip

https://www.techpowerup.com/228684/amd-ryzen-demo-event-beats-usd-1-100-8-core-i7-6900k-with-lower-tdp

"Again at 3.4 GHz, Ryzen was shown "beating the game frame-rates of a Core i7 6900K playing Battlefield 1 at 4K resolution, with each CPU paired with an Nvidia Titan X GPU".

Yep, indeed it's another AMD chip, how boring

Shintai

Supreme [H]ardness

- Joined

- Jul 1, 2016

- Messages

- 5,678

https://www.techpowerup.com/228684/...eats-usd-1-100-8-core-i7-6900k-with-lower-tdp

"Again at 3.4 GHz, Ryzen was shown "beating the game frame-rates of a Core i7 6900K playing Battlefield 1 at 4K resolution, with each CPU paired with an Nvidia Titan X GPU".

Yep, indeed it's another AMD chip, how borring

It didn't beat the 6900K in BF1. According to people there, Ryzen was quite a bit off it. The 6900K gave 10%+ higher frame rates and, unlike Ryzen, was GPU limited.

If I recall correctly it was "comparable" in the live stream, but then in the press it became "beating".

https://hardforum.com/threads/amd-zen-performance-preview.1908926/page-14#post-1042703485

TheLAWNoob

Limp Gawd

- Joined

- Jan 10, 2016

- Messages

- 330

It didn't beat 6900K in BF1. From people there Ryzen was quite a bit from it. 6900K gave 10%+ higher frame rates and unlike Ryzen was GPU limited.

If I dont recall wrong it was "comparable" in the live stream , but then the press it became beating.

https://hardforum.com/threads/amd-zen-performance-preview.1908926/page-14#post-1042703485

Damn, I almost mistook you for an Intel fanboi until I saw the facts.
