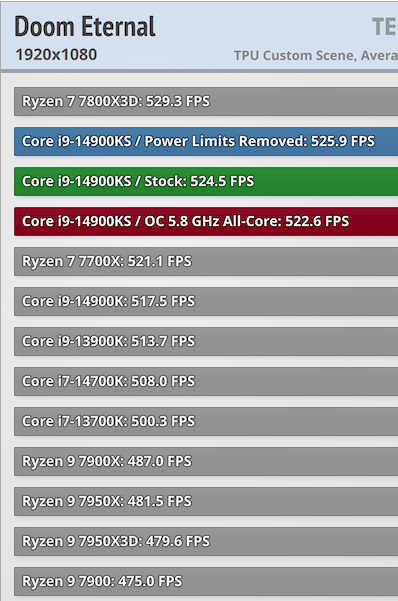

People need to stop saying "best of both worlds." It isn't; it's a compromise. A 7950X3D is still slower than a 7950X in most non-gaming scenarios. "Best of both worlds" means just that: BEST. It would have to be faster than a 7950X in non-gaming workloads and faster than a 7800X3D in gaming workloads.

It's faster than anything but the 7950X in non-gaming workloads, and faster than anything in gaming workloads. With V-Cache on both dies, it'd be even slower in non-gaming workloads and no faster in gaming workloads. So it's the best compromise possible with current technology: 2-3% faster in gaming than anything else, and 2-3% slower in non-gaming than the absolute best. Happy?
