Zarathustra[H]

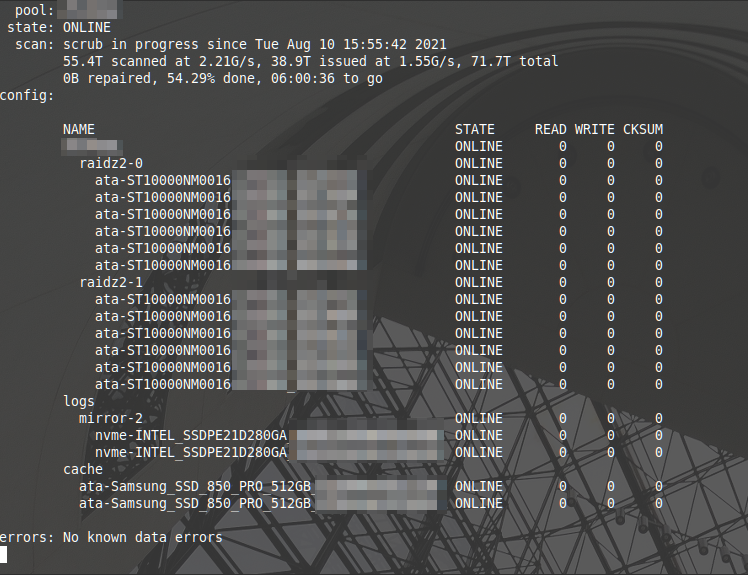

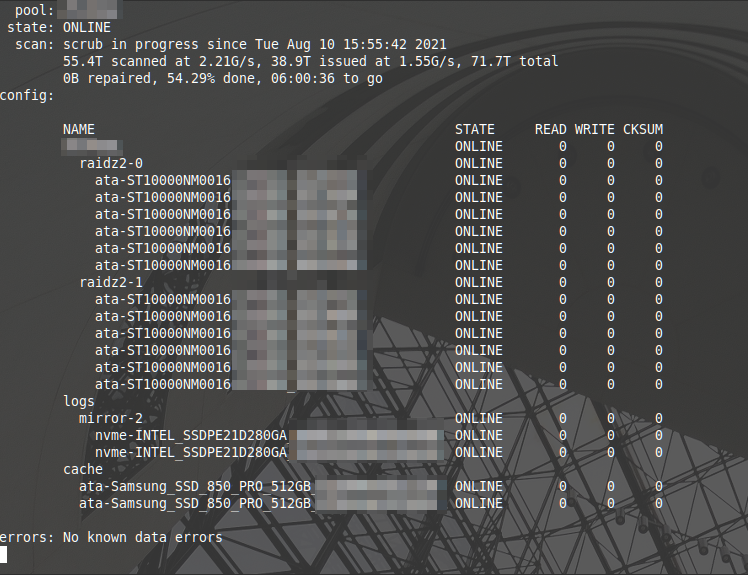

I was running a scrub today after (hopefully) tracking down a very annoying SAS cable issue that had been causing errors, and I was reminded how cool it is to watch these arrays tear through massive amounts of data during scrubs and resilvers. So I figured I'd post mine.

What have you got?