I figured this would be the right place to share some of my findings and recent tests with ZFS, since a lot of people here seem to have taken an interest in it over the past few months.

I have a fairly good-sized ZFS storage server in production (15TB, 21 spindles) that has been running for the past 2-3 years without a real hitch.

We are looking to change the performance characteristics of this machine to bring its speed up to what I was initially expecting. Since I cannot take this box down at the moment, I threw some spare parts together to test whether it's even possible to get the speeds we need out of this kind of storage device.

The current machine is set up as a storage server, mostly serving larger files and acting as a backup machine for /home as well as most of our applications. Its speed, however, has not been up to my standards (what I would expect from a machine with this kind of hardware and this spindle count). Copying from the server to a Windows 7 machine I get ~52MB/s; copying from that same machine to the server I get ~70MB/s. The array in the Windows 7 machine is capable of 200+MB/s, so that's not the bottleneck, and everything is connected over GigE (I will be playing with different NICs later). The drives in the server are configured as three 7-drive RAIDZ2 vdevs striped together, so the array should easily saturate a GigE link.
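As a rough sanity check of that last claim, here is a back-of-envelope sketch. The per-disk rate of 80 MB/s is an assumption for illustration, not something measured on this box; the point is only that 15 data disks should comfortably exceed what one gigabit link can carry, which puts the observed 52 and 70 MB/s well under line rate.

```python
# Back-of-envelope check: can 3 x 7-drive RAIDZ2 outrun one gigabit link?
# ASSUMPTION: ~80 MB/s sustained sequential per data disk is a hypothetical,
# conservative figure for a 1TB 7200 rpm drive, not a measured value.

GIGE_LINE_RATE_MB_S = 1_000_000_000 / 8 / 1_000_000  # 125.0 MB/s (decimal MB)


def raidz_data_disks(vdevs: int, disks_per_vdev: int, parity: int) -> int:
    """Disks per vdev that hold data rather than parity, summed over all vdevs."""
    return vdevs * (disks_per_vdev - parity)


def streaming_estimate_mb_s(data_disks: int, per_disk_mb_s: float) -> float:
    """Naive estimate: large sequential I/O spread evenly over every data disk."""
    return data_disks * per_disk_mb_s


data_disks = raidz_data_disks(vdevs=3, disks_per_vdev=7, parity=2)
estimate = streaming_estimate_mb_s(data_disks, per_disk_mb_s=80.0)

print(f"data disks: {data_disks}")                        # 15 (15 x 1TB = the 15TB quoted)
print(f"naive pool estimate: {estimate:.0f} MB/s")        # 1200 MB/s
print(f"GigE line rate: {GIGE_LINE_RATE_MB_S:.0f} MB/s")  # 125 MB/s
for label, observed in (("server -> Win7", 52.0), ("Win7 -> server", 70.0)):
    print(f"{label}: {observed / GIGE_LINE_RATE_MB_S:.0%} of line rate")  # 42%, 56%
```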

But since I can't really do a lot of testing on that machine (I'm limited to off hours at the moment, and I don't want to stay up that late), I have set up another small lab to simulate the environment we would like to build, so that I can get some rough performance numbers.

__________________________________________________

We would like to set up a ZFS-backed ESXi storage pool to store VMs on and move away from DAS: basically a ZFS SAN, if you will (and without the 100K price tag!).

The current draft of the final deployment is an LGA 1155 Sandy Bridge Xeon, 16-24GB RAM (depending on what the motherboards offer), an LSI 1068E HBA, an HP expander, 21 spindles (currently 21 x 1TB Seagate 7200.12s, though we will move to 2-3TB drives within 16 months), and either a dual- or quad-port Intel NIC. ESXi would be installed to an internal flash drive, and VT-d would pass the controller through to a FreeBSD VM (whatever -RELEASE version is current at the time), which will run ZFS on the attached drives. This way we can use the machine's idle cycles for a few extra things (secondary DNS server, backup domain controller, etc.). The machine would again act as a central file server, as well as the backing store for other ESXi hosts.
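A quick sketch of what the drive upgrade would mean for capacity, assuming (this isn't decided above) that the new box keeps the same 3 x 7-drive RAIDZ2 layout. The numbers are raw data capacity before ZFS metadata and reserved space.

```python
# Rough capacity projection for the planned 21-spindle build.
# ASSUMPTION: the new pool keeps the current 3 x 7-drive RAIDZ2 layout.
# Figures ignore ZFS metadata, padding and reserved space, so real usable
# space will be somewhat lower.

TB = 10**12   # drive vendors' decimal terabyte
TIB = 2**40   # binary tebibyte, closer to what the OS reports


def raidz2_data_bytes(vdevs: int, disks_per_vdev: int, drive_tb: float) -> float:
    """Raw data capacity: RAIDZ2 spends two disks per vdev on parity."""
    data_disks = vdevs * (disks_per_vdev - 2)
    return data_disks * drive_tb * TB


for drive_tb in (1, 2, 3):
    raw = raidz2_data_bytes(vdevs=3, disks_per_vdev=7, drive_tb=drive_tb)
    print(f"{drive_tb}TB drives: {raw / TB:.0f} TB ({raw / TIB:.1f} TiB) before ZFS overhead")
```

With 1TB, 2TB and 3TB drives this works out to 15, 30 and 45 TB, or roughly 13.6, 27.3 and 40.9 TiB as the OS would report it.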

The simple test lab I currently have set up should let me explore nearly all of the possible configurations to see whether the above would be suitable (minus VT-d, but we can gamble on that).

Configuration of the assembled test lab is as follows:

Storage server:

Xeon 3075 (dual-core, 2.66GHz)

8GB RAM

Single Broadcom GigE NIC

LSI 1068E controller

6x 1TB 7200.12 Seagate drives

1x 60GB Mushkin Callisto SSD (for playing with cache drive configs, etc.)

HP ProCurve Layer 3 GigE switch (can't remember the model, I think it's a 2848)

ESX box:

2x 2.8GHz Xeon

3GB RAM

2x 146GB SCSI HDDs

2x Intel GigE NICs

The storage box currently runs FreeBSD 8.1-RELEASE, installed on its own drive on the onboard controller. The six 1TB drives are connected to the 1068E controller (the SSD is on the onboard controller), and the entire 1068E-backed zpool is exported via NFS through the ProCurve to the ESX 4.1 box (NFS has its own NIC on the ESX box).

I have a Windows XP VM and a Windows 7 VM loaded on the ESX box, with ESX carving out a 25GB test drive for each. Only one VM runs at a time, so there should be zero load on the storage machine apart from that VM (and whenever ESX polls it).

I ran a few tests: a 6-drive RAIDZ; the same 6-drive RAIDZ with the ZIL disabled via vfs.zfs.zil_disable=1 in loader.conf; the SSD attached as a ZIL device (ZIL re-enabled); the SSD on its own (I would have assumed the SSD alone would max out the Ethernet connection...); and lastly a 6-drive stripe, then the same stripe with the SSD added as a ZIL device.
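To keep the runs straight, the test matrix can be written down as the zpool commands that would build each pool. This is a hypothetical helper, not what was actually typed: the pool name tank and the device names da0-da5 (1068E disks) and ada0 (SSD) are placeholders, and it assumes the SSD-as-ZIL run was added to the RAIDZ pool. The ZIL-disabled cases differ only in the loader.conf tunable, which needs a reboot to take effect.

```python
# Hypothetical helper: the test matrix expressed as zpool create commands.
# ASSUMPTIONS: pool name "tank", the six 1068E disks as da0-da5 and the SSD
# as ada0 are illustrative only; check `camcontrol devlist` for real names.
from __future__ import annotations

from dataclasses import dataclass

HDDS = [f"da{i}" for i in range(6)]
SSD = "ada0"


@dataclass
class TestCase:
    name: str
    vdev: list[str]              # data vdev spec, e.g. ["raidz", "da0", ...]
    log: str | None = None       # separate ZIL (log) device, if any
    zil_disabled: bool = False   # vfs.zfs.zil_disable=1 in /boot/loader.conf + reboot

    def create_cmd(self, pool: str = "tank") -> str:
        parts = ["zpool", "create", pool, *self.vdev]
        if self.log:
            parts += ["log", self.log]
        return " ".join(parts)


TESTS = [
    TestCase("raidz, ZIL enabled", ["raidz", *HDDS]),
    TestCase("raidz, ZIL disabled", ["raidz", *HDDS], zil_disabled=True),
    TestCase("raidz + SSD ZIL", ["raidz", *HDDS], log=SSD),
    TestCase("SSD only, ZIL enabled", [SSD]),
    TestCase("SSD only, ZIL disabled", [SSD], zil_disabled=True),
    TestCase("stripe, ZIL enabled", HDDS),   # plain disks = striped pool
    TestCase("stripe + SSD ZIL", HDDS, log=SSD),
]

for t in TESTS:
    note = "  (needs vfs.zfs.zil_disable=1 + reboot)" if t.zil_disabled else ""
    print(f"{t.name:24} {t.create_cmd()}{note}")
```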

All in all, these benchmarks have me scratching my head a bit, and I'm starting to point my finger at the ESX NFS daemon. Also, watching disk performance with gstat, I see that the SSD topped out at around 65MB/s or 4200 IOPS... not even close to what I would expect from it.

I am currently installing a test FreeBSD server to mount the NFS share directly, bypassing the ESX NFS daemon completely, and check the performance there.
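The gstat numbers also say something about the workload. Dividing throughput by IOPS gives the average request size; a small sketch, assuming gstat's kBps column is in 1024-byte units:

```python
# What average request size do gstat's numbers imply?
# ASSUMPTION: "MB" here means MiB (gstat's kBps taken as KiB/s),
# so 65 MB/s is treated as 65 * 1024 KiB/s.


def avg_io_kib(throughput_mib_s: float, iops: float) -> float:
    """Average size of one I/O in KiB = KiB per second / operations per second."""
    return throughput_mib_s * 1024 / iops


size = avg_io_kib(65, 4200)
print(f"average I/O size: {size:.1f} KiB")  # 15.8 KiB
```

That is roughly 16 KiB per request (about 15 KiB if MB is read as decimal), so the SSD was servicing many small writes rather than large streaming ones, while SSD headline throughput figures are usually quoted for much larger transfers.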

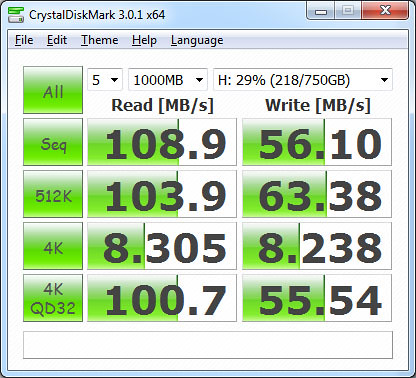

The included screenshots show the benchmarks from my Windows test VMs. I did one run with Windows 7, since I know it has a much more robust TCP/IP stack than XP, though it produced some pretty odd results... those can probably be attributed to OS caching.

My big question: I have not used NFS in a deployment like this before, so would these benchmarks be considered normal? Does the NFS protocol have a large overhead? Many of these benchmarks seem to show a hard cap at ~80MB/s. Would these results be considered enough for an NFS-backed ESXi datastore? I came into this fully expecting to pin the GigE line at at least 100MB/s both ways...
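For reference, here is the theoretical ceiling for TCP payload over gigabit Ethernet with a standard 1500-byte MTU. The header sizes are standard Ethernet/IPv4/TCP values; it assumes TCP timestamps are on and no VLAN tags or jumbo frames are in use. NFS/RPC headers would take a little more off the top and are not modelled.

```python
# Theoretical TCP payload ceiling on gigabit Ethernet.
# Per-frame wire overhead: 8 preamble/SFD + 14 Ethernet header + 4 FCS + 12 inter-frame gap.
# ASSUMPTION: IPv4 (20 bytes) + TCP with timestamps (32 bytes), no VLAN tag.

LINK_BPS = 1_000_000_000
WIRE_OVERHEAD = 8 + 14 + 4 + 12   # bytes on the wire around each IP packet
IP_TCP_HEADERS = 20 + 32          # IPv4 + TCP incl. timestamp option


def tcp_payload_mb_s(mtu: int = 1500) -> float:
    """Best-case TCP payload rate in decimal MB/s for a given IP MTU."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + WIRE_OVERHEAD
    return LINK_BPS / 8 * payload / on_wire / 1_000_000


print(f"max TCP payload: {tcp_payload_mb_s():.1f} MB/s")      # 117.7 MB/s
print(f"~80 MB/s is {80 / tcp_payload_mb_s():.0%} of that")   # 68%
```

So roughly 118 MB/s is the best case on this link, and an ~80 MB/s cap sits at about two thirds of it. Passing mtu=9000 models jumbo frames (about 123.8 MB/s), assuming every hop were configured for them.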

I also know the ZIL-enabled results showing ~5MB/s writes are due to ESXi forcing every write to be committed to stable media with sync commands.

Sorry for the long-winded post.
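A rough sketch of why sync writes hurt so much on spinning disks. Assuming one outstanding synchronous write at a time and a hypothetical 32 KiB write size (not measured from ESXi), ~5 MB/s implies one commit every ~6 ms, the same order of magnitude as a 7200 rpm disk's average rotational latency plus a short seek.

```python
# If every write must reach stable storage before the next is acknowledged,
# throughput ~= write size x commits per second.
# ASSUMPTIONS: one outstanding sync write at a time, a hypothetical 32 KiB
# write size, and ~5 MB/s read as 5 MiB/s.

RPM = 7200


def avg_rotational_latency_ms(rpm: int) -> float:
    """On average the platter turns half a revolution before the target sector arrives."""
    return 60_000 / rpm / 2


def implied_commit_ms(throughput_mib_s: float, write_kib: float) -> float:
    """Time per commit implied by an observed throughput and a given write size."""
    commits_per_s = throughput_mib_s * 1024 / write_kib
    return 1000 / commits_per_s


print(f"avg rotational latency @ {RPM} rpm: {avg_rotational_latency_ms(RPM):.2f} ms")  # 4.17 ms
print(f"5 MiB/s of 32 KiB sync writes -> {implied_commit_ms(5, 32):.2f} ms per commit")  # 6.25 ms
```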

RAIDZ, ZIL enabled

RAIDZ, ZIL disabled

SSD only, ZIL enabled

SSD only, ZIL disabled

SSD only, ZIL disabled (Windows 7)

Stripe, ZIL enabled

Stripe, SSD as ZIL device

