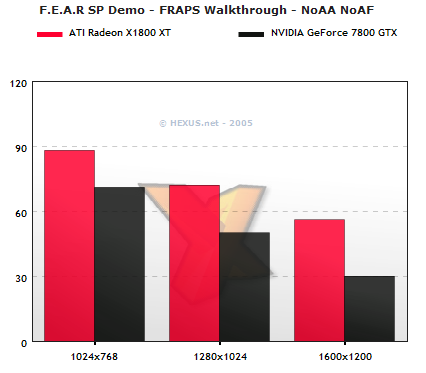

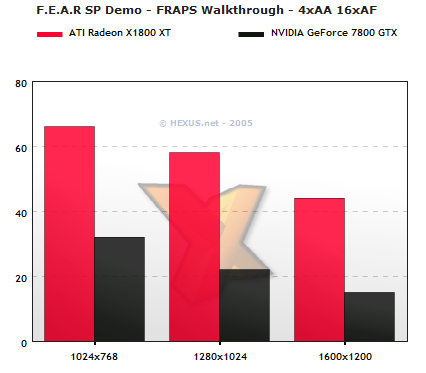

WOW... a picture is worth a thousand words...

ATi has proved that you don't need 24 pipes to get performance... with their X1800 XT that has 16 pipes... it kills!

Btw, this review was done with the Catalyst 5.9 drivers, which aren't even optimized for the X1K cards!

P.S. Damn, the X1600, which is 128-bit, beats the X800 XL!

Link: http://www.hothardware.com/viewarticle.cfm?articleid=734&cid=2
