Why OLED for PC use?

- Thread starter Quiz

- Start date

But which display can show pictures of Spider-Man?!

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

The truth:

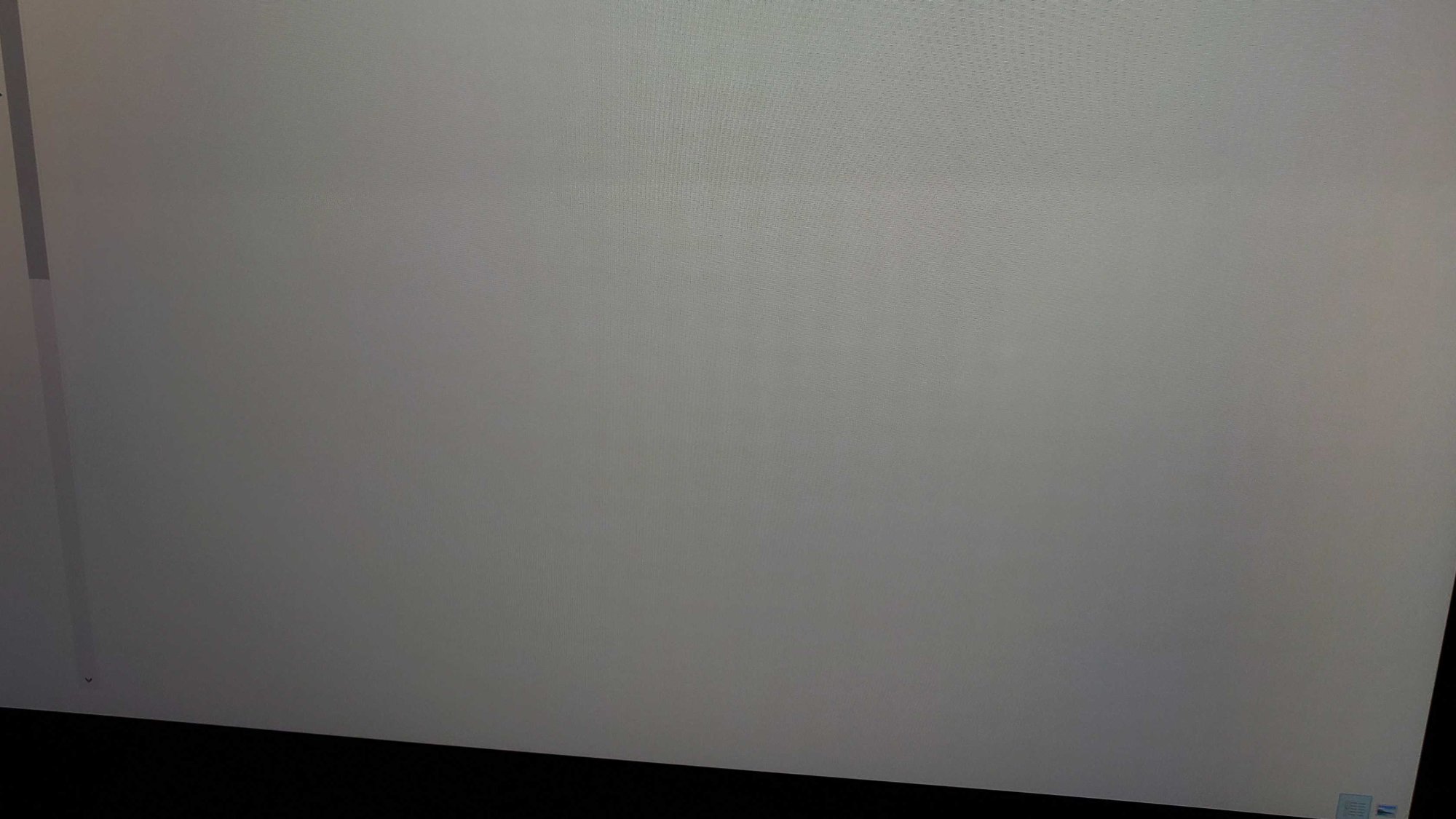

PG35VQ HDR vs AW3423DW HDR

PG35VQ SDR vs AW3423DW HDR

@kramnelis

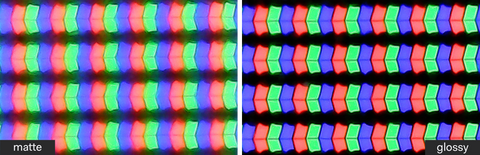

I've been saying this for a while on YT: AW3423DW is not a good screen in terms of brightness and the picture looking fuzzy. When I got the LG C2, it was like night and day in terms of POP and being more clear. It's not the coating making for a slightly veiled "matte" look, but either the pixel structure or the quantum dot layer is to blame for a fuzzy picture. It's also not the PPI. I don't give a rat's ass about text fringing, you use 150% at 4K anyway, so that pretty much disappears with bigger text.

WOLED isn't perfect either, a glossy LCD RGB stripe is slightly crisper even with 2.5 points lower PPI. But the difference between the 34" QD-OLED Alienware and LG C2 is much bigger than LG C2 vs. my glossy 21.5" 1080p LCD.

Still would take ABL drawbacks over shit blacks, haloing and motion issues.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

That's why you can only see SDR on OLED.

Any actual HDR image on OLED always looks this bad. With a small tweak of the EOTF, a FALD LCD can have blacks as deep as OLED.

xDiVolatilX

2[H]4U

- Joined

- Jul 24, 2021

- Messages

- 2,514

Yup, everything about OLED is dim, especially HDR. You should see my QN90B in HDR with FALD and Contrast Enhancer both on high. Holy shit, it's jaw-dropping at full brightness in all its glory. It's a sight to behold.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

There should be a 3rd misconception.

An image averaging 100 nits with a few dots of 1000 nits is not exactly HDR.

It's an iceberg or even less. It doesn't have HDR 10-bit color. It's marketing material, as 99% of the image is still in SDR range. SDR can do a similar thing.

I just don't agree with the premise that HDR can't be impactful on an OLED. My experience says something different, despite expecting to reach that conclusion as well. Honestly, when I bought it, I had kind of written HDR off and figured I'd want to use SDR for most things because I assumed HDR wouldn't be impactful, and I'm kind of blown away how well HDR works (native HDR - not a fan of the AutoHDR where it tries to force SDR into an HDR space). I did do the calibration so Windows knows where the limits are, etc. And some of the games like Cyberpunk have their own calibration.

I've played around with two different native HDR games (Cyberpunk 2077 and Dead Space) as well as some HDR YouTube videos since going OLED, and the HDR seems plenty impactful, a lot more than I expected to be frank. Bright sections (police headlights in the beginning sequence of Cyberpunk 2077, or the sun through the spaceship window in Dead Space, or showers of sparks in Dead Space, or flames and overhead lights in HDR video demos on YouTube...but even full-field stuff like a sunny beach look good). That's in no way to say on a FALD you're not going to have higher brightness, but especially in a dark room, it's plenty bright on an OLED in 99% of scenarios (well, all of the ones I've tried so far; I can't pick out a problematic scene yet).

Sure, if you compare side-by-side, the FALD is going to be brighter (my TV is FALD and the HDR is brilliantly bright), but that doesn't mean you can't have a very enjoyable HDR experience on an OLED panel, and I've been doing just that and think it looks great. HDR is bright enough to make me flinch, and at least so far in my limited testing, I haven't noticed any real ABL problems for the HDR content I've seen thus far.

Now, if you want dim, my first LG OLED TV, before they had tone mapping, had absolutely miserable HDR, and I thought the problem was with me. Things were legitimately too dim to see most of the time. I had no idea why I disliked HDR so until I upgraded to a FALD TV and saw what HDR was really supposed to look like. That said, that is NOT an issue here on a modern LG OLED panel. HDR looks great! My TV can go brighter in HDR, but the perfect blacks here help make up for that, and I'd honestly say *in a dark room* it's a tossup as to which I'd want to play on in HDR.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

To see accurate images, HDR needs to be viewed in a dark room regardless of the content.

What you described still belongs to the kind of image that SDR can do similarly. Such images might be pleasant to look at but won't be anywhere near impactful or realistic, because a few dots of highlight don't show color, while high-APL images show both contrast and color. This is where HDR shines the most. And most people ignore that only high brightness can show the 1024 shades of 10-bit color.

Eyes can flinch at an average 100nits image with a few dots of 600nits flashlight against 1nits black background. This is how the eyes work. But when there is an average 400nits APL images, eyes will adapt to it without issues if the monitor is flicker-free.

Windows Auto HDR corresponds to the range of both color and contrast. If a monitor is capable of HDR 1000, the SDR will be scaled to HDR 1000. If the monitor is good at HDR, Auto HDR will look good.

Choosing a monitor is a choice between contrast, color, flickers, response time.

Respectfully, I see a world of difference between the SDR and HDR versions of these games. Don't get me wrong - the SDR looks good, but you can tell pretty quickly it's SDR and the highlights don't pop nearly as much as the HDR version. Perfect blacks help the contrast, and I haven't any complaints as to color quality for the content I've seen. That's not to say a tech with higher brightness/high APL doesn't have better colors - I believe you, and it makes sense. But anecdotally, my experience is games and such in HDR still looks very very good with a lot more impact than SDR, so it's still worth using HDR on OLEDs in my opinion.

I don't tend to like Auto HDR on anything, regardless of the monitor quality; it looks okay on some things but not so great on others. That's down to personal preference tho'. If something isn't built for HDR, too many possible unintended effects. (I don't like it on my brighter FALD TV either). I prefer the look something was natively made for (SDR or HDR respectively), generally speaking.

As far as flicker, I haven't noticed anything perceptible. I'd actually grade this OLED monitor much easier on the eyes overall than most past ones I've used. I'm sure some people are more sensitive than others tho'.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

SDR on OLED tops out at only 250 nits, while SDR on FALD LCD is 500-600 nits, similar to HDR 400. There is more pop between SDR sRGB at 80 nits and HDR 400. But there isn't much difference between SDR Adobe at 400 nits and HDR 400.

Only true HDR monitors can look good with Windows Auto HDR, as it showcases the range of the monitor in both contrast and color. Most monitors only get contrast with Auto HDR.

Why would I want SDR above 120 nits?

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

On true HDR monitors, 400-nit SDR is basically HDR400 compared to the original sRGB 80 nits, while true HDR1000 is nowhere near what a monitor like the AW3423DW is capable of.

It means every SDR image I see has at least 4x the range of the original sRGB 80 nits, which won't look anywhere near as good. These monitors can turn SDR into HDR.

OLED cannot do this. OLED doesn't even have the Adobe color space. It only has DCI-P3.

AwesomeOwl

Weaksauce

- Joined

- Feb 15, 2011

- Messages

- 88

> Why would I want SDR above 120 nits?

Perhaps the sun is shining on your monitor, or maybe you are on a crusade to save humanity from the evil OLED by showing them the divine light of the FALD LCD - halos and all.

> On true HDR monitors, 400-nit SDR is basically HDR400 compared to the original sRGB 80 nits, while true HDR1000 is nowhere near what a monitor like the AW3423DW is capable of.

SDR is SDR. It will never not be SDR. SDR on an HDR monitor shouldn't be any different from SDR on an SDR monitor. What is the difference between your imaginary "SDR Adobe 400nits" and SDR on a regular old-fashioned 400-nit-capable SDR monitor?

> It means every SDR image I see has at least 4x the range of the original sRGB 80 nits, which won't look anywhere near as good.

What?

> These monitors can turn SDR into HDR.

Very true. No monitor can do that, since it is literally impossible as there is no hidden information stored in an SDR image that can be magically revealed or enhanced.

> OLED cannot do this.

Do what?

> OLED doesn't even have the Adobe color space. It only has DCI-P3.

Right... Which is a huge problem... Or maybe not...

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

> SDR is SDR. It will never not be SDR. SDR on an HDR monitor shouldn't be any different from SDR on an SDR monitor. What is the difference between your imaginary "SDR Adobe 400nits" and SDR on a regular old-fashioned 400-nit-capable SDR monitor?

If only you could see what I see with an actual HDR monitor that makes SDR similar to HDR. The images are always 4x better than the original.

AwesomeOwl

Weaksauce

- Joined

- Feb 15, 2011

- Messages

- 88

Yeah, if only... However, I am afraid that no one can see what you see.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

Get a true HDR monitor first.

AwesomeOwl

Weaksauce

- Joined

- Feb 15, 2011

- Messages

- 88

Perhaps I will consider it if you answer my questions. You would of course have to give me the definition of what a True HDR Monitor is first as well. Also, if your True HDR Monitor turns SDR into HDR, what does that make actual HDR viewed on this True HDR Monitor?

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

I don't do imagination.

I have said many times that 400-nit SDR can beat OLED HDR400 when it gets hit by ABL. What does this mean? OLED can only display 8-bit SDR. It won't display the 1024 shades of 10-bit color when the brightness is cramped under 200 nits.

PG35VQ SDR vs AW3423DW HDR

When actual HDR1000 shows up, OLED is dwarfed by true HDR monitors with 3x - 5x higher range side by side. This is actual HDR, with the 1024 shades of 10-bit color lit by at least 1000 nits.

PG35VQ HDR vs AW3423DW SDR

So what is a true HDR monitor?

A true HDR monitor has flicker-free 1000+nits in large 60% window not some gimmick 2% window.

You will have frequent ABL in HDR 1000 mode that shrinks both contrast and color. You will have eye strain with high APL images if the monitor flickers.

AwesomeOwl

Weaksauce

- Joined

- Feb 15, 2011

- Messages

- 88

> I don't do imagination.

That's funny, because it seems like it's all you do. If it's not imagination, then where do you obtain this magic Adobe SDR 400 nits material?

> When actual HDR1000 shows up, OLED is dwarfed by true HDR monitors with 3x - 5x higher range side by side. This is actual HDR, with the 1024 shades of 10-bit color lit by at least 1000 nits.

In certain material, of course. It's a real shame that there is no True HDR Monitor that can do dark scenes without major artifacts.

Your pictures are absolutely meaningless btw. They could just as well be two identical SDR monitors at different brightness.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

It's more like you are the one doing the imagining, because you don't even know that most HDR is graded from just sRGB 80 nits. The color can be stretched.

You can use a true HDR monitor to do a similar stretch automatically. That's why I said Adobe SDR at 400 nits, stretched from sRGB 80 nits, looks very similar to HDR400.

And you don't know that SDR can have infinite contrast and 0 black as well. That's why I said most people don't even realize that what they are seeing on OLED is in fact SDR.

Imagination is a powerful tool. I like how you deny the pictures as if they are not true. You cannot show HDR on this forum, but the relative brightness is still there.

You don't want me to turn on the light to show the stand of the AW3423DW as proof, because there is no polarizer on that OLED. It can look grey/red under the light.

AwesomeOwl

Weaksauce

- Joined

- Feb 15, 2011

- Messages

- 88

> It's more like you are the one doing the imagining, because you don't even know that most HDR is graded from just sRGB 80 nits. The color can be stretched.

What am I imagining? I am not the one coming up with ridiculous terms such as "Adobe SDR 400 nits". Please do give me a source on this HDR grading from "sRGB 80nits", just so I can see what on earth you're actually trying to say. Of course you won't provide any external sources to any of your claims about anything.

> And you don't know that SDR can have infinite contrast and 0 black as well. That's why I said most people don't even realize that what they are seeing on OLED is in fact SDR.

I don't? That's news to me. But good thing there are all-knowing beings, such as yourself, that can enlighten me on these things.

> Imagination is a powerful tool.

I believe that you have in fact proven this, yes.

> I like how you deny the pictures as if they are not true.

Where? I said they are meaningless. They are useless. They can't be used to prove anything.

> You don't want me to turn on the light to show the stand of the AW3423DW as proof, because there is no polarizer on that OLED. It can look grey/red under the light.

I don't?

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

If you've bought the remastered UHD version of an old movie such as 1975's Jaws, there is a section that specifically explains how the old Rec.709/sRGB footage is regraded to HDR1000 for better images than what the director had seen before.

Remastered movies are all graded from the lower ranges of sRGB/Rec.709 80nits to HDR 1000. I can grade movies myself. I know how it works.

A true HDR monitor has Adobe color to automatically deliver better images similar to HDR400, so you don't need to see sRGB 80 nits, while actual HDR has an even more powerful impact.

You can keep denying it with your imagination while 400nits Adobe SDR easily destroys OLED HDR400 which is still inside SDR range.

Sorry, but mapping SDR to HDR does not always work well/look good. It CAN, but sometimes (I'd even say often) it just doesn't. Doesn't matter the monitor tech. I tried Auto HDR (after Windows HDR calibration) on a $3K very well reviewed FALD LCD and in some games, things just did not look the way they were supposed to. For example, in one game (that doesn't natively support HDR), a cutscene looked much more washed out with Auto HDR than it did under SDR, where it looked a lot more natural. I've seen similar things on my FALD TV, which is great at HDR, but I always try to watch SDR as SDR because I think it legitimately looks better/more natural than trying to blow out SDR into an HDR image.

HDR has a lot more information than just being stretched to fit a certain amount of nits. It knows the sun is supposed to be X number of nits. And sparks or headlights a different value. A lot of games and applications also allow customizing the tone-mapping to your specific monitor so you can set the ceilings/floors and it sticks within that range. There's badly done native HDR, to be sure, but that just shows that HDR is very dependent on being mapped properly to look its best and having proper support for setting the appropriate limits on the display.

Also, it's just factually incorrect to say running a game in native HDR on an OLED is the same as an SDR image. It's simply not. While they look good in both modes, games like Dead Space or Cyberpunk 2077 look a world different in native HDR vs their SDR version, especially as far as the highlights. And it's something you just simply couldn't do in SDR. (Sure, you could crank up the brightness in SDR on a FALD, but then you're just asking for eyestrain - learned that with my FALD TV when it was set a little too bright initially for SDR.) There is no contest that higher brightness panels like FALD ones have more impactful HDR. It's a fact they can get closer to what HDR is graded as (1000 nits or what-have-you). It's also a fact they also have their own issues, like blooming in certain situations or dimming certain things down too much because of reliance on FALD algorithms, just as OLED has certain limitations like ABL or possibility of burn-in. But, that does NOT mean OLED's can't still have impactful, good-looking HDR that differs significantly from SDR, especially with modern tone-mapping.
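To put rough numbers on the "it knows the sun is supposed to be X number of nits" point: HDR10 signals use the PQ transfer function (SMPTE ST 2084), which ties each code value to an absolute luminance instead of a relative brightness. Below is a minimal Python sketch of that curve, assuming the content in question is PQ-encoded HDR10; the function names are just illustrative, and this is not how any particular game or display does its tone mapping.

```python
# Sketch of the SMPTE ST 2084 (PQ) transfer function used by HDR10.
# Function names are illustrative; constants are the ones defined by the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # PQ is defined up to 10,000 nits


def pq_eotf(signal):
    """Normalized PQ signal (0..1) -> absolute luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def pq_inverse_eotf(nits):
    """Absolute luminance in nits -> normalized PQ signal (0..1)."""
    y = (min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


if __name__ == "__main__":
    # Show where a few luminance levels sit in the signal range.
    for nits in (80, 100, 400, 1000, 10000):
        signal = pq_inverse_eotf(nits)
        print(f"{nits:>5} nits -> PQ signal {signal:.3f}")
```

With this curve, 100 nits lands at roughly half of the signal range and 1000 nits at roughly three quarters, so a specific highlight gets a specific target luminance that the display then has to tone-map down if it can't reach it.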

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

An image inside sRGB 80 nits never has a natural or realistic look. It is a compromise with the technology. sRGB 80 nits looks lifeless now.

When a monitor can display extended range from sRGB 80nits to Adobe 400nits, it's already halfway decent as it is a higher range.

HDR needs both contrast and color. If AutoHDR looks washed out, it is either that cutscene not supported by AutoHDR or it means the monitor doesn't provide enough color when the brightness gets higher.

This video has 3 stages. The 1st has sRGB 80nits. The 2nd has only the boosted contrast but keeps the same color of sRGB. The 3rd is the HDR1000 with both boosted contrast and extended Rec.2020 color.

And there are already monitors that can do 1000-nit SDR. If you apply the Rec.2020 color space, they look the same as HDR1000. That's why I said many OLED images averaging 100 nits with a few dots of highlight are not exactly HDR. They are inside the range of 400-nit 8-bit SDR.

xDiVolatilX

2[H]4U

- Joined

- Jul 24, 2021

- Messages

- 2,514

This is getting good

sharknice

2[H]4U

- Joined

- Nov 12, 2012

- Messages

- 3,759

LCD is garbage

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,312

We all have our opinions on the current state of display tech.

My take on a few things. . . .

- As some have said in the thread, HDR always looks best in a dim room no matter what the tech so claiming bright room use as a reason for brighter screen is a pretty moot point as far as I'm concerned. HDR content is a priority. Best/optimal/"proper" use for a media display should be designing the room layout and light sources around the display to begin with not the other way around. The same kind of thing goes for audio setups.

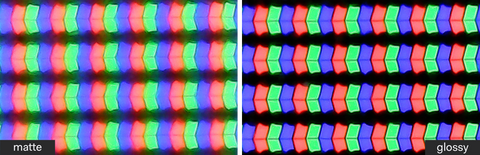

- Glossy is richer and clearer so is a big consideration as far as I'm concerned. If the fact is that you require a matte-type AG surface treatment for your room environment, your room environment is polluting the screen with direct light sources and compromising your screen even with AG. The surface treatment will also raise blacks (that's an especially bad thing imo) and can make text and details not as clear, like a haze or light frost/glaze. While people say they don't like seeing reflections, especially of themselves (for whatever reason) - you'll still see ghost shapes and blobs of the room contents, people, and bright walls etc. in the screen on black screen areas for example, and where direct lights hit the screen surface treatment you'll get bright area blobs and bars that wash out the screen in those areas. The fact that you "require" matte-type AG surface treatment also implies you aren't in control of your lighting in the first place. If your ambient lighting levels vary, the way your eyes and brain perceive contrast and saturation will change one way or another so your calibration/levels aren't the same anymore to you, throwing them out the window (or the windows throwing them off of the screen, as the case may be).

- Text fringing and low PPI exacerbating issues on larger TV gaming screens (42" , 45" , 48" , even 55") are such a vocal issue not so much because of the non-standard subpixel layout but because so many people are trying to shoehorn those larger format screens onto a desk where the result is low PPD beneath 60ppd+ (and getting bad viewing angles besides). 4k screens at the human viewing angle of 50 to 60 degrees (using a simple thin spined floor tv stand or wall mount, etc. for larger 4k screens) get 77 to 64 PPD, no matter what the size of the 4k screen. Bigger perceived pixel sizes, bigger problems.

A 27" 4k screen's perceived pixel density, at the pixels per degree you get at a normal 50 to 60 deg viewing angle, will look exactly the same as that of a larger 4k screen viewed from the distance that gives the same viewing angle.
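For anyone who wants to check the PPD numbers, here is a rough Python sketch. It assumes a flat 16:9 screen viewed head-on from the center and uses the simple "horizontal pixels divided by viewing angle" average, which is what reproduces the 77 and 64 figures above. The function names are made up for illustration.

```python
import math


def width_from_diagonal(diagonal, aspect_w=16, aspect_h=9):
    """Screen width from its diagonal (same unit), assuming a flat 16:9 panel by default."""
    return diagonal * aspect_w / math.hypot(aspect_w, aspect_h)


def viewing_angle_deg(screen_width, distance):
    """Horizontal angle in degrees subtended by the screen, viewed head-on from its center."""
    return math.degrees(2 * math.atan(screen_width / (2 * distance)))


def distance_for_angle(screen_width, angle_deg):
    """Viewing distance (same unit as width) at which the screen fills angle_deg."""
    return (screen_width / 2) / math.tan(math.radians(angle_deg) / 2)


def average_ppd(horizontal_pixels, angle_deg):
    """Average pixels per degree across the full screen width."""
    return horizontal_pixels / angle_deg


if __name__ == "__main__":
    # Same resolution and same viewing angle give the same PPD regardless of size;
    # only the required viewing distance changes.
    for diagonal in (27, 42, 48, 55):
        width = width_from_diagonal(diagonal)
        for angle in (50, 60):
            dist = distance_for_angle(width, angle)
            ppd = average_ppd(3840, angle)
            print(f'{diagonal}" 4k at {angle} deg: sit {dist:.1f}" away, {ppd:.1f} PPD')
```

The design choice here is to treat PPD as an average over the whole width. Pixel density per degree is actually a bit lower at the center of a flat screen and higher toward the edges, so take the output as a ballpark.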

Personally I think a separate screen for desktop and apps is a better idea anyway, using the OLED as the media and gaming "stage" for dynamic content.

- ABL and ASBL(though you can disable ASBL) are a tradeoff but scenes in media and games are usually pretty dynamic with real camera cinematography in media and virtual camera cinematography in games + free mouse-looking, movement keying and controller panning throughout. That usage scenario overall is not static so with a few exceptions it really doesn't happen as frequently ... or as for as long when it does... as you might think or see propagandized. It's still a thing though for sure but I don't find myself seeing it happen enough and long enough to bother me in both pc gaming on my 48cx or in my living room media watching on a C1 OLED.

- Some FALD IPS or VA screens, like some of the 1400 nit 8k tvs, also have ABL. As LCDs get brighter they won't all necessarily have long % screen brightness durations, and ABL is not limited to OLEDs necssarily in all cases.

The samsung QN900B 8k QLED (VA LCD) per RTings:

- FALD dimming "halos" or blooming "halos" (depending which way the firmware leans) will cause loss of details throughout usage and the overall black depth won't be near as great in contrasted scenes no matter what they say (matte type AG coatings also raise blacks to boot). LCD will never be as fine as side by side per pixel emissive razor's edge contrast (down to ultra black) and sbs per pixel detail in color. We won't get that until another tech like microled can do per pixel emissive display , and that micro-led at even a healthy enthusiast gamer level pricing seems a very long way off yet.

Here are two pictures of that 8k QN900B's backlights/FALD-zones, the 2nd one contrasted to see the zones better. That is what 1,344 zones looks like (for comparison , the pro art ucx is 1152 zones). They are large. Compare that to every pixel (4k = 8,294,400 pixels) being emitted individually and able to provide that per pixel sbs contrast, and per pixel sbs detail in colors.

- LG OLED reserves the top 25% of it's brightness for a wear evening routine. People saying you'll burn it in right away as if there is no 25% buffer are ill informed. You'd have to pull some serious jackass maneuver to do that. In normal usage you are instead burning down your oled emitters very slowly and the wear evening routine compares outputs and "cuts them down to even" and then "pushes them back up" in intensity throughout years of the wear evening routine. So you won't get burn in until you bottom out your buffer. How long that takes will depend on how abusive or careless you are - but "normal" OLED use with ordinary precautions should last years. Unfortunately there is no way to tell how much of the buffer you have remaining, via the osd or the service menu afaik so best use scenario is not using the oled as a static app/desktop monitor but plenty of people do and go over 3 - 4 years and running currently w/o burning through their buffer in that more abusive usage scenario.

.

Kramnelis is a zealot for lifting the whole standard scene Mids a lot lot more than the default, not just increasing the high end's highlights and light sources - which is his preference and opinion - so that might explain part of the reason why he is especially aggressive about lower range of OLED compared to say his 1500nit pro art FALD LCD. While I disagree with a lot of his opinions on that (as I said, unlike Kram, I don't think the whole scene needs to be lifted brighter, at least not "unnecessarily") - Kramnelis isn't wrong across the board though by any means, at least as far as his base concepts of brighter and brighter HDR peaks as goals on the high end being more and more realistic and colorful, like future 4000nit HDR and 10,000 nit HDR screens someday, and longer sustained periods. . - it's just that doing it with (FALD) LCD panels is a huge tradeoff or downgrade of many facets of picture quality. OLED, even if not the most pure color at the high end and even if not achieving the highest current ranges (though compared to HDR 4000 and HDR 10,000 - even 1500nit is low). or sustained periods. . OLED is starting to climb to 1000nit peak highlights and perhaps a bit over in the meantime. Heatsink tech and QD-OLED tech and perhaps some other advancements may improve this over gens. There are tradeoffs either way though for sure. We do need much higher peaks eventually. Especially for highlights and direct light sources. But for many of us, after seeing OLED -> (FALD) LCD is not the way to do it. OLED needs to be replaced by another better per pixel emissive display technology someday for sure, but it's not LCD.

My take on a few things:

- As some have said in the thread, HDR always looks best in a dim room no matter what the tech so claiming bright room use as a reason for brighter screen is a pretty moot point as far as I'm concerned. HDR content is a priority. Best/optimal/"proper" use for a media display should be designing the room layout and light sources around the display to begin with not the other way around. The same kind of thing goes for audio setups.

- Glossy is richer and clearer, so it is a big consideration as far as I'm concerned. If you require a matte type AG surface treatment for your room environment, your room environment is polluting the screen with direct light sources and compromising your screen even with AG. The surface treatment will also raise blacks (that's an especially bad thing imo) and can make text and details less clear, like a haze or light frost/glaze. While people say they don't like seeing reflections, especially of themselves (for whatever reason), you'll still see ghost shapes and blobs of the room contents, people, bright walls etc. in black screen areas, for example, and where direct lights hit the surface treatment you'll get bright blobs and bars that wash out the screen in those areas. The fact that you "require" a matte type AG surface treatment also implies you aren't in control of your lighting in the first place. If your ambient lighting levels vary, the way your eyes and brain perceive contrast and saturation will change one way or another, so your calibration/levels aren't the same anymore to you. Throwing them out the window (or the windows throwing them off of the screen, as the case may be).

- Text fringing and low PPI issues on larger TV gaming screens (42", 45", 48", even 55") are such a vocal issue not so much because of the non-standard subpixel layout but because so many people are trying to shoehorn those larger format screens onto a desk, where the result is a low PPD, below 60 PPD (and bad viewing angles besides). 4k screens viewed at the human viewing angle of 50 to 60 degrees (using a simple thin spined floor TV stand or wall mount, etc. for larger 4k screens) get 77 to 64 PPD, no matter what the size of the 4k screen. Bigger perceived pixel sizes, bigger problems.

A 27" 4k screen's fine perceived pixel density comes from its pixels per degree at a normal 50 to 60 degree viewing angle, so it will look exactly the same, relatively, as a larger 4k screen viewed from the distance that gives those same viewing angles.

Personally I think a separate screen for desktop and apps is a better idea anyway, using the OLED as the media and gaming "stage" for dynamic content.
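A rough sketch of the arithmetic behind the 77 to 64 PPD figures above. It assumes the simple average (horizontal pixels divided by horizontal viewing angle) and a flat 16:9 panel viewed head-on; the function names are made up for illustration.

```python
import math

def pixels_per_degree(horizontal_pixels: int, viewing_angle_deg: float) -> float:
    """Average pixels per degree across the screen width (simple approximation)."""
    return horizontal_pixels / viewing_angle_deg

def viewing_distance_in(diagonal_in: float, viewing_angle_deg: float,
                        aspect=(16, 9)) -> float:
    """Distance in inches at which a flat screen spans the given horizontal angle."""
    w, h = aspect
    width = diagonal_in * w / math.hypot(w, h)  # physical width of the panel
    return (width / 2) / math.tan(math.radians(viewing_angle_deg / 2))

# Same PPD for every 4k screen at a given angle; only the distance changes.
for size in (27, 42, 48, 55):
    for angle in (50, 60):
        ppd = pixels_per_degree(3840, angle)
        dist = viewing_distance_in(size, angle)
        print(f'{size}" 4k at {angle} deg: {ppd:.1f} PPD, about {dist:.1f} in away')
```

The PPD column depends only on resolution and angle, which is the point being made: a bigger 4k screen just has to sit further back to get the same result.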

- ABL and ASBL (though you can disable ASBL) are a tradeoff, but scenes in media and games are usually pretty dynamic, with real camera cinematography in media and virtual camera cinematography in games, plus free mouse-looking, movement keying and controller panning throughout. That usage scenario overall is not static, so with a few exceptions the dimming really doesn't happen as frequently, or for as long when it does, as you might think or see propagandized. It's still a thing for sure, but I don't find myself seeing it happen often enough or long enough to bother me, either in PC gaming on my 48CX or in my living room media watching on a C1 OLED.

- Some FALD IPS or VA screens, like some of the 1400 nit 8k TVs, also have ABL. As LCDs get brighter, they won't all necessarily sustain high brightness over a large % of the screen for long durations, so ABL is not necessarily limited to OLEDs.

The Samsung QN900B 8k QLED (VA LCD), per RTINGS:

The Samsung QN900B has amazing peak brightness in SDR. Scenes with small areas of bright lights get extremely bright, and overall, it can easily overcome glare even in a well-lit room. Unfortunately, it has a fairly aggressive automatic brightness limiter (ABL), so scenes with large bright areas, like a hockey rink, are considerably dimmer.

Thanks to its decent Mini LED local dimming feature, blacks are deep and uniform in the dark, but there's some blooming around bright highlights and subtitles.

Local dimming results in blooming around bright objects.

Like most Samsung TVs, the local dimming feature behaves a bit differently in 'Game' mode. There's a bit less blooming, but it's mainly because blacks are raised across the entire screen, so the blooming effect isn't as noticeable.

- FALD dimming "halos" or blooming "halos" (depending on which way the firmware leans) will cause loss of detail throughout usage, and the overall black depth won't be nearly as great in contrasted scenes no matter what they say (matte type AG coatings also raise blacks to boot). LCD will never be as fine as per pixel emissive, side by side (sbs) razor's edge contrast (down to ultra black) and sbs per pixel detail in color. We won't get that until another tech like MicroLED can do per pixel emissive display, and MicroLED at even a healthy enthusiast gamer level of pricing seems a very long way off yet.

Here are two pictures of that 8k QN900B's backlight/FALD zones, the 2nd one contrast-enhanced to show the zones better. That is what 1,344 zones look like (for comparison, the ProArt UCX has 1,152 zones). They are large. Compare that to every pixel (4k = 8,294,400 pixels) being emitted individually and able to provide that per pixel sbs contrast and per pixel sbs detail in colors.
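To put the zone counts above in numbers, here is a small sketch of how many pixels share each dimming zone. The panel resolutions are assumptions (7680x4320 for the 8k QN900B, 3840x2160 for the ProArt UCX), and the function name is just for illustration.

```python
def pixels_per_zone(width_px: int, height_px: int, zones: int) -> float:
    """Average number of pixels lit by a single dimming zone."""
    return width_px * height_px / zones

# (width, height, zones); resolutions are assumed, zone counts are from the post.
panels = {
    "QN900B, 8k FALD, 1,344 zones": (7680, 4320, 1344),
    "ProArt UCX, 4k FALD, 1,152 zones": (3840, 2160, 1152),
    "4k OLED, one emitter per pixel": (3840, 2160, 3840 * 2160),
}

for name, (w, h, zones) in panels.items():
    print(f"{name}: {pixels_per_zone(w, h, zones):,.0f} pixels per zone")
```

Under those assumptions, each FALD zone covers thousands of pixels, while a per pixel emissive panel works out to exactly one.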

- LG OLED reserves the top 25% of its brightness for a wear evening routine. People saying you'll burn it in right away, as if there is no 25% buffer, are ill informed. You'd have to pull some serious jackass maneuver to do that. In normal usage you are instead burning down your OLED emitters very slowly, and the wear evening routine compares outputs, "cuts them down to even" and then "pushes them back up" in intensity, over and over throughout years of use. So you won't get burn in until you bottom out your buffer. How long that takes will depend on how abusive or careless you are, but "normal" OLED use with ordinary precautions should last years. Unfortunately there is no way to tell how much of the buffer you have remaining, via the OSD or the service menu afaik, so the best use scenario is not using the OLED as a static app/desktop monitor. Plenty of people do, though, and are over 3 - 4 years in and still running w/o burning through their buffer in that more abusive usage scenario.

Kramnelis is a zealot for lifting the whole standard scene's mids a lot more than the default, not just increasing the high end's highlights and light sources. That is his preference and opinion, and it might partly explain why he is especially aggressive about the lower range of OLED compared to, say, his 1500 nit ProArt FALD LCD. I disagree with a lot of his opinions on that (as I said, unlike Kram, I don't think the whole scene needs to be lifted brighter, at least not "unnecessarily"). Kramnelis isn't wrong across the board by any means, though, at least as far as his base concept goes: brighter and brighter HDR peaks, sustained for longer periods, are goals on the high end that make the picture more realistic and colorful, like future 4000 nit and 10,000 nit HDR screens someday. It's just that doing it with (FALD) LCD panels is a huge tradeoff or downgrade in many facets of picture quality. OLED doesn't have the purest color at the high end and doesn't reach the highest current ranges or sustained periods (though compared to HDR 4000 and HDR 10,000, even 1500 nit is low), but it is starting to climb to 1000 nit peak highlights and perhaps a bit over in the meantime. Heatsink tech, QD-OLED tech and perhaps some other advancements may improve this over generations. There are tradeoffs either way, for sure. We do need much higher peaks eventually, especially for highlights and direct light sources. But for many of us, after seeing OLED, (FALD) LCD is not the way to do it. OLED needs to be replaced by another, better per pixel emissive display technology someday for sure, but it's not LCD.

The image inside sRGB 80nits is never a natural or realistic look. It is a compromise to technology. sRGB 80nits looks lifeless now.

Huh? If something is made in SDR (whether TV, movie, or a game), it's generally mastered in sRGB and meant to display in sRGB. If you're going for creator's intent, it's the most accurate. I'm also not sure where you're getting the 80 nits per se as being optimal - that's pretty dim, especially for most home rooms. 100 is often the standard for SDR, even in a dark room... Based on personal preference and varying light conditions, I calibrate SDR to closer to 160 nits as I like a bit of added brightness without pushing things over the top or making things too inaccurate (I don't mind things a bit brighter than reference). And I'm very pleased with how content looks. Yes, SDR to me looks more natural than Auto HDR. Auto HDR doesn't necessarily do a bad job - in some circumstances, it can look quite good. But since there's no manual grading or conversion, you're trusting an algorithm to figure out what's supposed to be bright vs dim and more importantly HOW bright, and that doesn't always work as expected. For me, the unintended effects aren't worth it - I'd rather see SDR as it was mastered to be seen. For those that prefer an HDR look, that's fine, but it's a personal preference, and has nothing to do with "lifeless"ness. It's the standard showing you what was always meant to be shown.

When a monitor can display extended range from sRGB 80nits to Adobe 400nits, it's already halfway decent as it is a higher range.

I'm not really sure what you're saying. If you mean extending the color range, you're just artificially enhancing the colors and brightness. A lot of people prefer that. Hell, I find enhanced color ranges quite appealing, but they're also not accurate if accuracy is something that matters to you. I can take an image and blow the colors out and it looks very pretty. But it doesn't look like the creator intended, either.

HDR needs both contrast and color. If AutoHDR looks washed out, either that cutscene is not supported by AutoHDR or the monitor doesn't provide enough color when the brightness gets higher.

"Supported by Auto HDR" isn't so much a thing (if the game supports DirectX 11 or 12, it supports Auto HDR). It's going to try to convert any game content to HDR using an algorithm [that cutscene is done in-engine anyways, not video], and depending on that content, is going to have mixed results. By the way, just for giggles, I just fired it up on my OLED in both SDR and Auto HDR. The Auto HDR version looked quite good and much closer to SDR than when I saw it on the FALD monitor I had. Definitely not *as* washed out. BUT, SDR still looked slightly better and more contrasty. Colors looked good in both. Menu text and such were too bright in HDR. As I said, you're trusting an algorithm to do more than an algorithm can realistically do perfectly.

This is different than, say, when a movie is remastered for HDR (like the Jaws example earlier I'd imagine, though to be fair, I haven't seen it, and I don't know all the details of the remastering). But from what I can tell, it was graded and enhanced by PEOPLE, not simply by letting a piece of equipment expand the range. That's necessary and crucial if you want an accurate image without the anomalies that a technology like Auto HDR can add. Again, if you prefer the look of Auto HDR, there's not a thing wrong with that. But SDR definitely has its advantages and is the most accurate, unless something is hand-enhanced to HDR.

This video has 3 stages. The 1st has sRGB 80nits. The 2nd has only the boosted contrast but keeps the same color of sRGB. The 3rd is the HDR1000 with both boosted contrast and extended Rec.2020 color.

And there are already monitors that can do 1000 nits SDR. If you put them in the Rec.2020 colorspace, they look the same as HDR1000. That's why I said many OLED images averaging 100 nits with a few highlight dots are not exactly HDR. They are inside the range of 400 nits 8-bit SDR.

I'm really not sure what this video is supposed to show. It doesn't say what movie it is, what it was originally mastered in (SDR or HDR), or how it was graded to HDR (manually by a person or automatically by a machine). Sure, the third one looks "best", but I could just up the brightness of my TV in SDR and get something similar (but it'd be eye-searingly bright on, say, a white screen).

An OLED monitor is more than capable of showing a movie like that beautifully, in SDR or in HDR, as long as you have some degree of light control in your room. It could be too dim in a very bright room.

Kramnelis isn't wrong across the board by any means, though, at least as far as his base concept goes: brighter and brighter HDR peaks, sustained for longer periods, are goals on the high end that make the picture more realistic and colorful, like future 4000 nit and 10,000 nit HDR screens someday. It's just that doing it with (FALD) LCD panels is a huge tradeoff or downgrade in many facets of picture quality. OLED doesn't have the purest color at the high end and doesn't reach the highest current ranges or sustained periods (though compared to HDR 4000 and HDR 10,000, even 1500 nit is low), but it is starting to climb to 1000 nit peak highlights and perhaps a bit over in the meantime. Heatsink tech, QD-OLED tech and perhaps some other advancements may improve this over generations. There are tradeoffs either way, for sure. We do need much higher peaks eventually, especially for highlights and direct light sources. But for many of us, after seeing OLED, (FALD) LCD is not the way to do it. OLED needs to be replaced by another, better per pixel emissive display technology someday for sure, but it's not LCD.

Very informative post. I just want to clarify I do agree the brighter with HDR, the better. 1000%. And right now, in that aspect, OLED can't match LCD. I'm just arguing you can't discount HDR on OLEDs altogether and that they can still look good and be impactful in games now, and the tradeoffs with OLED are no worse (and in my opinion/for my uses better) than those of the FALD LCDs I've tried. I can't wait for a display tech with the perfect blacks of OLED, no more burn-in concerns even when abused a bit, and super high peak brightnesses, but we're just not there yet, and the blooming issues weren't worth it to me.

pippenainteasy

[H]ard|Gawd

- Joined

- May 20, 2016

- Messages

- 1,158

Am I the only one to find this an extremely annoying compromise? I'm not using OLED anywhere but my phone but I've tried auto-hiding my taskbar before and just found the lack of what is basically the permanent HUD with valuable info on my desktop to be an anti-feature.

Don't know if it's that big of an issue. The auto-dimming to me is the bigger issue in terms of general productivity use outside of gaming.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

Huh? If something is made in SDR (whether TV, movie, or a game), it's generally mastered in sRGB and meant to display in sRGB. If you're going for creator's intent, it's the most accurate. I'm also not sure where you're getting the 80 nits per se as being optimal - that's pretty dim, especially for most home rooms. 100 is often the standard for SDR, even in a dark room... Based on personal preference and varying light conditions, I calibrate SDR to closer to 160 nits as I like a bit of added brightness without pushing things over the top or making things too inaccurate (I don't mind things a bit brighter than reference). And I'm very pleased with how content looks. Yes, SDR to me looks more natural than Auto HDR. Auto HDR doesn't necessarily do a bad job - in some circumstances, it can look quite good. But since there's no manual grading or conversion, you're trusting an algorithm to figure out what's supposed to be bright vs dim and more importantly HOW bright, and that doesn't always work as expected. For me, the unintended effects aren't worth it - I'd rather see SDR as it was mastered to be seen. For those that prefer an HDR look, that's fine, but it's a personal preference, and has nothing to do with "lifeless"ness. It's the standard showing you what was always meant to be shown.

The sRGB standard is made for all monitors. It's the lowest standard. Creator's intent has no commercial benefit if the image cannot be displayed. So they make the sRGB image in a way that all monitors can show it. But these sRGB 80 nits images are never good. They are common and lifeless. These creators are never satisfied with sRGB 80 nits. This is why there is HDR, with its higher range. Now there are monitors capable of a higher range that let you see better images than the ones they intended in limited sRGB.

"Supported by Auto HDR" isn't so much a thing (if the game supports DirectX 11 or 12, it supports Auto HDR). It's going to try to convert any game content to HDR using an algorithm [that cutscene is done in-engine anyways, not video], and depending on that content, is going to have mixed results. By the way, just for giggles, I just fired it up on my OLED in both SDR and Auto HDR. The Auto HDR version looked quite good and much closer to SDR than when I saw it on the FALD monitor I had. Definitely not *as* washed out. BUT, SDR still looked slightly better and more contrasty. Colors looked good in both. Menu text and such were too bright in HDR. As I said, you're trusting an algorithm to do more than an algorithm can realistically do perfectly.

This is different than, say, when a movie is remastered for HDR (like the Jaws example earlier I'd imagine, though to be fair, I haven't seen it, and I don't know all the details of the remastering). But from what I can tell, it was graded and enhanced by PEOPLE, not simply by letting a piece of equipment expand the range. That's necessary and crucial if you want an accurate image without the anomalies that a technology like Auto HDR can add. Again, if you prefer the look of Auto HDR, there's not a thing wrong with that. But SDR definitely has its advantages and is the most accurate, unless something is hand-enhanced to HDR.

Windows AutoHDR is a test for monitor HDR capability as it is done automatically. If a monitor is capable of accurate HDR, Windows AutoHDR will look good. It means the monitor has more color, and more accurate EOTF tracking. If something goes off, Windows AutoHDR will look bad such as being washed out. Of course, AutoHDR is not as good as manual HDR. But compared to sRGB it is better on a competent HDR monitor.

The sRGB standard is made for all monitors. It's the lowest standard. Creator's intent has no commercial benefit if the image cannot be displayed. So they make the sRGB image in a way that all monitors can show it. But these sRGB 80 nits images are never good. They are common and lifeless. These creators are never satisfied with sRGB 80 nits. This is why there is HDR, with its higher range. Now there are monitors capable of a higher range that let you see better images than the ones they intended in limited sRGB.

You like what you like, and that's all well and good - it's wonderful we all have options to customize the picture to our preferences, regardless of the creator's intent. That said, the bottom line is if something is mastered in a technology, such as most SDR content in sRGB, by definition displaying it with any "expansion" (whether color range or brightness as autoconverted to HDR) is going to be at best the same and almost always less accurate than what it was mastered in. That's the case with the game I mentioned - it looks close and fairly good in Auto HDR, but it still looks better in SDR, with better contrast and without menu anomalies, because it was designed for display in sRGB. If something is mastered in sRGB, it by definition can't look more accurate than sRGB. It can look more saturated, or brighter, depending on preference, and a better HDR monitor may do a better job at Auto HDR than a poor one, for sure, but that still doesn't make it better than SDR and definitely increases deviation from reference, because it's a computer guessing about a lot of variables to try and fill out the HDR capabilities. Nothing wrong with liking deviation from reference if it's your thing - as mentioned, I myself prefer a slightly brighter than reference picture in SDR at 160 nits (vs. 100) because the enjoyment I get out of a slightly brighter image for my room is more important to me than any slight accuracy deviation a small bump in brightness would cause. But it's up to each person to pick and choose how close to reference they want to be.

Windows AutoHDR is a test for monitor HDR capability as it is done automatically. If a monitor is capable of accurate HDR, Windows AutoHDR will look good. It means the monitor has more color, and more accurate EOTF tracking. If something goes off, Windows AutoHDR will look bad such as being washed out. Of course, AutoHDR is not as good as manual HDR. But compared to sRGB it is better on a competent HDR monitor.

The best HDR monitor in the world will still look odd and have anomalies (menus are one example) with SDR content in Auto HDR. sRGB is still more accurate, and aside from that, myself and many others will find it looks better and more natural to our eyes. It's perfectly fine if you prefer Auto HDR - many people do, but if you like accuracy, sRGB will get you closest.

Personally, I'll save HDR for content natively mastered in (or professionally converted to) HDR, and from what I've tested so far, I'm very happy with the few titles I've tried out on my OLED, even if the brightness can't get quite as high. I'm sure in these titles, a FALD monitor with higher brightness can unquestionably look even better, and I don't dispute that, but the many tradeoffs in other areas weren't worth it to me, especially when I get enough impact to satisfy me from the HDR picture on OLED.

Don't know if it's that big of an issue. The auto-dimming to me is the bigger issue in terms of general productivity use outside of gaming.

I do notice the auto-dimming on my LG, but only very occasionally on full-white screens (such as the stock Google homepage or a blank page on a word processor). I'd estimate even if 1/5th of the page has some other color/content on it, it doesn't happen...it's only when it's almost all white. It's not so distracting it really bothers me 98% of the time, and certainly less distracting than I found the blooming on the FALD IPS panels I tried, which was really distracting to me in productivity and day to day tasks. ABL certainly might be an issue if you're particularly sensitive to it, though. In the few circumstances it happens to me, generally I just notice it briefly and keep working. The picture, even with slightly limited brightness, still looks pretty good and just as readable, so it's not drastic enough I feel it impacts my work at all.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

Kramnelis is a zealot for lifting the whole standard scene's mids a lot more than the default, not just increasing the high end's highlights and light sources. That is his preference and opinion, and it might partly explain why he is especially aggressive about the lower range of OLED compared to, say, his 1500 nit ProArt FALD LCD. I disagree with a lot of his opinions on that (as I said, unlike Kram, I don't think the whole scene needs to be lifted brighter, at least not "unnecessarily"). Kramnelis isn't wrong across the board by any means, though, at least as far as his base concept goes: brighter and brighter HDR peaks, sustained for longer periods, are goals on the high end that make the picture more realistic and colorful, like future 4000 nit and 10,000 nit HDR screens someday. It's just that doing it with (FALD) LCD panels is a huge tradeoff or downgrade in many facets of picture quality. OLED doesn't have the purest color at the high end and doesn't reach the highest current ranges or sustained periods (though compared to HDR 4000 and HDR 10,000, even 1500 nit is low), but it is starting to climb to 1000 nit peak highlights and perhaps a bit over in the meantime. Heatsink tech, QD-OLED tech and perhaps some other advancements may improve this over generations. There are tradeoffs either way, for sure. We do need much higher peaks eventually, especially for highlights and direct light sources. But for many of us, after seeing OLED, (FALD) LCD is not the way to do it. OLED needs to be replaced by another, better per pixel emissive display technology someday for sure, but it's not LCD.

You just downplay the importance of brightness as if it doesn't matter. If there is no brightness then there is no color. Realistic images have much higher mids to showcase 10-bit color. A higher range is always better.

It's ridiculous to trade a little local dimming bloom, or sustained 1600 nits, or 10-bit color for a 200 nits OLED experience. A dim image with a few highlight dots is not true HDR. FALD LCD can display better than this.

As far as panel technology goes, manufacturers, especially LG, are on a road to go all-in just like Sharp. If things don't go well, it will be too late by the time LG realizes that FALD LCD has already taken the market before it could jump to the next-gen tech.

kramnelis

Gawd

- Joined

- Jun 30, 2022

- Messages

- 890

The best HDR monitor in the world will still look odd and have anomalies (menus are one example) with SDR content in Auto HDR. sRGB is still more accurate, and aside from that, myself and many others will find it looks better and more natural to our eyes. It's perfectly fine if you prefer Auto HDR - many people do, but if you like accuracy, sRGB will get you closest.

Accuracy in limited sRGB doesn't mean it will have a better or a natural image. Accuracy is intended for mass distribution. It's more important for the creators. These creators might not have the intention or budget to implement HDR for better images. Even if they implement native HDR, the UI can still shine at max brightness just like every game made by Supermassive. These anomalies are easy to fix.

Personally, I'll save HDR for content natively mastered in (or professionally converted to) HDR, and from what I've tested so far, I'm very happy with the few titles I've tried out on my OLED, even if the brightness can't get quite as high. I'm sure in these titles, a FALD monitor with higher brightness can unquestionably look even better, and I don't dispute that, but the many tradeoffs in other areas weren't worth it to me, especially when I get enough impact to satisfy me from the HDR picture on OLED.

AutoHDR is one way to see a higher range. It is only a matter of time before AutoHDR gives users various options, such as adjusting the levels of shadows, midtones, highlights, and saturation just like native HDR, so they can see better HDR matched to the range of their monitors instead of SDR sRGB.
