Nah, they won't.

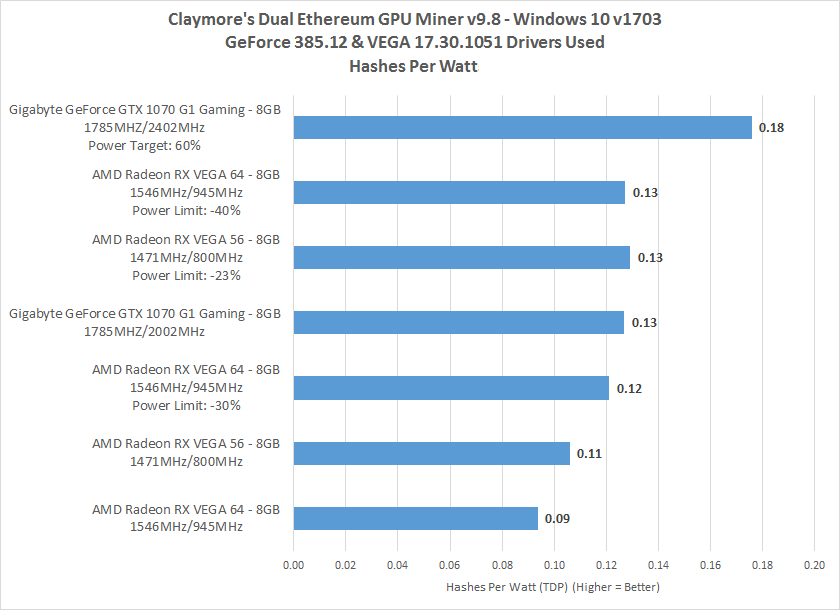

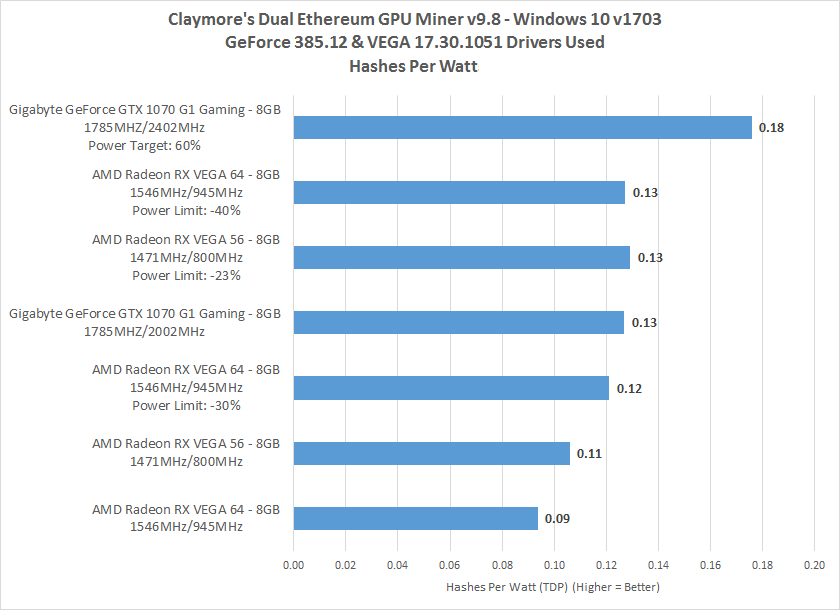

http://www.legitreviews.com/amd-radeon-rx-vega-64-vega-56-ethereum-mining-performance_197049

The difference is too great: roughly 40% less profit, so even small-time miners won't do it, because it just eats into your bottom line. It's better to get a 1060: it's cheaper and has better returns.
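To make the "cheaper card, better returns" point concrete, here's a rough sketch of how you'd compare cards by payback time. It's a minimal sketch assuming a flat payout per MH/s per day and a flat electricity rate; the `Card` class, `daily_profit`, `payback_days`, and all parameters are hypothetical and not from the linked review.

```python
from dataclasses import dataclass


@dataclass
class Card:
    # Hypothetical card description; fill in your own numbers.
    name: str
    price_usd: float      # purchase price of the card
    hashrate_mhs: float   # Ethereum hashrate in MH/s
    power_watts: float    # power draw while mining


def daily_profit(card: Card, usd_per_mhs_day: float, usd_per_kwh: float) -> float:
    """Mining revenue minus electricity cost for one day (assumes flat rates)."""
    revenue = card.hashrate_mhs * usd_per_mhs_day
    # watts -> kW, times 24 hours, times price per kWh
    power_cost = card.power_watts / 1000 * 24 * usd_per_kwh
    return revenue - power_cost


def payback_days(card: Card, usd_per_mhs_day: float, usd_per_kwh: float) -> float:
    """Days until the card pays for itself; infinite if it never turns a profit."""
    profit = daily_profit(card, usd_per_mhs_day, usd_per_kwh)
    if profit <= 0:
        return float("inf")
    return card.price_usd / profit
```

Plug in the prices, hashrates, and power draw from the review plus your own electricity rate: a card that costs more and earns less per day takes longer to pay back, which is the whole argument.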
