Vega Rumors

- Thread starter grtitan

Presbytier

[H]ard|Gawd

- Joined

- Jun 21, 2016

- Messages

- 1,058

Considering this is a rumor thread, here is one to whet the appetite.

Presbytier

[H]ard|Gawd

- Joined

- Jun 21, 2016

- Messages

- 1,058

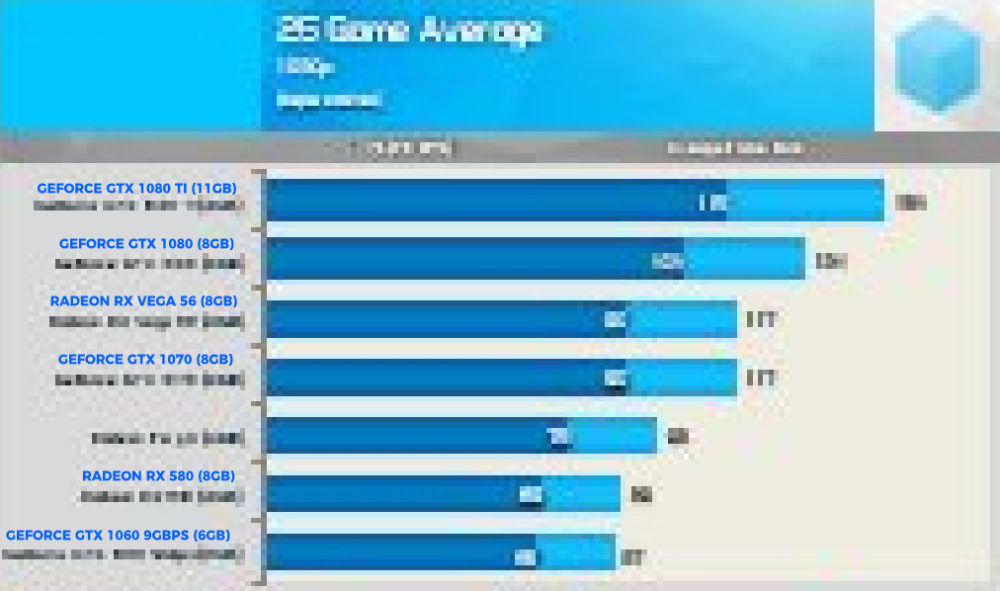

Supposedly it is an aggregate, so no idea what games or how many were tested. I in no way can or would even bother to verify if it was true. That being said, we can surmise these may be close to correct, and that still leaves us with a completely unexciting product launch.

That is the worst graph I've ever seen. What games? I'm calling BS on that one lol

Ocellaris

Fully [H]

- Joined

- Jan 1, 2008

- Messages

- 19,077

Supposedly it is an aggregate, so no idea what games or how many were tested. I in no way can or would even bother to verify if it was true. That being said, we can surmise these may be close to correct, and that still leaves us with a completely unexciting product launch.

Supposedly according to who? It's a graph with zero reference, just more fake shit to see who is dumb enough to repost it.

Presbytier

[H]ard|Gawd

- Joined

- Jun 21, 2016

- Messages

- 1,058

The overclocking makes it seem like it is fake. The percentage gains it is getting seem highly unrealistic.

Well someone likes to make fake graphics. You can't overclock an air-cooled Vega 64 without changing out coolers, and even then it's going to be difficult in a 2-slot solution.

Presbytier

[H]ard|Gawd

- Joined

- Jun 21, 2016

- Messages

- 1,058

I saw it on another rumor site. This is a rumor thread, so I posted it here. It is a rumor, and like all rumors you can either let it build up fanboy hope in your heart or just assume it is fake till proven otherwise.

Supposedly according to who? It's a graph with zero reference, just more fake shit to see who is dumb enough to repost it.

Presbytier

[H]ard|Gawd

- Joined

- Jun 21, 2016

- Messages

- 1,058

That looks like shit, but it does say prototype.

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,086

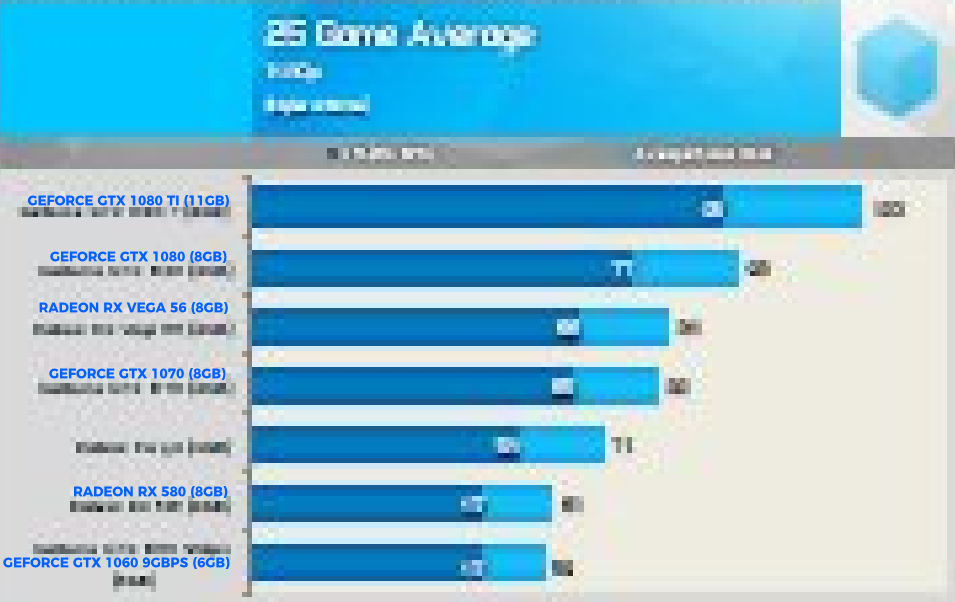

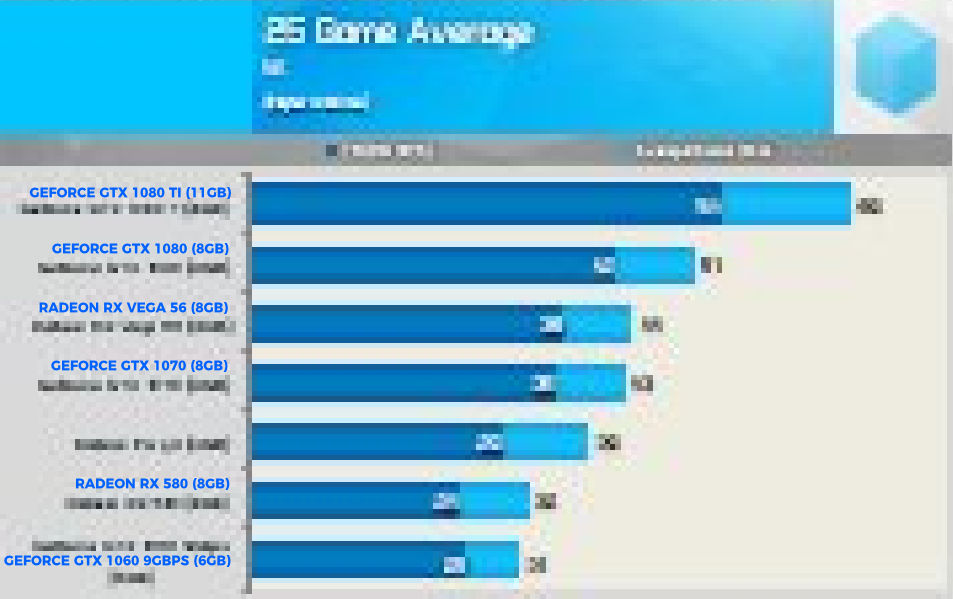

Yes, I have come to troll y'all! Muhahaha! Get out your magnifying glasses, bifocals, microscopes, and hell, even a telescope to digest these numbers!

Ocellaris

Fully [H]

- Joined

- Jan 1, 2008

- Messages

- 19,077

Yes, I have come to troll y'all! Muhahaha! Get out your magnifying glasses, bifocals, microscopes, and hell, even a telescope to digest these numbers!

Enhanced images... Looks like 1070 performance for the Vega 56. Nothing special, just matching Nvidia performance and price from 16 months ago at higher power consumption.

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

Enhanced images... Looks like 1070 performance for the Vega 56. Nothing special, just matching Nvidia performance and price from 16 months ago at higher power consumption.

These are using AMD Sandbag (tm) drivers, wait for drivers to make Vega match Titan Xp

Presbytier

[H]ard|Gawd

- Joined

- Jun 21, 2016

- Messages

- 1,058

With it being that pixelated, we have no idea if it is real or not.

I told you guys these TweakTown Vega 56 leaked numbers were fake, or min fps at best.

Presbytier

[H]ard|Gawd

- Joined

- Jun 21, 2016

- Messages

- 1,058

What I am curious about is why people got what they did. Respectable YouTubers got the air-cooled 64, but AdoredTV got the liquid-cooled one. Some got only the Vega 56.

Now the real slaughter will begin for Vega 64 tomorrow. There is a reason TechSpot/Hardware Unboxed only received Vega 56: they test 25+ games, and the more you expand your results, the more miserable Vega 64 becomes.

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,691

AMD has done it. They've matched the GTX 1070, at the same MSRP, and it only took them a meager 429 days. Welcome back AMD, and congratulations to Raja and everyone at RTG.

AMD has done it. They've matched the GTX 1070, at the same MSRP, and it only took them a meager 429 days. Welcome back AMD, and congratulations to Raja and everyone at RTG.

While consuming 40% more power, this card should be 50 bucks less.

Damage control.

What I am curious about is why people got what they did. Respectable YouTubers got the air-cooled 64, but AdoredTV got the liquid-cooled one. Some got only the Vega 56.

JustReason

razor1 is my Lover

- Joined

- Oct 31, 2015

- Messages

- 2,483

Is this to scale? Maybe the best graph yet. Guaranteed TRUE. lol.

HOLY SHIT DUDE VEGA GETS DESTROYED

Is this to scale? Maybe the best graph yet. Guaranteed TRUE. lol.

shad0w4life

Gawd

- Joined

- Jun 30, 2008

- Messages

- 689

41MH/s is pretty damn good

Magical one-month drivers just turned into a pumpkin. So mining isn't good either :/ Well, that sucks.

41MH/s is pretty damn good

Not good enough at 300 watts. For 100 bucks more I can get two 1070s that give 20% more hashrate, or for 100 bucks less two 580s that give 10% more hashrate. The two 1070s will also use about 30% less power, and the RX 580s about 20% less.

You can't change the HBM2 clocks much on Vega either, which really hurts it: tuning for efficiency the way we do with the 580s or the 1070s isn't going to happen.

So it makes no sense for miners to buy these cards right now.
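Taking the percentages in the post above at face value, and assuming they are all relative to a single Vega 64 at the quoted 41 MH/s and 300 W, a quick perf-per-watt check shows why the two-card setups come out ahead. These are the poster's rough figures, not measurements, and the option labels are just illustrative.

```python
# Baseline figures quoted in the thread for one Vega 64 (not independently verified).
VEGA_HASHRATE_MHS = 41.0
VEGA_POWER_W = 300.0

# (hashrate factor, power factor) per the poster's percentages,
# ASSUMED to be relative to a single Vega 64.
options = {
    "1x Vega 64": (1.00, 1.00),
    "2x GTX 1070": (1.20, 0.70),  # 20% more hashrate, 30% less power
    "2x RX 580": (1.10, 0.80),    # 10% more hashrate, 20% less power
}


def efficiency(hash_factor: float, power_factor: float) -> tuple[float, float, float]:
    """Return (hashrate in MH/s, power in W, MH/s per watt) for one option."""
    hashrate = VEGA_HASHRATE_MHS * hash_factor
    power = VEGA_POWER_W * power_factor
    return hashrate, power, hashrate / power


for name, (h, p) in options.items():
    rate, watts, eff = efficiency(h, p)
    print(f"{name:12s} {rate:5.1f} MH/s {watts:5.0f} W {eff:.3f} MH/s per W")
```

That works out to roughly 0.137 MH/s per watt for the Vega 64, 0.188 for the pair of 580s and 0.234 for the pair of 1070s, before any memory tuning on the Polaris or Pascal cards.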

jkw

Gawd

- Joined

- Oct 10, 2004

- Messages

- 608

Is this to scale? Maybe the best graph yet. Guaranteed TRUE. lol.

WOW! Vega totally smoked the competition! Lower score is better for this graph, obviously.

Uh... not exactly. If I want numbers and want them quick, I'll watch a short video showing various games with mins/max/avgs while being compared against other cards. BUT, if I want an in-depth technical review, I'll read PCPer and AnandTech.

Looking at charts is faster than skimming through a video, based on my experience.

Oh so bad. I thought for sure Vega 56 would have a clear, albeit small, lead over GTX 1070. But if that graph is translated correctly.... this is overwhelmingly bad. Vega 64 will be 10+% slower than 1080 on average (except for DOOM and maybe one or two other games), and Vega 56 will be neck and neck with the 1070. WOW. So, so bad.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

OMG. I feel like I am looking at photo proof of lizard people or something.

Enhanced images... Looks like 1070 performance for the Vega 56. Nothing special, just matching Nvidia performance and price from 16 months ago at higher power consumption.

Ocellaris

Fully [H]

- Joined

- Jan 1, 2008

- Messages

- 19,077

With it being that pixelated we have no idea if it is real or not.

OMG. I feel like I am looking at photo proof of lizard people or something.

FrameBuffer

Limp Gawd

- Joined

- May 1, 2006

- Messages

- 310

It is he, whose name cannot be spoken...

Oh hey Voldemort!

AlexFromAU

n00b

- Joined

- Aug 9, 2017

- Messages

- 6

Time to dump your 500w power supplies. A Korean website put their Vega review online.

Original link: http://www.hwbattle.com/bbs/board.php?bo_table=hottopic&wr_id=7333&ckattempt=1

Archived link: http://archive.is/uYMVU

Is this to scale? Maybe the best graph yet. Guaranteed TRUE. lol.

made my fuckin day! ROFL!

Time to dump your 500w power supplies. A Korean website put their Vega review online.

Original link: http://www.hwbattle.com/bbs/board.php?bo_table=hottopic&wr_id=7333&ckattempt=1

Archived link: http://archive.is/uYMVU

Looking at the results, it trades blows with the 1080, and an overclocked 1080 will be on par with or above Vega Liquid in most cases. But boy, that power consumption is no joke...

Edit: Looks like running games in DX11 will most likely put the 1080 above Vega Liquid.

jkw

Gawd

- Joined

- Oct 10, 2004

- Messages

- 608

I'm not impressed. :/ I suppose I'll still go pick mine up in a few hours, play with it, wait for new drivers, and then decide. Can always sell it to the miners for a profit if I don't like it.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

Time to dump your 500w power supplies. A Korean website put their Vega review online.

Original link: http://www.hwbattle.com/bbs/board.php?bo_table=hottopic&wr_id=7333&ckattempt=1

Archived link: http://archive.is/uYMVU

I guess my getting a 1000W power supply actually paid off.

I'm not impressed. :/ I suppose I'll still go pick mine up in a few hours, play with it, wait for new drivers, and then decide. Can always sell it to the miners for a profit if I don't like it.

It's been confirmed that it hashes no better than the FE. The rumors were untrue and likely made up to drive up initial sales. No miners will buy it with that power consumption.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

If those benchmarks are real, it's a little disappointing but not all that unexpected. It seems to trade blows with the 1080 in some games, but it's getting beaten pretty badly in others.

Though I'm already kind of committed to keeping this rig AMD, so I will buy regardless.
