peanuthead

Supreme [H]ardness

- Joined

- Feb 1, 2006

- Messages

- 4,699

Got it. Thanks for clarifying.


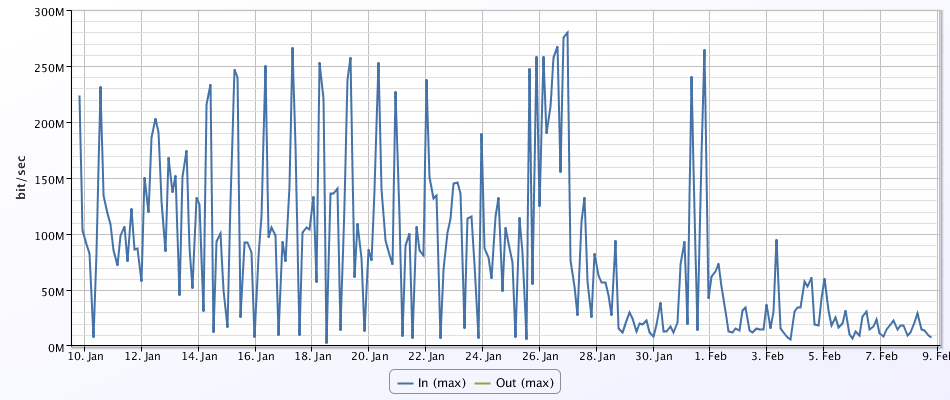

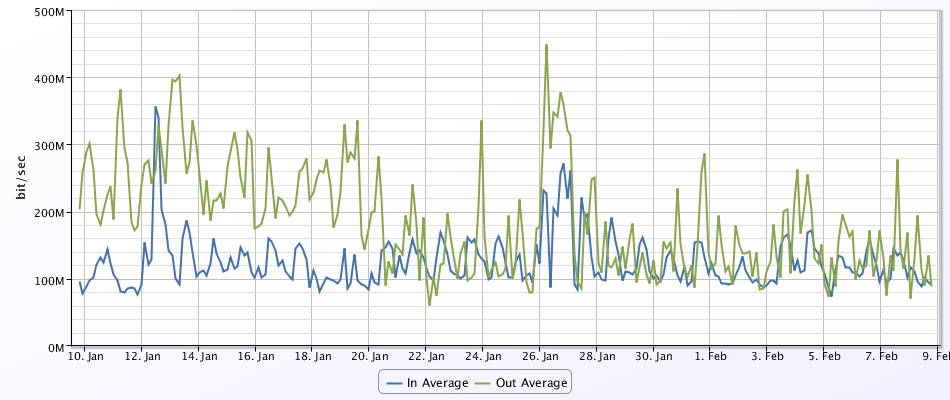

There are multiple 1GbE connections, and storage, production, and management are all separate. But for storage, each ESXi host only uses a single 1GbE link back to the NetApp. So as far as read/write throughput and IOPS go, we are a bit lacking due to that bottleneck.

Let's set aside the obvious lack of network redundancy to your storage array for a moment. Are you actually saturating that GbE link? I assume your vMotion traffic has its own GbE link as well, or is it running on the same link as your iSCSI traffic?

Typically we find that bandwidth is rarely the problem. It's usually a misconfiguration somewhere or, simply put, your disks can't keep up with the workload demand.
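To put a number on "are you actually saturating that GbE link", here is a rough sketch that converts an observed throughput into a fraction of raw line rate. The function name and the 30 MB/s sample are made up for illustration, and the math ignores protocol overhead, so real-world saturation shows up somewhat below 100%.

```python
def link_utilization(throughput_mb_per_s: float, link_gbit_per_s: float = 1.0) -> float:
    """Return the fraction of raw link bandwidth in use (1.0 means line rate)."""
    # 1 Gbit/s = 1000 Mbit/s = 125 MB/s raw (decimal units), before protocol overhead.
    link_mb_per_s = link_gbit_per_s * 1000 / 8
    return throughput_mb_per_s / link_mb_per_s


# Hypothetical sample: a host averaging 30 MB/s to the array over 1GbE.
print(f"{link_utilization(30.0):.0%}")  # 24%
```

If the peaks in your own throughput graphs stay well under the line rate, the link is probably not the bottleneck and latency is coming from somewhere else.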

Whoever told you how many VMs you can run on your current storage without knowing the workload requirements should find a new occupation. Is that harsh? Probably, but think about the risk a statement like that creates. How the hell can anyone say that without knowing the workload? I've seen environments that require tens of thousands of IOPS for a three-tier application with just three VMs, and your storage couldn't even come close to delivering that. It all depends on the workload. This is why a thorough assessment should be done.

Let's move on to your network. For Ethernet-based storage in vSphere on 1Gb you should have at least 6 NICs, preferably 8, but 6 will allow you to dedicate uplinks to specific traffic. In a 6-NIC setup on standard vSwitches:

vSwitch 0: Mgmt and vMotion, each with 1 NIC active and 1 NIC standby, using opposite vmnics

vSwitch 1: NFS. We can talk more about "balancing" load, but at least have two NICs to accommodate failure

vSwitch 2: VM port groups
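The 6-NIC layout above can be written down as data and sanity-checked for single points of failure. This is only a sketch: the `vmnic0` to `vmnic5` names and the `VSwitch` class are assumptions for illustration, not something pulled from a real host or from any VMware API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VSwitch:
    name: str
    traffic: List[str]
    uplinks: List[str]  # physical NICs backing this vSwitch (hypothetical names)


# The 6-NIC layout described above, two uplinks per vSwitch.
LAYOUT = [
    VSwitch("vSwitch0", ["Management", "vMotion"], ["vmnic0", "vmnic1"]),
    VSwitch("vSwitch1", ["NFS"], ["vmnic2", "vmnic3"]),
    VSwitch("vSwitch2", ["VM port groups"], ["vmnic4", "vmnic5"]),
]


def single_points_of_failure(layout: List[VSwitch]) -> List[str]:
    """Return the names of vSwitches that have fewer than two uplinks."""
    return [vs.name for vs in layout if len(vs.uplinks) < 2]


print(single_points_of_failure(LAYOUT))  # []
```

A host that sends storage traffic over a single 1GbE uplink would show up in that list, which is the redundancy gap mentioned earlier.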

You need someone to come in to do an assessment in your environment with industry standard tools AND to understand your business growth patterns and requirements.

Other than the load balancing of NFS, is there any benefit to having multiple datastores on a single aggregate for 1 shelf? I mean, they are all using the same hard drives anyway.

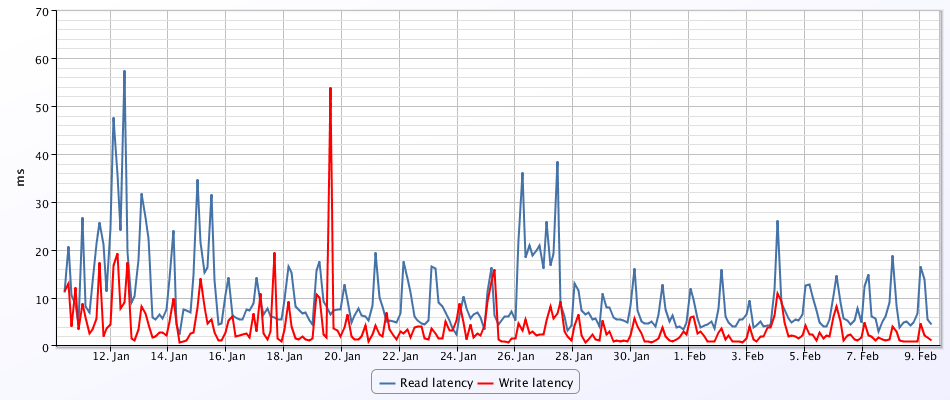

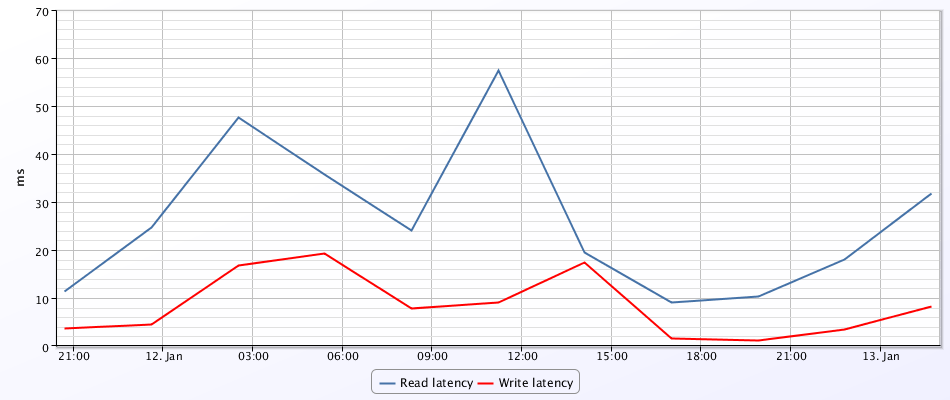

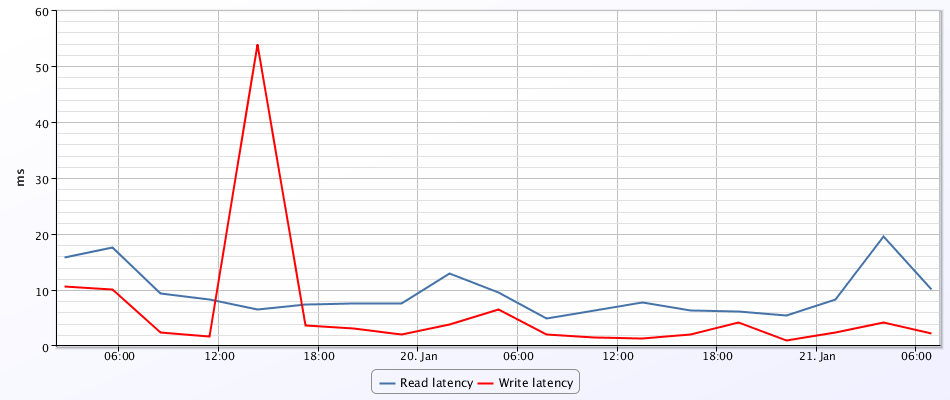

Looks like latency spikes around noon and midnight each day. Anything running during those times? AV scans? Backups? Replication? Batch jobs?

Also, check to make sure none of your VMs are swapping. That puts a good deal of extra load on your datastores they don't need. If you don't know how to check, download RVTools and run it against your vCenter server, go to the vRAM tab, and check the Swap Used and Limit fields. All your VMs should list 0 swap used and -1 for limit.
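If you export that tab to CSV, the same check can be scripted. The sketch below assumes column headers named `VM`, `Swap Used`, and `Limit`; those header names are guesses and may not match what your RVTools version actually writes, so adjust them to your export. The sample rows are invented.

```python
import csv
import io


def flag_memory_problems(csv_text, name_col="VM", swap_col="Swap Used", limit_col="Limit"):
    """Return (vm, swap_used, limit) for VMs that are swapping or have a memory limit set."""
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        swap = int(row[swap_col])
        limit = int(row[limit_col])
        # Healthy VMs show 0 swap used and a limit of -1 (unlimited).
        if swap != 0 or limit != -1:
            flagged.append((row[name_col], swap, limit))
    return flagged


# Invented sample data; column names are assumptions.
SAMPLE = """VM,Swap Used,Limit
web01,0,-1
rds02,512,-1
db03,0,4096
"""

print(flag_memory_problems(SAMPLE))
# [('rds02', 512, -1), ('db03', 0, 4096)]
```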

One definite benefit is that if VMFS becomes corrupted, or if a run-away snapshot fills up your entire datastore, you only crash so many VMs. If all of your VMs run on the same datastore then you crash all of them.

Permissions could matter if you run VMs for 3rd parties that have a login to your vCenter to provision their own drives. You want to keep those parties separate from your main environment so they don't monkey with storage that doesn't "belong" to them.

Depending on what product(s) you are using to run your VMs, and whether it is applicable in your environment, you may want to look into running some of your VMs as linked clones, which may eliminate the pressing need for dedup on the storage side.


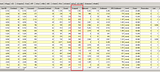


lol

YES

Your hosts are running out of RAM, have tried ballooning to save memory, but ultimately are having to resort to swapping to satisfy VM memory demands. That will kick the crap out of your storage because your datastores are being used as swap space.

You either need to lower the amount of memory assigned to VMs (which may not even help since they're already ballooning quite a bit), increase the RAM in your hosts, or add more hosts.


Yeah, we are supposed to get more hosts. I think I will push for that before going to 10GbE. Right now there are 15 hosts in a single cluster and it is a mess. For one, not all servers are the same. Memory ranges from 64 GB to 144 GB. Since some CPUs are different generations, the EVC mode is set to Penryn. Not to mention they don't all have the same number of NICs. I want to create more clusters, raise the EVC mode to where it should be, and try to better match up clusters with the types of VMs that are running. This way the memory hogs are in a cluster with plenty of memory. I would like at least two new hosts with either 256 GB or 384 GB of RAM and two Intel Xeon E5-2650 v2 or better.

I'm nowhere near the knowledge level of Vader or CoW, so my responses won't be as good.

That is some crazy swapping going on. In reading about your host hardware I was reminded about vCenter/DRS. If memory serves me correctly, when you have multiple hosts with such drastically different configs, doesn't vCenter/DRS have a difficult time knowing when to run and not run? (Sort of an open question.) I know you said you don't run it that often during the day; I'm just throwing that out there.

I am also no expert on swapping, but I think there is only about 10 GB of memory being swapped total on a shelf with 8.95 TB of storage. Is that really a lot of swapping?

As for DRS, I would like to get the clusters to a point where there isn't much DRS required, especially since some VMs, like our RDS farm, just can't be migrated without crashing.


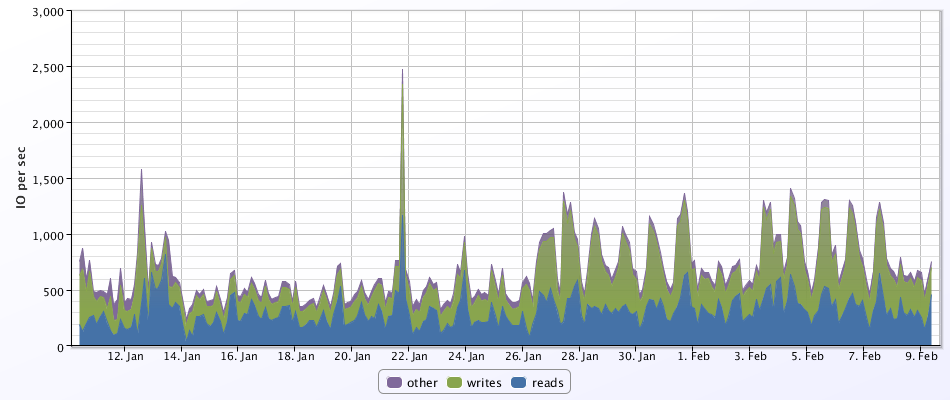

When you get to swapping you're in a bad area. Generally you don't want to get to that area. I believe the memory reclaim order is TPS, ballooning, memory compression, then host swapping. Crazy; perhaps not. Remember, the swapping is not based on the storage shelf but on the hosts' RAM usage versus capacity. The bottom line is that storage is being unnecessarily taxed.

It's not the amount of swap space being used, it's the unnecessary IOPS hitting your storage. Think about how many reads and writes go in and out of the RAM on your computer, and how you have a dozen+ VMs all using your storage array as RAM. Spinning disks, no matter how many of them are in your NetApp, weren't made to handle IO designed for RAM.

In an earlier graph you showed your NetApp averaging 500+ IOPS, sometimes spiking over 1,000. I'd be willing to bet that number drops a good deal once you eliminate all the VM swapping, and you'd see your average latency drop a good deal as well.

Cool, thanks for the info. Is increasing the amount of memory a VM needs the only way to avoid swapping?

NO! Don't increase the RAM on the VM. You will cause a bigger issue. Either add RAM to the host(s) OR lower the RAM assigned to VMs. Since you are beyond ballooning, as said before, I doubt lowering the RAM on the VMs will help much. Still, if you can reduce the RAM assigned to a VM (because it's not being used), then do so. This is just best practice: assign the RAM the VM is actually using, not what someone wants the value to be.
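For a feel of the numbers, here is a small sketch of the two quantities in play: how far a host's assigned VM memory exceeds its physical RAM, and how much could be trimmed from one VM based on its observed peak. The function names, the 25% headroom, and all sample values are assumptions for illustration; none of them come from this environment.

```python
def overcommit_ratio(host_ram_gb, vm_assigned_gb):
    """Total RAM assigned to VMs divided by the host's physical RAM."""
    return sum(vm_assigned_gb) / host_ram_gb


def reclaimable_gb(assigned_gb, peak_used_gb, headroom=0.25):
    """RAM that could be taken back from a VM while keeping headroom above its peak use."""
    target = peak_used_gb * (1 + headroom)
    return max(0.0, assigned_gb - target)


# Hypothetical host with 64 GB of RAM running four VMs.
print(overcommit_ratio(64, [16, 16, 24, 32]))  # 1.375
# Hypothetical VM assigned 32 GB that never peaks above 8 GB.
print(reclaimable_gb(32, 8))  # 22.0
```

A ratio above 1.0 is not automatically a problem, since TPS and ballooning absorb some of it, but the further above it you go, the more likely the host ends up swapping.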

Ah, gotcha. I need to do more reading on this stuff. My proposed two new ESXi hosts will increase our overall memory capacity by 42%. If they go for it, hopefully that will eliminate these memory problems.

It will certainly help. As a best practice, don't over-assign resources to a VM if the VM doesn't need them. It never hurts to go through periodically and inventory the VMs that you are running. This helps keep things nice and tidy.

I agree. But some of these VMs are paid for by customers that wanted a specific amount of memory allocated to their VM. Since they are paying for that much memory, I'm not sure how to go back to them and say, hey, we are going to decrease your memory allocation because you are not using it.


I find the above highly problematic. Your customers paid for memory that you aren't providing to them. If I pay my host for 1 GB of RAM then I expect that 1 GB to be available to me and not just 700 MB of physical RAM and 300 MB of RAM that resides on a swap disk. I am trying really hard to stay polite here but companies like the one you work for are basically ripping people off. It's at least unethical, and depending on your terms of service it may be in legally questionable territory.

I am not going to argue with you there, but it is not always easy to have all resources available when customers make requests on the fly.

And another client that has access to their own resource pool apparently gave themselves quite a few extra GB of memory they aren't even paying for. I believe they are paying for 64 GB of memory, have VMs totaling 164 GB, and are actively using 121 GB of memory.
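Working through those figures (64 GB paid, 164 GB allocated, 121 GB active) shows how far over that pool is. The function and key names below are made up for illustration; only the three numbers come from the post.

```python
def pool_overage(paid_gb, allocated_gb, active_gb):
    """Compare what a resource pool pays for against what it has allocated and is using."""
    return {
        "allocated_over_paid_gb": allocated_gb - paid_gb,
        "active_over_paid_gb": active_gb - paid_gb,
        "allocated_ratio": allocated_gb / paid_gb,
    }


result = pool_overage(paid_gb=64, allocated_gb=164, active_gb=121)
print(result["allocated_over_paid_gb"])     # 100
print(result["active_over_paid_gb"])        # 57
print(round(result["allocated_ratio"], 2))  # 2.56
```

So the pool has roughly 2.5 times its paid memory allocated, and is actively using 57 GB more than it pays for.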

I think part of the problem is that our guys don't like to tell customers "no," or that they need to wait until we procure more resources. They try to make everyone happy and then give me a huge headache.