I'm looking for advice on how to consolidate my current storage solution to better leverage some of my existing hardware as well as make some upgrades.

My main storage is configured as follows:

Supermicro X10SRL-F motherboard

E5-2680 v3 CPU - 12 Core @ 2.5GHz

32GB of DDR4 RAM

Intel X520 dual SFP+ 10G NIC

Areca ARC-1882IX-16 RAID Controller

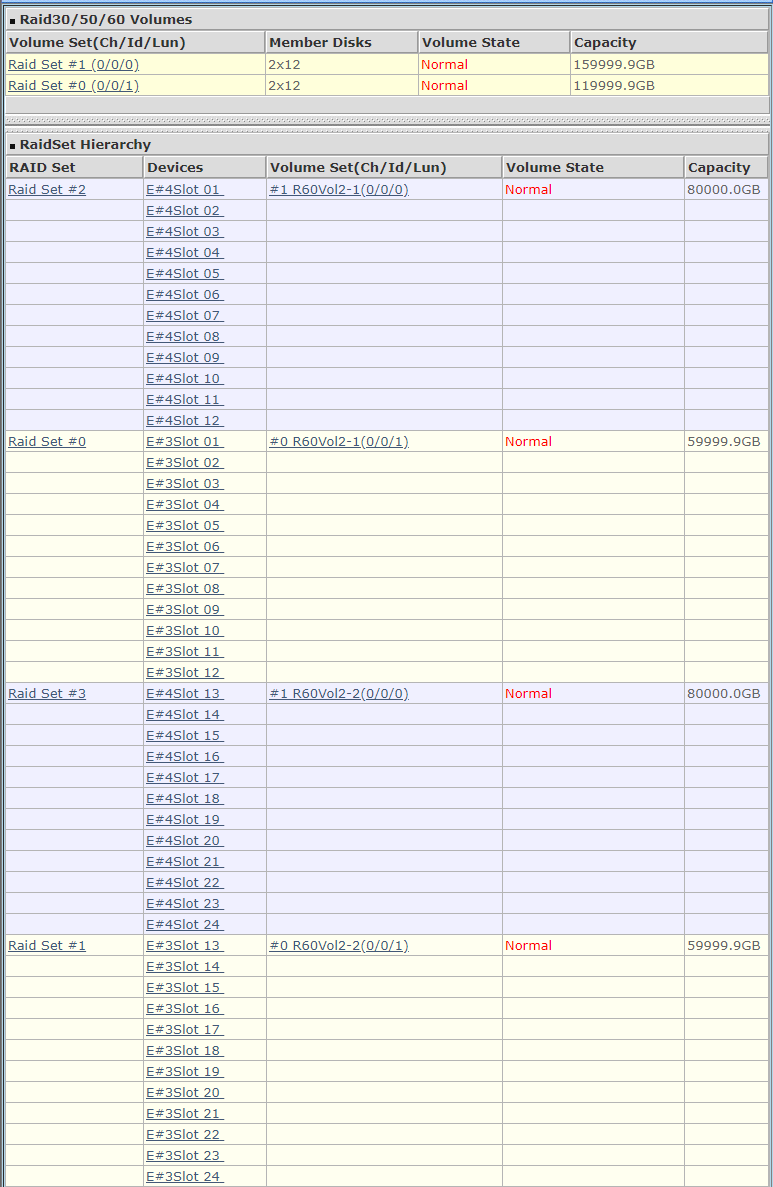

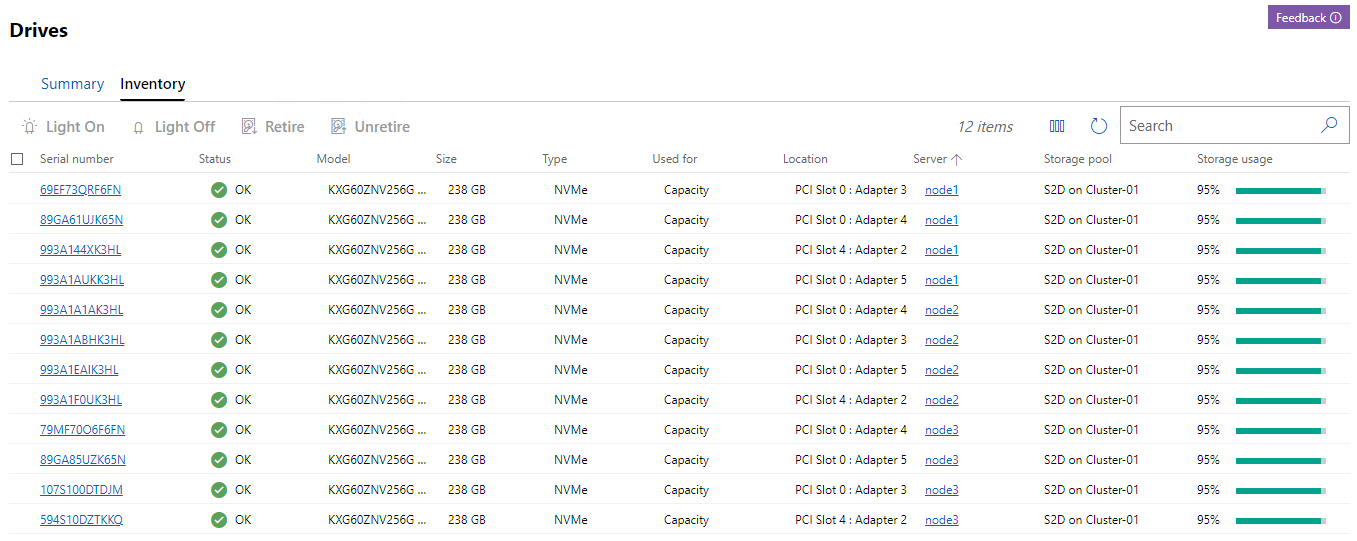

The storage is spread across 2 Supermicro 846 chassis and contains a pair of RAID60 arrays configured as follows:

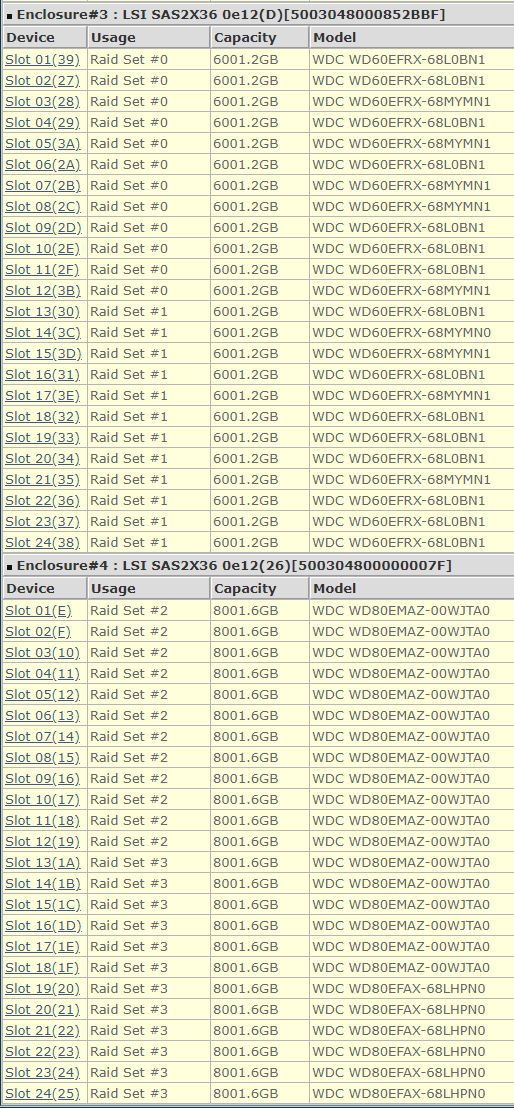

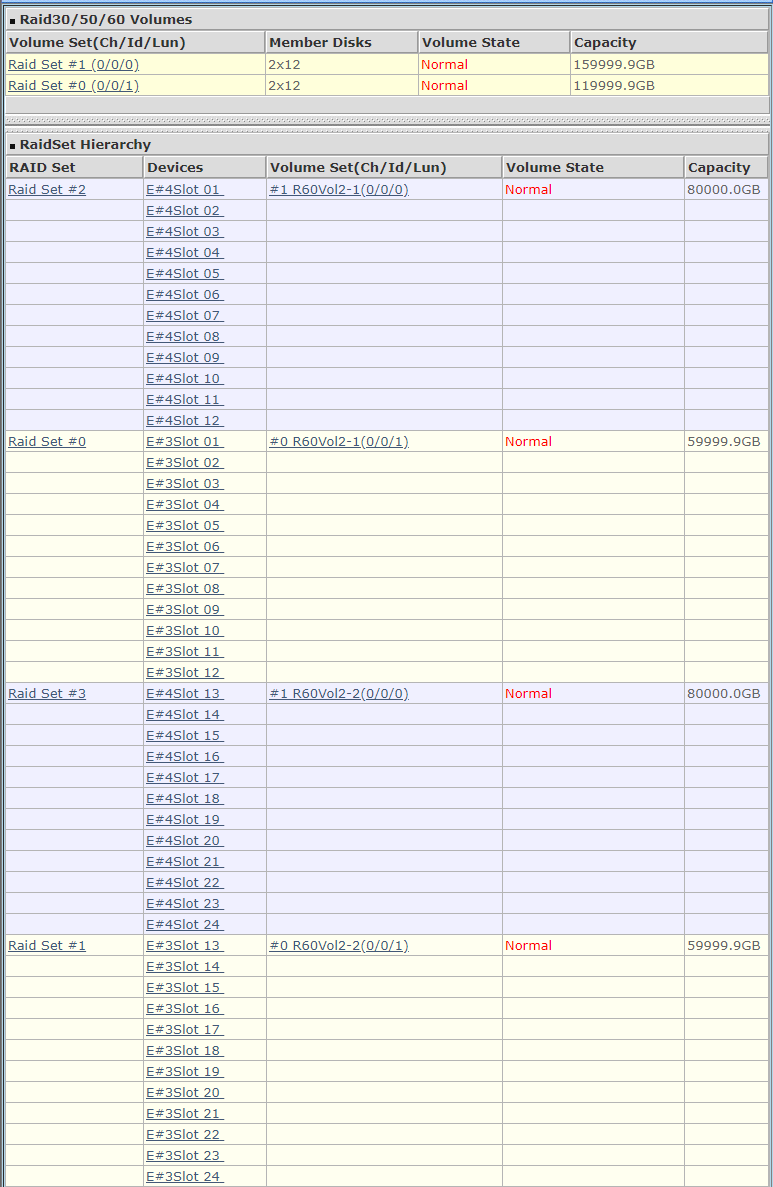

The drives in each chassis are as follows:

I have had this setup for years and it has been rock solid. Sure, I'll lose a drive from time to time, but I always have cold spares on hand, and replacing a failed drive has always brought the array back to normal.

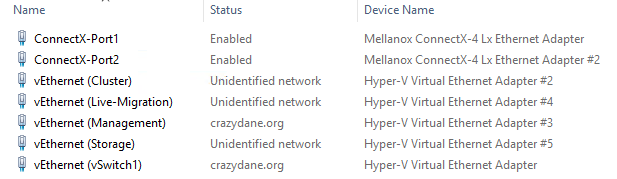

In addition to the main storage server above, I have a 3 Node Storage Spaces Direct cluster where each node consists of the following:

Supermicro X10SRL-F motherboard

E5-2680 v3 CPU - 12 Core @ 2.5GHz

32GB of DDR4 RAM

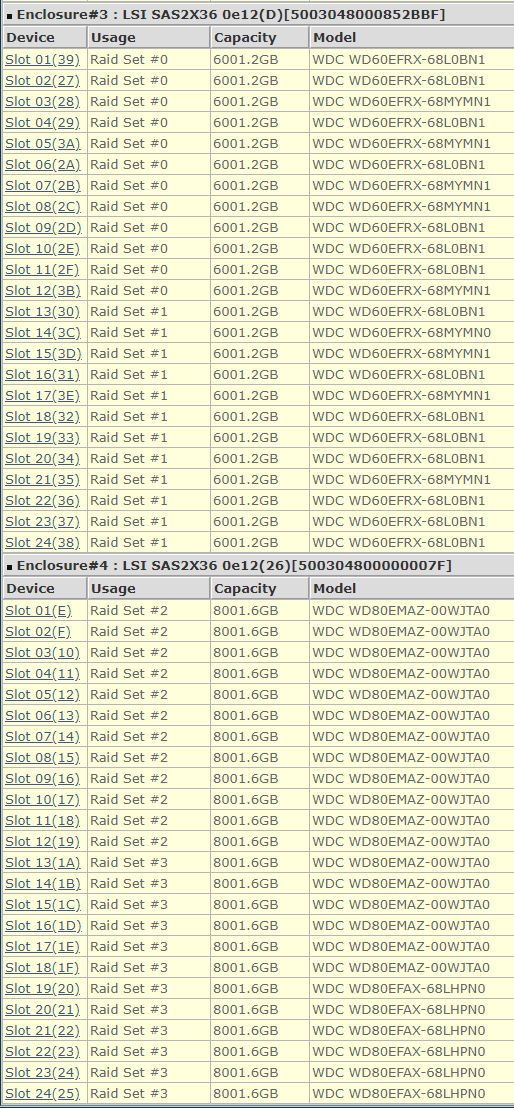

Mellanox ConnectX-4 CX4121A SFP28 Dual 25Gbps NIC

2x Supermicro AOC-SLG3-2M2 PCIe to dual NVMe adapters

4x Toshiba 256GB NVMe flash drives

The X10s have bifurcation enabled so that each of the 4 NVMe drives in a node has 4x PCIe 3.0 lanes to the CPU.

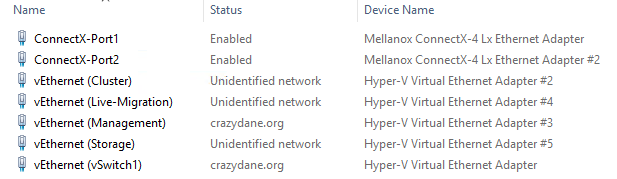

Each node has both 25Gbps LAN connections going to a Dell S5148F-ON switch and has the following vSwitch configuration:

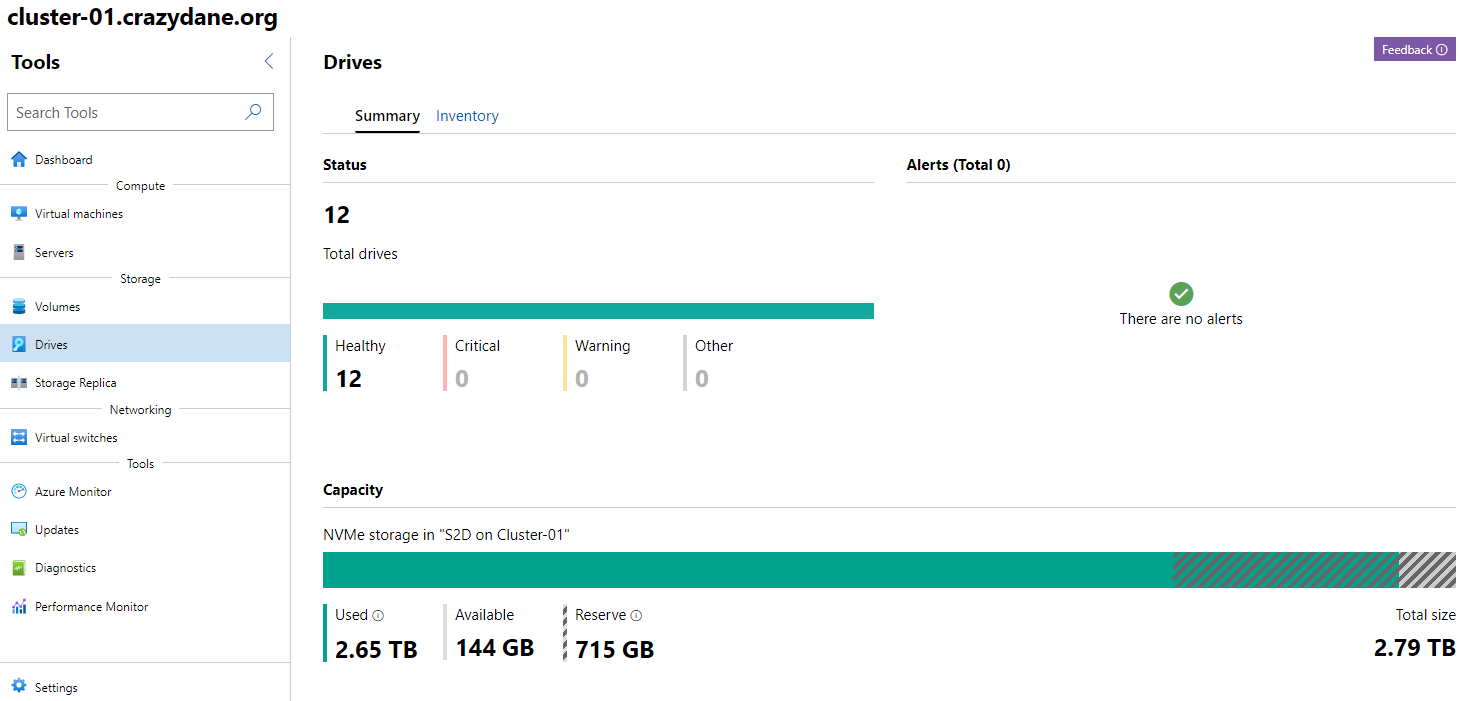

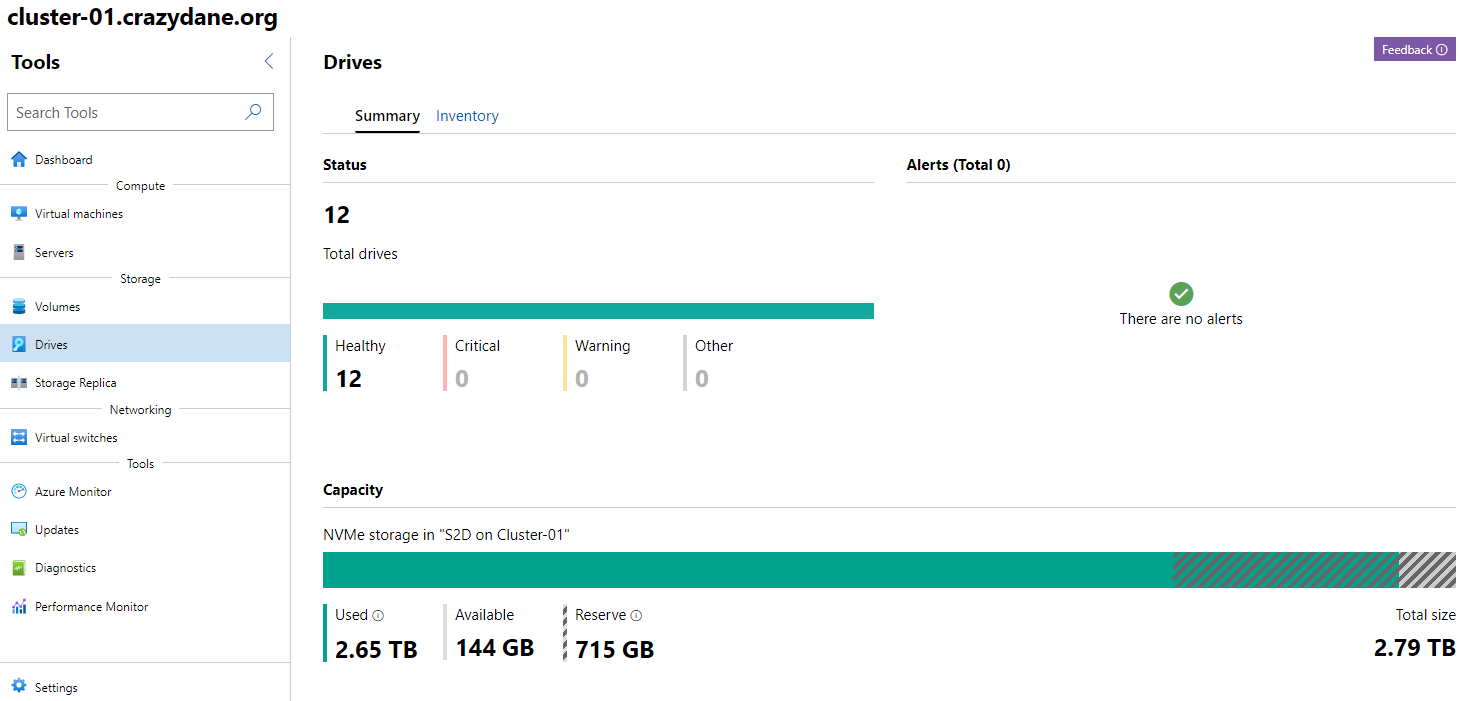

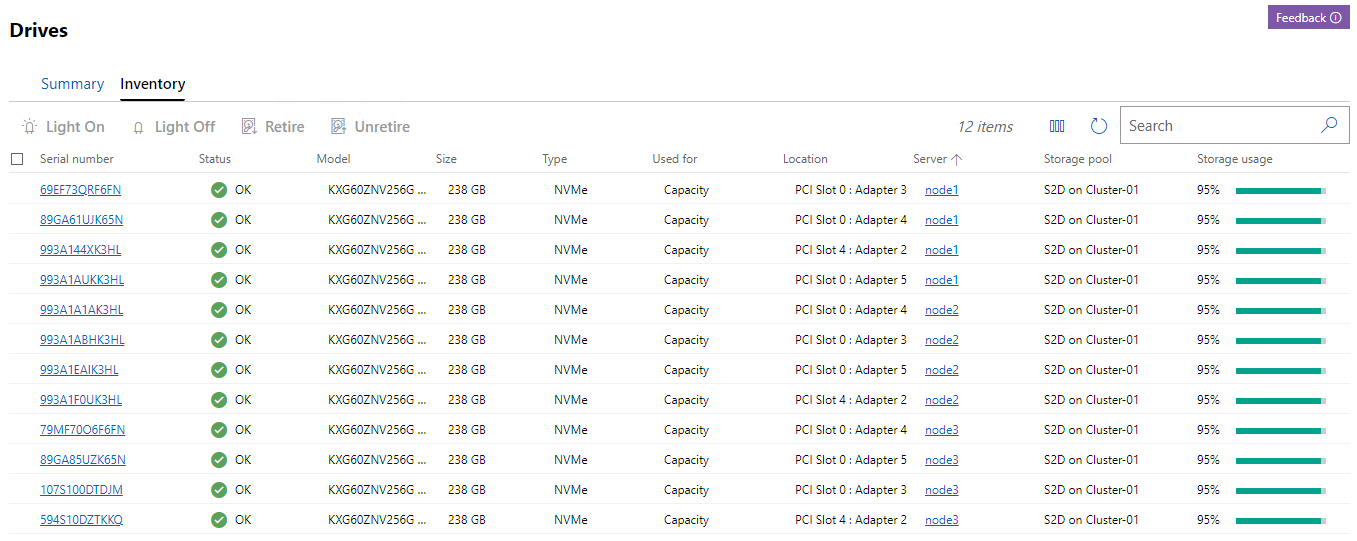

This then allowed me to validate the cluster and deploy S2D across the 3 nodes, which gave me the following:

I created a single VD using all the available space, which ended up being only about 900GB because with only 3 nodes, S2D insists on doing a 3-way mirror. This means my storage efficiency is only 33%.
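As a rough sanity check on that number, here is a minimal sketch of the mirror arithmetic. The function name is hypothetical, and it assumes every NVMe drive contributes its full 256GB to the pool; it ignores reserve capacity, metadata, and GB vs GiB reporting, any of which could plausibly account for the gap down to roughly 900GB.

```python
def mirror_usable_gb(nodes: int, drives_per_node: int, drive_gb: float, copies: int) -> float:
    """Usable capacity of an N-way mirror: raw capacity divided by the number of copies."""
    raw_gb = nodes * drives_per_node * drive_gb
    return raw_gb / copies


# Figures from the post: 3 nodes, 4x 256GB NVMe drives each, 3-way mirror.
raw_gb = 3 * 4 * 256
usable_gb = mirror_usable_gb(nodes=3, drives_per_node=4, drive_gb=256, copies=3)

print(f"raw: {raw_gb} GB, usable before overhead: {usable_gb:.0f} GB")
print(f"efficiency: {usable_gb / raw_gb:.0%}")
```

The theoretical ceiling comes out a little above what the cluster actually reported, which is consistent with some capacity being held back.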

If I go to 4 nodes, I have 50% efficiency, which still sucks compared to my RAID60 arrays, which have an efficiency of 83% ((1 - 2/12) * 100).

S2D is pretty slick, but the 50% efficiency bites. It would take going to 7 nodes to get to 67% and 9 nodes to reach 75%, and you finally max out at 80% efficiency with 16 nodes.
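For comparison, the efficiency figures above can be reproduced with a short sketch. The function names are hypothetical. The RAID60 case assumes 12-drive RAID 6 spans, as implied by the 2/12 in the post. The S2D data/parity layouts in the table are the ones I understand Microsoft to document for dual parity at those node counts, so treat them as assumptions rather than something confirmed on this cluster.

```python
def raid6_span_efficiency(drives_per_span: int, parity_drives: int = 2) -> float:
    """Fraction of a RAID 6 span that holds data. Striping spans into RAID60 does not change it."""
    return (drives_per_span - parity_drives) / drives_per_span


def parity_layout_efficiency(data_symbols: int, parity_symbols: int) -> float:
    """Fraction of an erasure-coded stripe that holds data."""
    return data_symbols / (data_symbols + parity_symbols)


# Assumed S2D dual-parity layouts: node count -> (data symbols, parity symbols).
assumed_s2d_layouts = {
    4: (2, 2),    # 50%
    7: (4, 2),    # about 67%
    9: (6, 2),    # 75%
    16: (12, 3),  # 80%, two global parities plus one local parity per group
}

print(f"RAID60, 12-drive spans: {raid6_span_efficiency(12):.1%}")
for nodes, (data, parity) in assumed_s2d_layouts.items():
    print(f"S2D parity, {nodes} nodes: {parity_layout_efficiency(data, parity):.1%}")
```

Even at 16 nodes the assumed parity layout stays just under what a 12-drive RAID 6 span already delivers.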

So moving all my spinners to S2D is not an option. Besides, my NVMe cache is way too small to support that anyway.

So other than going to a real SAN, are there any solutions that one can assemble using off-the-shelf components to get tiered storage?

Alternatively, perhaps upgrading from my Areca 1882 to a Broadcom MegaRAID 9580-8i8e would be a viable alternative?

This way I could stand up my RAID60 arrays on it as my fixed large-volume tier, and also move all my NVMe storage to it as my high-speed tier for VMs.

If I went this route, I would need to track down a chassis with a backplane that can support 32x NVMe drives and has an 8x SFF-8654 connector to match the internal connector on the MegaRAID 9580.

Supermicro has this guy:

But they only sell it turn-key, starting at around $5,500, which is way too rich for my blood.

Any less expensive solutions out there?

In a nutshell, I'm looking for a single-server solution that can handle my RAID60 arrays as well as a single NVMe array that I can grow over time. A 32-slot solution like the SMC pictured above would fit the bill nicely.

I would probably build this new solution on this soon-to-be-released SMC motherboard:

https://www.supermicro.com/en/products/motherboard/H12SSL-i

Thanks!

