Hey all,

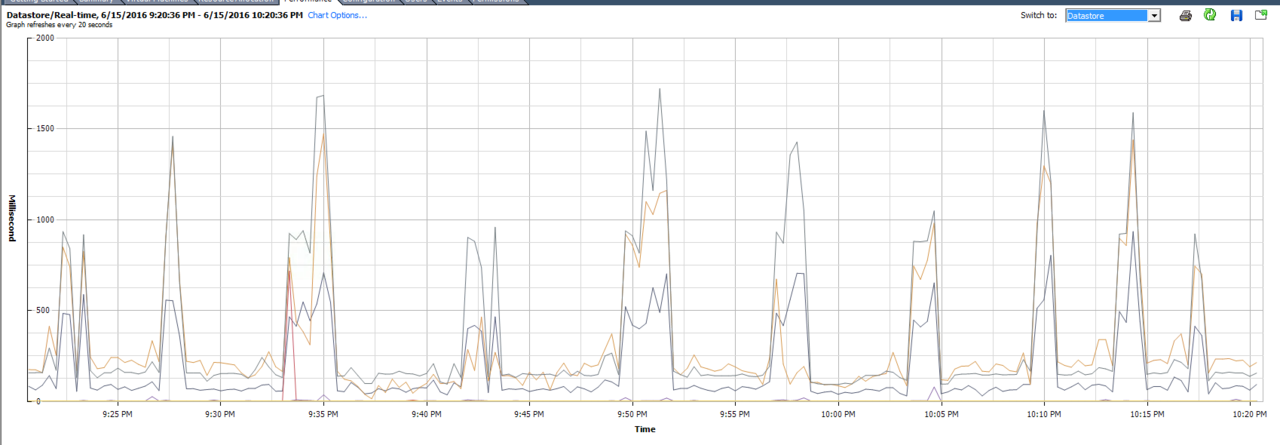

I currently have two whitebox ESXi hosts running in my home lab. They share an iSCSI connection to a QNAP 4-bay NAS. Looking at the datastore latency figures, I'm noticing some rather high numbers, which got me thinking.

Server one is an Intel i5-6500 with 32 GB of RAM.

Server two is an Intel i7-5820K with 64 GB of RAM.

Given the number of VMs I have running and the amount of RAM in server two, I know I can run everything on server two and still have room to grow.

What I'm thinking is: should I convert server one into a FreeNAS box? My concern is that both servers have a single gigabit network connection. I'll max out that link while everything is booting, and once everything is running I'll most likely sit at around 25% utilization.
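
To put rough numbers on that, here's a quick back-of-the-envelope sketch. It assumes decimal units (1 Gb/s = 10^9 bits/s, 8 bits per byte) and ignores Ethernet/TCP/iSCSI overhead, so real-world throughput will land somewhat below these raw figures. The function names are just for illustration.

```python
# Back-of-the-envelope throughput for gigabit links.
# Assumptions: decimal units (1 Gb/s = 1e9 bits/s), 8 bits per byte,
# and NO protocol overhead applied, so these are raw line-rate ceilings.


def raw_mb_per_s(link_gbps: float) -> float:
    """Raw line rate of a link in MB/s (decimal megabytes)."""
    return link_gbps * 1000 / 8


def share_mb_per_s(link_gbps: float, utilization: float) -> float:
    """Throughput at a given fraction (0.0 to 1.0) of the raw line rate."""
    return raw_mb_per_s(link_gbps) * utilization


if __name__ == "__main__":
    print(f"1 Gb/s raw:       {raw_mb_per_s(1.0):.1f} MB/s")
    print(f"25% of 1 Gb/s:    {share_mb_per_s(1.0, 0.25):.2f} MB/s")
    print(f"2 Gb/s aggregate: {raw_mb_per_s(2.0):.1f} MB/s")
```

That works out to about 125 MB/s raw per gigabit link, so 25% utilization is roughly 31 MB/s per host before overhead.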

The QNAP has two gigabit ports, which I've port-channeled together to present a 2 Gb link. However, after comparing the storage latency VMware reports with the disk latency shown in the QNAP manager... I'm thinking I could do better.
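
One thing worth keeping in mind with the port channel: link aggregation (802.3ad/LACP) typically keeps each flow on a single member link, so a single iSCSI session from one host generally won't exceed 1 Gb/s. Each host's single gigabit NIC caps it at 1 Gb/s anyway. The sketch below models those ceilings under simplifying assumptions (one iSCSI session per host, no MPIO, no protocol overhead); the helper names are hypothetical.

```python
from typing import Sequence


def host_ceiling_gbps(host_nic_gbps: float, bond_member_gbps: float) -> float:
    """Max rate for one host's single iSCSI session.

    Assumption: with per-flow hashing, one session rides a single bond
    member, so it is limited by the slower of the host NIC and that link.
    """
    return min(host_nic_gbps, bond_member_gbps)


def aggregate_ceiling_gbps(host_nics_gbps: Sequence[float], nas_bond_gbps: float) -> float:
    """Best-case combined rate into the NAS across all hosts.

    Assumption: flows from different hosts hash onto different members.
    If they collide on the same member link, they share that one link.
    """
    return min(sum(host_nics_gbps), nas_bond_gbps)


if __name__ == "__main__":
    # Current setup: two hosts with one 1 Gb/s NIC each, QNAP bonded 2 x 1 Gb/s.
    print(host_ceiling_gbps(1.0, 1.0))
    print(aggregate_ceiling_gbps([1.0, 1.0], 2.0))
    # Everything consolidated onto server two only.
    print(aggregate_ceiling_gbps([1.0], 2.0))
```

In other words, consolidating onto server two would put all VM storage traffic through that host's one gigabit port, regardless of the QNAP's 2 Gb bond.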

The disks are 4 x 1 TB WD Reds.
