Once MC sees these aren't the hit they expected, the price will be $199 very quickly, maybe even over the weekend. I'll get one then.

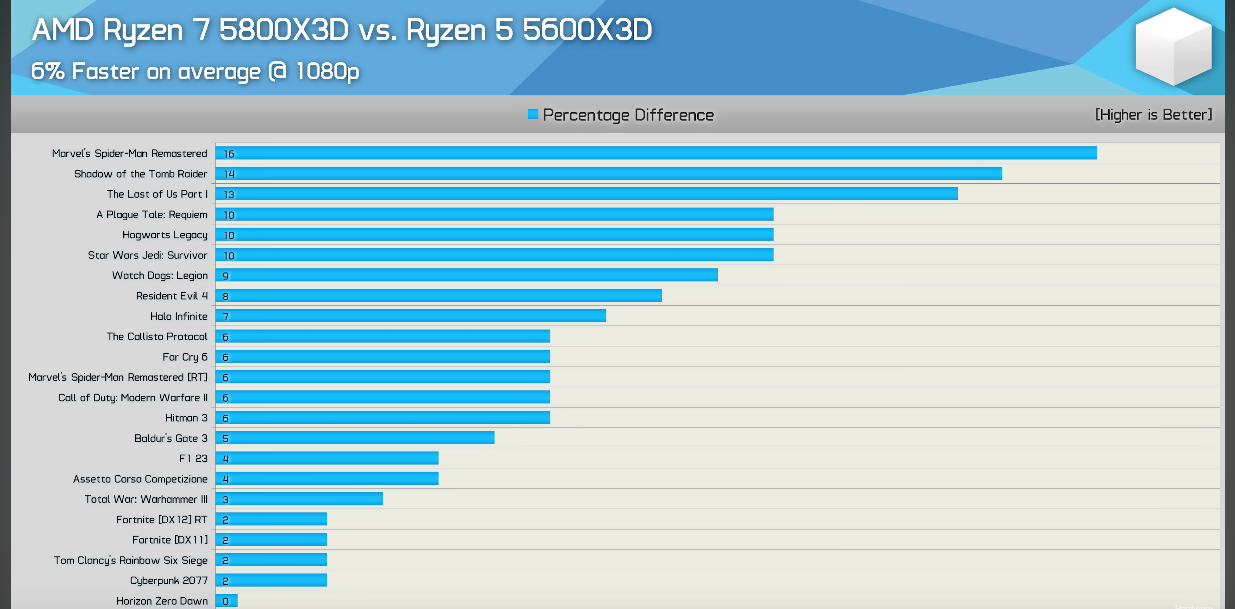

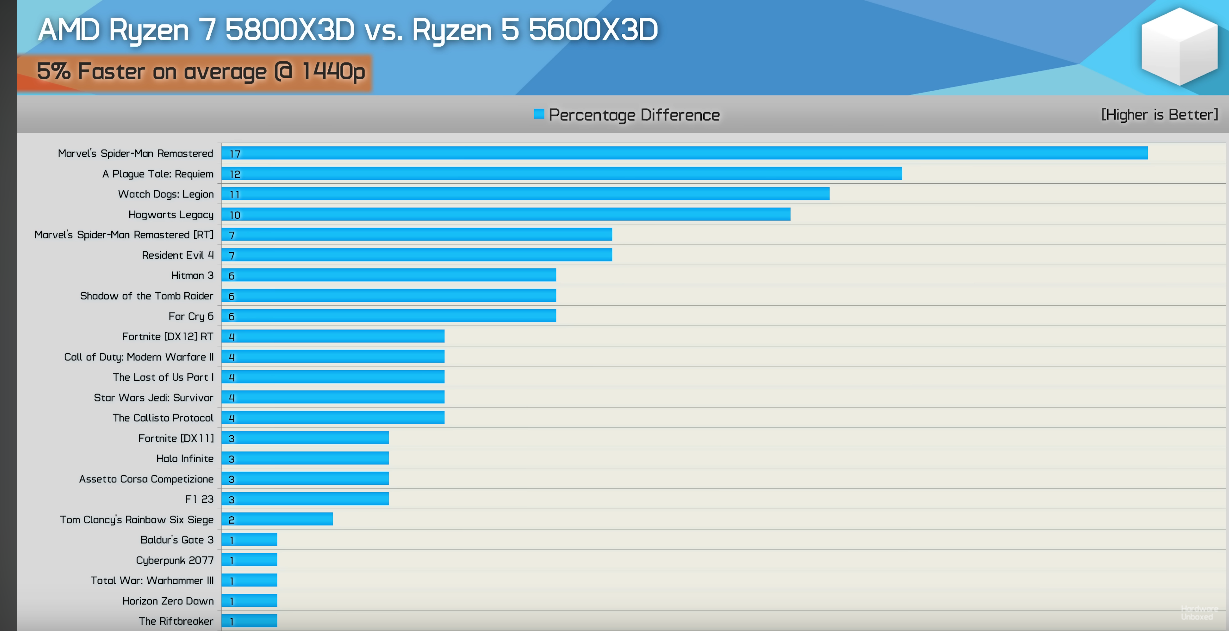

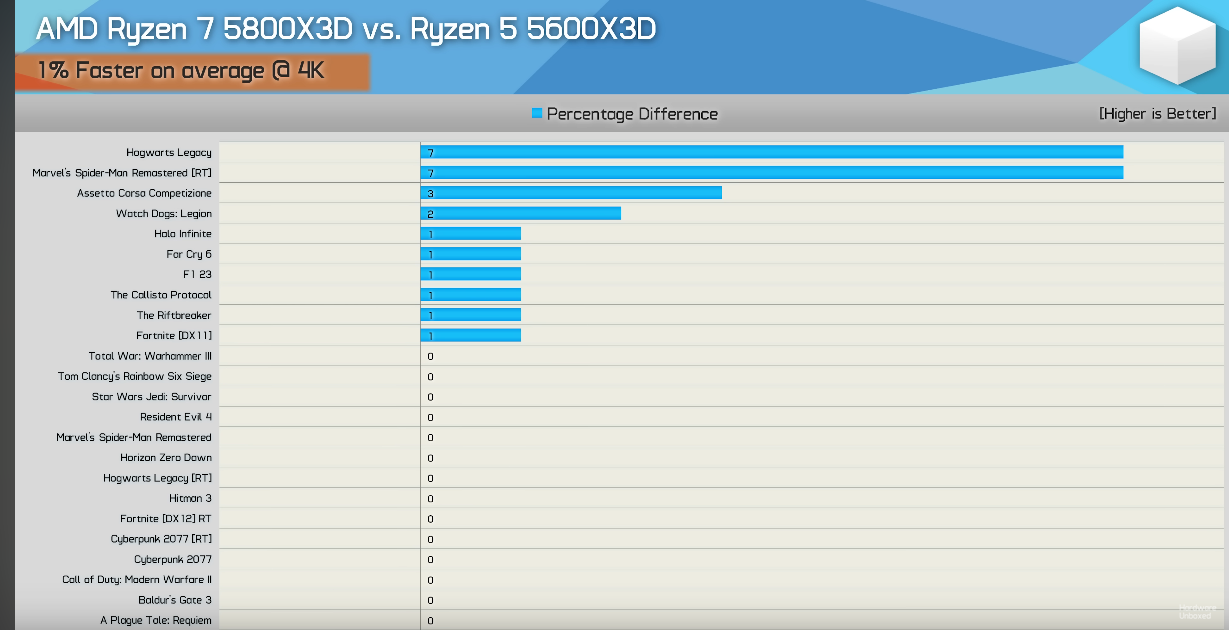

I'd still wait. The 5800X3D is the only AM4 CPU worth buying at this point. 6-core CPUs should only be in budget builds, and the 5600X bundle at MC that was just $189 last week is the way to go. You can slap that combo in almost any older case, and if you have something built in the last 8 years, you can reuse the DDR4 for it. It also comes with a decent heatsink, so you don't have to purchase one.
