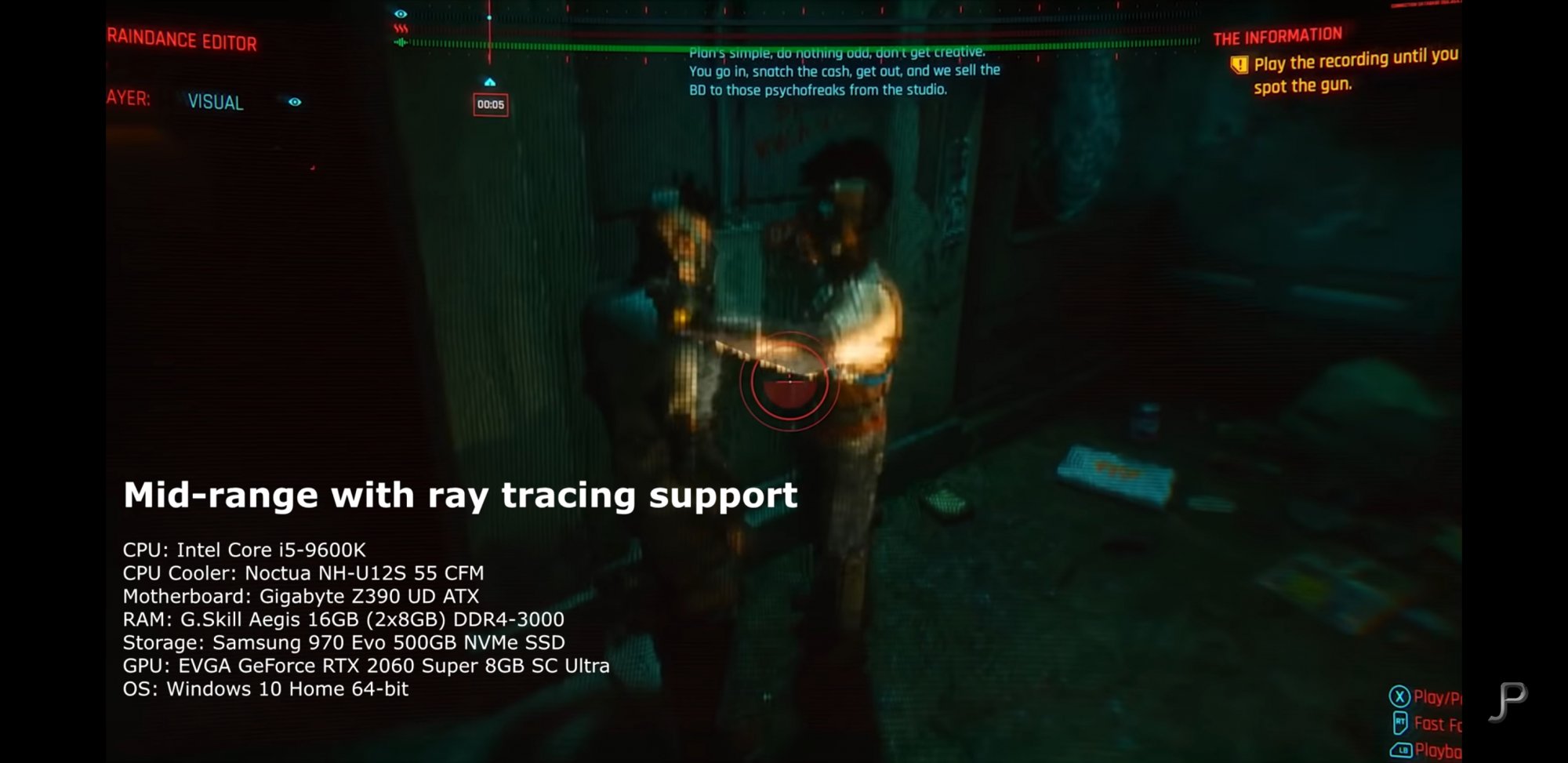

The 5700 XT was never intended to compete in the high end... Big Navi is supposed to be the first AMD GPU to do that since the ATI days...

Never said it was. It's about extrapolating what the technology in the 5700 XT, and more recently RDNA2 in the XBSX, can do, and leaving AMD little room to breathe.
