It's going to be a beast even in a cut-down consumer version: https://devblogs.nvidia.com/nvidia-ampere-architecture-in-depth/

There will be no consumer version of GA100. It doesn't even have RT cores. That is a pure data-center part.

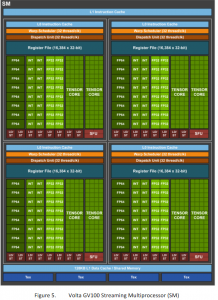

Yes, it doesn't have RT cores, but you can still get an idea of how the SM will look, since both will have the tensor cores and FP32/INT32 units, and the transistor count for that die size is gigantic.

Is FP64 needed at all in consumer gaming parts? I bet consumer Ampere will have FP64 replaced with FP32 and RT cores tacked on, but will otherwise look very similar to this. Still, 826 mm^2 with 54.2B transistors vs. the Tesla T4 at 545 mm^2 and 13.6B transistors is a gigantic leap. That metric alone is enough to figure out that Ampere is going to be a significant jump from Turing.
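For anyone who wants to put a number on that comparison, here is a rough sketch that turns the die figures quoted above into transistor density. The die names in the dictionary (GA100 for the A100, TU104 for the Tesla T4) and the helper function are my own labels for illustration; the areas and transistor counts are the ones from the post.

```python
# Die figures as quoted in the post above:
# area in mm^2, transistor count in billions.
dies = {
    "GA100 (A100)": (826, 54.2),
    "TU104 (Tesla T4)": (545, 13.6),
}


def density_mtr_per_mm2(area_mm2, transistors_billion):
    """Return transistor density in millions of transistors per mm^2."""
    return transistors_billion * 1000 / area_mm2


densities = {name: density_mtr_per_mm2(*spec) for name, spec in dies.items()}
for name, value in densities.items():
    print(f"{name}: {value:.1f} MTr/mm^2")

# How much denser the big Ampere die is than the Turing die.
ratio = densities["GA100 (A100)"] / densities["TU104 (Tesla T4)"]
print(f"Density ratio: {ratio:.2f}x")
```

That works out to roughly 2.6x the density. Keep in mind that density reflects the process node at least as much as the architecture, so on its own it says little about gaming performance.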

FP64 isn't needed in games, no. How did Volta compare to Turing? Was there any similarity between the two? Trying to remember, because if NVIDIA is differentiating architectures between product lines, then the forthcoming GeForce products might not even be based on or called Ampere.

Ampere will eventually replace Nvidia’s Turing and Volta chips with a single platform that streamlines Nvidia's GPU lineup, Huang said in a pre-briefing with media members Wednesday.

..

Nvidia did not release any information Wednesday about consumer GPUs using Ampere, but when asked by a reporter in the briefing about the difference between enterprise and consumer approaches to Ampere, Huang said “there’s great overlap in the architecture, but not in the configuration.”

So definitely different than Turing. The interesting thing is Marketwatch has Jensen saying the following:

So they share a name this time. I don't think we are seeing much that will be in the gaming cards. Same Tensor cores?

A100 has four Tensor Cores per SM, which together deliver 1024 dense FP16/FP32 FMA operations per clock, a 2x increase in computation horsepower per SM compared to Volta and Turing.
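A quick sanity check of the 2x claim in that quote, as a sketch. The quote itself only gives four Tensor Cores per SM and 1024 FMA per clock for A100; the per-core figures (256 FMA per clock for A100, and eight cores at 64 FMA per clock each for Volta and Turing) are my recollection of NVIDIA's published numbers, so treat them as assumptions.

```python
# Per-SM Tensor Core throughput in dense FP16/FP32 FMA operations per clock.
# Per-core figures are assumptions based on NVIDIA's published numbers;
# only the A100 total (4 cores, 1024 FMA/clk) appears in the quote above.
configs = {
    "Volta/Turing": {"tensor_cores_per_sm": 8, "fma_per_core_per_clk": 64},
    "A100": {"tensor_cores_per_sm": 4, "fma_per_core_per_clk": 256},
}


def fma_per_sm_per_clk(cfg):
    """Total FMA operations one SM can issue per clock across its Tensor Cores."""
    return cfg["tensor_cores_per_sm"] * cfg["fma_per_core_per_clk"]


per_sm = {name: fma_per_sm_per_clk(cfg) for name, cfg in configs.items()}
for name, value in per_sm.items():
    print(f"{name}: {value} FMA/clk per SM")

speedup = per_sm["A100"] / per_sm["Volta/Turing"]
print(f"A100 vs Volta/Turing per SM: {speedup:.1f}x")
```

So A100 has fewer but much wider Tensor Cores per SM, which is where the per-SM doubling comes from. Whether the gaming parts keep the same Tensor Core configuration is not something the quote tells us.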

Think about AMD's ray tracing video then watch the one from nVidia running on Turing at 9:50.

Ampere is going to absolutely crush AMD.

If they gut the fp64 and replace it with fp32 and also tack on RT then it will technically be the same architecture but a different "configuration". I have a feeling NVIDIA is going to push DLSS 3.0 very hard this generation given how much die space tensor cores are taking up and the 2x performance improvement they claim in their whitepaper. They'll probably emphasize it as much as RT if not more.

Is there any info on DLSS 3.0 and how it may differ from 2.0? Or is it the same as DLSS 2.0 but on steroids (for those that have more tensor cores / an Ampere GPU)?

DLSS 3.0 "info" is just BS clickbait.

Hope the 3060 is one of the first cards announced and not just the 70/80

This is almost never the case. They always start at the top and work their way down. It makes for a more orderly clearance of old stock.

Do you think NVDA may start with the 3080 Ti, or do you see the 3080 Ti as a 2021 product? To me, starting with the 3080 Ti would make logical sense, as it is a very low-volume product and can generate halo / excitement for the lower-end tiers while NVDA ramps up for mass production.

Something makes me think there might not be a 3080ti, I'm not sure what it was I read.

It was along the lines of there is likely to be a Titan then 3080 down, to try and keep the pricing up.

Most likely rubbish but it made me uneasy.

You could be correct. They could do Titan first, then mass production for the lower-end tiers, then the 3080 Ti sometime in 2021, which would really suck for all of us stuck on a 1080 Ti and waiting for a worthy successor. I do not think I will go for a Titan, as the price / performance ratio for those (in gaming) is beyond ridiculous.

GTX 1000 Launched with Titan instead of 1080ti.

RTX 2000 Launched with 2080ti instead of Titan, but it was priced like Pascal Titan.

So really, launching with a 3080ti or a Titan is more of a naming/marketing decision than a pricing difference. Though calling it a 2080ti instead of a Titan did make a lot of people completely lose it.

To me the naming is irrelevant. They will launch with their biggest-die Ampere gaming part (GA102?), and it will be expensive (> $1000) regardless of whether they call it Titan or 3080Ti.

A very aggressive Nvidia is good for us. AMD can compete on price vs. performance. Lisa Su's previous goal was an overall 45% profit margin, which they were able to reach.

I really like what Nvidia is doing with HPC; I don't think anyone will be close to them. Nvidia could be the next Apple. With their incredible AI performance, I can see some major potential shifts coming, especially if Nvidia creates an AI-driven OS that works with their hardware.

Ampere is looking to be the breakaway product. Looking forward to their gaming cards and the surprises coming with more AI-driven software.

I am pretty sure you meant 45% gross margin, which is healthy. nVidia is at 60% gross margin / 20% profit iirc.

Low overhead businesses (not tech) can run around 30-40% gross and be healthy.

It's the same thing. They calculate gross margins as a way to track profit % made and that helps with efficiency for a company like nVidia or AMD. It doesn't count rent, administrative fees etc but strictly speaking if their gross margin is 60% then that's the profit % nVidia makes which is really good.

He said 45% which refers to gross margin (less used gross profit). The profit margin is what actually shows the company is profitable.... you can be at 40% gross margin (gross profit) and still lose money (negative profit margin) as a company.

I only point it out because people get riled up over nVidia but they are 20% profit margin (which is still high but not like old Pharma high).
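To make the gross margin vs. profit margin point concrete, here is a minimal sketch. The company and all its figures are hypothetical, and the model is deliberately simplified (it lumps everything below gross profit into one operating-expenses number and ignores taxes, interest, and one-off items).

```python
def margins(revenue, cost_of_goods_sold, operating_expenses):
    """Return (gross_margin, profit_margin) as fractions of revenue.

    Simplified model: profit = revenue - COGS - operating expenses.
    Taxes, interest, and one-off items are ignored.
    """
    gross_profit = revenue - cost_of_goods_sold
    profit = gross_profit - operating_expenses
    return gross_profit / revenue, profit / revenue


# Hypothetical company: 100 in revenue, 60 to build the product,
# 45 in R&D, rent, admin, and so on.
gross, net = margins(revenue=100.0, cost_of_goods_sold=60.0,
                     operating_expenses=45.0)
print(f"Gross margin:  {gross:.0%}")
print(f"Profit margin: {net:.0%}")
```

With those made-up numbers the company shows a 40% gross margin and still loses money, which is why the two figures can't be used interchangeably.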

Do you all think the 3080ti will be vastly superior to AMD's big Navi card? Since it's rumored to be at 862 mm^2 vs 502 mm^2, I think Nvidia has a big advantage here.

Rumoured, raining salt, tasting like salt. Most of us do not know one way or another. The other factor is price per performance for what one will use it for. We just have to wait for the dust to clear to decide which is better for one's own purposes. Both could be superior in some aspect or feature, or one could be so worth the cost that even if the other is somewhat better, it is not worth it. If Nvidia can capitalize more on their AI lead, with useful functions that many would want, like DLSS, being easy to implement and use, then the added cost may well be worth it. I believe Nvidia is at a breakaway point, a clear breakaway where no competitor may even be in the rearview mirror, so to speak. Won't know until the stuff hits the ground.

Do you mind elaborating how nvidia would be at a breakaway point from your point of view? Yes they have an AI lead but does nvidia have other up and coming technologies that would push their lead performance wise in the gaming market?

The giant Ampere is the data-center part, not the gaming part. We really have no idea how big the gaming Ampere part will be.

They might release it as a normal PCIe card, which you could then use for gaming.

Price would be at least $3000 so not really cheap but it can and imho should happen.

It doesn't even contain RT cores, so no real chance of that.

Too bad. It now seems a lot less probable they will do it.