If it makes your fps drop too much, then yes, it could.

Sure, but that isn't specific to RT. Lots of ways to address fps drops, including faster hardware.

You mean some baseless rumors you like. It's just a regurgitation of the rumor brought up many posts ago.

You compare the product stack regardless of the price.

I think this is an important component of the discussion. Can you really compare the 1080 Ti to the 2080 Ti when the 2080 (non-Ti) launched at a higher price than the 1080 Ti?

2013 - 780 Ti launches at $700

2015 - 980 Ti launches at $650

2017 - 1080 Ti launches at $700

2018 - 2080 Ti launches at "$1000" but sells for $1200-1300
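To put numbers on the jumps in that list, here is a small Python sketch that computes the generation-over-generation change in launch price. The prices are exactly the ones listed above; using the "$1000" list price for the 2080 Ti, and separately checking the $1200-1300 street range against the 1080 Ti, is the only choice made here.

```python
# Launch prices (USD) as listed above; the 2080 Ti entry uses its "$1000" list price.
LAUNCHES = [
    ("780 Ti", 2013, 700),
    ("980 Ti", 2015, 650),
    ("1080 Ti", 2017, 700),
    ("2080 Ti", 2018, 1000),
]


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Compare each card with the one before it.
for (prev_name, _, prev_price), (name, year, price) in zip(LAUNCHES, LAUNCHES[1:]):
    print(f"{year} {name}: ${price} ({pct_change(prev_price, price):+.1f}% vs {prev_name})")

# The 2080 Ti street-price range quoted above, compared with the 1080 Ti launch price.
for street in (1200, 1300):
    print(f"2080 Ti at ${street}: {pct_change(700, street):+.1f}% vs 1080 Ti launch")
```

The earlier generations move by single digits (-7.1%, +7.7%), while the 2080 Ti is about +43% at list price and roughly +71% to +86% at street prices.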

Ray tracing is more than reflections.

Well I guess you could, but then you will die in the game. But you will have pretty stuff to look at while on the ground bleeding...

It's possible. The standard was released end of June last year. If Ampere is releasing end of the year, then the standard could have been around long enough that it could be incorporated in new video cards by the time production had to ramp up. I wouldn't hold my breath, though. I would expect HDMI 2.1, however.

Well, this thread got interesting at the end.

Anyone heard whether it's going to have DisplayPort 2?

If one wants 144 Hz 4K HDR at 4:4:4 RGB, then DP2 will be needed. DP2 has significantly more bandwidth: ~80 Gbps compared to 48 Gbps for HDMI 2.1.

144 Hz would only push bandwidth up by 20%, to around 36 Gbps. With the overhead that would not fit into 40 Gbps, but it shouldn't have issues with 48 Gbps, which has a data rate of about 42.7 Gbps. We were talking about the LG CX, though, which is only a 10-bit 120 Hz panel.
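The 20%/36 Gbps/42.7 Gbps figures can be reproduced with a few lines of Python. This is a rough sketch: it counts visible pixels only and ignores blanking intervals (an assumption, so real timings need somewhat more), and it applies the 16b/18b line coding that HDMI 2.1's FRL links use to get the usable rate of the 40 and 48 Gbps links.

```python
def active_video_gbps(width: int, height: int, refresh_hz: float,
                      bits_per_component: int, samples_per_pixel: float = 3.0) -> float:
    """Uncompressed data rate of the visible pixels only, in Gbit/s.

    samples_per_pixel is 3.0 for 4:4:4 RGB. Blanking intervals are ignored (assumption),
    so real display timings need somewhat more than this.
    """
    return width * height * refresh_hz * bits_per_component * samples_per_pixel / 1e9


def frl_payload_gbps(link_gbps: float) -> float:
    """Usable rate of an HDMI 2.1 FRL link after its 16b/18b line coding."""
    return link_gbps * 16 / 18


for hz in (120, 144):
    need = active_video_gbps(3840, 2160, hz, 10)
    for link in (40, 48):
        usable = frl_payload_gbps(link)
        print(f"4K {hz} Hz 10-bit 4:4:4 needs {need:.1f} Gbps; "
              f"{link} Gbps link carries {usable:.1f} Gbps; fits: {need <= usable}")
```

Under these assumptions 120 Hz needs about 29.9 Gbps and fits either link, while 144 Hz needs about 35.8 Gbps: just over the ~35.6 Gbps a 40 Gbps link carries, but well under the ~42.7 Gbps of a 48 Gbps link.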

Display Stream Compression (DSC) for HDMI 2.1 should be able to give 144 Hz at 4K without issue. Some say it is lossless, but that is not the case; it is supposed to be visually lossless. Once compressed, the data cannot be reconstructed exactly when decompressed, so it is not a lossless compression model. Visually, though, if most people cannot tell the difference, that is probably not an issue except for the few who can. In any case, it should be better than 4:2:2 or 4:2:0.
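Chroma subsampling and DSC both cut bandwidth, but differently: 4:2:2 and 4:2:0 throw away chroma resolution outright, while DSC keeps 4:4:4 and compresses it to a lower bits-per-pixel budget. The Python sketch below compares the resulting rates for 4K at 144 Hz, visible pixels only. The 12 bpp DSC target is an assumed illustrative value, not a figure from this thread, and a lower rate on its own says nothing about which option looks better.

```python
# Bits per pixel for 10-bit video at different chroma subsampling levels.
BITS_PER_COMPONENT = 10
FORMATS_BPP = {
    "4:4:4": 3.0 * BITS_PER_COMPONENT,  # full-resolution chroma
    "4:2:2": 2.0 * BITS_PER_COMPONENT,  # chroma halved horizontally
    "4:2:0": 1.5 * BITS_PER_COMPONENT,  # chroma halved horizontally and vertically
}
# Hypothetical DSC target for illustration; real targets depend on the mode and the sink.
DSC_TARGET_BPP = 12.0

PIXELS_PER_SECOND = 3840 * 2160 * 144  # 4K at 144 Hz, visible pixels only

for name, bpp in FORMATS_BPP.items():
    print(f"{name}: {bpp:.0f} bpp -> {PIXELS_PER_SECOND * bpp / 1e9:.1f} Gbps")

dsc_ratio = FORMATS_BPP["4:4:4"] / DSC_TARGET_BPP
dsc_gbps = PIXELS_PER_SECOND * DSC_TARGET_BPP / 1e9
print(f"4:4:4 with DSC at {DSC_TARGET_BPP:.0f} bpp: {dsc_ratio:.1f}:1 -> {dsc_gbps:.1f} Gbps")
```

With that assumed target, DSC at 2.5:1 lands below even 4:2:0 in bandwidth while still carrying full chroma resolution.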

Really hope both will be available, because I usually keep my graphics cards 3-5 years. Pretty sure Nvidia will have HDMI 2.1 due to what they are doing with LG on VRR over HDMI with the current generation; not sure about DP2. The next-gen consoles, both built on AMD hardware, have HDMI 2.1, so I would expect the same with RDNA2 cards. As for DP2, I would expect AMD to support it; as for Nvidia? I doubt it. Plus, Nvidia will need to make a new G-Sync module to support DP2 for the added bandwidth, unless they stick to HDMI 2.1 for it using DSC.

Ultimately, NVidia isn't forced to deliver on anyone's expectations, and the conditions on the cost of silicon have changed drastically over past releases. Those poor releases where NVidia did little are also instructive. Often those were choices not to chase significant improvements.

If you spend 80% of a frame time doing raster work and 20% doing RT work, you don't shift your GPU drastically in favor of reducing the 20%.
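The reasoning here is Amdahl's law: speeding up only the RT share of the frame has a hard ceiling on the whole-frame gain. A minimal Python sketch, assuming the 80/20 raster/RT split used in the example (the split itself is the poster's figure, not a measurement):

```python
def overall_speedup(rt_fraction: float, rt_speedup: float) -> float:
    """Amdahl's law: whole-frame speedup when only the RT share of frame time gets faster."""
    return 1.0 / ((1.0 - rt_fraction) + rt_fraction / rt_speedup)


# 80% raster / 20% RT split, as in the example above.
for s in (1.5, 2.0, 4.0, float("inf")):
    print(f"RT work {s}x faster -> whole frame {overall_speedup(0.2, s):.3f}x faster")
```

Doubling RT throughput only buys about 11% on the whole frame, and even infinitely fast RT tops out at 1.25x, which is why the raster side still dominates the design trade-offs.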

But it should be an interesting release cycle with new AMD and NVidia parts dropping nearly together.

Here are the correct data rates and what you will need for the different resolutions, chroma sampling, frequencies, etc.

I meant to ask earlier in the thread, but where are you getting the 80/20 figures from? You have mentioned it a few times.

I wondered about DisplayPort just because NVidia doesn't really treat HDMI as a first-class citizen, and monitors are already limited by DP 1.4, while HDMI 2.1 doesn't really add much headroom.

It would also seem odd to me to go for a port switch for one generation, with the knock-on effects on monitors that have a 2-year time to market; plus, multiport is always a bit crap.

Guess we'll find out this week (as we can infer it from the GA100); it was just an idle thought. Cable BS has been annoying me.

You don't understand the topic.

NVidia were first to introduce HDMI 2.0 and will probably be first to introduce HDMI 2.1.

HDMI 2.0 is 18 Gbps, HDMI 2.1 is 48 Gbps: 2.7x faster.

UHD displays are HDMI 2.0 or HDMI 2.1, not HDMI 1.4.

Nvidia has implemented HDMI VRR before AMD.

My point about HDMI being second class is the G-Sync support, which took years and is an ongoing issue for hardware modules.

Not confirmed of course but this guy has been right on leaks before.

3080 Ti 50% faster than 2080 Ti. Boost clocks of 2.5 GHz possible.

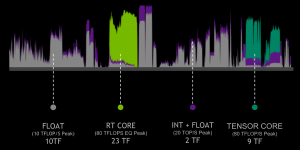

Frame time diagrams that show RT cores idle 80-90% of the time.

Can you link me to some?

While I do think rasterized performance has an effect on ray-traced performance, I don't believe it's anything like as bad a bottleneck as you make it out to be. If it really were as bad as you say, then a 2060 would have a much greater fall-off in ray tracing compared to the 2080 Ti. But in games out there at the moment, ray tracing performance scales pretty much with the number of Gigarays a card has.

You do realize that games are hybrid of Raster and RT effects, and will be for the foreseeable future.

Or are you mixing up what you are trying to say, and what you actually mean is that in ray-traced games 80% is rasterised and 20% ray traced?

What I am saying is that in modern ray-traced games, RT core functional-unit usage is only 10%-20% of frame time. Not sure where the mix-up you are talking about comes into play.

The source was that frame time diagram included in this thread, which I had seen before this thread. There is a similar one for Control, with multiple RT effects (more RT effects than any other modern game), where it comes in at around 20% usage.

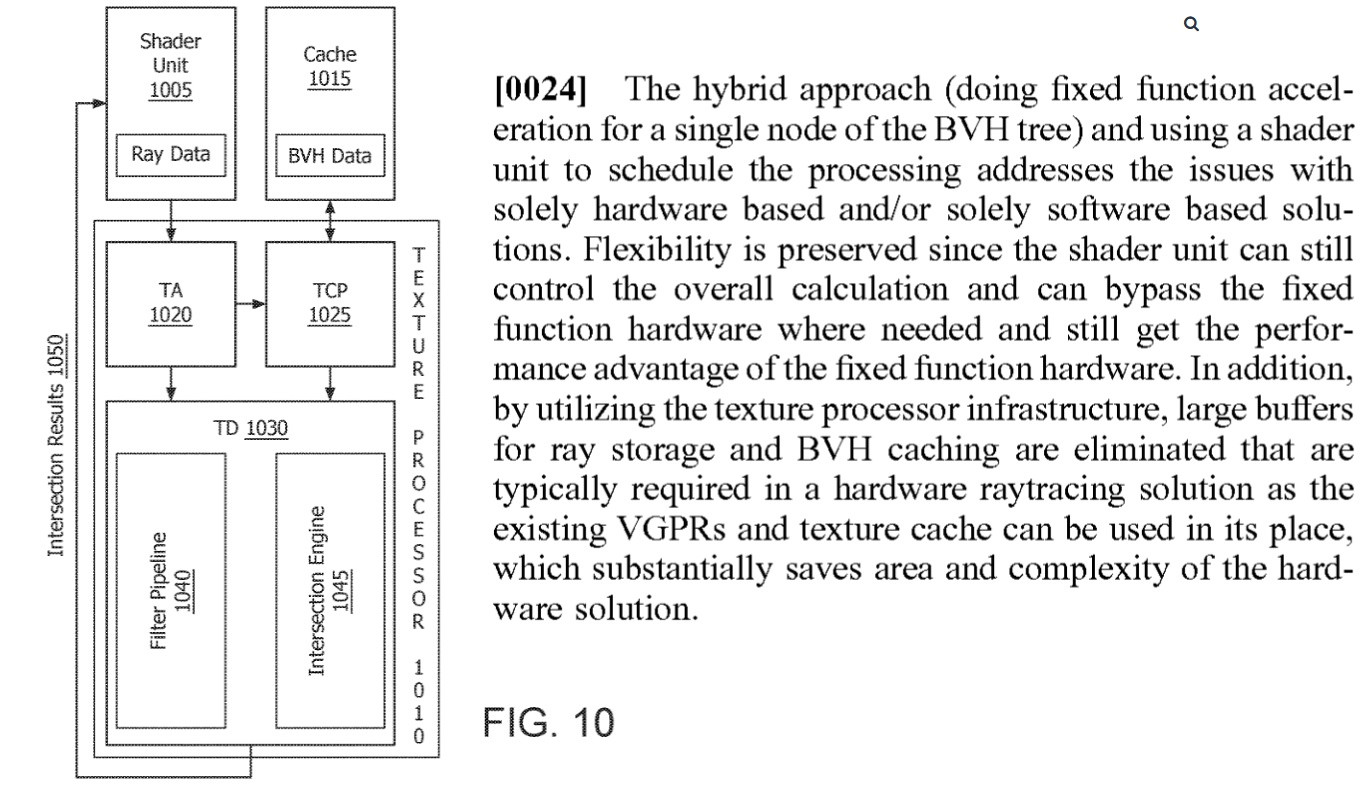

The potential issue with AMD's approach is that you now have to program when and what to partition: whether a shader core is doing intersection testing and BVH traversal, or doing rasterization. Since we are going to be stuck with the rasterization pipeline for a long time yet, it seems that you are potentially slowing down the entire rendering pipeline once you introduce any amount of ray tracing. By contrast, NVIDIA gets it done and out of the way, so the slower rasterization process can work on the full data set at once, while AMD is just spreading it out over a longer period. I admit I have not looked fully at the white papers for both; I am just working off my own experience in render code.

This is what I see happening, which is most likely not 100% correct:

Nvidia:

- Geometry setup: for RT it is not culled, or only lightly culled, if doing reflections. So most of the geometry has to be set up -> this takes longer due to transferring more data, and takes more memory

- Once the geometry is set up, the RT cores find the intersection points; the higher the quality or the more rays cast, the longer this takes

- During this processing period, virtually no other rendering is taking place for that frame other than finding intersection points, which basically stalls the start of shading. So the percentage will vary depending on how many rays, the complexity of the geometry, etc.

- Once the intersection points are found, that data has to be transferred to the shaders, which is another time delay and probably needs two caches or buffers (extra die space for the RT core buffer data and the shader buffer)

- Data in shaders for rasterization

AMD: (If the patent method is actually used for RDNA2)

- Does the intersection testing inside the texture block using the same cache, which eliminates a second transfer of data: less duplication, saving die space that can be used for something else, and less interruption

- Block diagram (image not preserved)

- Appears the AMD method will allow less stalling due to parallel operations inside the shader unit

- More versatile; for example, software controls how rays from objects can be ignored so they are not wasted, or do not bounce light (if a ray hits a given object -> ignored, and no more calculations/time spent)
  - So if just reflections are being used, any non-reflective object would not need rays calculated
  - Maybe why AMD's cruddy demo was all reflections
  - This also implies direct optimization or programming for the hardware is needed

- Rays can be more concentrated to increase quality as needed; if many objects are flagged not to calculate further rays, then more rays can be sent for objects that do have bounce:
  - Reflections
  - Color bleed
  - Caustics
  - Occlusion
  - Shadows

AMD's more hybrid approach versus Nvidia's more fixed-function approach suggests programmers will have to adapt or optimize for each. Is this why we are seeing fewer RTX games, with very few being announced and some dropped over time?
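To make the intersection-testing and "ignore further bounces" points concrete, here is a toy Python sketch. It brute-forces ray-sphere tests (no BVH, no model of either vendor's hardware) and uses a made-up per-object `spawns_bounce` flag to stand in for the software control described in the AMD list; it is not AMD's or NVIDIA's actual API, only an illustration of how flagging objects cuts the number of intersection tests a path costs.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    center: tuple
    radius: float
    # Hypothetical per-object flag: when False, a hit ends the path and no bounce ray is traced.
    spawns_bounce: bool


def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]


def ray_sphere_t(origin, direction, sphere):
    """Nearest hit distance t > 0 along a unit-length direction, or None if the ray misses."""
    oc = [o - c for o, c in zip(origin, sphere.center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # small epsilon so a bounce ray does not re-hit its own surface
            return t
    return None


def trace(origin, direction, scene, max_bounces=3):
    """Follow one ray path and return how many ray-object intersection tests it cost."""
    tests = 0
    for _ in range(max_bounces + 1):
        hit, hit_t = None, math.inf
        for obj in scene:  # brute force: every object tested, no BVH
            tests += 1
            t = ray_sphere_t(origin, direction, obj)
            if t is not None and t < hit_t:
                hit, hit_t = obj, t
        if hit is None or not hit.spawns_bounce:
            break  # ray escaped, or hit an object flagged "no further bounces"
        point = [o + hit_t * d for o, d in zip(origin, direction)]
        normal = normalize([p - c for p, c in zip(point, hit.center)])
        dn = sum(d * n for d, n in zip(direction, normal))
        direction = [d - 2 * dn * n for d, n in zip(direction, normal)]  # mirror reflection
        origin = point
    return tests


matte = [Sphere((0.0, 0.0, 5.0), 1.0, spawns_bounce=False)]
mirror = [Sphere((0.0, 0.0, 5.0), 1.0, spawns_bounce=True)]
print("matte:", trace((0.0, 0.0, 0.0), [0.0, 0.0, 1.0], matte))
print("mirror:", trace((0.0, 0.0, 0.0), [0.0, 0.0, 1.0], mirror))
```

Here a ray hitting the flagged-off sphere costs one round of tests, while the mirror costs a second round for its bounce ray; in a real scene, those skipped rounds are the budget that could be spent on extra rays elsewhere.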

https://www.digitaltrends.com/computing/games-support-nvidia-ray-tracing/

The RT war is maybe over the horizon.

Really hard to say... Since it's processed in line with the geometry, it doesn't have to do a bunch of geometry processing, send data to the RT cores, then pass data again to render. We don't know the implementation details, so it's hard to say. Guess we'll get the answer sometime near the end of this year or early next year, though.

A note on this observation: if you cull the geometry too much, you lose reflections from objects that are either outside of the viewport's angle of view or occluded by closer objects. Like Crytek's software raytracing demo, this may produce weird results that detract from the user experience, which is the opposite of raytracing's goal.

Yes, that is correct. Since the objects that a reflection can see can be outside view space, virtually all the objects/geometry have to be set up for the scene (one reason why raytracing needs more memory when used). For reflections the objects need to be there, but all the extra ray bounces don't have to be calculated for some of the objects.

I'll assume that there's some point where it makes sense to cull, because culling less than in a raster-only rendering pipeline will obviously have performance impacts as you're pointing out.
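One way to picture that trade-off is a toy 2D Python sketch: raster work keeps only objects inside the camera's view cone, while a hypothetical RT policy keeps everything within a distance radius, so an object behind the camera can still show up in reflections and only very distant geometry is culled. The distance cutoff is an assumed policy for illustration, not how any particular engine or driver decides what goes into the RT scene.

```python
import math
from dataclasses import dataclass


@dataclass
class Obj:
    name: str
    x: float
    y: float


def in_view_cone(obj, cam, facing_deg, half_fov_deg):
    """Raster-style test (2D stand-in for a frustum): is obj inside the camera's view cone?"""
    angle = math.degrees(math.atan2(obj.y - cam[1], obj.x - cam[0]))
    delta = (angle - facing_deg + 180) % 360 - 180  # signed angle difference in [-180, 180)
    return abs(delta) <= half_fov_deg


def in_rt_set(obj, cam, max_dist):
    """Hypothetical RT policy: keep anything within max_dist, visible or not."""
    return math.hypot(obj.x - cam[0], obj.y - cam[1]) <= max_dist


scene = [Obj("ahead", 10, 0), Obj("behind", -10, 0), Obj("far_behind", -500, 0)]
cam = (0.0, 0.0)
raster_set = [o.name for o in scene if in_view_cone(o, cam, facing_deg=0, half_fov_deg=45)]
rt_set = [o.name for o in scene if in_rt_set(o, cam, max_dist=100)]
print("raster:", raster_set)
print("ray tracing:", rt_set)
```

The RT set is larger than the raster set (the "behind" object survives), which is where the extra setup work and memory come from, while the far-away object is still culled from both.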

As far as rumors go, 2080 Ti + 15% performance... price? It will all depend on NVIDIA's pricing for the next gen, but I think they are in for a surprise.

15% for what? 3070? I hope you don't think 3080 Ti will only be 15% faster. Anyone who thinks it's anything less than 40% is a complete moron. I can get >10% with a mild overclock.

The 2080 Ti "only" has 21% more cores than the 1080 Ti, and it is up to 40% faster at 4K resolution. The increase in transistors is probably a better indicator of possible performance increase. If GA102 has double the transistors, we could be looking at huge gains. It really depends on whether NVIDIA sticks with large dies for consumer products and how those transistors are split between SM and RT cores.

This latest rumor has 3080 Ti with about 17% more cores, which is probably where the low numbers are coming from.
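A rough Python sketch of why core counts alone undersell the uplift: split Turing's 4K gain into a core-count part and a per-core part, then apply the rumored 17% core increase. The 3584 and 4352 CUDA core counts are the published figures for the 1080 Ti and 2080 Ti; carrying Turing's per-core gain over to Ampere is purely an assumption, so the result is a range, not a prediction.

```python
# CUDA core counts: GTX 1080 Ti = 3584, RTX 2080 Ti = 4352.
core_ratio = 4352 / 3584  # about 1.21, the "21% more cores"
turing_uplift = 1.40  # "up to 40% faster at 4K" (best case)
per_core_gain = turing_uplift / core_ratio

# Rumored 3080 Ti: about 17% more cores than the 2080 Ti.
rumor_cores = 1.17
cores_only = rumor_cores  # no per-core improvement at all
same_per_core_gain = rumor_cores * per_core_gain  # assumes a Turing-like per-core gain repeats

print(f"2080 Ti vs 1080 Ti: {core_ratio:.3f}x cores, {per_core_gain:.3f}x per core")
print(f"3080 Ti estimate: {cores_only:.2f}x (cores only) to {same_per_core_gain:.2f}x")
```

That brackets the rumor at roughly +17% (cores only) to +35% (if the per-core gain repeats), which is why 15% looks low and 30%+ is not unreasonable.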

I agree that 15% is too low, but that doesn't mean someone that thinks 30% is a possibility is a moron.

If it's 30% plus a price drop, that would be great.

If it's a 50% gain but a $1500 price, that would suck.

Price/performance is what I will have my eye on.
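Comparing those two scenarios by performance per dollar is simple arithmetic. In this Python sketch the baseline is the low end of the $1200-1300 street price quoted for the 2080 Ti earlier in the thread, and the $1000 price for the "+30% with a price drop" case is a hypothetical number picked for illustration.

```python
def perf_per_dollar_change(perf_gain: float, new_price: float, old_price: float) -> float:
    """Relative change in performance per dollar versus the old card."""
    return (1 + perf_gain) * old_price / new_price - 1


OLD_PRICE = 1200  # 2080 Ti street price, low end of the range quoted earlier

scenarios = {
    "+50% at $1500": (0.50, 1500),
    "+30% at $1000 (hypothetical price drop)": (0.30, 1000),
}
for label, (gain, price) in scenarios.items():
    print(f"{label}: {perf_per_dollar_change(gain, price, OLD_PRICE):+.0%} perf/$")
```

Even the "$1500" case still improves perf/$ by about 20% over a $1200 2080 Ti, but a smaller gain with a price cut comes out far ahead (about +56%).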

Current info from what looks like a PR briefing before the GTC videos shows the Ampere GA100 pro chip with 54 billion transistors!

Either the die is of unprecedented size (>1000 mm²), or NVidia has somehow managed to get significantly better transistor density than AMD from 7nm. If it's the latter, NVidia may have a significant transistor surplus, which may benefit gaming GPUs.

https://hardforum.com/threads/nvidi...ion-out-of-the-water-a100-big-ampere.1996616/

I never said you did. I was replying to the very first line of your reply and provided my own take, agreeing with you that just going by number of cores is a poor measure of performance variance.

Sure, but did the rumor in question talk about transistor count? Plus, I never said I expected 15%; in fact I said that was too low, and my expectations are 30%+.

Do we have the rest of the specs on the DGX A100 yet? Just noting that it is $200k compared to the DGX-1 at $150k with the same number of GPUs. I know that the rest of the hardware in the cluster is going to add to the cost as well, which is why I'm curious.

Anandtech is now reporting an 826 mm² die size for GA100 and 54B transistors.

This is potentially a game changer (for transistor density and cost/transistor).

TSMC 7nm was reported to be delivering very flat cost/transistor. That really puts a damper on getting much better perf/dollar. This was the basis of my skepticism.

But that was at AMD's practical density of around 41 million transistors/mm².

Now we are looking at a practical 65 million transistors/mm².

That drastically improves transistor economics. Essentially it's like a bonus 50%+ increase in transistor budget/$.
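The density numbers check out with a few lines of Python, using the reported 54B transistors on 826 mm² and the ~41 million/mm² AMD figure above. Treating 7nm cost per mm² as the same for both (an assumption, not something reported) is what turns the density ratio into a transistors-per-dollar ratio.

```python
GA100_TRANSISTORS = 54e9
GA100_DIE_MM2 = 826
AMD_7NM_DENSITY = 41e6  # "practical AMD density" quoted above, transistors per mm^2

ga100_density = GA100_TRANSISTORS / GA100_DIE_MM2
density_ratio = ga100_density / AMD_7NM_DENSITY
die_at_amd_density = GA100_TRANSISTORS / AMD_7NM_DENSITY

print(f"GA100: {ga100_density / 1e6:.1f}M transistors/mm^2")
print(f"vs AMD 7nm: {density_ratio:.2f}x")
print(f"54B transistors at AMD density would need ~{die_at_amd_density:.0f} mm^2")
# If cost per mm^2 of 7nm silicon is the same for both (assumption),
# transistors per dollar scale by the same density_ratio.
```

About 65.4M/mm² is roughly 1.59x AMD's figure (the "50%+" bonus), and the same 54B transistors at AMD's density would need around 1317 mm², consistent with the earlier ">1000 mm²" guess.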

So now there is a fairly easy path to 50% boost in perf/dollar on the GPU die. Though you will likely need to spend more on memory to back it up, and there is some extra profit taking to be expected.

I just got more optimistic about 3000 series gains in perf/dollar.
