pututu


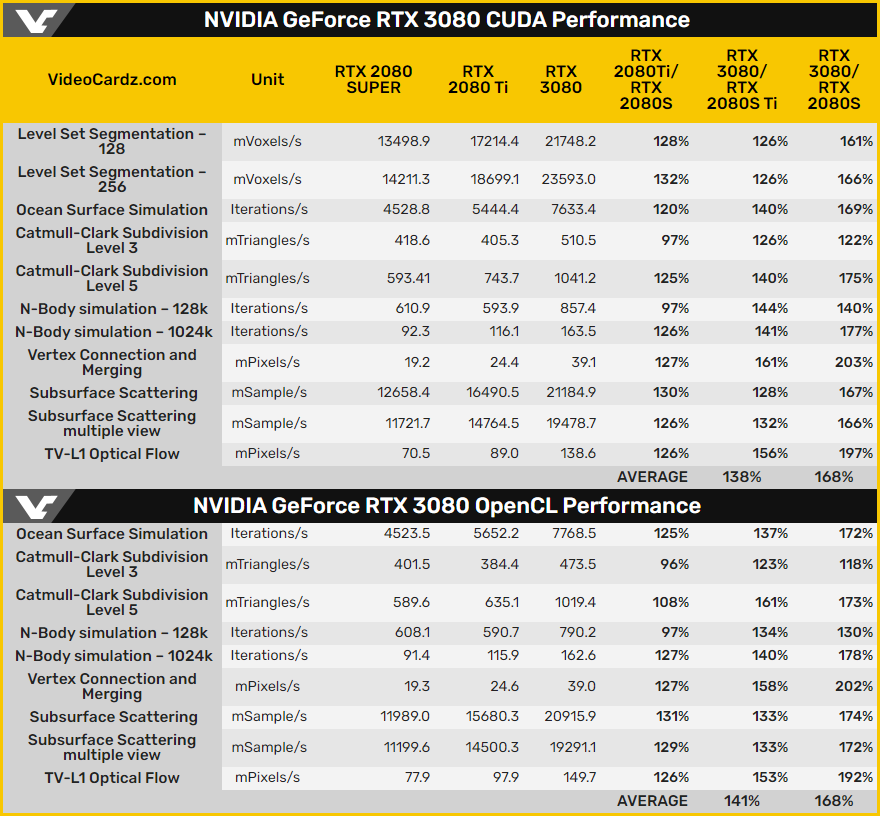

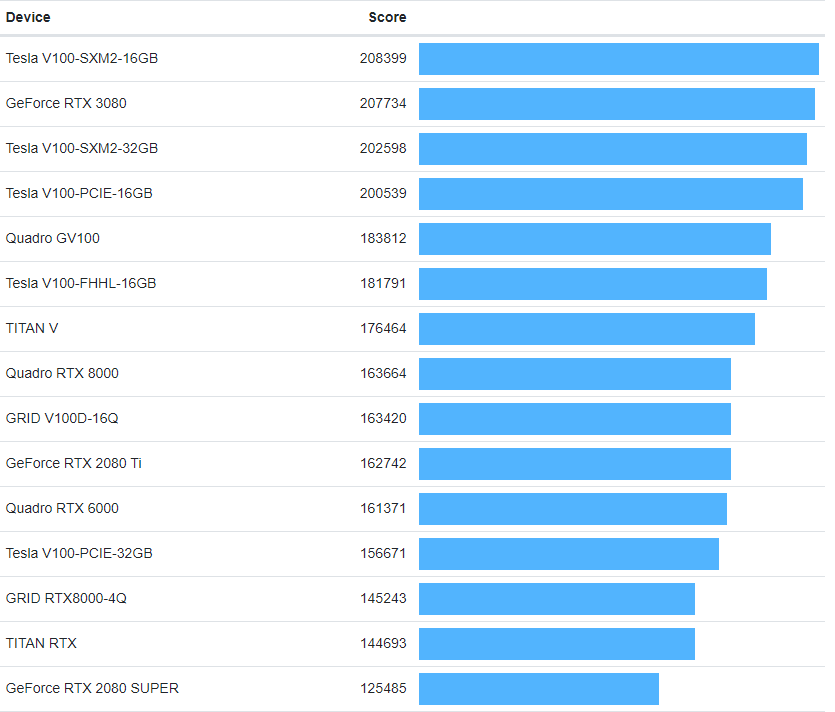

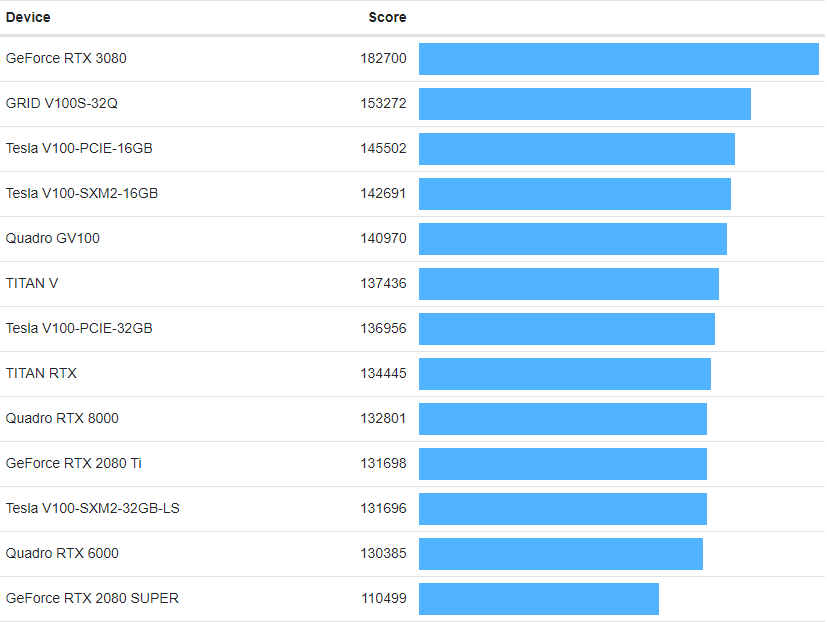

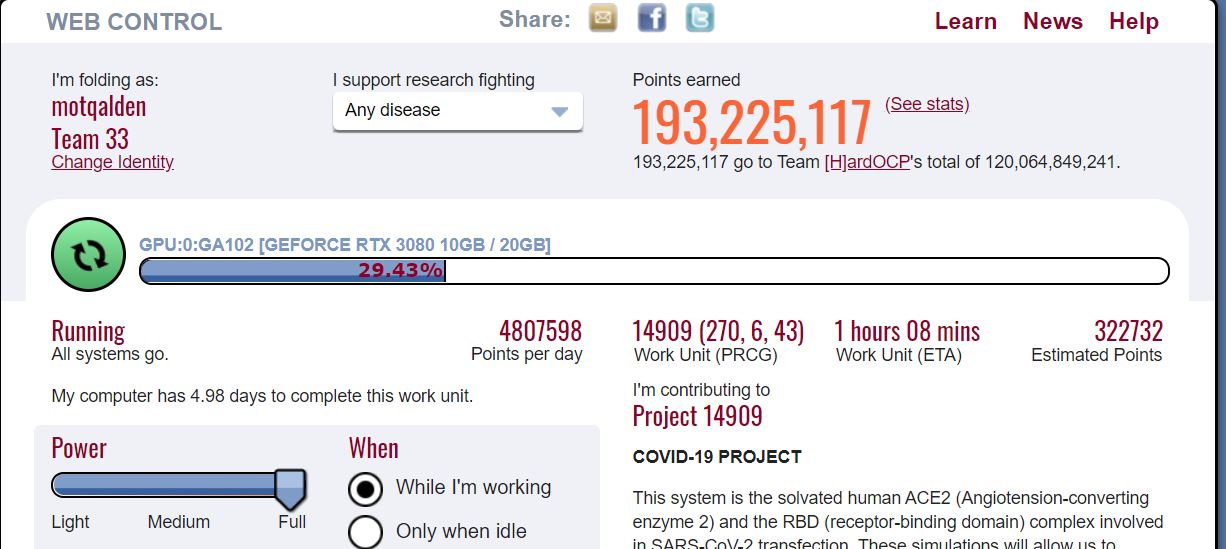

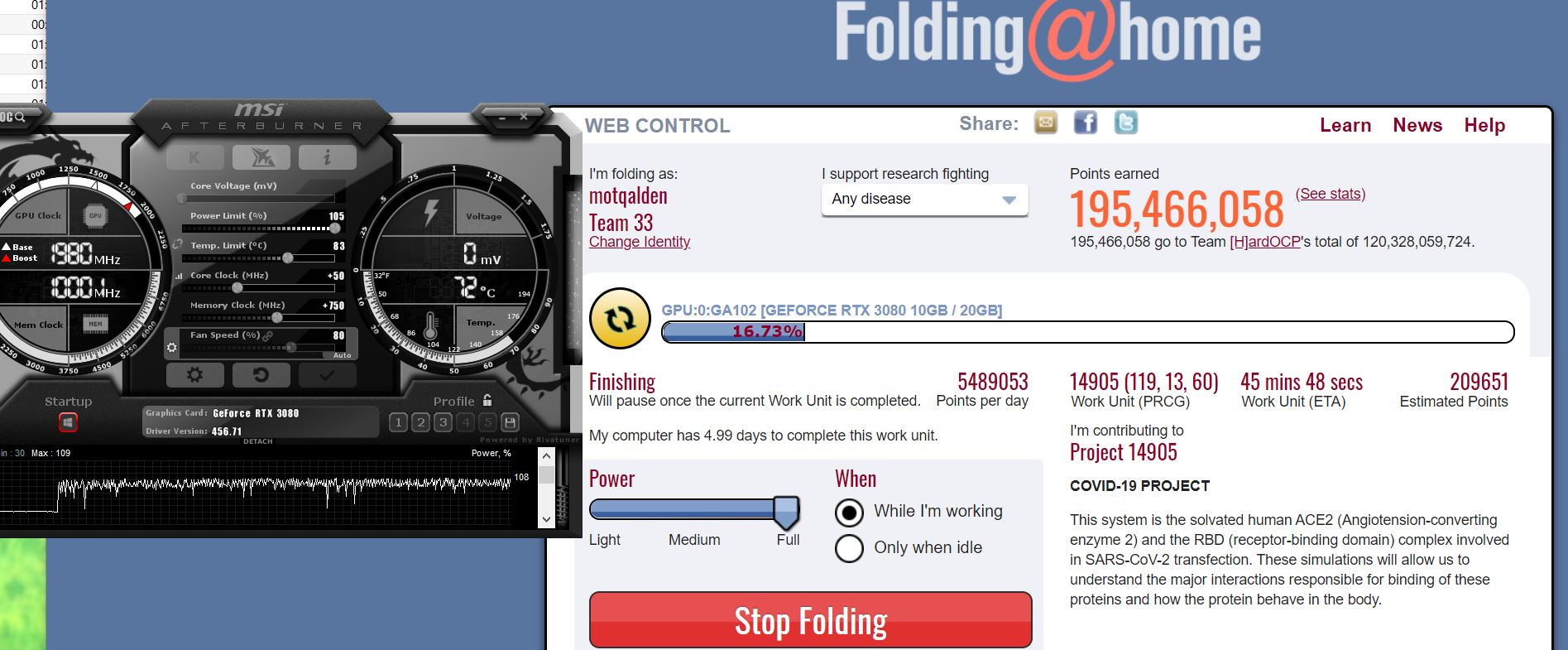

I just watched the "live" presentation by Jensen. Here is a quick summary of the new RTX 3000 series cards. Nothing on distributed computing, not even a mention of Folding@home, for which Nvidia is one of the corporate sponsors/donors. I'm guessing the new generation will still translate into better crunching, particularly in power efficiency.

Here is the pre-recorded official presentation by Nvidia and the webpage that summarizes the performance.

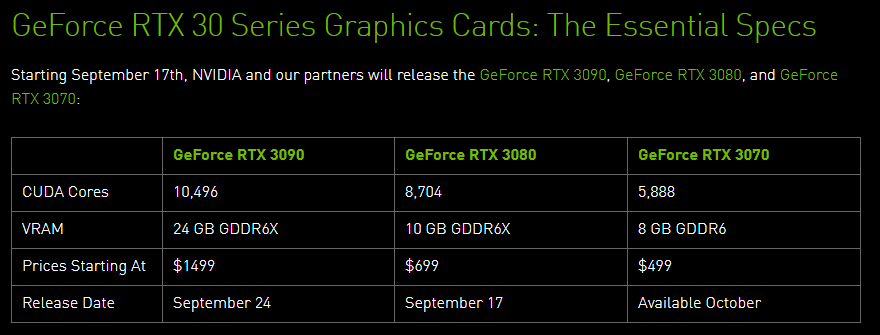

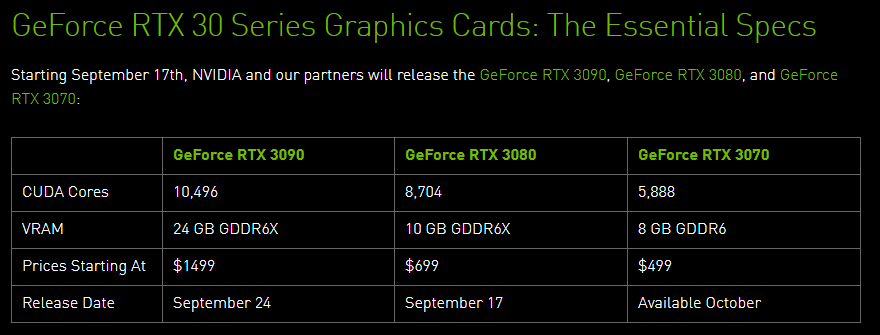

Card summary:

RTX 3080, Sept 17, $699

RTX 3070, sometime in Oct, $499. According to Jensen, this card is comparable in performance to the RTX 2080 Ti.

RTX 3090, Sept 24, $1499

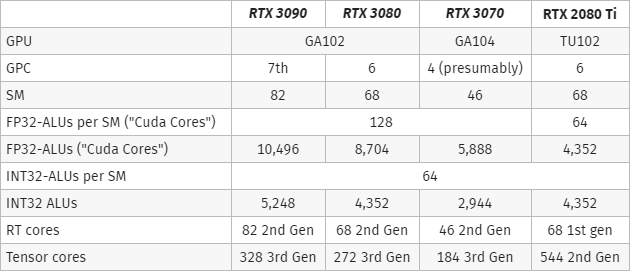

I'm guessing that the 3070 and 3080 will be great cards for distributed computing. The 3070, with 5,888 CUDA cores for $499, seems very compelling!
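A quick way to put "compelling" into numbers is CUDA cores per dollar at launch price. This is only a rough sketch: the prices and the 3070 core count come from the summary above, while the 3080 (8,704) and 3090 (10,496) core counts are assumed from Nvidia's announced spec sheets. Raw core count is a crude proxy; actual DC throughput depends on the project, memory, clocks, and power draw.

```python
# Rough value comparison: CUDA cores per US dollar at announced launch price.
# Prices and the RTX 3070 core count come from the post; the RTX 3080 and
# RTX 3090 core counts are assumed from Nvidia's announced specs.
CARDS = {
    "RTX 3070": {"price_usd": 499, "cuda_cores": 5888},
    "RTX 3080": {"price_usd": 699, "cuda_cores": 8704},    # assumed spec figure
    "RTX 3090": {"price_usd": 1499, "cuda_cores": 10496},  # assumed spec figure
}


def cores_per_dollar(cards):
    """Return {card name: CUDA cores per USD}, rounded to 2 decimals."""
    return {
        name: round(spec["cuda_cores"] / spec["price_usd"], 2)
        for name, spec in cards.items()
    }


if __name__ == "__main__":
    # Print cards from best to worst cores-per-dollar.
    ranked = sorted(cores_per_dollar(CARDS).items(), key=lambda kv: kv[1], reverse=True)
    for name, value in ranked:
        print(f"{name}: {value:.2f} cores per $")
```

Running it ranks the RTX 3080 (about 12.45 cores per $) slightly ahead of the RTX 3070 (about 11.80), with the RTX 3090 well behind (about 7.00), which fits the hunch that the 3070 and 3080 are the value picks.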
