erek ([H]F Junkie; joined Dec 19, 2005; 10,904 messages)

> Not sure why folks are excited about the 512-bit memory bus, my 390X had it in 2015. The Fury X had 1 Tb/s HBM and it was a failure at 4K.

I'm pretty sure the 4090 is bandwidth starved; there were some tests that seemed to indicate so. This thing is going to absolutely fly.

> Not sure why folks are excited about the 512-bit memory bus, my 390X had it in 2015. The Fury X had 1 Tb/s HBM and it was a failure at 4K.

Well, what if the 4090 is only 384-bit?

> I don't buy that the 5090 is going to be a 512-bit card whatsoever and if it is, I'd expect $2500+. That's just not Nvidia's MO with consumer grade graphics. The last time they released anything north of a 384-bit bus on GeForce products (for non-HBM cards) was all the way back in 2008-2009 with the 200-series, which had 448-bit and 512-bit cards.
>
> Also what's with everyone in here saying 384-bit was too low for the 4090? Are you the same people that thought 192-bit and 128-bit were perfectly fine for the 70 and 60 cards this past gen?

I don't think it's unreasonable to think that GDDR7 is all of the speedup it needs in terms of memory bandwidth, but we'll see. I could totally see them selling the card for $2499 and it selling out TBH. I won't be buying one but it'll at least be an interesting piece of tech.

> I could totally see them selling the card for $2499 and it selling out TBH

If memory bandwidth continues to be a very important element of those ML compute processes, a 512-bit GDDR7 5090... if the environment is in any way similar to now, it's hard to imagine how it would not.

> If memory bandwidth continues to be a very important element of those ML compute processes, a 512-bit GDDR7 5090... if the environment is in any way similar to now, it's hard to imagine how it would not.

Yeah, there's no world in which people will only use them for gaming; small ML companies will buy these in droves. You're better off as a consumer waiting for the actual consumer grade cards at this point.

> I don't buy that the 5090 is going to be a 512-bit card whatsoever and if it is, I'd expect $2500+. That's just not Nvidia's MO with consumer grade graphics. The last time they released anything north of a 384-bit bus on GeForce products (for non-HBM cards) was all the way back in 2008-2009 with the 200-series, which had 448-bit and 512-bit cards.
>
> Also what's with everyone in here saying 384-bit was too low for the 4090? Are you the same people that thought 192-bit and 128-bit were perfectly fine for the 70 and 60 cards this past gen?

$2500 for a company like Nvidia? That's like a down payment at this point. Especially when you consider that the 4090 was their best selling card. Also what's wrong with having 512-bit? More is always better.

> what's wrong with having 512-bit? More is always better.

It costs more in every way, obviously. More always does.

> the 4090 was their best selling card.

Source? One would have guessed an entry-level laptop part.

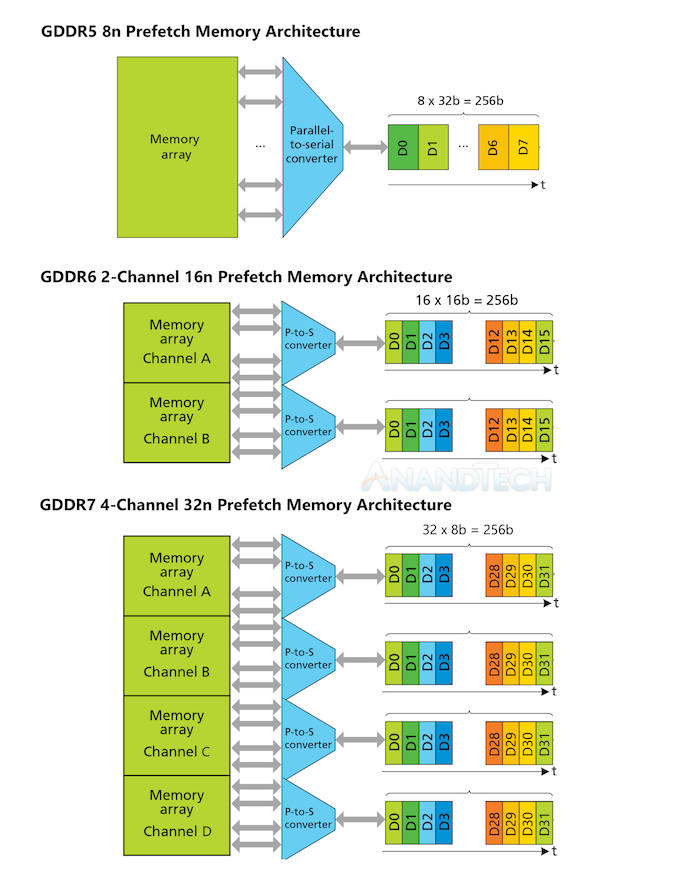

> I don't buy that the 5090 is going to be a 512-bit card whatsoever and if it is, I'd expect $2500+. That's just not Nvidia's MO with consumer grade graphics. The last time they released anything north of a 384-bit bus on GeForce products (for non-HBM cards) was all the way back in 2008-2009 with the 200-series, which had 448-bit and 512-bit cards.
>
> Also what's with everyone in here saying 384-bit was too low for the 4090? Are you the same people that thought 192-bit and 128-bit were perfectly fine for the 70 and 60 cards this past gen?

The bandwidth per pin of GDDR7 is double that of GDDR6X. The bus width doesn't need to be north of 384-bit for a significant increase in bandwidth. They're still on 16Gb memory chips for GDDR7, meaning 24GB cards are still going to be 384-bit. The only way you'll see 512-bit is if they make a 32GB card, or use 8Gb memory chips on 16GB cards.
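As a rough sketch of the arithmetic behind this argument: peak memory bandwidth is bus width times per-pin data rate, divided by 8 to convert bits to bytes. The 21 Gbps figure is the commonly cited GDDR6X rate on the 4090. The doubled rate is purely an assumption that takes the "double per pin" claim above at face value; it is not a confirmed GDDR7 or 5090 spec.

```python
def bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s (one data pin per bus bit, 8 bits per byte)."""
    return bus_width_bits * gbps_per_pin / 8


GDDR6X_GBPS = 21.0                     # commonly cited per-pin rate on the RTX 4090
ASSUMED_GDDR7_GBPS = 2 * GDDR6X_GBPS   # assumption: "double per pin", not a real spec

rtx_4090 = bandwidth_gb_s(384, GDDR6X_GBPS)
same_bus_doubled = bandwidth_gb_s(384, ASSUMED_GDDR7_GBPS)
wider_bus_doubled = bandwidth_gb_s(512, ASSUMED_GDDR7_GBPS)

print(f"384-bit @ {GDDR6X_GBPS} Gbps: {rtx_4090:.0f} GB/s")
print(f"384-bit @ {ASSUMED_GDDR7_GBPS} Gbps: {same_bus_doubled:.0f} GB/s")
print(f"512-bit @ {ASSUMED_GDDR7_GBPS} Gbps: {wider_bus_doubled:.0f} GB/s")
```

Under that assumption, keeping the bus at 384-bit already doubles bandwidth, and going to 512-bit adds another third on top.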

> 4090 is BY FAR the most expensive card I've owned, real crazy.
>
> but, anything less than a 4090 for me at this point is peasant territory.
>
> like, if you're not playing Cyberpunk 2077 @4K ultra 70-90fps are you even playing it? (kidding but you get my point)
>
> so anyways, for 30-40% more performance I'd totally consider upgrading to a 5090 (well not for $2500, don't give Nvidia ideas)

The 4090 is effectively cheaper for most people than the 3090 was. A big part of the 3090 life cycle was during the tariff; only EVGA was below 2k during the tariff period. I paid $2200 for my Asus Strix. My MSI Suprim Liquid X was only $1750. I'm not even factoring in inflation. I would need the 5090 to be 60%+ faster than a 4090 to upgrade again. The 4090 is such a good card, for most games I'm near or above 4k120 with DLSS.

> The 4090 is effectively cheaper for most people than the 3090 was.

Depends on their electricity price and the average NiceHash return too. I imagine that for many, Ampere cards ended up among the cheapest GPUs ever, even when bought at ridiculously high price points.

> I would need the 5090 to be 60%+ faster than a 4090 to upgrade again. The 4090 is such a good card, for most games I'm near or above 4k120 with DLSS.

Yeah, but like us 3090 users, you are still thinking that your card will be properly supported, like I did when I bought mine. Nvidia will screw you over somehow, just like they did us. What, give you the new features like path tracing and DLSS 3? No, why would we let you have that when you didn't buy a new 40x0 card? Why? Obviously because it's going to be slower on your year-old card. It doesn't matter if it can run, just slower; we can't have you benchmarking it, showing that it isn't too unplayable, and tanking our 40x0 card sales. So none of these new features for you.

> new features like path tracing

That's not a new feature. If you mean ray reconstruction, which makes low-resolution RT denoising better and faster, that's available on an old RTX 2060.

> Not sure why folks are excited about the 512-bit memory bus, my 390X had it in 2015. The Fury X had 1 Tb/s HBM and it was a failure at 4K.

Radeon HD 2900 series had it too

> The 4090 is effectively cheaper for most people than the 3090 was. A big part of the 3090 life cycle was during the tariff; only EVGA was below 2k during the tariff period. I paid $2200 for my Asus Strix. My MSI Suprim Liquid X was only $1750. I'm not even factoring in inflation. I would need the 5090 to be 60%+ faster than a 4090 to upgrade again. The 4090 is such a good card, for most games I'm near or above 4k120 with DLSS.

But what if you could have a steady 120 with DLAA instead?

> It costs more in every way, obviously. More always does.

The card will likely cost way too much, just like the 4090. How much do you think it costs Nvidia to make a 4090? The manufacturing cost is likely under $300.

> Source? One would have guessed an entry-level laptop part.

I'm going by the number of times it's sold out. The price of the 4090 has been creeping up, which means Nvidia doesn't have a problem moving product. We're talking about a $1,600 RTX 4090 that now sells for over $2k. The RTX 5090 won't be cheap. A lot of the 4090s are probably not used for gaming either. It's not like the 4060, where Nvidia has to drop the price just to get people interested.

> The card will likely cost way too much, just like the 4090. How much do you think it costs Nvidia to make a 4090? The manufacturing cost is likely under $300.

The die or a complete card? Complete-card rumours were around $800-900, I think, with the fancy cooling solution. There can be a hit on the margin, but if the competition needs to make a 512-bit GDDR7 GPU to have a chance to compete... without an established market to sell the very top of them really high, and a market to sell the missed dies still at a good price... prices will stay high, because that competition will not exist.

> I'm going by the number of times it's sold out.

That does not tell you how many are bought, how many are in store, or how many are made. It does not tell you anything at all really, only that stock is 0 in store and they have no problem selling what is made.

> We're talking about a $1,600 RTX 4090 that now sells for over $2k.

Yes, but the L40s using the same chips were selling for what, $10k? That's a big incentive not to let any die good enough for them end up in a mere $2000 4090, as long as the L40s were selling.

> The card will likely cost way too much, just like the 4090. How much do you think it costs Nvidia to make a 4090? The manufacturing cost is likely under $300.

A 300mm wafer has a surface area of about 70,000 sq mm. Given the 4090 die is 609 mm², the absolute best Nvidia can get, assuming 100% yield, is 114 chips from that wafer. Given the current cost from TSMC is ~$7,000 for the wafer and another $20k to process it, Nvidia is looking at $236 on just the chip. Then there are the packaging and validation costs on that chip before we even get to the rest of the board and its components.
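The per-die estimate above can be reproduced with a few lines. This is only a sketch using the post's own inputs (a rounded 70,000 mm² wafer area, a 609 mm² die, $7,000 + $20,000 per wafer); the `yield_fraction` parameter is a hypothetical knob added for illustration, and the calculation ignores edge loss and scribe lines just as the post does.

```python
WAFER_AREA_MM2 = 70_000          # post's rounded figure for a 300 mm wafer
DIE_AREA_MM2 = 609               # post's figure for the 4090 die
WAFER_COST_USD = 7_000 + 20_000  # post's wafer cost plus processing cost


def max_dies(wafer_area_mm2: float, die_area_mm2: float) -> int:
    """Upper bound on dies per wafer by area alone (no edge loss, no scribe lines)."""
    return int(wafer_area_mm2 // die_area_mm2)


def cost_per_good_die(wafer_cost_usd: float, dies: int, yield_fraction: float = 1.0) -> float:
    """Wafer cost spread over the dies that actually work."""
    return wafer_cost_usd / (dies * yield_fraction)


dies = max_dies(WAFER_AREA_MM2, DIE_AREA_MM2)
best_case = cost_per_good_die(WAFER_COST_USD, dies)

print(f"dies per wafer (area bound): {dies}")
print(f"cost per die at 100% yield: ${best_case:.2f}")
```

With these inputs the area bound is 114 dies and the best case is roughly $236.84 per die, which the post truncates to $236. Any `yield_fraction` below 1.0 only pushes that number up.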

> A 300mm wafer has a surface area of about 70,000 sq mm. Given the 4090 die is 609 mm², the absolute best Nvidia can get, assuming 100% yield, is 114 chips from that wafer. Given the current cost from TSMC is ~$7,000 for the wafer and another $20k to process it, Nvidia is looking at $236 on just the chip. Then there are the packaging and validation costs on that chip before we even get to the rest of the board and its components.
>
> $300 doesn’t even get a finished chip out the door, let alone the remainder of the board components.

Yeah, 4090s are an incredibly good deal. You're looking at $500 for a yielded, tested, and packaged chip plus margins, then $100 of VRAM, plus PMICs, cooler, board, testing, packaging, and distribution on the AIB side... AIB margins are not so good at $1500.

> Radeon HD 2900 series had it too
>
> R600 w/ 512-bit Memory interface in 2007, bro

Ah yes, the 512-bit token ring bus, and AMD's first attempt at a high-end GPU after they purchased ATI.

> Ah yes, the 512-bit token ring bus, and AMD's first attempt at a high-end GPU after they purchased ATI.
>
> Things did not get better until the next generation.

Unfortunate waste of the 512-bit memory interface with that broken AA resolve

> Radeon HD 2900 series had it too
>
> R600 w/ 512-bit Memory interface in 2007, bro

The R600 used sixteen 2Gb memory chips to reach 4GB total. Each GDDR channel is 32 bits wide, so 16 * 32 = 512 bits. Bus width isn't some magical arbitrary number that some people seem to think it is. The total bus width is simply a function of how many memory chips are being used. It's why the 4060 and 4060 Ti are "only" 128-bit (4x 16Gb chips * 32 bits per chip = 128 bits).
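The relationship described here (bus width follows from chip count, capacity follows from chip count and density) is simple enough to sketch. The 32 bits per chip comes from the post; the function names are made up for illustration, and the sketch ignores clamshell layouts, where two chips share one 32-bit channel.

```python
BITS_PER_CHIP = 32  # per the post: each GDDR chip sits on a 32-bit channel


def bus_width_bits(num_chips: int) -> int:
    """Total bus width when every chip gets its own 32-bit channel (no clamshell)."""
    return num_chips * BITS_PER_CHIP


def capacity_gb(num_chips: int, chip_density_gbit: int) -> float:
    """Total VRAM in GB from chip count and per-chip density in gigabits."""
    return num_chips * chip_density_gbit / 8


# (chips, density in Gbit) pairs mentioned in the thread
configs = {
    "4x 16Gb": (4, 16),
    "12x 16Gb": (12, 16),
    "16x 16Gb": (16, 16),
    "16x 8Gb": (16, 8),
}

for name, (chips, density) in configs.items():
    print(f"{name}: {bus_width_bits(chips)}-bit, {capacity_gb(chips, density):.0f} GB")
```

Four 16Gb chips give 128-bit and 8 GB, twelve give 384-bit and 24 GB, and a 512-bit bus needs sixteen chips, which means 32 GB at 16Gb per chip or 16 GB at 8Gb per chip.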

> A 300mm wafer has a surface area of about 70,000 sq mm. Given the 4090 die is 609 mm², the absolute best Nvidia can get, assuming 100% yield, is 114 chips from that wafer. Given the current cost from TSMC is ~$7,000 for the wafer and another $20k to process it, Nvidia is looking at $236 on just the chip. Then there are the packaging and validation costs on that chip before we even get to the rest of the board and its components.
>
> $300 doesn’t even get a finished chip out the door, let alone the remainder of the board components.

Don't forget that R&D is factored into the final price as well. Bill of materials is typically the smallest piece of the actual cost of the product to the manufacturer, which too many people seem to not understand.

> The fact that TSMC 3 seems to be a miss for Nvidia-TSMC for the new generation (and it shows: where there was no actual new architecture-software change, performance per mm and per watt does not seem superb) has been quite massed down...
>
> Could we end up seeing the same for the gaming side....

TSMC N3 is a miss for a lot of things. Logic-heavy chips get extraordinarily expensive there, with few if any performance gains and a higher than average (for TSMC) failure rate.

> The R600 used sixteen 2Gb memory chips to reach 4GB total.

Are you sure about that?

4870 & 4850 launched in '08, had 2GB. None with 4 though, except maybe the dual radeons, by technicality.

> Are you sure about that?
>
> No GPU in 2007 had 4GB of VRAM, and the HD 2900 (R600) normally had 512MB on it.
>
> In fact, one of the earliest AMD Radeon GPUs that I remember even having 4GB of VRAM was the Fury X, and that was in 2015.

Even 3GB in 2011+ seemed rare

> Don't forget that R&D is factored into the final price as well. Bill of materials is typically the smallest piece of the actual cost of the product to the manufacturer, which too many people seem to not understand.

Yeah, but I don’t have numbers for the R&D budgets associated with that silicon, and if I can show that TSMC alone physically costs more than $300, then that is sufficient.

> Are you sure about that?
>
> No GPU in 2007 had 4GB of VRAM, and the HD 2900 (R600) normally had 512MB on it.
>
> In fact, one of the earliest AMD Radeon GPUs that I remember even having 4GB of VRAM was the Fury X, and that was in 2015.

Yeah, I was thinking about Hawaii XT when I typed that. The 2900 XT still had sixteen memory chips. 256 Mb GDDR3, in that case.

> Even 3GB in 2011+ seemed rare
>
> 780 Ti I think had 3GB in 2013

There was a 3GB version of the GTX 580. The next wasn't until the 3GB GTX 780 and GTX 780 Ti in 2013.