Read the article again, please.

He clearly said it was around 155W for gaming, no? 80W from the slot and 75W from the 6-pin connector? No?

At stock clocks.
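The 155W figure is just the slot share plus the 6-pin share. Here is a quick sketch of that tally. It assumes the commonly cited PCIe limits of 75W for an x16 slot and 75W for a 6-pin connector (150W board total). The per-source numbers are the ones quoted in the question above, not new measurements.

```python
# Per-source power limits in watts, assumed from the commonly cited PCIe figures:
# an x16 slot may supply 75 W and a 6-pin PCIe connector 75 W.
SPEC_LIMITS_W = {"slot": 75.0, "6-pin": 75.0}


def check_budget(draw_w: dict[str, float]) -> dict[str, float]:
    """Return how many watts each source is over its limit (0.0 if within spec)."""
    return {
        source: max(0.0, draw_w[source] - limit)
        for source, limit in SPEC_LIMITS_W.items()
    }


# Split quoted in the thread: roughly 80 W from the slot, 75 W from the 6-pin.
gaming_draw = {"slot": 80.0, "6-pin": 75.0}

total = sum(gaming_draw.values())
print(f"total: {total:.0f} W vs {sum(SPEC_LIMITS_W.values()):.0f} W budget")
print(check_budget(gaming_draw))
```

With that split, the whole overage lands on the slot side, which is the part the motherboard has to carry.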

No, I didn't. He said at stock clocks it wasn't a cause for concern, only when overclocking. What did I misread about that?

Well, for one thing, XFX is already selling a 1328MHz version, and PCPer and Tom's reported 100W with just 1310MHz.

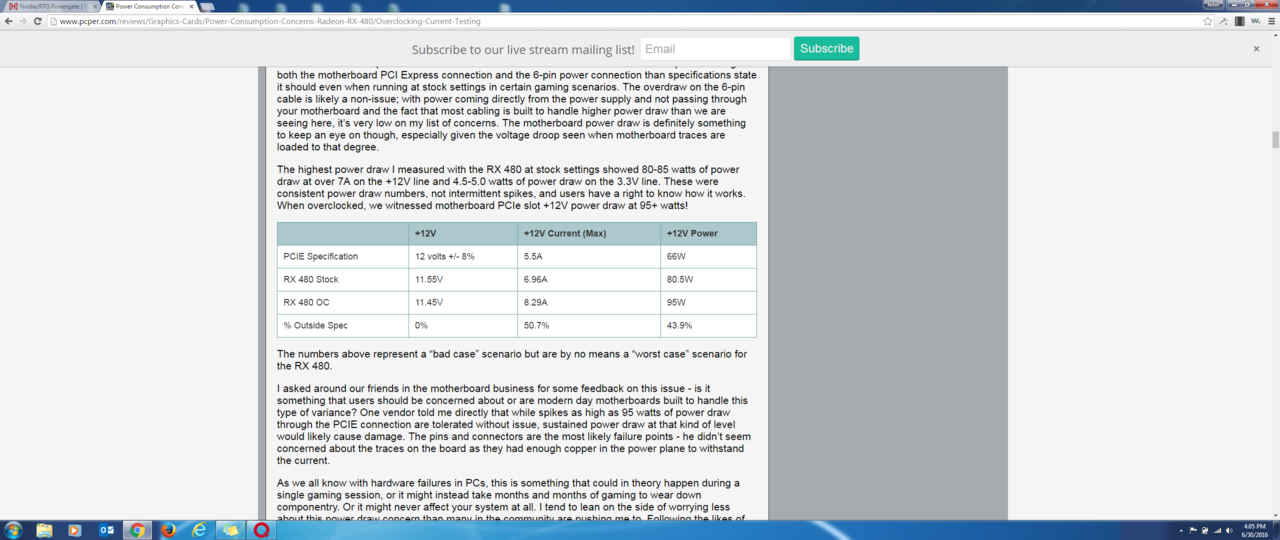

The cause for concern starts at 95W according to PCPer, and it's doing 86W at stock, 14% above spec in terms of power on 12V.
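Here is the arithmetic behind that percentage as a small sketch. It assumes the 14% is measured against the 75W total slot limit. The post doesn't say which limit it used, so treat that as a guess. The 86W and 95W values are the ones quoted above.

```python
def percent_over(measured_w: float, limit_w: float) -> float:
    """Percentage by which a measured draw exceeds a limit (negative if under)."""
    return (measured_w / limit_w - 1.0) * 100.0


STOCK_SLOT_W = 86.0   # stock figure quoted in the post
CONCERN_W = 95.0      # PCPer's "cause for concern" level, as quoted
SLOT_LIMIT_W = 75.0   # assumed limit the 14% is measured against

print(f"{percent_over(STOCK_SLOT_W, SLOT_LIMIT_W):.1f}% over the 75 W limit")
print(f"{CONCERN_W - STOCK_SLOT_W:.0f} W of headroom before the concern level")
```

Against 75W this comes out to about 14.7%, so the post's 14% reads as a rounded-down figure. If the comparison is instead meant for the slot's 12V rail alone (5.5A, i.e. 66W, in the PCIe CEM spec), the same function gives roughly 30%.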

At stock speeds the card shouldn't break the ATX spec. When overclocking, one can expect this to happen, and the onus is on the end user to know the risks of overclocking a reference card. AMD could just as easily have added an 8-pin connector to mitigate the issue.

Why does everything come as an afterthought to hardware designers at AMD?

But like someone said, some Nvidia cards do it too, but it only matters when AMD does it? Or should we just brush that aside because they are older cards and this is new?

Again, it's about sustained vs. spikes.

Can't we just read some cool tech speak without every motherfucker in town trying to prove how god damned smart they are?

Watch, this is just going to go away in a week when the AIB cards come out and people are all over them testing.

What AMD is going to do is throttle the boost clock so it boosts less to stay below 150W. Problem solved.

They will be like "boost clock is boost clock, it ain't guaranteed," because by then the main focus will be aftermarket cards anyway.
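Here is a rough sketch of what "boost less to stay below 150W" would mean in practice. It assumes a hypothetical model where board power scales with clock raised to some exponent. That model and the exponent values are illustrative assumptions, not how AMD's firmware actually manages boost. The 155W and 150W figures come from this thread.

```python
def max_clock_fraction(power_w: float, cap_w: float, exponent: float = 1.0) -> float:
    """Fraction of the current clock that keeps estimated power at or below cap_w.

    Hypothetical model: P(f) = P0 * (f / f0) ** exponent.
    exponent=1 treats power as linear in clock; exponent of about 3 roughly
    mimics dynamic power f * V**2 with voltage scaled along with clock.
    """
    if power_w <= cap_w:
        return 1.0  # already under the cap, no throttling needed
    return (cap_w / power_w) ** (1.0 / exponent)


# Thread figures: about 155 W while gaming, 150 W target.
linear = max_clock_fraction(155.0, 150.0)
cubic = max_clock_fraction(155.0, 150.0, exponent=3.0)
print(f"linear model: run at {linear:.3f} of current boost")
print(f"cubic model:  run at {cubic:.3f} of current boost")
```

Under either assumption, shaving 5W off 155W only takes a few percent of clock. The linear model gives the more pessimistic (larger) cut.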

#ThrottleGate

What if pricing reaches near $280-300 for AIB cards? No one will care.

Any AIB 480 within $100 of a GTX 1070 is a bad buy. Hell, a 480 may be a bad buy altogether two weeks after the 1060 launch.

Apparently undervolting improves performance as well as reducing consumption.

Meh, you can't say that without knowing how far they are clocked. I would have to reserve judgment on that.

AMD's gonna have a CLA up its ass if it decides to release a BIOS that throttles power usage by reducing performance. This will be identical to the 3.5GB fiasco, but worse, since flashing a VBIOS is risky and technically voids the warranty, and AFAIK this capability is not in the drivers.

So basically AMD's gonna have to do a recall, or risk losing certification, which means not getting into big OEMs.

LOL, recall? They can easily adjust the boost clocks. Boost clocks are not promised, remember? I think you are getting way ahead of yourself. They can easily say the boost clocks were adjusting according to power draw, bam, end of story.

I think trying to dig up dirt on a card released a really long time ago is frankly a pathetic defense.

Since this now seems to be an issue with both companies, what are people's takes on both Nvidia and RTG failing the PCI-e spec? Regardless of spikes or usage, both companies make cards that go over the PCI-e spec.

AMD Radeon RX 480 8GB Power Consumption Results (RTG 480)

Power Consumption: Gaming - GeForce GTX 750 Ti Review: Maxwell Adds Performance Using Less Power (750ti Maxwell)

Tom's Hardware complains about RTG having the issue, but totally ignored the 750 Ti doing it as well.

So that shows bias from Tom's Hardware; sure, we can ignore that part.....

What I want to know, since we know both companies do it: what do people really think?

So can it be concluded that AMD was caught cheating?

I think there's definitely a deliberate aspect to this. AMD is not so naive or stupid as to leave this issue unresolved. The more I read, the clearer it becomes that they thought no one would catch this.

Makes me wonder about those rumors of AIBs being unhappy. I can see why they might be.

Nvidia lied to you for months about 4GB of RAM and sold you 3.5GB as 4GB. I didn't see it being an issue or people cooking that shit up and suing Nvidia; Nvidia told everyone to shut the fuck up and move on, and everyone did lol. They both do it. Caught cheating for what, though? Looks like the card uses too much power at max voltage; adjusting that or adjusting the boost clocks will be fine. There is no cheating if the boost clocks are not adjusting according to power.

No, the fact it exists is not a defense of AMD; it is like one kid saying the other kid stole a cookie too. It is misdirection, and it is also really old, so it's irrelevant to what AMD is doing now. Now, if the 1060 releases doing the exact same thing, yeah, we should be looking into that.

What? Not if it supports someone's argument that it violates the spec, just like everyone's claiming the RX 480 does. Fair is fair, no? So you throw away evidence just because it's old?

Again, two wrongs don't make a right. And this is a really pathetic defense. People still quote the VRAM fiasco, and so they should with the 480's power-gate issue.

It's either cheating or utter stupidity. Either way, it doesn't bode well for AMD. They are going to pay one way or another.

I thought they were sued over the 970?

Nvidia lawsuit over GTX 970

And it was a pretty big deal around here...

The 480 power issue is a possible problem because overcurrenting your mobo isn't the best thing to do. Hell, my Asus Rampage has an extra power connection for multi-GPU to help power the PCIe slots. What happens with the budget mobos these cards will go into?

I'd also have to wonder whether the cards are getting clean power off the PCIe slots. I guess it shouldn't matter, since the VRMs should clean it up.

This could have been the reason why it took a whole month for them to bring the card to market, and the whole reason why the cards run out of spec: a possibly desperate attempt to raise and stabilize the boost clocks to at least come close to a GTX 970 in overall performance.

Kyle Bennett:

Because AMD is trying to buy enough time to try and get the clocks up on production GPUs.

I really don't want to defend Nvidia in RAMgate, but here goes. The card had 4GB of RAM, period, no lie there, end of story; what they did was use slower RAM for the last 512MB, which is certainly misleading but is not the same as what AMD may be doing here.