I'm doing these benches for my "Best Old Card Project" on my website, http://hardwarebenchmark.googlepages.com/.

I was surprised by what I saw.

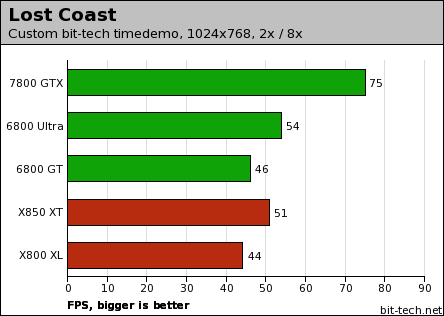

This is the Lost Coast built-in benchmark (HL2 is supposed to be an ATI game) on highest settings. Rest of the machine is in my sig.

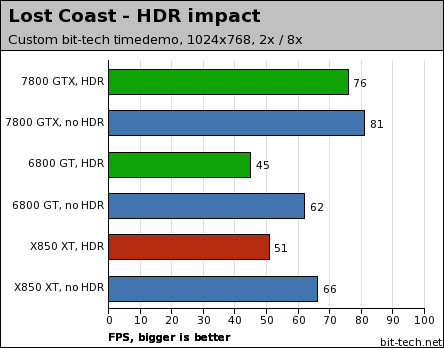

Yes, that is an 8600GTS 256MB up there with the X1950XT. I never expected the 8600GTS to be this strong, or so much better than the 7800GTX.

Used Catalyst 8.8 for the ATI benchmarks and 177.41 for the Nvidia benchmarks.

ADDED: Lost Planet Snow level DX9 benchmark. AA has not been enabled, as the Nvidia 7 series cards are not able to use it.

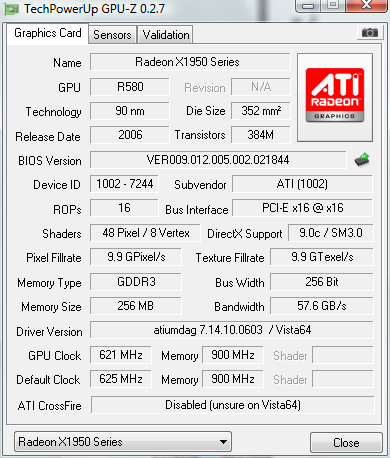

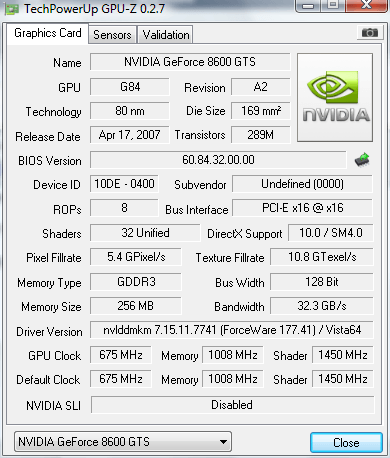

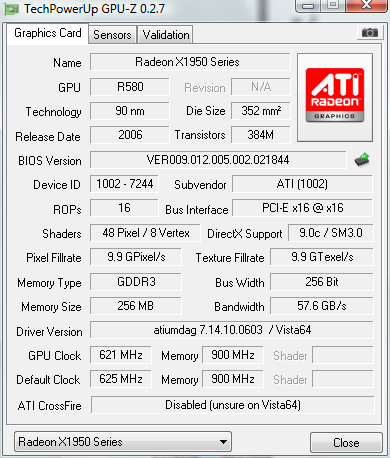

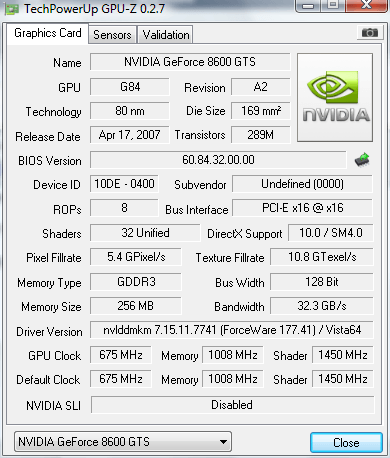

These are the GPU-Z readouts for those cards, all at default speeds.
