ChristianVirtual

[H]ard DCOTM x3

- Joined: Feb 23, 2013

- Messages: 2,561

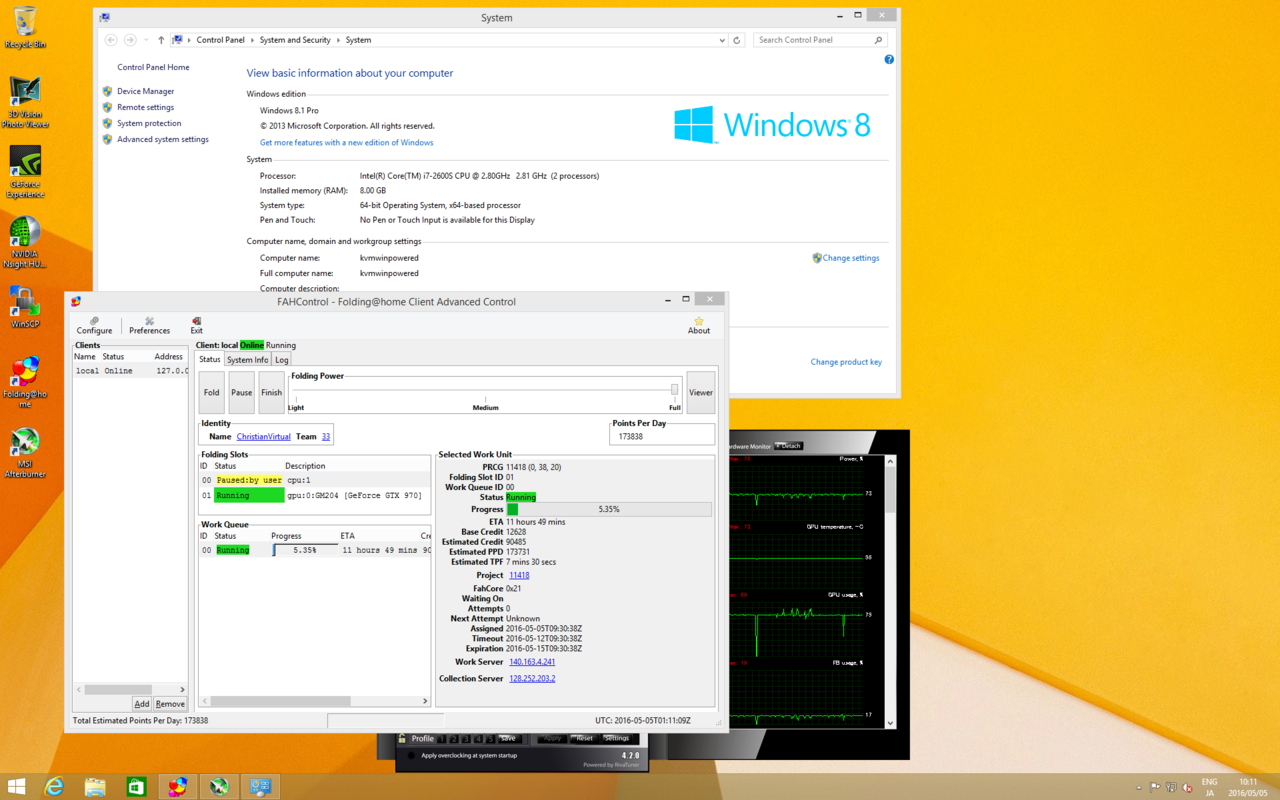

Since ESXi remains a problem with consumer GPUs, I'm starting to think about moving to KVM.

I would need some advice on the setup.

Idea:

1) CentOS 7 minimal as host

2) one CentOS guest with X and one GTX 980Ti

3) one Windows guest with GTX 970

I need to run the original GPU driver because I want to keep Folding running, and it does not work with 3rd party drivers. But I would also like to run both OS flavors at the same time.

Possible?

CPU is an i7-2600S; RAM is 8GB right now, but can go to 16GB.

