merlin704

The Great Procrastinator

- Joined

- Oct 4, 2001

- Messages

- 12,843

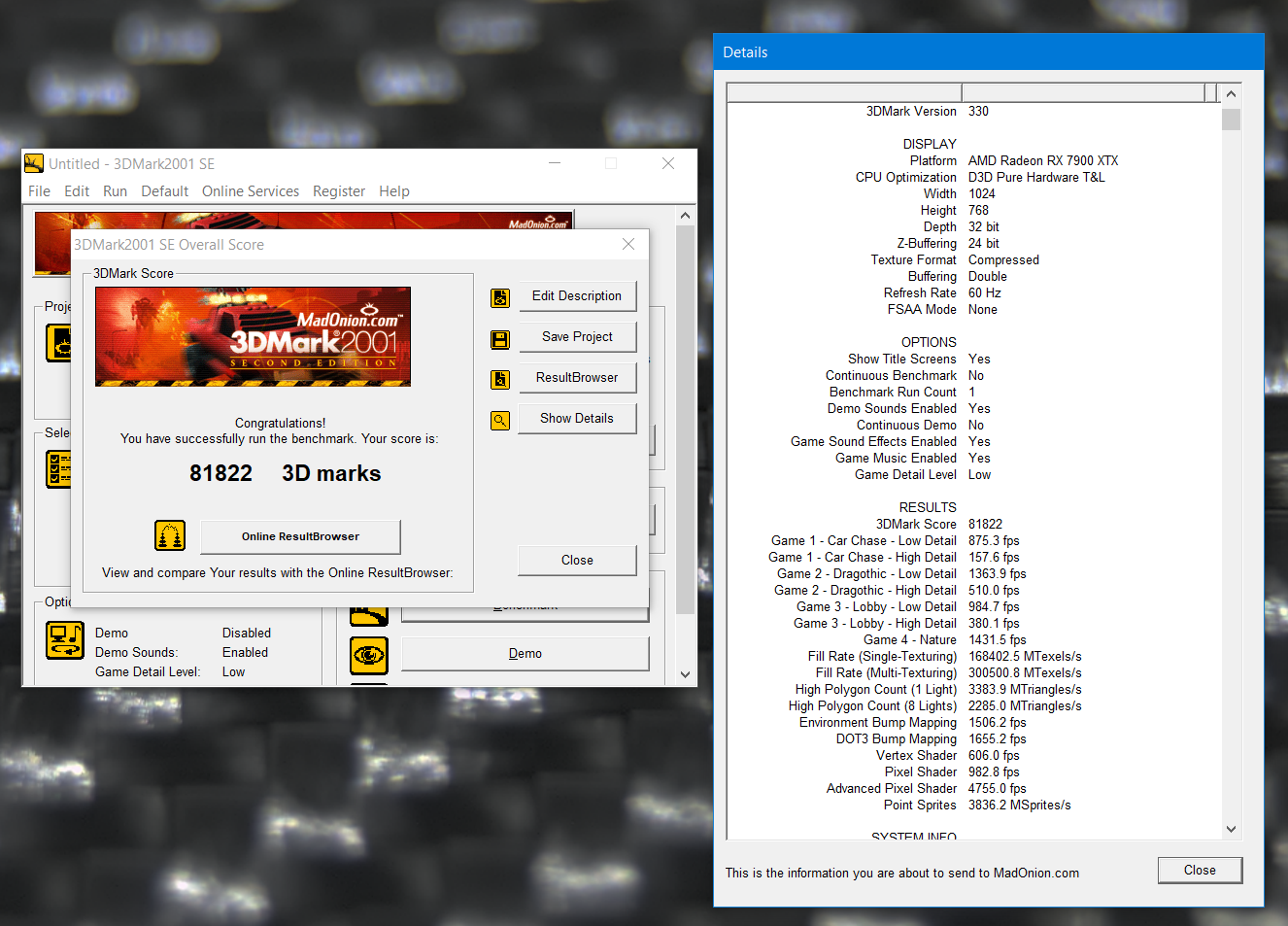

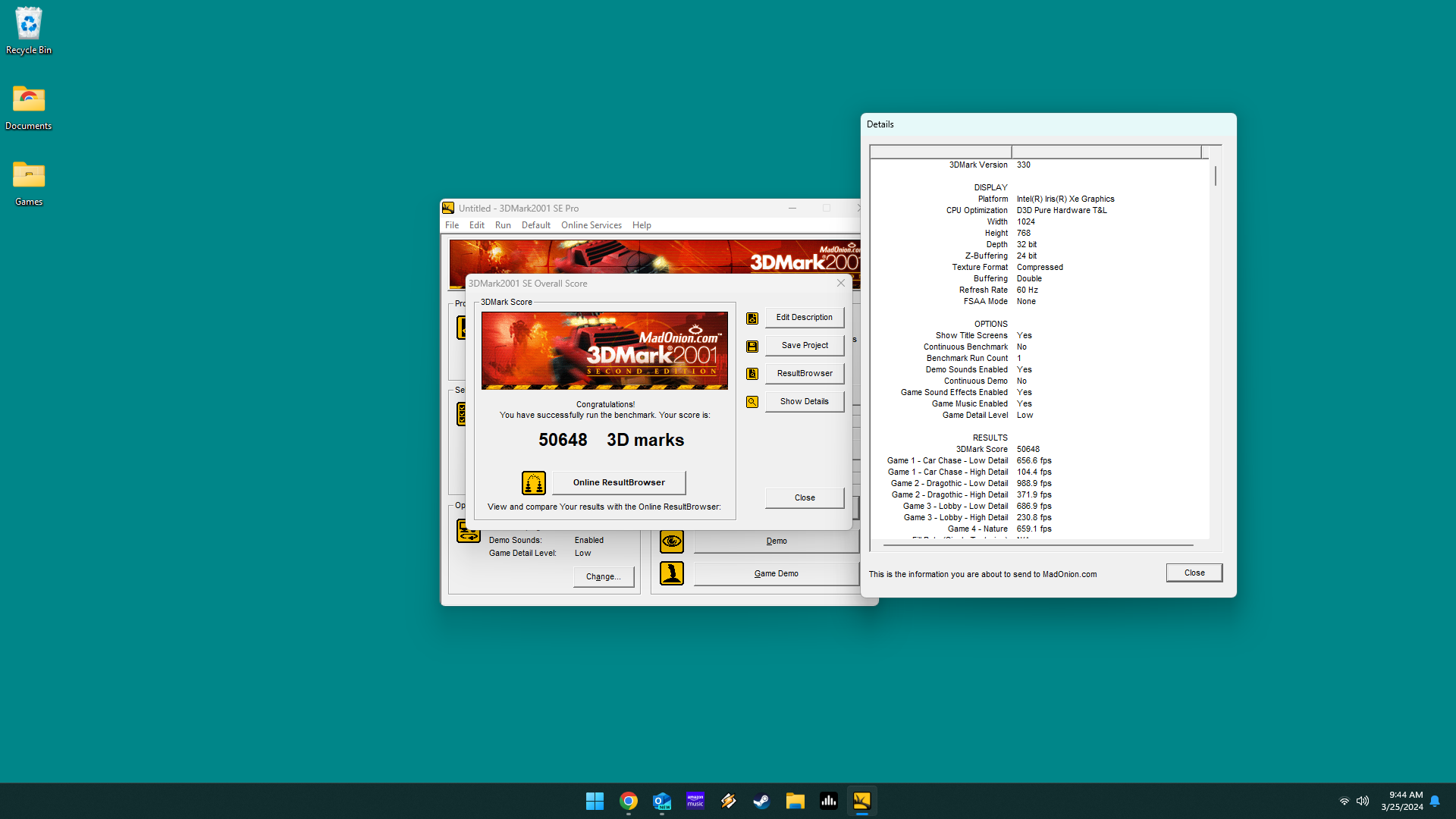

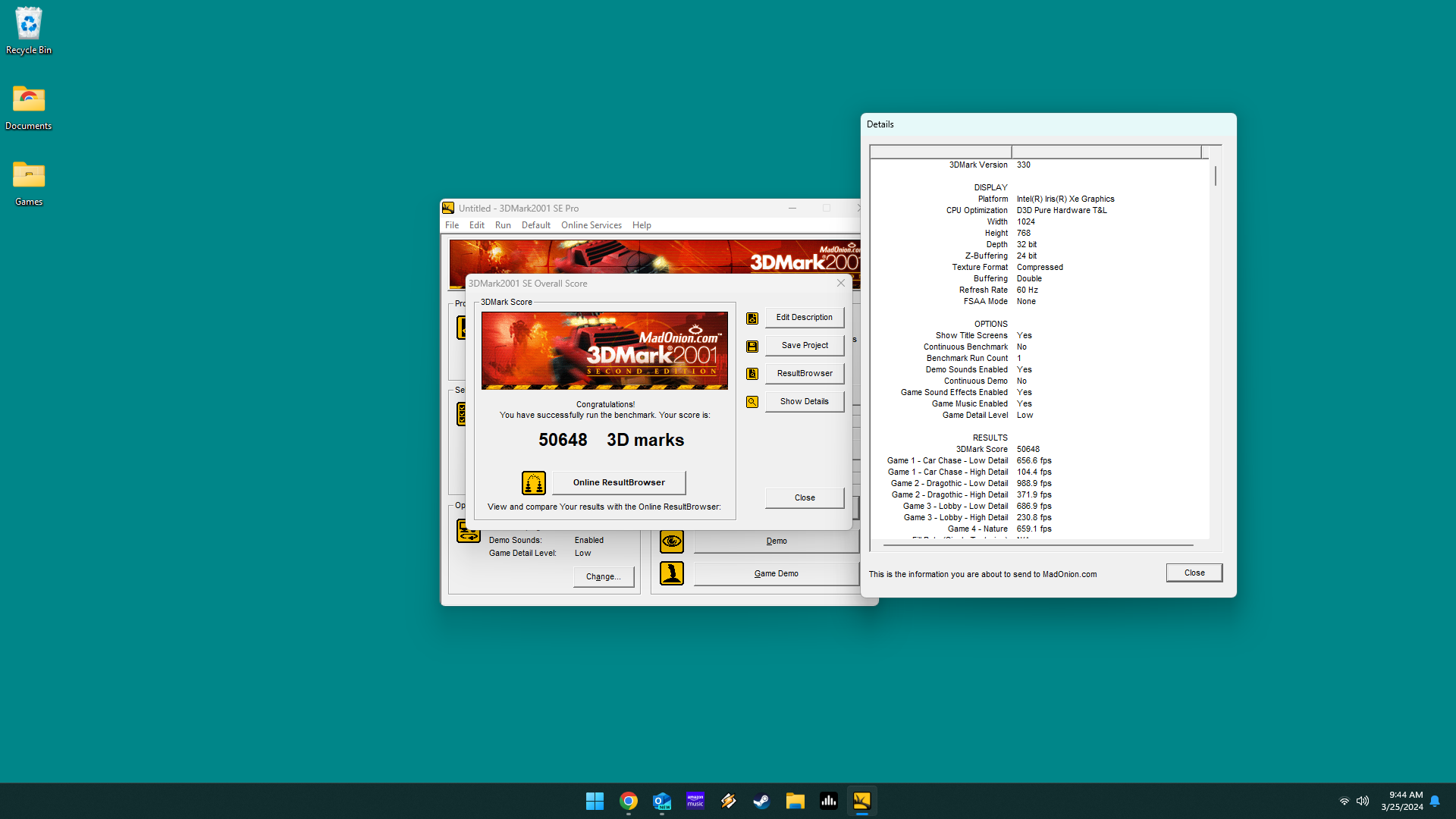

So just for fun, I decided to play around with 3DMark2001 SE on some modern hardware. This is not my main system, but one I built for retro gaming (mid-90s to 2000s), and it does very well for what it is. It's an Intel 11th Gen NUC.

Specs:

Intel i5-1135G7 @ 2.4GHz (boost 4.2GHz)

32GB Crucial DDR4 3200MHz

Intel Iris Xe Graphics

Samsung 1TB SSD

If you want to try it out on whatever system you have, here are the links you will need:

VOGONS thread on running and patching 3DMark2001:

https://www.vogons.org/viewtopic.php?t=51587

3DMark Legacy Benchmarks:

https://benchmarks.ul.com/legacy-benchmarks

If you want to compare your score with mine or with others here, use the default settings to keep things equal.

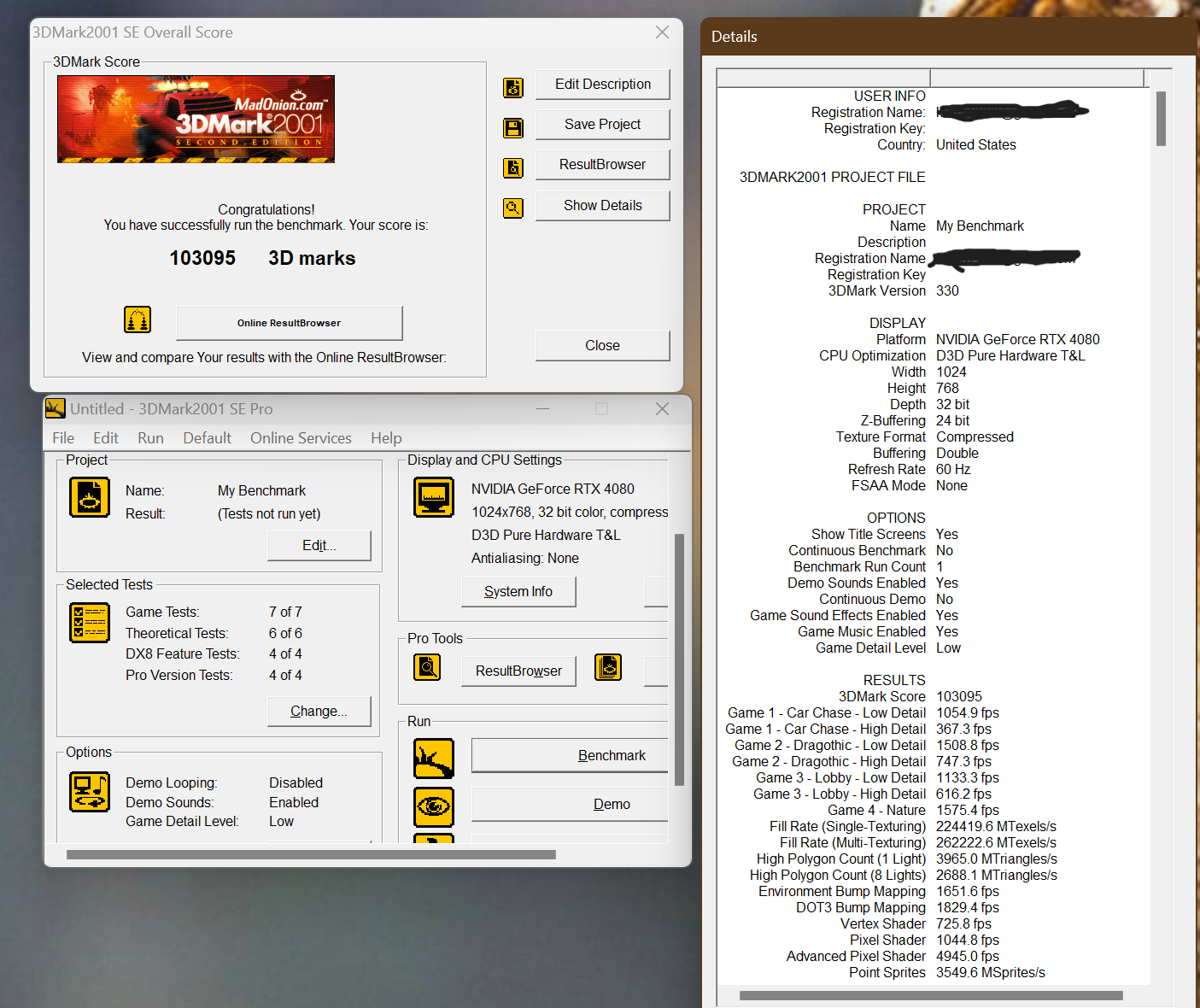

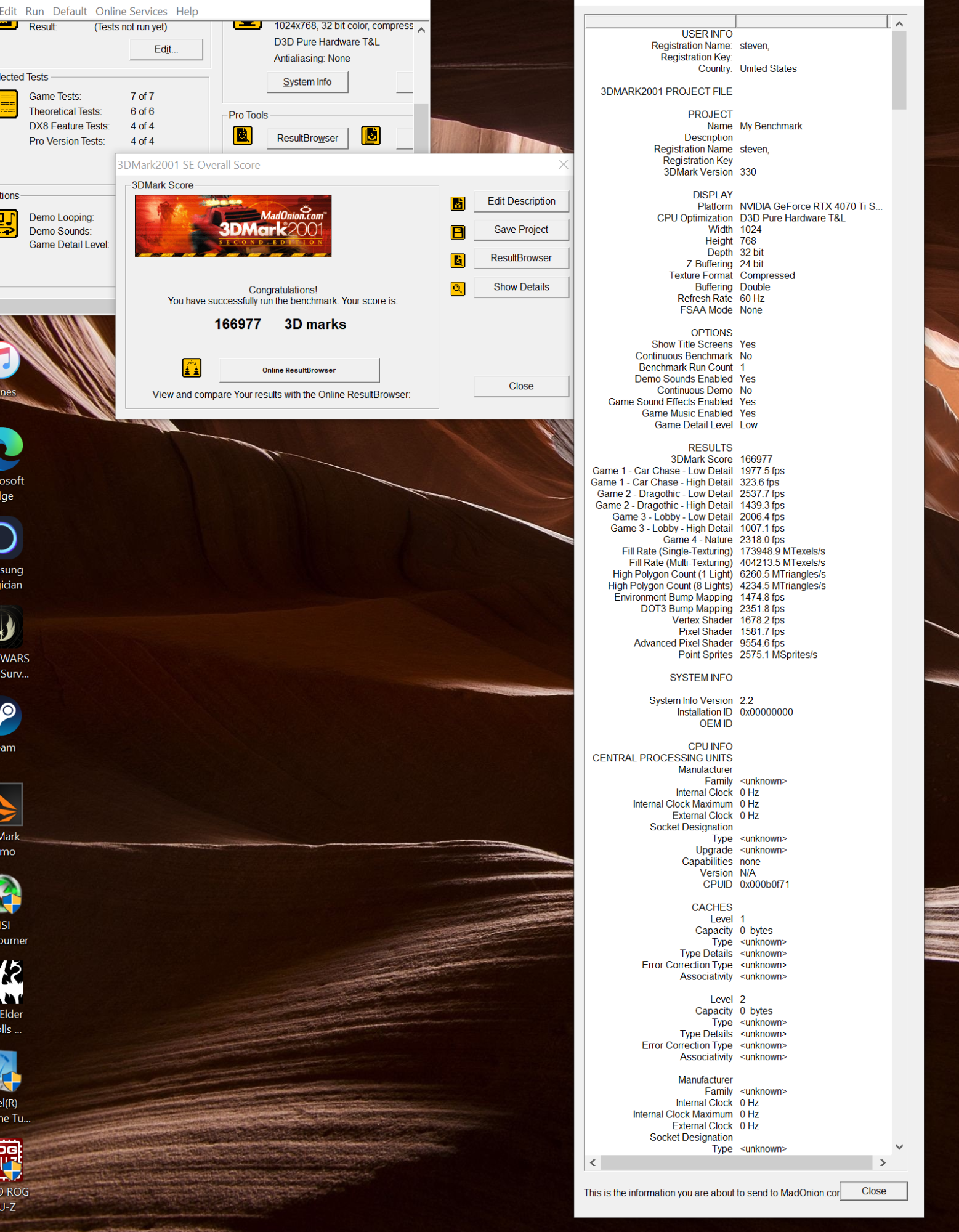

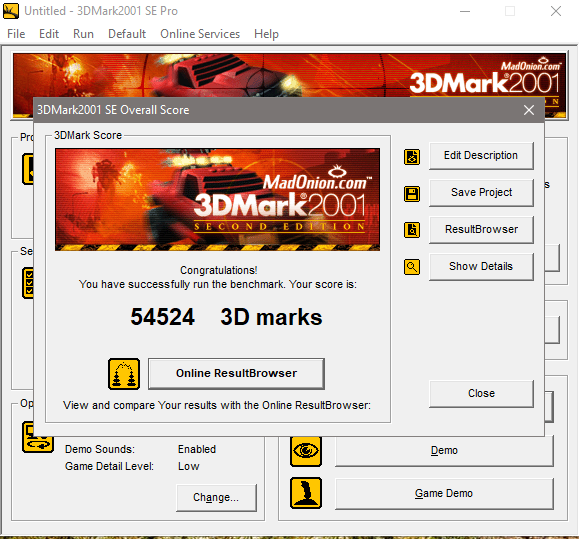

Here are my results:

