erek

[H]F Junkie

- Joined: Dec 19, 2005
- Messages: 11,156

Interesting as always!

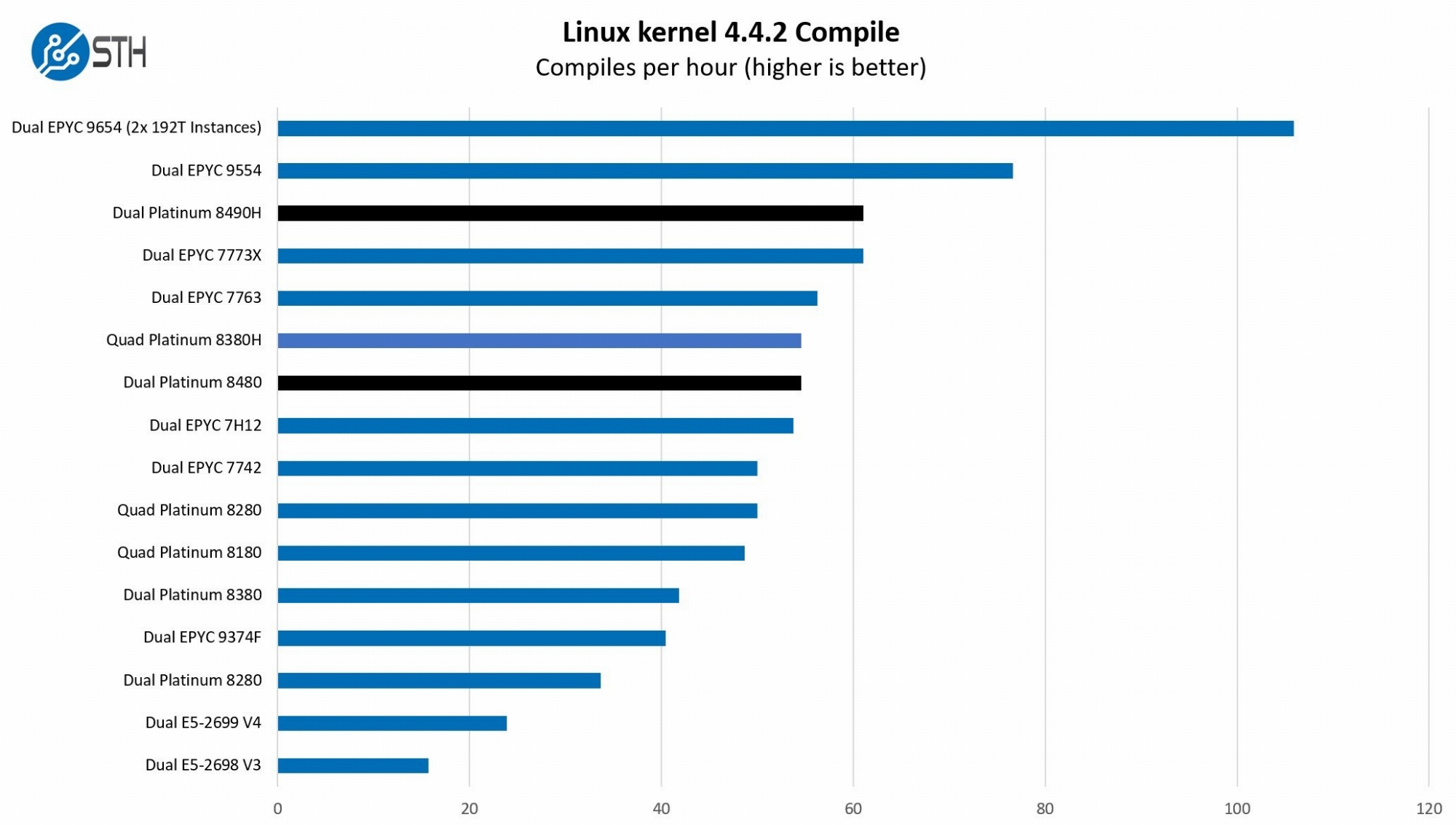

"Intel knows what AMD launched with Genoa. Some of the list pricing of Sapphire Rapids looks almost like it was designed to discount. The market will sort that out. While Intel does not have a direct socket-to-socket top-bin competitor to AMD, what it does have is a range of products under 200W TDP, almost as many 32-core SKUs as AMD has in its entire EPYC 9004 SKU stack, and scale. These lower power and core count SKUs move volume, ensuring that Intel has volume for its Sapphire Rapids parts and for its server OEMs."

Source: https://www.servethehome.com/4th-gen-intel-xeon-scalable-sapphire-rapids-leaps-forward/

https://www.phoronix.com/review/intel-xeon-platinum-8490h
