Damn, I just read a couple of reviews. I want my time back.

You went back for seconds?

I didn't believe the first review I read...

Microsoft is just dropping balls left and right.

Hardware has simply outgrown a microkernel OS. Windows, as it exists at least, doesn't have a great future. The companies making these complicated, disparate core/NPU/SoC-type modern processors need to be doing the direct work on kernel drivers and the required scheduler changes. Just imagine how bad the previous gens of Intel big.LITTLE chips would be if they didn't do on-board scheduling.

Azure is their most profitable platform and it doesn’t even run on Windows.

They know it’s cooked.

You can see it in W10. It doesn't have the updated scheduler for 12th-14th gen, and it's common for it to put apps and games on E-cores when it shouldn't.

I am not sure how much that matches the video quoted, which gives examples of it being worse than 14th gen on Linux at certain things while being better at certain others, and that seems to be the same as on Windows...

I think this may be Intel's Zen 1 moment. A refresh on this should be a major improvement, I think. Isn't this also the first part out under Jim Keller?

Yeah, but Zen 1 was a massive improvement over AMD's previous gen; this is more like Intel's Bulldozer.

Keep in mind that Wendell tested on the 6.11 kernel, which he claims has patches from Intel, while Phoronix tested with the 6.10 kernel. Specifically, 6.11 supports EDAC and 80-bit wide memory. This might have played a role. This may be another situation where we'll have to see if Linux does what Windows doesn't.

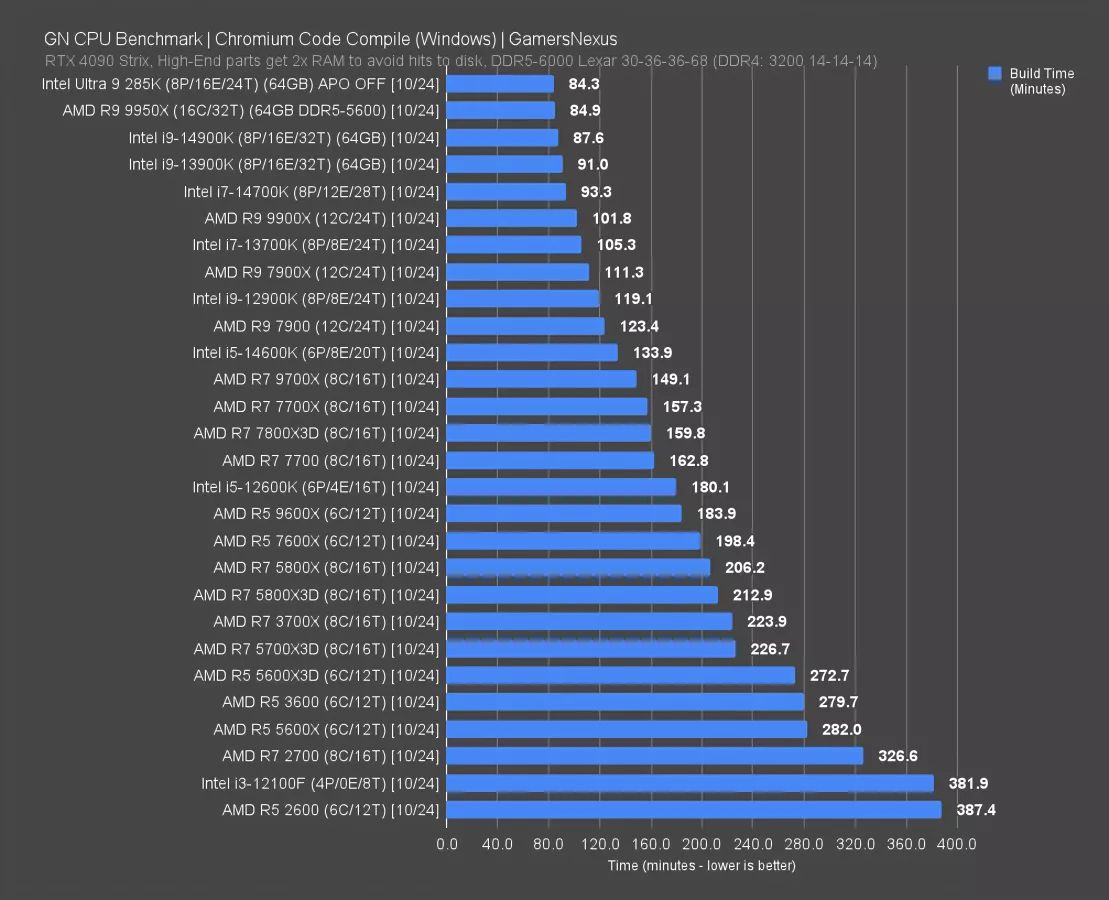

Being faster at compiling is not a Linux or Windows affair; it can be faster than the mighty 9950X at it on Windows as well:

View attachment 688515

The 285K's gaming drop is also terrible on Linux, not just on Windows, and it is all over the place too, no?

https://www.phoronix.com/review/intel-core-ultra-9-285k-linux/17

It is often the slowest CPU tested of them all (slower than the 14400F, 8600G, 5900X, etc.).

So, fun fact from watching more of the folks playing with early-release CUDIMMs: Arrow Lake is obscenely memory-speed constrained. It catches up heavily at 8400 MT/s, and if you bump the cache and especially the E-cores, it can match the 9950X in a lot of things and close the gap to the X3D models by quite a bit.

Of course, this relies on two things:

1. You can find CUDIMMs (not releasing until late November) and can afford them (they're only big modules so far, and $$).

2. You can get the OC working, since that's a major jump from stock, never mind JEDEC.

Now I'm intrigued, as we get to the 9000X3D launch and the release of CUDIMMs, to see what kind of impact they'll have together and how hard it will be to find the DIMMs. If they're not insane, I may be willing to tinker with Arrow Lake.

I would hope overclocking them would match AMD stock settings.

ROFL

Exactly right.

AMD's Bulldozer was much like Intel's NetBurst architecture. The difference is that AMD did nothing to fix Bulldozer, while Intel did a lot to fix the Pentium 4. The P4 went from terrible to pretty good once Intel gave it Hyper-Threading, dual-channel memory, and even 64-bit support. Piledriver was AMD's only attempt to make Bulldozer good, and after that they just made worse-performing versions on AM1 and AM2 platforms. There's a good chance we'll see improvements in future iterations of Arrow Lake. I don't know if they'll be able to keep up with AMD's improvements going forward. What Intel needs is to hire serious engineers to give Intel their next Sandy Bridge.

AMD didn't really have the funds to completely redesign their long-pipeline screw-up. It took some wiggle and a CEO change to get the company committed to a redesign with Zen. To be fair, it took Intel a long time to mostly fix the P4; even the very last P4s were still not great chips... the Core chips were a lot better out of the gate. If I remember right, the Core chips had 40% better IPC than the P4, and as they moved to an actual new node they ran at almost the same frequency.

This isn't the first time Intel moved the memory controller off die. The first time I recall them doing it was with first generation Core (Clarkdale). I don't think they moved the IMC back onto the processor die until 11th generation Core (Raptor Lake).

Core didn't have an on-die memory controller. Nehalem was the first Intel CPU to have that. All of them until now have had integrated memory controllers, all of which were on-die.

AMD and Intel tend to take their time redesigning their CPU architectures, but once made they have to commit to them for years. AMD didn't try to enhance the Bulldozer architecture, but instead just made iterations with GPUs, which had a tendency to slow down the CPU. There were some FM1 and FM2 socket chips that didn't have GPUs built in, and those were the best versions to get, but they were still slower compared to Trinity.

The Pentium 4 did get a lot better, and Intel made sure to promote benchmarks like VirtualDub to show off its performance. VirtualDub could make use of Hyper-Threading and of course the NetBurst architecture. Like Apple with Geekbench, it was one of the few benchmarks where it did well. Eventually Intel sneaked in the Pentium M for laptops, which eventually evolved into the Core Duo and Core 2 Duo. All Intel did was go back to the Pentium 3 and make the Pentium M. Adding 64-bit to the Pentium 4 and Core Duo was no easy feat for Intel.

To be fair, I liked Bulldozer. Bulldozer had the same problem that AMD also had with Zen 5, in that Windows wasn't ready for it. Windows 7 needed a patch to fix its performance, which nobody ever installed. It took Windows 10 to get the best performance out of Bulldozer without needing the user to hunt down an obscure patch. Since reviewers weren't keen to go back and review chips twice, it's not like Bulldozer had a good start. Sounds just like AMD's Zen 5 situation, doesn't it? I do believe that we're even seeing this with Intel's Arrow Lake chips too. On Linux, the 285K isn't doing that badly. It's losing to AMD's 9900X, but it's overall faster than the 14900K. Now we're learning about CUDIMM memory and how it plays a role for Arrow Lake. I do believe that Intel will turn Arrow Lake lemons into lemonade over time. I also believe that Windows is yet again playing a role in the lackluster performance to some degree. I don't know if gaming performance can be fixed with Arrow Lake, as it doesn't seem to do well on Linux either, but who knows.

I remember the Athlon XP chips being absolutely amazing then... kept me going till I snagged a Core 2 Quad Q6600 and it felt like a massive jump. Never ran a P4, went from a P3 to an XP.

Not that it means anything anymore... Bulldozer is also a lot better in hindsight than it was at launch. Mostly because Intel didn't get caught at the time for having zero security on their branch prediction. For the best anyway, 'cause yeah, Bulldozer was terrible; if it had actually sold, AMD might have stuck with that long-pipe design. :/

The Q6600 was a beast.

Intel moves most of their product on mobile, so I'm thinking they have things tuned for LPDDR5 speeds. So the CUDIMMs are going to be key there.

P4 was the height of Intel's super ego. They tried to push Rambus on consumers (initially). They thought they were going to move servers and workstations to 64-bit Itanium, which consumers had no need of. They designed NetBurst to have a really long pipeline mostly so they could crank up frequency, which I still say was more about marketing than it was about actual performance. Most people forget how dumb the average PC buyers were back then... and Intel management was embarrassed and pissed that AMD shipped the first 1 GHz CPU. Two years later they got a 1.3 GHz P4 out the door. You didn't miss much skipping the P4. The later P4s were decent... but Intel was easily able to best them when they went back to a more traditional core.

Yup. First of all, the P4 was no stopgap. It was an entirely new architecture and lasted for quite some time. It had six revisions. It used twice the power of the Athlons at the time on a node twice as advanced, and ran a full 1 GHz faster to give performance that was just similar to the Athlons at the time. If you ran video encoding it was great; for anything else the Athlon would usually beat it. Bulldozer largely flopped because A) its cache hierarchy was more secure (slower but correct) and B) its node was nowhere near Intel's. Those two things killed it. Furthermore, at the time people didn't know that Core was a security nightmare. You're talking from 2008 until Skylake in 2019. Core should have been on average 15 to 20% slower. I really don't think AMD could have worked through all of that any other way. I'm pretty sure it knew of the problems with Core, but what was it really going to do? Nothing.

I rocked an AMD Thunderbird Athlon 1.4 GHz for a long time. It cost a fraction of what a P4 setup did and destroyed it in almost all metrics. At that time I was young and selling PCs for a living... all day I saw people who would see the larger frequency number and say "I want that one." I rarely talked them out of it... the world had commissioned PC sales people back then. lmao I also got a few P4 systems... Intel used to give sales people systems now and then, and a few times they offered stupid cheap pricing like mobo and CPU for 1/8th retail... I set up my parents and my sister with my Intel freebies/low-costers. Intel was generous to sales people, to be fair. Thinking back, yeah, a lot of tech companies paid off sales people with freebies haha. I guess we were pretty cheap marketing really... in the time before internet reviews they gave the samples to the commissioned sales kids and hoped we would talk their stuff up.

Intel knew for sure, yes. They got lucky it didn't bite them earlier. I don't know what went on behind the scenes; I am not a tin foil hat type who believes the NSA pressured them to build their chips that way. It is interesting, though, that NSA-funded research pointed to the flaw way earlier than most people realize. Intel has security engineers; they had to have known this was an attack vector and how open their chips were to it.

I had a couple early cheap ones. They were stable, reliable, and not super competitive. Northwood-C was the only one that was, and that's because taking a 2.4 and running it to 3.06 or the like was EASY, and at that speed (where the Extreme Edition lived) it was competitive - and at a similar price to AMD (EE was $$$$, so competitive but too expensive).

I had a Pentium D running 3.8 or something on air, IIRC. I remember it was the highest air-cooled OC for an 820 on 3DMark back in the day, and it was 24/7 stable like that as well. I can't remember the exact OC, but it was nearly +1 GHz or right at it. Had the most golden sample of all golden samples.

Prescott was a disaster.

Yeah, Prescott was somewhat interesting though; that long pipeline is one of the things that hurt it. But if you could get the clocks up, you could see some potential, and it did OK. Another thing against it was that it ran warmer than AMD or the P4s before it. It's been 20 years, so that's about the best I can remember of it lol. I'm sure someone else on here could give a better recollection of it.

It was known as the Preshott - it COULD clock, if you had amazing water cooling or LN2, but it normally ran so hot it would thermally throttle before long. Precursor to the 14900/etc - it was a toaster. It wasn't significantly worse than Northwood, but it wasn't better, and by then AMD was running away with their dual-core chips. Core 2 was what blew the world up.

Yeah, +1 GHz or more on the clocks was not unheard of at all. It was a good chip.

Oh yeah, I know. I haven't forgotten Conroe. Man, that was an amazing chip/run, maybe better for its time than Sandy was for its time, IMO.

The initial socket 478 Prescott chips were a stopgap measure at best. The deeper pipeline was to facilitate higher clock speeds and depended more on branch prediction than earlier chips. These also had EM64T capabilities on die, but this was disabled until the LGA 775 CPUs came out. Intel banked on their manufacturing capability being able to eventually scale these CPUs to over 5.0GHz. (I remember Intel saying 5.5GHz and beyond or something like that.) The thinking was that it could make up the IPC deficit against the Athlon 64. Unfortunately, there were two problems with this approach. The architecture couldn't really scale that high. It was horribly inefficient and could only manage 3.73GHz with any reliability. There was a 4.8GHz model that barely saw the light of day before being taken off the market for this reason.

Intel tried to improve cooling efficiency with the BTX form factor, which no one was really interested in. This form factor failed to make enough of a difference in cooling anyway to make higher-clocked CPUs viable. Only bigger OEMs like Dell even adopted the form factor, and eventually even they abandoned it. If I recall correctly, tests were eventually done with LN2 getting Prescott CPUs to 5.5GHz or thereabouts, which proved that that approach wouldn't have saved them. As I understand it, the Pentium 4 still didn't beat the upper-end Athlon 64s decisively in benchmarks. Unfortunately, I can't find the article on that anymore, but that's what I remember.

Intel's chips did do very well at video editing type benchmarks and some other productivity tasks. I don't think this was an architectural advantage; it came down to how well Intel works with companies like Adobe to help them take advantage of their architecture and newer instruction sets. One thing that was hard to quantify is that Intel systems felt a lot smoother when multitasking than Athlon 64 systems did. Hyper-Threading really helped with that. Back then this was important if you wanted to run WinAmp or have anything going on in the background while gaming. That said, when the Athlon X2 came out, that advantage was no longer there.

Thanks, Dan. Yep, it really was a hot mess. I think I must've been buying some of what Intel was saying about the pipeline and clock speed lol. (It sounded good on paper.) And man, I forgot all about BTX. Dell is the only one I remember with BTX too.

FTFY: "I don't think they moved the IMC back onto the processor die until 11th generation Core (Rocket Lake)."

It really does seem like Intel has had an internal engineering civil war raging for a while. I mean, it's common for the big complicated silicon companies to have a tick and a tock design team... in general, though, they have one overall planning direction. How can it work if the teams don't agree on a fundamental unified design path?

Which is really unfortunate if that's the case. All the old-school Intel dudes I used to work with (30+ year guys) used to harp on the concept of "disagree and commit", which translates to putting your personal desires aside and aligning to the chosen path....

Except that's entirely wrong. Intel first integrated the memory controller with Nehalem. It's never changed that until Arrow Lake. Previous CPUs, including the Core and Core 2 families, all had memory controllers built into the PCH/chipset, not the CPU.

Yeah, I wasn't sure if he was correct or not. I was just meaning to correct him calling 11th gen Raptor Lake instead of Rocket Lake.

One of the most revolutionary releases of all time. That, Sandy Bridge for consumers, Athlon 64, the original Pentium MMX 200...