
Intel Core i9-7980xe

- Thread starter floridadoc


Shintai

Supreme [H]ardness

- Joined: Jul 1, 2016

- Messages: 5,678

The 7980XE NDA must have just lifted. Videos have been hitting YouTube in the last 10 minutes. It is officially a beast. There are videos of easy 4.6GHz overclocks and Der8auer going 6.0GHz+...

Someone is eating crow now.

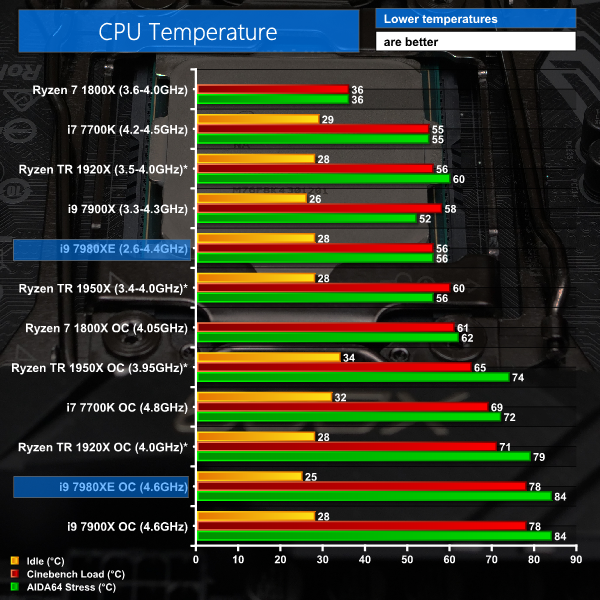

They run cooler than the soldered 1950X while having 2 moar cores. That's interesting.

Shintai

Supreme [H]ardness

- Joined: Jul 1, 2016

- Messages: 5,678

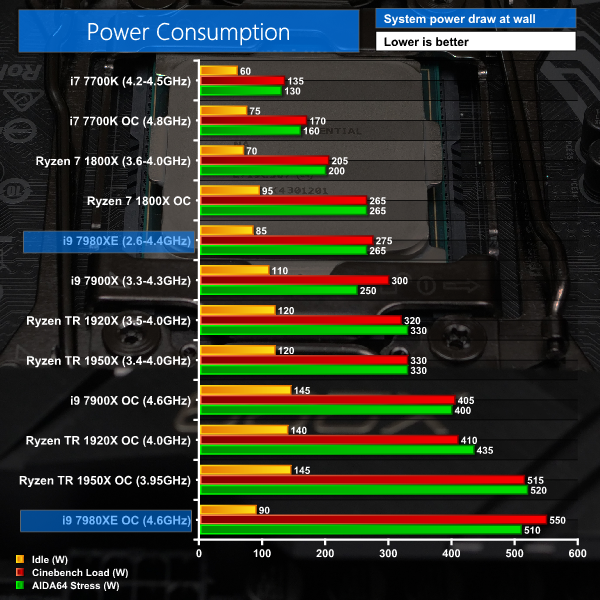

roflmfao, a mild overclock has the CPU pulling 500W

20% more performance than a 1950X for 80% more money, while sacrificing ECC and PCIe lanes... Intel has lost their fucking minds.

TR at 4GHz pulls 400W+ and performs nowhere near it.

Have you actually seen working ECC on TR? I'm not talking about just supporting the DIMMs. And ECC plus overclocking is a no-go as well.

How many PCIe lanes do you actually sacrifice? Remember the limitation on TR and EPYC: lanes are shared with other resources.

There is a reason why TR was priced as it was.

And here you don't have to sit and play die joking while switching between regular mode and gaming mode.
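Taking the quoted "20% more performance for 80% more money" figures at face value (they are the poster's numbers, not measurements), the value comparison reduces to one ratio. A minimal Python sketch; `relative_perf_per_dollar` is a hypothetical helper name:

```python
def relative_perf_per_dollar(perf_gain: float, price_premium: float) -> float:
    """Performance per dollar of chip B relative to chip A.

    Both arguments are fractions over chip A: 0.20 means "20% more".
    A result below 1.0 means chip B delivers less performance per dollar.
    """
    return (1.0 + perf_gain) / (1.0 + price_premium)


# Figures as quoted in the thread (not measured): the 7980XE is ~20% faster
# than a 1950X and costs ~80% more.
ratio = relative_perf_per_dollar(0.20, 0.80)
print(f"7980XE vs 1950X performance per dollar: {ratio:.2f}")  # 1.2 / 1.8 -> 0.67
```

On those numbers the 7980XE delivers about two thirds of the 1950X's performance per dollar; the ratio says nothing about power draw, overclocking headroom, or platform features, which is what the rest of the argument is about.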


I have not seen working ECC on Threadripper. Then again, I haven't tried it. I'm not interested in it personally, for all the reasons you mentioned. Threadripper benefits from higher RAM clocks, and ECC memory generally doesn't clock that high or overclock well. To answer your question, you sacrifice 4 PCIe lanes for the link to the PCH. This leaves 60 usable PCIe lanes for devices, though some are dedicated to specific purposes. You get 8 more from the PCH for a total of 68, but those are sadly only PCIe 2.0. The GIGABYTE X399 Aorus Gaming 7 leaves you with 48 usable PCIe lanes via expansion slots; M.2, USB 3.1, etc. consume the rest.

motherboard and two PLX chips

There is no context here. I have no idea what the hell you are trying to say. No one, or nearly no one, bothers with PLX chips anymore, because their cost has gone up and the HSIO architecture in Intel chipsets eliminates the flexibility advantage PLX chips provided. Motherboard manufacturers no longer need to employ a fleet of PCIe switches and bridge chips to give a motherboard flexibility. HSIO allows PCIe lanes to be allocated in groups of 4 for any purpose. This is why we only have eight SATA ports instead of ten: you get those in two groups of four.

On the AMD side, I'm not sure whether they still need the multitude of PCIe bridges, but you have 60 PCIe lanes, and each PLX chip consumes 16 for connectivity and gives back double that number. However, since you are multiplexing lanes, the 16-lane CPU-to-PLX link ends up as the bottleneck. Given that there is no difference between running graphics cards on 8 PCIe lanes and on 16, what would be the point when motherboards like the aforementioned X399 Aorus Gaming 7 give you 48 usable lanes and thus the ability to run four GPUs in a 16x/16x/8x/8x configuration? PLX chips make no sense when they would raise the cost of the motherboard substantially with zero gains while introducing additional latency.
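The four-GPU layout above is simple lane arithmetic: 16+16+8+8 = 48. A minimal Python sketch, assuming the 48 usable slot lanes quoted for the X399 Aorus Gaming 7 and standard PCIe 3.0 signalling (8 GT/s per lane, 128b/130b encoding), that checks a slot layout against that budget and reports what each slot can carry. `plan_slots` is a hypothetical helper, not a board utility:

```python
# PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding, which works out
# to roughly 0.985 GB/s per lane in each direction.
PCIE3_GB_PER_LANE = 8.0 * 128 / 130 / 8

# From the thread: the X399 Aorus Gaming 7 exposes 48 CPU lanes through its slots.
SLOT_LANES_AVAILABLE = 48


def plan_slots(widths: list, available: int) -> dict:
    """Check whether a set of slot widths fits the lane budget and report
    the per-slot PCIe 3.0 bandwidth (GB/s, one direction)."""
    used = sum(widths)
    return {
        "lanes_used": used,
        "fits": used <= available,
        "gb_per_slot": [round(w * PCIE3_GB_PER_LANE, 2) for w in widths],
    }


four_gpus = plan_slots([16, 16, 8, 8], SLOT_LANES_AVAILABLE)
print(four_gpus)
# {'lanes_used': 48, 'fits': True, 'gb_per_slot': [15.75, 15.75, 7.88, 7.88]}
```

By this math an x8 PCIe 3.0 slot still carries roughly 7.9 GB/s in each direction, half of an x16 slot, while a 16x/16x/16x/16x layout would need 64 slot lanes and would not fit.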

Looks good to me even with thermal grease instead of solder. You can probably run this with an air cooler lol.

thesmokingman

Supreme [H]ardness

- Joined: Nov 22, 2008

- Messages: 6,617

Der8auer had this under LN2, and it really ate up all the LN2 on its way to some seriously crazy overclocks. With the LN2 at -100°C, the CPU still pushed temps to over 0°C, which is a feat in itself lol. At the same time, it could have melted a hole in the floor on its way to consuming 1000 watts. That is just insano.

Why would you buy a $2K CPU and slap a band-aid on it, huh? That's if they even come out with a band-aid board, yeah?


pendragon1

Extremely [H]

- Joined: Oct 7, 2000

- Messages: 52,029

biggest epeen is gonna cost ya!

TahoeDust

Limp Gawd

- Joined: Dec 3, 2011

- Messages: 502

https://www.bhphotovideo.com/c/prod...80673i97980x_core_i9_7980xe_x_series_2_6.html

Ready for order. This is where I bought my 7820X. Pre-ordered and it shipped on launch day. Good company.

floridadoc

n00b

- Joined: Apr 2, 2017

- Messages: 40


I pre-ordered on Newegg. It says it will ship in 10-20 days.


You have some stuff to say. PLX doubles PCIe bandwidth across 7 PCIe connectors. PCIe bifurcation was a problem for fast M.2 SSDs.

AMD EPYC has the advantage of adding more fast flash storage than Intel, and cheaper. It's a great deal for those whose budget doesn't stretch to two Intel X299 systems. M.2 drives don't mind the 0.001 ms of lag that PLX produces. It makes sense to add more than GPUs to one system: InfiniBand, M.2 drives. Double bandwidth makes the most sense, at very low cost.

I ordered mine from BHPhoto. Amazon proved to be the slowest of them all.

Hagrid

[H]F Junkie

- Joined: Nov 23, 2006

- Messages: 9,163

The benchmarks look pretty good for the 7980XE and 7960X. They do beat TR, but not nearly enough to justify $700 to $1K more. I guess if you want the fastest and/or have money to burn, it would be good.

floridadoc

n00b

- Joined: Apr 2, 2017

- Messages: 40


I'm just looking forward to finishing my system. It's the last piece I need (aside from the inability to do cable management better than a mentally-challenged monkey).


Mistermill

n00b

- Joined: Sep 25, 2017

- Messages: 7

So with the ASRock X299 ITX, it is finally possible to break 1000 watts in an ITX system.


We aren't talking about EPYC or the server space. We are talking about HEDT, which is Core i9 / X299 and Threadripper / X399. First off, PLX chips do NOT, never have, and couldn't possibly double bandwidth. If you think that, you have zero idea of what those chips do or how they work. PLX chips multiplex the existing lanes: each one takes 16 lanes that go directly to the CPU for connectivity and offers 32 on the slot side. However, those 32 lanes still bottleneck down to 16 when going to the CPU. Period, end of story. PLX chips have been shown to offer no real-world advantage beyond working as a PCIe switch and providing dynamic lane allocation to slots. This improves platform flexibility, but it doesn't add actual PCIe lanes. I believe it was AnandTech that reported PLX chips were now 3x their original cost, and as a result they've priced themselves out of the market. Those chips were already listed on PEX's website at $70 a chip, though I'm sure buying in bulk cut that figure down to some degree.
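To illustrate why a PLX-style PCIe switch adds slot lanes but not CPU bandwidth, here is a simplified Python model under stated assumptions: PCIe 3.0 links, every device behind the switch busy at the same time, and a single x16 uplink to the CPU. The two-slot layout is hypothetical, and `cpu_bound_throughput` is an illustrative name:

```python
# PCIe 3.0: 8 GT/s per lane, 128b/130b encoding -> ~0.985 GB/s per lane, per direction.
PCIE3_GB_PER_LANE = 8.0 * 128 / 130 / 8


def cpu_bound_throughput(downstream_lanes, uplink_lanes=16):
    """Aggregate GB/s (one direction) that all devices behind a PCIe switch
    can move to or from the CPU at the same time.

    Simplified model: every device saturates its link at once, so the total
    is the downstream demand capped by the switch's uplink to the CPU.
    """
    demand = sum(downstream_lanes) * PCIE3_GB_PER_LANE
    uplink = uplink_lanes * PCIE3_GB_PER_LANE
    return min(demand, uplink)


# Hypothetical board: one switch fanning an x16 uplink out to two x16 slots (32 slot lanes).
behind_switch = [16, 16]
direct_x16 = 16 * PCIE3_GB_PER_LANE
print(f"{sum(behind_switch)} slot lanes -> {cpu_bound_throughput(behind_switch):.2f} GB/s to the CPU")
print(f"single direct x16 link -> {direct_x16:.2f} GB/s")
```

Both lines come out at about 15.75 GB/s: 32 slot lanes behind the switch reach the CPU no faster than one direct x16 link. The model deliberately ignores peer-to-peer traffic between devices on the same switch, which does not have to cross the uplink, and it ignores the latency the switch adds.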

We do not need PLX chips, and they introduce far more than 0.001 ms of latency; 0.001 ms wouldn't show up as the 1-3 FPS loss seen in multi-GPU gaming. Both Intel and AMD systems have 68 PCIe lanes, many of which are available to the user via M.2 slots or PCIe x16 slots.

So this platform now has 68 PCIe lanes?

For X299 systems, 44 PCIe lanes are provided by the CPU and 24 lanes by the X299 PCH: 44+24=68. All of them on the Intel platform are PCIe 3.0 compliant. For AMD it's the same total, but the breakdown is different. AMD has 64 lanes provided by the CPU, 4 of which are used for the connection to the X399 PCH. This leaves 60 usable CPU-based PCIe 3.0 lanes. The X399 PCH has 8 PCIe lanes, but they are only PCIe 2.0 compliant. Thus, 64-4+8=68 PCIe lanes. However you slice it, both Intel's and AMD's HEDT platforms have 68 PCIe lanes. How many are usable depends on the motherboard's design and what's integrated into it.
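The lane totals above can be checked mechanically. A minimal Python sketch using the counts from the post; the `Platform` class is purely illustrative. One assumption worth flagging: on X299 the CPU talks to the PCH over DMI 3.0 rather than over its 44 PCIe lanes, so no CPU lanes are subtracted there, and the 44-lane figure applies to the 7900X and up, not the lower X-series parts.

```python
from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    cpu_lanes: int           # PCIe 3.0 lanes from the CPU
    chipset_link_lanes: int  # CPU lanes spent on the CPU-to-chipset link
    chipset_lanes: int       # extra lanes provided by the chipset (PCH)
    chipset_gen: str         # PCIe generation of the chipset lanes

    @property
    def usable_cpu_lanes(self) -> int:
        return self.cpu_lanes - self.chipset_link_lanes

    @property
    def total_lanes(self) -> int:
        return self.usable_cpu_lanes + self.chipset_lanes


# Counts as given in the post. X299 links to its PCH over DMI 3.0 (0 CPU lanes used);
# X399 spends 4 of Threadripper's 64 lanes on the chipset link.
x299 = Platform("X299 + 7980XE", 44, 0, 24, "3.0")
x399 = Platform("X399 + Threadripper", 64, 4, 8, "2.0")

for p in (x299, x399):
    print(f"{p.name}: {p.usable_cpu_lanes} CPU + {p.chipset_lanes} PCIe {p.chipset_gen} "
          f"chipset = {p.total_lanes} lanes")
```

Both platforms land on 68; the difference is where the lanes come from (60 CPU lanes on X399 versus 44 on X299) and the PCIe 2.0 generation of X399's chipset lanes.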

As far as NVMe RAID is concerned, both can now use CPU lanes for such devices, but Intel has extra restrictions that AMD doesn't. I don't know how many devices AMD can use this way, but Intel can handle 20 NVMe devices in various RAID configurations. Intel can support RAID 5; I don't believe AMD does.


AMD supports it fine, and for free.

I'm going to quit responding if you don't post something that is a coherent thought or at least a complete sentence. I have no idea what you're talking about, and I'm not going to do any more guessing.

Hagrid

[H]F Junkie

- Joined: Nov 23, 2006

- Messages: 9,163

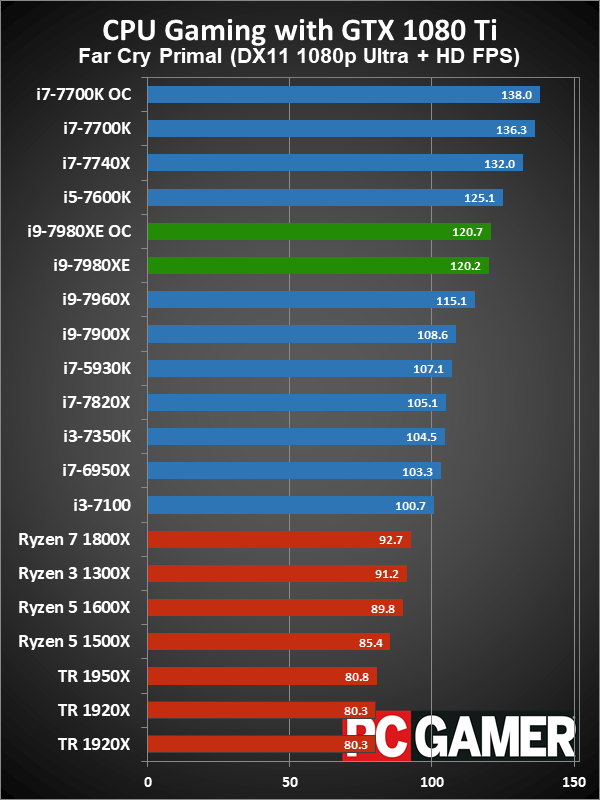

No TR OC

Also interesting: when you disable 8 cores to make it a 10/20 config, the larger L3 cache gives a noticeable boost versus the smaller one in the 7900X.

Basically, most of the new stuff plays games with a 1080 Ti just fine, even without an OC.

I wasn't comparing to TR lol

Hagrid

[H]F Junkie

- Joined: Nov 23, 2006

- Messages: 9,163

I meant on the list; they forgot TR OC.

Welp. B&H will only ship on October 23. I'm gonna try elsewhere.

floridadoc

n00b

- Joined: Apr 2, 2017

- Messages: 40


They seem to be very slow with new products. I got my MB from Amazon while waiting for B&H to get it in stock.

I'm going to quit responding if you don't post something that is a coherent thought or at least a complete sentence. I have no idea what you're talking about, and I'm not going to do any more guessing.

What he was referring to was that AMD supports RAID 5 and it's FREE of charge. He could have stated it better, but I got him.

Yes, it will be free of charge, whenever the driver and UEFI updates are actually released. However, I recall reading that it didn't support RAID 5. AMD never has in the past and doesn't on its SATA volumes as it is. It's always RAID 0, 1, or 10.

I also can’t wait for AMD to drop a TR part with 32 threads.

I believe they already did that with TR 1950X.

If you meant 32 cores, I wouldn't bet on it. Why would AMD modify the SP3 socket to make the TR4 socket and then go back to supporting 32 cores?


I was going to ask where Threadripper is in that graph. Never mind, I just found it. LOL

I meant on the list, they forgot TR OC.

Will it change things significantly?

Hagrid

[H]F Junkie

- Joined: Nov 23, 2006

- Messages: 9,163

No, overclocking does nothing to help performance. Do people still do such a thing?

Couple that Threadripper with a Vega 64 and you will see it at the top of the charts. That is the funny shit that all these Intel fanatics will never admit, because they lack the ability to remove themselves from their intense Stockholm syndrome biases.

nVidia and Ryzen play acceptably together, but there is some serious friction, and I have a strong gut feeling nVidia is playing driver politics in favor of Intel. It's funny how merely acceptably Vega performs on Intel, but when you plug it into a Ryzen system, suddenly the damn thing comes to life.

Is Vega 64 an efficient and highly powerful card? Hell no, I am not saying that. I am just saying that Intel and nVidia are having sex together or something, because this shit makes no sense: how can you plug a Vega into a Ryzen system and get like 50-60 more FPS vs. an nVidia card in quite a few titles?

I am not going to reference crap. The internet is chock full of crap just go and type in what you want to know and through magic or witchcraft or both you get an answer right in your browser.

And before you say I am just an AMD fanboy... I am on two 1080 Tis. Yes, I gave over $1800 to nVidia. So call me biased... I think not.

Shintai

Supreme [H]ardness

- Joined: Jul 1, 2016

- Messages: 5,678

Couple that Threadripper with a Vega 64 and you will see it at the top of the charts.

So the biggest turd of a graphics card in modern history is the excuse case?

If a site uses that card for any benchmarking outside the card alone, something is awfully wrong and their integrity can be rightfully challenged.

No, overclocking does nothing to help performance. Do people still do such a thing?

+1 for the sarcasm, -1 for ignoring my point. So no "like" for your post.

I asked you if overclocking the 1950X would "change things significantly". An overclock of the 1950X to 3.9GHz would put it below the R3 and R7 Ryzens, and still far from all the Intel chips, including the cheap i3. That overclocked 1950X would be about 33% slower than the 7980XE at stock settings.

So the answer to my question is "overclocking will not change things significantly".

Couple that Threadripper with a Vega 64 and you will see it at the top of the charts. That is the funny shit that all these Intel fanatics will never admit, because they lack the ability to remove themselves from their intense Stockholm syndrome biases.

The syndrome must affect AMD people as well, because engineers don't recommend mixing Threadripper with Vega. Indeed, AMD uses an i7-7700K in its gaming demos of Vega.

Do you know why even AMD uses Intel CPUs? Because the syndrome consists of getting the maximum performance possible.
