erek

Was this already posted?

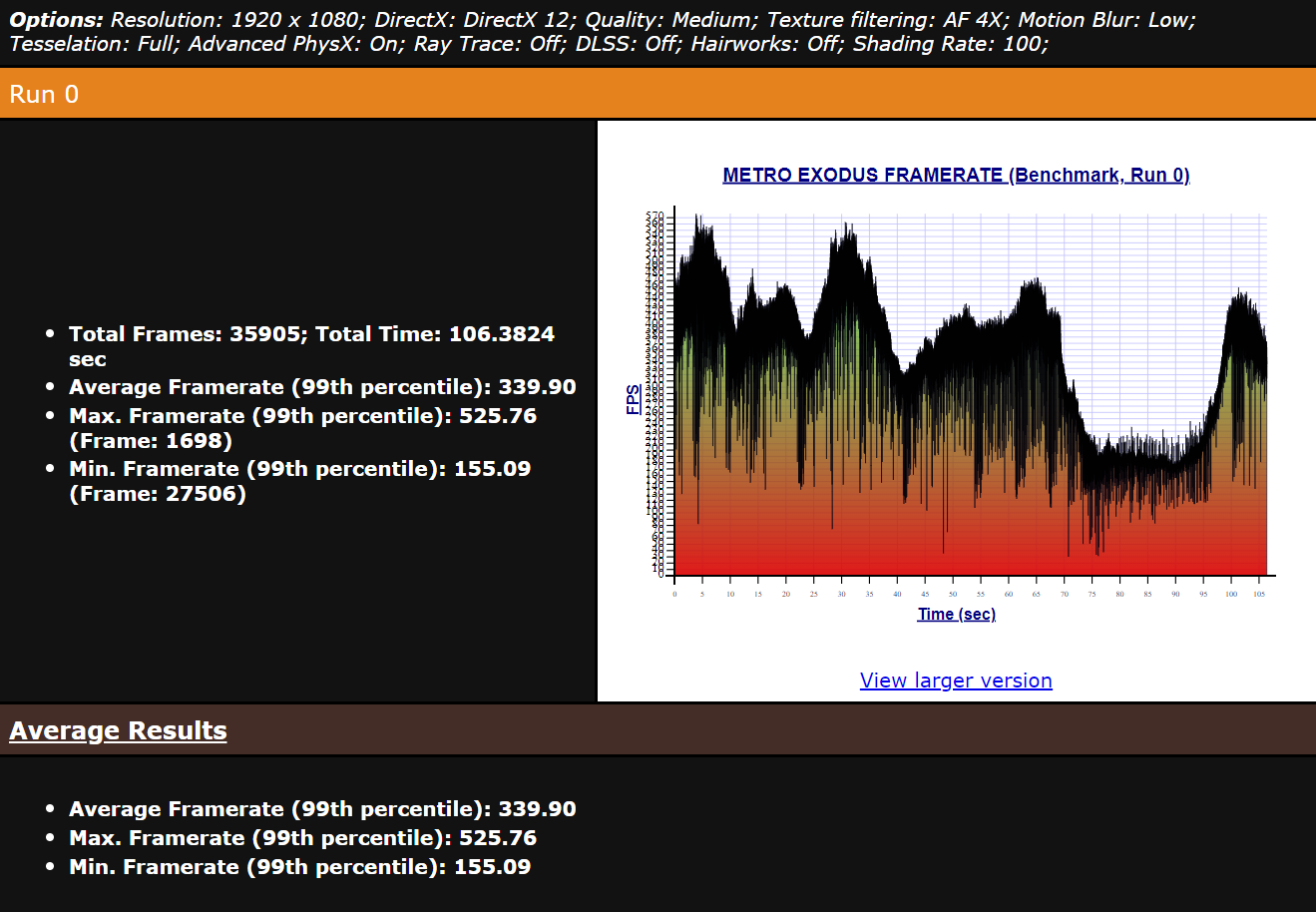

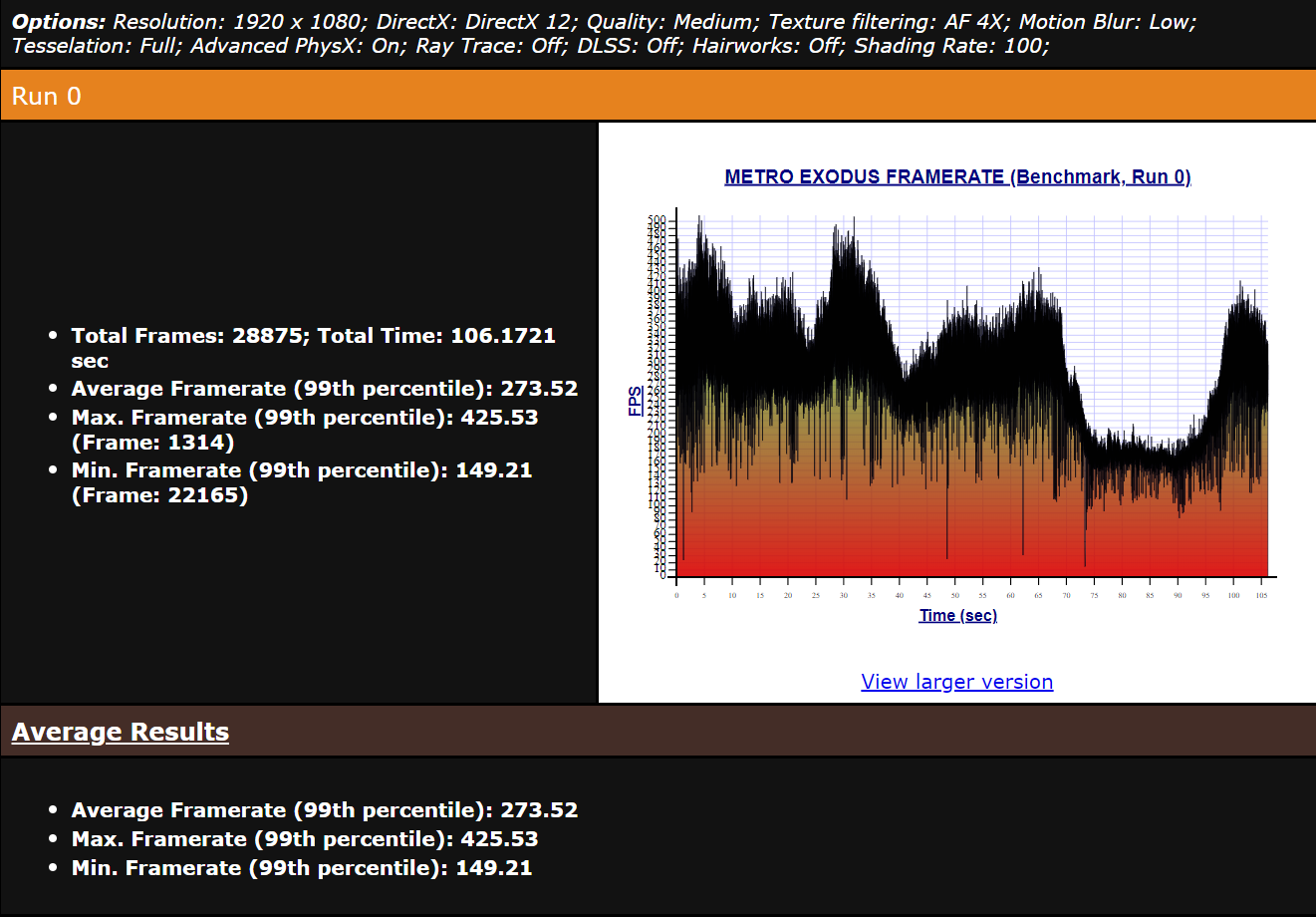

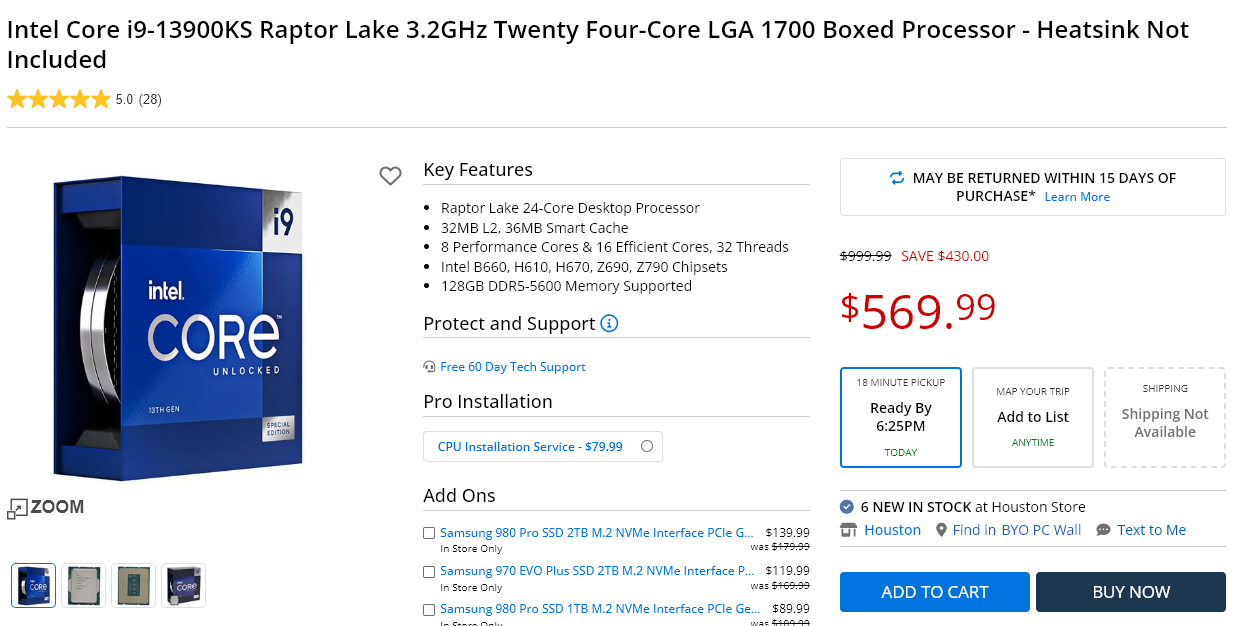

Intel's latest 14th Gen chips aren't a huge improvement over the 13th Gen in gaming performance, but a new Intel Application Optimization (APO) feature might just change that. From a report: Intel's new APO app simply runs in the background, improving performance in games. It offers impressive boosts to frame rates in games that support it, like Tom Clancy's Rainbow Six Siege and Metro Exodus. Intel Application Optimization essentially directs application resources in real time through a scheduling policy that fine-tunes performance for games and potentially even other applications in the future.

It operates alongside Intel's Thread Director, a technology that's designed to improve how apps and games are assigned to performance or efficiency cores depending on their performance needs. The result is solid performance gains in certain games, with one Reddit poster seeing a 200 fps boost in Rainbow Six Siege at 1080p. "Not all games benefit from APO," explained Intel VP Roger Chandler in a press briefing ahead of the 14th Gen launch. "As we test and verify games we will add those that benefit the most, so gamers can get the best performance from their systems."

Intel's New 14th Gen CPUs Get a Boost To Gaming Performance With APO Feature (theverge.com)

Posted by msmash on Wednesday October 25, 2023 @02:00PM from the moving-forward dept.