CAD4466HK, 2[H]4U, joined Jul 24, 2008, 2,735 messages


> So the rumored price hike was a total lie lol. 13600k looks like a beast at that price.

Not a total lie, it's up like $30 over the 12600k.

> Not a total lie, it's up like $30 over the 12600k.

I might be wrong, but Intel isn't talking retail, are they?

> I might be wrong, but Intel isn't talking retail, are they?

Yeah, those are tray prices.

I thought they were talking tray price, which means no middleman margins have been added yet. Perhaps I heard wrong and at some point they actually said MSRP.

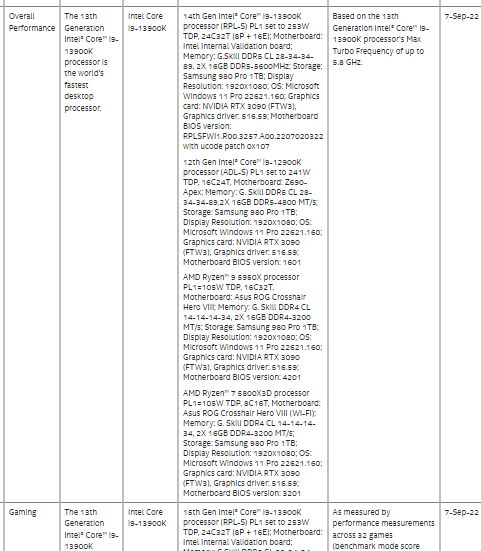

> Holy shit, this is an official slide! I thought some 3rd party made it to show where the AMD X3D would fall, but this is actual Intel bullshit graph shenanigans to hide the Raptor Lake losses, aaahahahaha!

You have a very strange definition of hide. Nothing is hidden there, it just takes more than a glance to see it. And losses? It loses to the 5800X3D in 3 games, 2 look like a tie or margin of error, and in 3 other games they seem to beat the 5800X3D. Only a die-hard hater would classify this as hiding. Obscuring; yeah, sure. But hiding is some next-level reach there.

> You have a very strange definition of hide. Nothing is hidden there, it just takes more than a glance to see it. And losses? It loses to the 5800X3D in 3 games, 2 look like a tie or margin of error, and in 3 other games they seem to beat the 5800X3D. Only a die-hard hater would classify this as hiding. Obscuring; yeah, sure. But hiding is some next-level reach there.
>
> This graph could have easily been used to obscure the fact that Intel wins half the time, just the same as it could be used to obscure that it loses half the time. It seems to me that Intel just doesn't want to bring attention to their competitor too much in a graph, whoop de doo.

Obscuring, yes. I will give them credit for including it, I guess. They probably figured they would get clobbered if they didn't. Yes, they only lose 2... but they tie in two others, and those wins, I mean look at the scale, they are barely wins. Then of course there is the fact that this is their flagship vs. a mid-range part. I know the 3D is an outlier until AMD releases a 3D part for the 7000s.

> You have a very strange definition of hide. Nothing is hidden there, it just takes more than a glance to see it. And losses? It loses to the 5800X3D in 3 games, 2 look like a tie or margin of error, and in 3 other games they seem to beat the 5800X3D. Only a die-hard hater would classify this as hiding. Obscuring; yeah, sure. But hiding is some next-level reach there.
>
> This graph could have easily been used to obscure the fact that Intel wins half the time, just the same as it could be used to obscure that it loses half the time. It seems to me that Intel just doesn't want to bring attention to their competitor too much in a graph, whoop de doo.

This is just like Nvidia trying to make their product look as good as possible. They're selling something, so it should come as no surprise.

> You have a very strange definition of hide. Nothing is hidden there, it just takes more than a glance to see it. And losses? It loses to the 5800X3D in 3 games, 2 look like a tie or margin of error, and in 3 other games they seem to beat the 5800X3D. Only a die-hard hater would classify this as hiding. Obscuring; yeah, sure. But hiding is some next-level reach there.
>
> This graph could have easily been used to obscure the fact that Intel wins half the time, just the same as it could be used to obscure that it loses half the time. It seems to me that Intel just doesn't want to bring attention to their competitor too much in a graph, whoop de doo.

Please, arguing semantics is Intel level here. Mixing bars which are favorable to them with little lines that are much less so is bullshit, whatever you want to call it.

It’s a weird graph. It took me a triple take to figure out what the hell is going on.

I'm back and forth on whether or not I think they should have even mentioned the X3D on that chart.

> If anything, this should be showing consumers that if you're just a gamer... flagship is a terrible waste of your money.

Always has been, since forever.

> This is just like Nvidia trying to make their product look as good as possible. They're selling something, so it should come as no surprise.

Nvidia doesn't have to try to make their product look good, the product speaks for itself. Whether you like them as a company or not, they consistently put out top-tier products and push the envelope in graphics over and over. Full stop.

> Intel is still going to sell these; people are still going to overbuy.

We see them a lot on the Internet, people buying 24 GB of VRAM or a 16-core CPU to game, but outside of the very rich, I would imagine people tend to look at reviews before going for expensive items.


> Always has been, since forever.
>
> Nvidia doesn't have to try to make their product look good, the product speaks for itself. Whether you like them as a company or not, they consistently put out top-tier products and push the envelope in graphics over and over. Full stop.

Haha, you should have read a bunch of the 40 series reveal thread. LOTS of people were claiming that Nvidia was outright misrepresenting performance numbers.

We could argue the merit of their pricing ad nauseam, but we would be wasting our breaths. They are in a unique situation and they are the ones calling the shots trying to steer the whole 3000/4000 series storm. All we can do is sit, watch and hope GPU pricing comes back to a sane level somewhere down the road.

> It's not a strange definition of hide, it IS the definition of hide. To hide is to conceal something, and when you conceal a result as a weird candlestick element on a bar graph, that is trying to hide it.
>
> It's just business as usual with Intel PR. They've always been petty, childish and dishonest in their PR slides going back decades. They wouldn't have to rig up stupid graphs to try and rationalize their products' existence if they didn't make terrible products in the first place /COUGH 11th gen, Netburst /COUGH.
>
> The pettiness, childishness and dishonesty in Intel's PR slides always seem to coincide with how well they're doing in the market. If they're releasing wastes of silicon like Netburst or the 11th gen, they lay it on thick. When they're somewhat even, you get nonsense charts like the one above. When they're far ahead, they generally ignore AMD entirely.
>
> Remember when AMD was going to a chiplet approach with Epyc, and Intel slandered them for "using glued together cores"? Intel had done the same thing as far back as the Pentium D, Core 2 Quad and numerous mobile chips.

Again, they aren't concealing anything. It's right there on the graph. Anyone that isn't just glancing at it for half a second can easily read it. Anyone stating otherwise is being disingenuous at best.

> Haha, you should have read a bunch of the 40 series reveal thread. LOTS of people were claiming that Nvidia was outright misrepresenting performance numbers.

It's always so strange to see how heated people get when their preferred choice of product is getting overshadowed by a competitor's, and just how far they'll go to defend some misguided sense of honor of a corporation that doesn't give a rat's ass about end users (none of them do).

> Again, they aren't concealing anything. It's right there on the graph. Anyone that isn't just glancing at it for half a second can easily read it. Anyone stating otherwise is being disingenuous at best.
>
> It's always so strange to see how heated people get when their preferred choice of product is getting overshadowed by a competitor's, and just how far they'll go to defend some misguided sense of honor of a corporation that doesn't give a rat's ass about end users (none of them do).

Nvidia is touting their DLSS 3 as if it is the only way to play. This draws attention away from the actual performance of the hardware, and the talking points and marketing are effectively trying to steer everything this way. Prices are stupid and all the shill sites are attempting to justify them.

> The future is really in integrated graphics on the CPU.

Why wouldn't Nvidia be one of the best-positioned companies in the world for when that happens?


> Why wouldn't Nvidia be one of the best-positioned companies in the world for when that happens?
>
> Tegra is one of the best-selling gaming SoCs of all time (by far the most outside cellphones?).
>
> They have SoCs in the self-driving car industry, game consoles, AI, the Jetson family. Their attempt to acquire Arm seems to show that, like Apple, AMD and Intel, they are well aware of that possibility and have a large presence in that world.
>
> A lot of Nvidia's advancements make a lot of sense in the places where SoCs make a lot of sense (an independent wireless VR set could benefit a lot from DLSS 3 and whatnot, same for a Switch or other handheld device).

Nvidia is not involved in driving the PC market forward with CPUs. You will never see their graphics in an Intel or AMD chip. Last I checked, Intel and AMD are really the only two companies for PCs these days.

> Your games have to be prerendered by Nvidia supercomputers and supported. It's meant to lock you into their products.

It became generic with DLSS 2.0, from my limited understanding; no need to train for a specific game anymore.

> Maybe they would be well positioned if they actually made CPUs; they don't. They failed to acquire ARM, they only license the technology.

ARM CPUs could become good enough that using them will be good enough.

> Nvidia is not involved in driving the PC market forward with CPUs. You will never see their graphics in an Intel or AMD chip. Last I checked, Intel and AMD are really the only two companies for PCs these days.
>
> Maybe they would be well positioned if they actually made CPUs; they don't. They failed to acquire ARM, they only license the technology.
>
> I'm not saying that Nvidia is going to disappear.
>
> The way I look at it is that Nvidia's closed ecosystem of DLSS stuff is a cheat. Your games have to be prerendered by Nvidia supercomputers and supported. It's meant to lock you into their products. DLSS is shit, because it's not real. DLSS 3.0 is being touted as only for 4000 series cards, while it can be run on 2000 and 3000 series boards as well. It's predatory business and one of these days that shit is gonna bite them in the ass.
>
> AMD might be an evil corporation, but at least their technologies are open to everyone. Their technologies don't require games to be pre-rendered on a supercomputer to gain a bonus in frames per second, and the technology is really close to DLSS in terms of quality... and it runs on everything.
>
> Karma's a bitch and it's coming for Nvidia one of these days.

AMD is only open because they don't have the manpower or market share to close it off. DLSS is a cheat in the same way that anti-aliasing is a cheat, or the SLI profiles of old were a cheat.

> It became generic with DLSS 2.0, from my limited understanding; no need to train for a specific game anymore.
>
> ARM CPUs could become good enough that using them will be good enough.
>
> It is really hard to predict, especially with what the console world makes possible in terms of breaking paradigms vs. the PC world. An Arm SoC a la Apple M1 with an Nvidia GPU, with some specialized hardware paths for frequent game engine functions instead of media (IK, collision detection, etc.), could be hard to beat.
>
> As for being shit because it is not real: not being real is exactly the point and exactly the strength, and it is something already used massively in audio and video, just not in real time.
>
> Running DLSS 3.0 on a 2xxx-3xxx card would have come with a different trade-off (the artifact level needed to avoid a giant increase in latency could have given the tech a bad name).

The big thing that the 2xxx-3xxx cards lack that DLSS 3.0 requires is the hardware optical flow accelerator. The 2000 and 3000 series cards do calculate optical flow, but they do it at a software level, so it doesn't necessarily have the needed performance to insert frames in the same way, and it is more prone to artifact glitches due to corners being cut to maintain the needed calculation speeds.

> It's always difficult to find benchmarks of World of Warcraft on brand new hardware. I was VERY surprised to see it on the Intel slide. Not only that, but it straight-up shows their new 13-series CPU getting stomped by the 5800X3D. And only 6% faster than regular Zen3? I doubt that Intel would exaggerate performance numbers for their competitor's product, so the numbers are likely real. Kudos to Intel for actually showing situations where their stuff is not the fastest.

Well, probably because there aren't many people who care to see how a CPU performs on a nearly 20-year-old game.

> we know the old Tegra stuff is no longer produced

Wasn't that a rumor, speculation about a refresh that never occurred? There were millions of Switch sales between the rumored stop time and now, and the Switch is entering the Black Friday and Christmas season with the same Tegra.

> Wasn't that a rumor, speculation about a refresh that never occurred? There were millions of Switch sales between the rumored stop time and now, and the Switch is entering the Black Friday and Christmas season with the same Tegra.

Who knows, it's all rumors, and Nintendo is famous for its tight lips. They have proven time and time again that they will burn your contract and fight a court battle with you over leaking their stuff before their announcements.

> Somewhat related to my previous post, I see Nvidia has snuck in that they are releasing a new Orin chip in 2023 that operates in the 5-10w range, to completely supplant the original Jetson Nano based on the Tegra stuff.
>
> What is interesting here is the Jetson Nano is famous for basically being the guts of the Nintendo Switch (fewer cores at a lower clock). We know the old Tegra stuff is no longer produced, so I wonder if this could possibly be the guts of the "Switch Pro" that has been rumored for 2023.

Why all this Nvidia and Arm stuff in a thread about Intel's x86 CPUs? Tegra is not one but several versions of SoC, as is Snapdragon (phones, Amazon Fire Stick, Oculus VR, etc.), which is among those Nvidia primarily would compete against in the Arm space (Qualcomm, Samsung, Apple, MediaTek, etc.).

> Why all this Nvidia and Arm stuff in a thread about Intel's x86 CPUs? Tegra is not one but several versions of SoC, as is Snapdragon (phones, Amazon Fire Stick, Oculus VR, etc.), which is among those Nvidia primarily would compete against in the Arm space (Qualcomm, Samsung, Apple, MediaTek, etc.).
>
> Nvidia will probably come with a good SoC at a later stage, primarily built for gaming; I am almost certain of that (perhaps even with their Vulkan-based "rosetta"). But Nvidia is a sidenote in Intel's keynote besides their new GPUs. They have no x86 SoC and are already basically being squeezed out of the low-end and entry-level market, now that Intel has an "APU" that can do some light gaming (Iris Xe). And looking at Intel's past, they would probably squeeze Dell, HP, Lenovo and other businesses to bundle Intel's Arc GPUs with their CPUs in laptops and prebuilts instead of Nvidia. Intel got sued and lost in the past against AMD, but they might do the same towards Nvidia now that they have their own GPUs and perhaps have learned how not to lose if they get sued again.
>
> So, concerning Nvidia in this Intel keynote, the only thing I gather from it is that Intel is pitting their GPU against Nvidia in the slides when it comes to price. The gauntlet has been thrown at Nvidia. The only relevance a potential Nvidia SoC has in this is that Intel now has an incentive to screw Nvidia out of the high-volume low-end to mid-range market when it comes to prebuilts and laptops, so it might be a chicken-and-egg situation. Does Nvidia build their own SoC and release it to the laptop and prebuilt market before or after they get screwed and squeezed out by Intel... What will Intel do to gain market share for their own Arc GPUs, you think?

Almost guaranteed that Intel sells the OEMs their Arc GPUs for dirt: not at a discount that would get them in trouble, but at a loss, making it clear they are selling them at a loss to gain market share.

> World of Warcraft has had 8 expansions, with the 9th coming in a couple months. Every expansion has brought significant improvements and changes to the game engine. When the game was released you could play it on a 3dfx Voodoo3 or an Nvidia TNT2. Now it's a DirectX 12 game that supports ray tracing. To imply that it's the same as it was when it was released just shows that you have no idea about the game. It is an extremely CPU-dependent game, so yes, CPU performance absolutely matters. It was a noticeable upgrade in WoW when I swapped my 3900X for a 5900X. Being that it's still one of the most popular MMO games out today, I'd say that there are plenty of people who care.

You can polish a turd 6 ways to Sunday, but that game never looked good anyhow. Plenty of people play Overwatch, but reviewers don't waste time on it for similar reasons.

> Do you actually have anything to contribute to this thread beyond being a shit disturber? Just because you don't care for a particular game doesn't mean it's not important enough to benchmark. Get over yourself.

I'm just trying to explain to you why no reviewers are putting up WoW benchmarks.

> Your "explanation" was a thinly-veiled condescending attempt at trolling. Intel seemed to think WoW was important enough to benchmark, despite not even showing their own products in a particularly positive light. And WoW benchmarks are not actually that rare, they just usually tend to coincide with the release of a new expansion, not necessarily with the release of new hardware, as in this case. I expect to see quite a few benchmarks when Dragonflight is released in November.

No, it was not trolling, quit being so sensitive. I played WoW at release and for a year or so afterward; I don't hate the game or anything like that. I'm also looking forward to playing OW2 some. I'm just giving my opinion that neither are really worthy benchmarks at this point. I'd much rather see newer titles, with perhaps the one old-school title like CS:GO thrown in there, even though it's not really very relevant anymore.