pututu

[H]ard DC'er of the Year 2021

- Joined: Dec 27, 2015

- Messages: 3,090

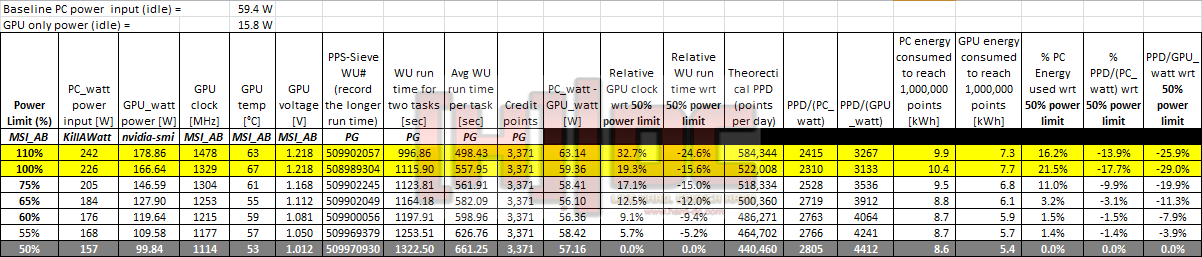

Not much has been discussed here about GPU (BOINC) crunching power efficiency, so I thought it might be useful to share a study I did over this Xmas holiday. What I found (no surprise at all) is that the best power-point efficiency (PPD/watt) and the lowest energy consumption (kWh) to reach a given points target occur when the GPU is underclocked and undervolted. Note: YMMV depending on the PC system and GPU card. Even on the same card, I think a different GPU BIOS version may yield different results, because the BIOS governs how the GPU throttles when a "power limit" is imposed on the card.

Purpose: Take a GPU card and find the "power limit" that yields the best power-point efficiency and low energy consumption. In this study I used the PrimeGrid GFN-18 project as a test case, since it yields fairly consistent points per work unit (WU) and puts heavy stress on the GPU card but less on the CPU and motherboard.

Test setup:

GPU card - MSI GTX 970 Gaming 4G

CPU - Intel Core i3 530 (running @ 140 BCLK, or 3.08GHz)

MB - Gigabyte GA-H55M-S2H

PSU - Seasonic G-550 (SSR-550RM, Gold rated)

Memory - 2 x 2GB Corsair XMS3 DDR3 (running @1333MHz)

SSD - PNY CS1311 120GB SATA III

Case - Compaq Presario 5BW130 case (do I really need to mention this?)

Pictures of the test setup are below. This is not a beast machine. Apart from the PSU and SSD, I have had the components for many years; the Compaq case I have had for 16 years!

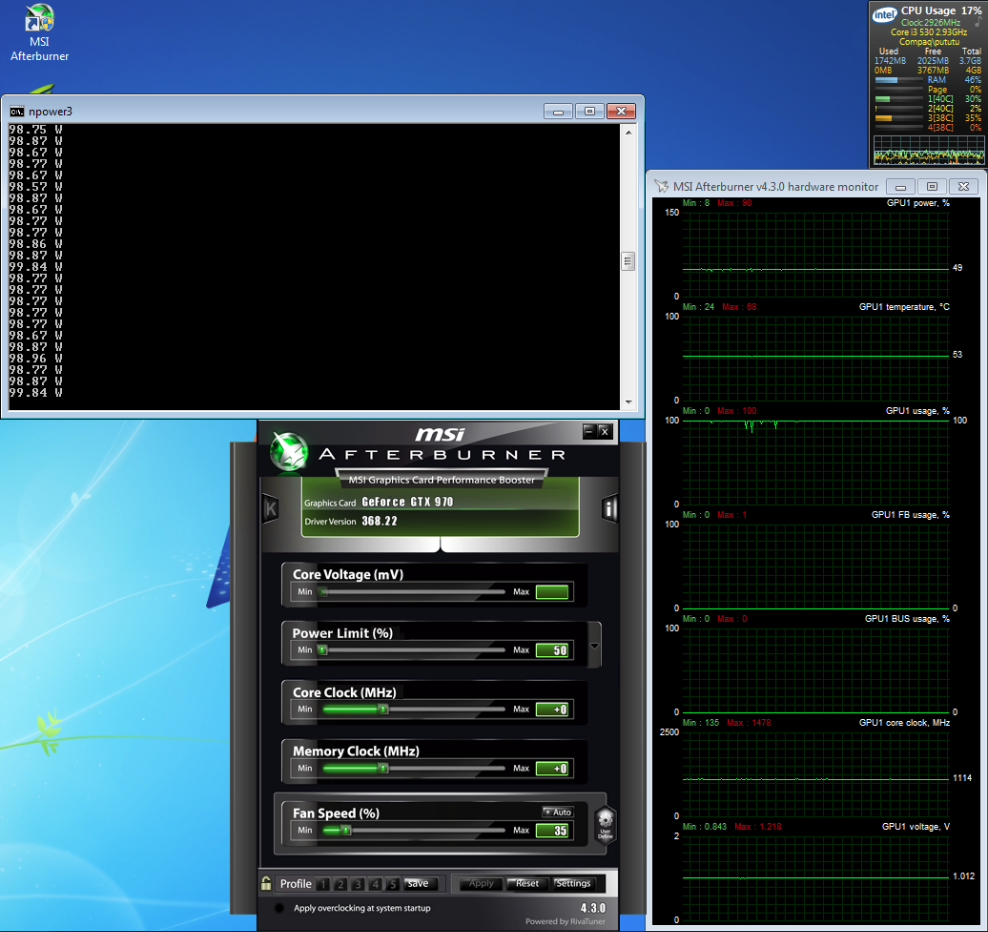

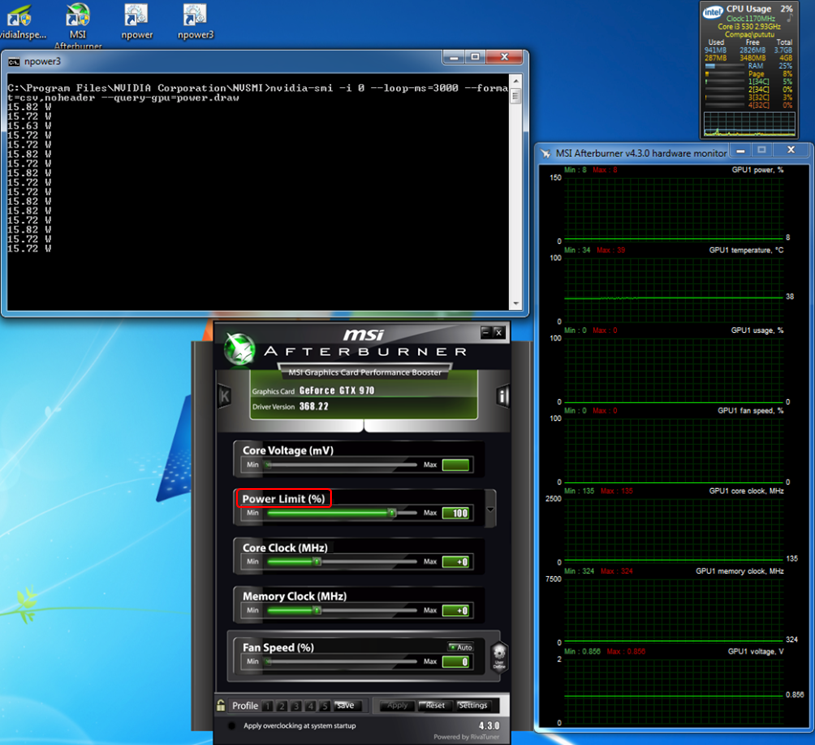

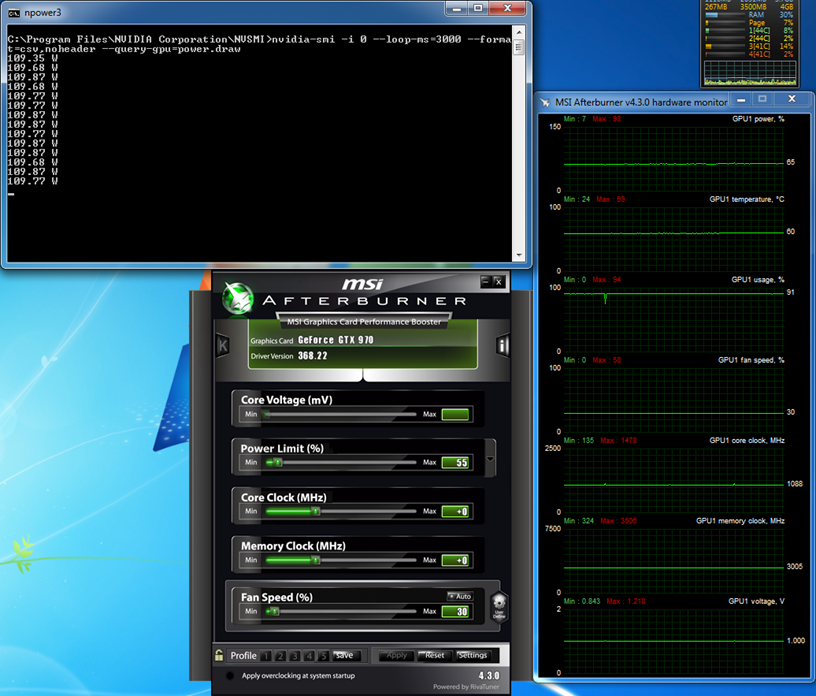

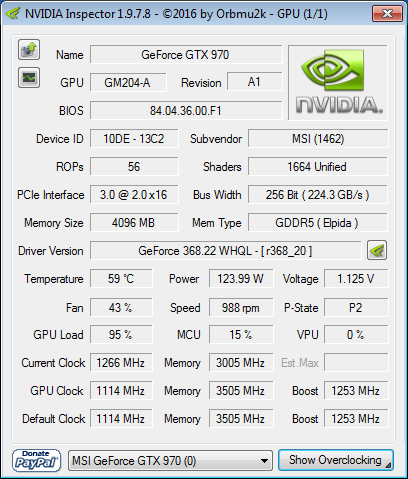

Other tools needed: MSI Afterburner 4.3.0, Nvidia Inspector 1.9.7.8, a Kill-A-Watt meter, and the nvidia-smi program that is installed with the Nvidia driver. It is typically located in the C:\Program Files\NVIDIA Corporation\NVSMI folder, and there is even a manual for it. I used nvidia-smi rather than Nvidia Inspector to record GPU power consumption.
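For anyone who wants to log GPU power the same way, nvidia-smi can write periodic samples to a CSV file, for example `nvidia-smi --query-gpu=timestamp,power.draw --format=csv,noheader,nounits -l 1 > gpu_log.csv`. Which query fields a card reports depends on the card and driver, so check the manual for your version. The Python sketch below is not the exact procedure used in this study; it is a minimal helper (the name `average_power_w` is hypothetical) that averages the power column of such a log, assuming power.draw is the second column.

```python
import csv
import io
from statistics import mean


def average_power_w(csv_text: str, column: int = 1) -> float:
    """Average the power.draw column of an nvidia-smi CSV log.

    Assumes rows like '2016/12/27 10:00:00.000, 142.35' produced with
    --format=csv,noheader,nounits. Blank rows and non-numeric values
    (e.g. '[Not Supported]') are skipped.
    """
    readings = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) <= column:
            continue  # blank or short line
        try:
            readings.append(float(row[column].strip()))
        except ValueError:
            continue  # value the driver could not report
    if not readings:
        raise ValueError("no numeric power readings found")
    return mean(readings)
```

Skipping `[Not Supported]` rows, rather than counting them as zero, keeps a driver hiccup from dragging the average down.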

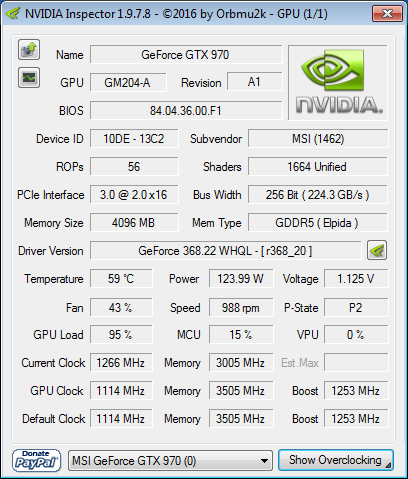

Baseline and GPU information: GPU BIOS version 84.04.36.00.F1 at stock settings, Nvidia driver 368.22.

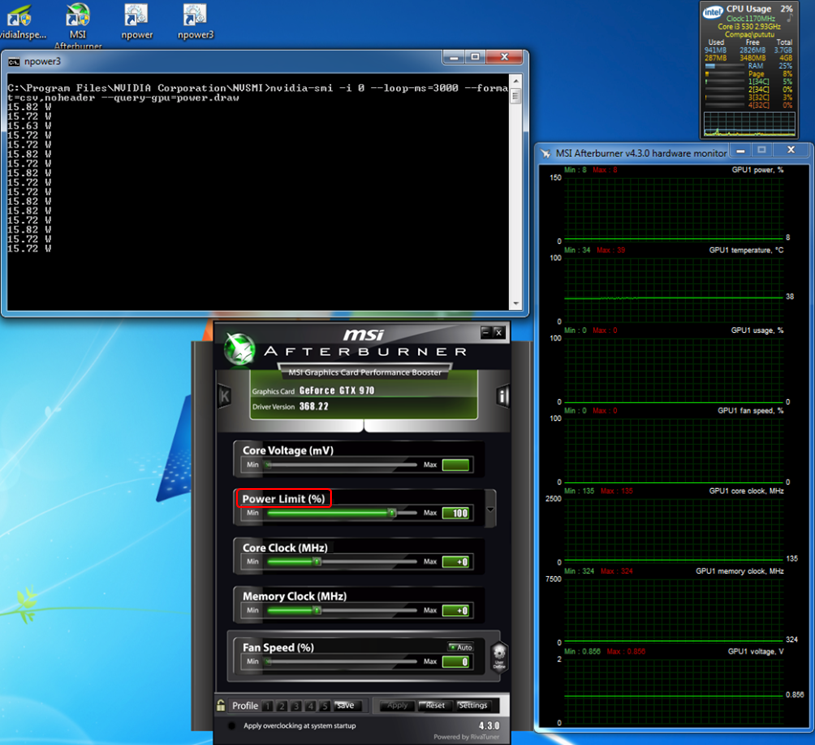

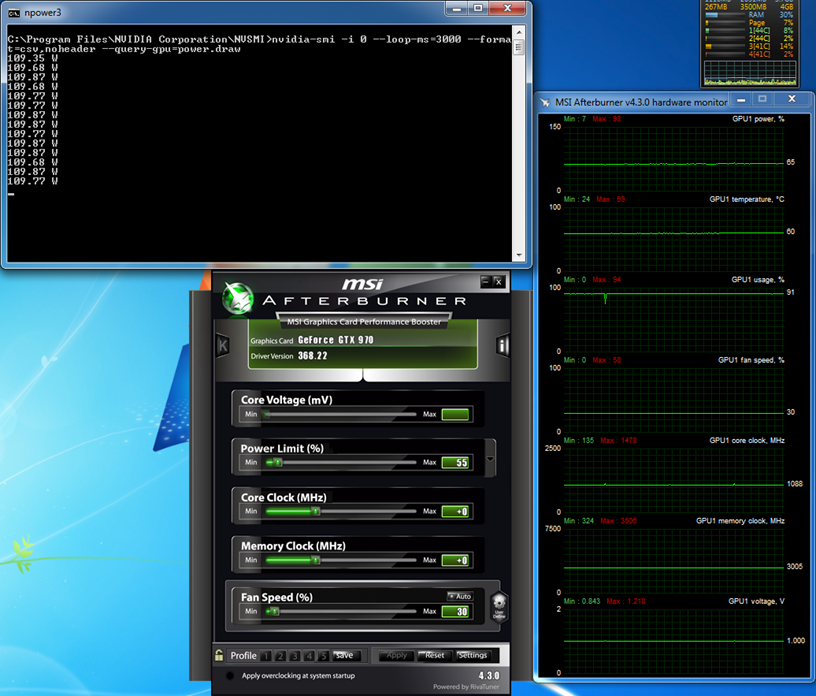

At idle, the PC draws 57.20W at the wall (Kill-A-Watt) and the GPU draws 15.82W (nvidia-smi). Let's call these PC_watt and GPU_watt respectively. That means the PC system minus the GPU consumes 57.20 - 15.82 = 41.38W at idle, which I think is quite decent for a non-beast system. The "Power Limit" is set with either MSI Afterburner or Nvidia Inspector; both set the same "Power Limit". Below are the actual pictures at idle. Note that the lowest GPU voltage this card can reach is 0.856V. If the GPU could run at 0.856V while crunching, it would in theory give the best possible efficiency. I think this floor is set by the BIOS and is somewhat limited by the semiconductor material and process.
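The idle arithmetic above can be written as a tiny helper (the name `rest_of_system_w` is hypothetical). One caveat: the Kill-A-Watt reads AC power at the wall, including PSU conversion losses, while nvidia-smi reports only the card's own board power, so the difference is an approximation of what the rest of the system draws.

```python
def rest_of_system_w(pc_watt: float, gpu_watt: float) -> float:
    """Wall power (Kill-A-Watt) minus GPU board power (nvidia-smi).

    The wall figure includes PSU losses, so this difference also absorbs
    the PSU loss caused by the GPU's own draw; treat it as approximate.
    """
    if gpu_watt > pc_watt:
        raise ValueError("GPU power cannot exceed total wall power")
    return pc_watt - gpu_watt


print(f"{rest_of_system_w(57.20, 15.82):.2f} W")  # 41.38 W
```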

Test Results:

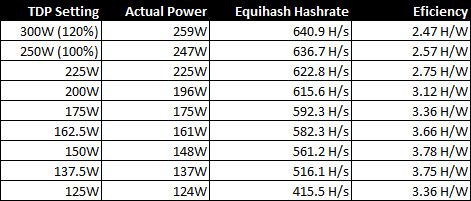

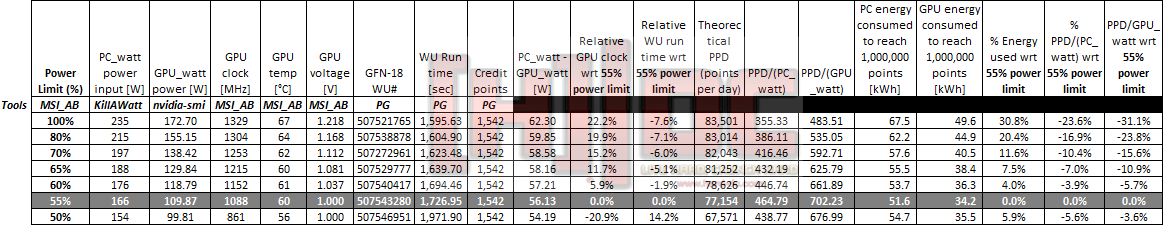

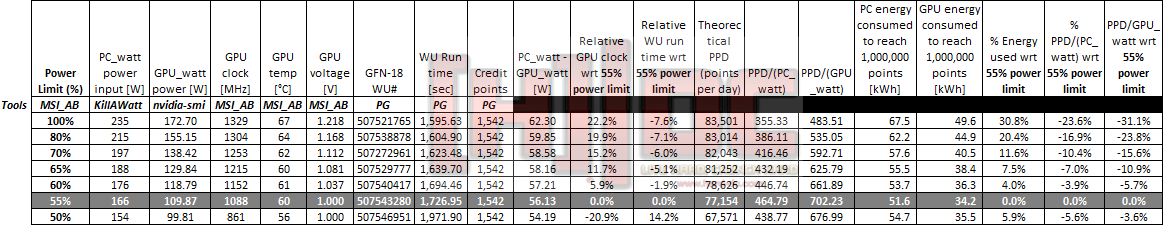

I recorded results at power limits of 100%, 80%, 70%, 65%, 60%, 55% and 50%. I can't figure out whether there is a way to reduce the power limit below 50%; if anyone knows, I would like to hear from you. I found this in nvidia-smi. In this testing, I let the GPU BIOS (whatever the card manufacturer decided) automatically determine the voltage and GPU clock. I'm not sure whether there is a way to manually reduce the voltage (down to this card's transistor operating limit of 0.856V). Below are the results based on PrimeGrid GFN-18 work units.
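My guess (not verified in this study) is that the 50% floor is the card's minimum power limit. nvidia-smi can show those limits with `nvidia-smi -q -d POWER` (default, min and max power limit), and `nvidia-smi -pl <watts>` sets an absolute limit in watts, needs administrator rights, and is not allowed on every GeForce card/driver. The Python sketch below, a hypothetical helper, converts a percentage setting into watts and checks it against the range the card reports, assuming 100% means the card's default limit.

```python
def power_limit_watts(percent: float, default_limit_w: float,
                      min_limit_w: float, max_limit_w: float) -> float:
    """Convert a percentage power limit into watts and range-check it.

    Assumes 100% corresponds to the card's default limit and that the
    driver rejects values outside [min_limit_w, max_limit_w].
    """
    watts = default_limit_w * percent / 100.0
    if not min_limit_w <= watts <= max_limit_w:
        raise ValueError(
            f"{percent}% = {watts:.1f} W is outside the "
            f"{min_limit_w:.1f}-{max_limit_w:.1f} W range the card reports"
        )
    return watts
```

For example, with a placeholder 200 W default and 100 W minimum, 55% maps to 110 W and any setting under 50% would be rejected; plug in the numbers your own card reports.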

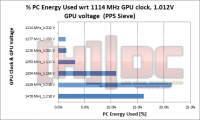

The optimum power-point efficiency and lowest PC energy consumption occur when the power limit is set around 55%. At 50% power limit, the GPU clocks down from 1088MHz to 861MHz, a drop of about 21% in clock speed, while the GPU voltage stays at 1.0V. Again, this might be limited by the GPU BIOS, perhaps in combination with limitations of other components.
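Picking the optimum from a sweep like this comes down to computing PPD per watt at each power-limit setting and taking the maximum. A minimal Python sketch, assuming each row holds the power limit, PPD and wall power read off the results table (`SweepPoint` and the function names are hypothetical), plus the clock-drop percentage quoted above:

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SweepPoint:
    limit_pct: int   # power limit setting, in percent
    ppd: float       # points per day measured at this setting
    pc_watt: float   # wall power while crunching (Kill-A-Watt), in watts

    @property
    def ppd_per_watt(self) -> float:
        return self.ppd / self.pc_watt


def best_setting(points: Sequence[SweepPoint]) -> SweepPoint:
    """Return the sweep point with the highest PPD per watt."""
    return max(points, key=lambda p: p.ppd_per_watt)


def percent_drop(old: float, new: float) -> float:
    """Percentage decrease from old to new, e.g. a GPU clock change."""
    return (old - new) / old * 100.0


# Clock drop quoted in the text: 1088 MHz -> 861 MHz
print(round(percent_drop(1088, 861), 1))  # 20.9
```

Swapping `pc_watt` for the nvidia-smi GPU power gives the GPU-only PPD/watt instead of the whole-system figure.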

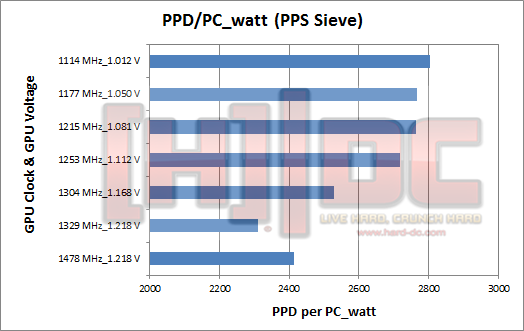

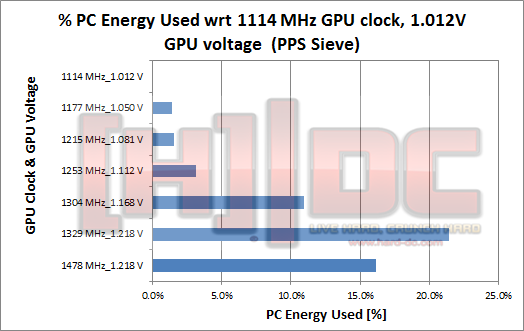

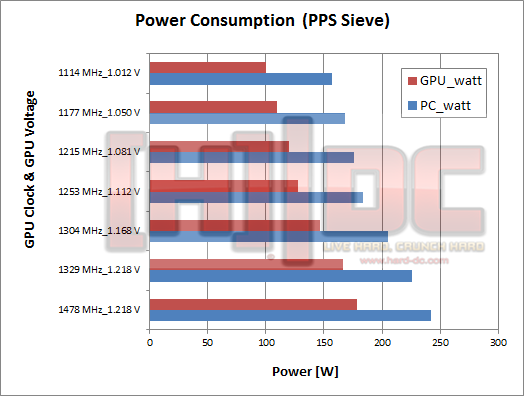

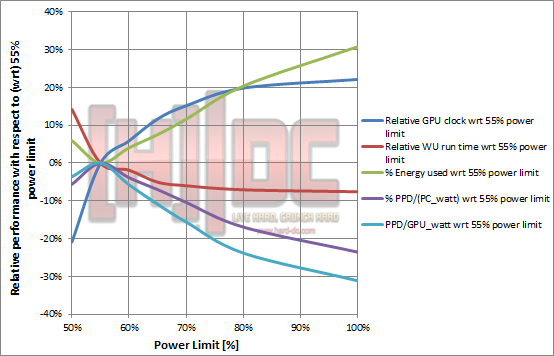

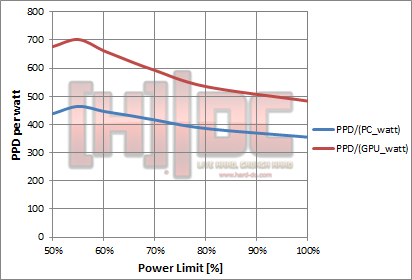

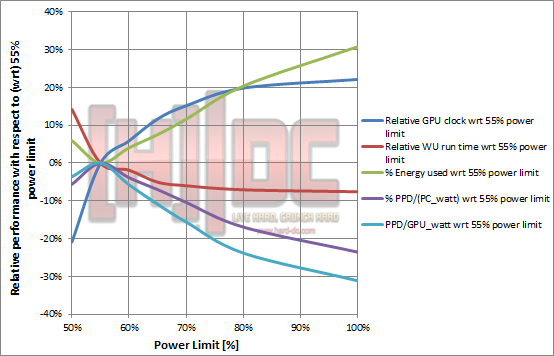

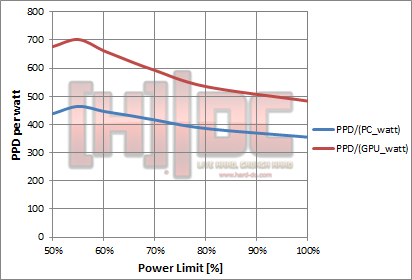

The first graph below shows various metrics relative to their values at the optimum 55% power limit. The second graph shows PPD (points per day) per watt.
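Normalizing each metric to the 55% row, as in the first graph, is a simple ratio. A sketch assuming the results are stored as a list of dicts keyed by hypothetical column names such as `limit_pct` and `ppd`:

```python
def relative_to(rows, baseline_pct, metric):
    """Express `metric` for every row as a ratio to the baseline row.

    rows: list of dicts such as {"limit_pct": 55, "ppd": ..., "pc_watt": ...}
    baseline_pct: the power-limit setting treated as 1.0 (55% in this study)
    """
    base = next((r for r in rows if r["limit_pct"] == baseline_pct), None)
    if base is None:
        raise ValueError(f"no row with limit_pct == {baseline_pct}")
    return {r["limit_pct"]: r[metric] / base[metric] for r in rows}
```

Calling it once per metric (PPD, PC watts, GPU watts, clock) gives the series for a relative-performance plot.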

Below is the screenshot of the 55% power limit setup.

Discussions and Conclusions:

IIRC this may not apply to the FAH project, where points are awarded based on TPF (time per frame), so there you may need to increase both GPU clock speed and power to achieve the best possible PPD per watt. Because FAH project points vary, PG GFN-18 is a better choice for this study. In general YMMV, but in my case 55% appears to be the optimum for GFN-18. At this setting, earning 1M BOINC points consumes about 51.6 kWh at the wall (PC_energy), or 34.2 kWh for the GPU alone (GPU_energy). For those in states with high power bills, I hope this study provides useful insight into GPU crunching efficiency.
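The energy-per-points figures follow from steady power draw multiplied by the time needed to earn the points: kWh = watts × (points / PPD) × 24 / 1000. The Python sketch below (hypothetical helper names) applies that formula and turns kWh into a bill estimate; the $0.20/kWh tariff is purely a placeholder, so substitute your own rate.

```python
HOURS_PER_DAY = 24


def kwh_for_points(watts: float, ppd: float,
                   target_points: float = 1_000_000) -> float:
    """Energy (kWh) to earn target_points at a steady `watts` and rate `ppd`."""
    days = target_points / ppd
    return watts * days * HOURS_PER_DAY / 1000.0


def energy_cost(kwh: float, price_per_kwh: float) -> float:
    """Electricity cost for `kwh` at a flat tariff (no tiers or time-of-use)."""
    return kwh * price_per_kwh


# Placeholder tariff of $0.20/kWh applied to the 51.6 kWh PC_energy figure
print(f"${energy_cost(51.6, 0.20):.2f} per 1M points")  # $10.32 per 1M points
```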


![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)