_mockingbird

Gawd

- Joined

- Feb 20, 2017

- Messages

- 1,017

A bit disingenuous since I have yet to see a single game that has these issues when playing at 1080p at medium settings. You know, where these GPUs are really meant to compete.

In games such as Assassin's Creed Shadows and Horizon Zero Dawn Remastered, insufficient VRAM causes significant performance drops.

It's no wonder that NVIDIA doesn't want reviewers reviewing the GeForce RTX 5060 Ti 8GB.

That is a terrible argument.

A bit disingenuous since I have yet to see a single game that has these issues when playing at 1080p at medium settings. You know, where these GPUs are really meant to compete.

Honestly, the performance reviews of lower-spec GPUs make me really miss [H] reviews, which would conclude with the resolution and settings gamers could expect to use for acceptable performance. All these tech tubers give me the vibes of the meme below. It's almost like they intentionally ignore the fact that a Prius-level GPU isn't designed to compete in a quarter-mile drag race.

View attachment 723997

The cache only helps with memory bandwidth, not the lack of memory.

Looks like it actually does well. Maybe games are better able to utilize the cache?

That said, 5060ti should be 16GB only with the 5060 getting the 2 vram size options.

I'm really hoping the 5050(ti?) gets 96 bit gddr7 with 12GB. Should make for a great budget card.

I don't think that will happen for the life of the 50 series, but I could see that until supply catches up.

What's sad is that Nvidia made the 16GB card specifically for reviews, and it won't be available after the initial launch.

The 8GB card will be all that is available for the rest of the life of the 5000 series.

Leaks say the 5050 will have 8GB of GDDR6 on a 128-bit bus.

Looks like it actually does well. Maybe games are better able to utilize the cache?

That said, 5060ti should be 16GB only with the 5060 getting the 2 vram size options.

I'm really hoping the 5050(ti?) gets 96 bit gddr7 with 12GB. Should make for a great budget card.

Absolutely no argument from me. The tests at both 1440p and 4K are indeed important, but let's stop bullshitting here. The tech tubers have all prioritized performance comparisons without a single thought about the type of people who actually buy XX60 class cards. They are like a dog with a bone, continuously making moronic comparisons that have little to nothing to do with actual performance at acceptable resolutions and game settings, and go on and on about, "The XX60 does terribly against the previous generation XX70 at 4K with highest in-game settings and path tracing turned on, as shown in our graphs". There is not a single game that runs into VRAM issues at 1080p with medium settings on even a mobile XX60 GPU with only 8GB of VRAM. NOT ONE. Every single bullshit video they've shown of the issue is at 1440p/4K with the highest settings possible to FORCE an issue that not a single user of a XX60 class card ever encounters. And by the time it does become an issue, the lifespan of said cards is well past any reasonable person's expectations.

That is a terrible argument.

Today, 8GB VRAM is already insufficient. Right now, you may be able to tweak some settings to get it to work, but games are becoming more VRAM demanding and insufficient VRAM will become more and more of an issue in the future.

View attachments 723943 to 723962

Source: https://www.bilibili.com/video/BV1TWoMYUEGW/

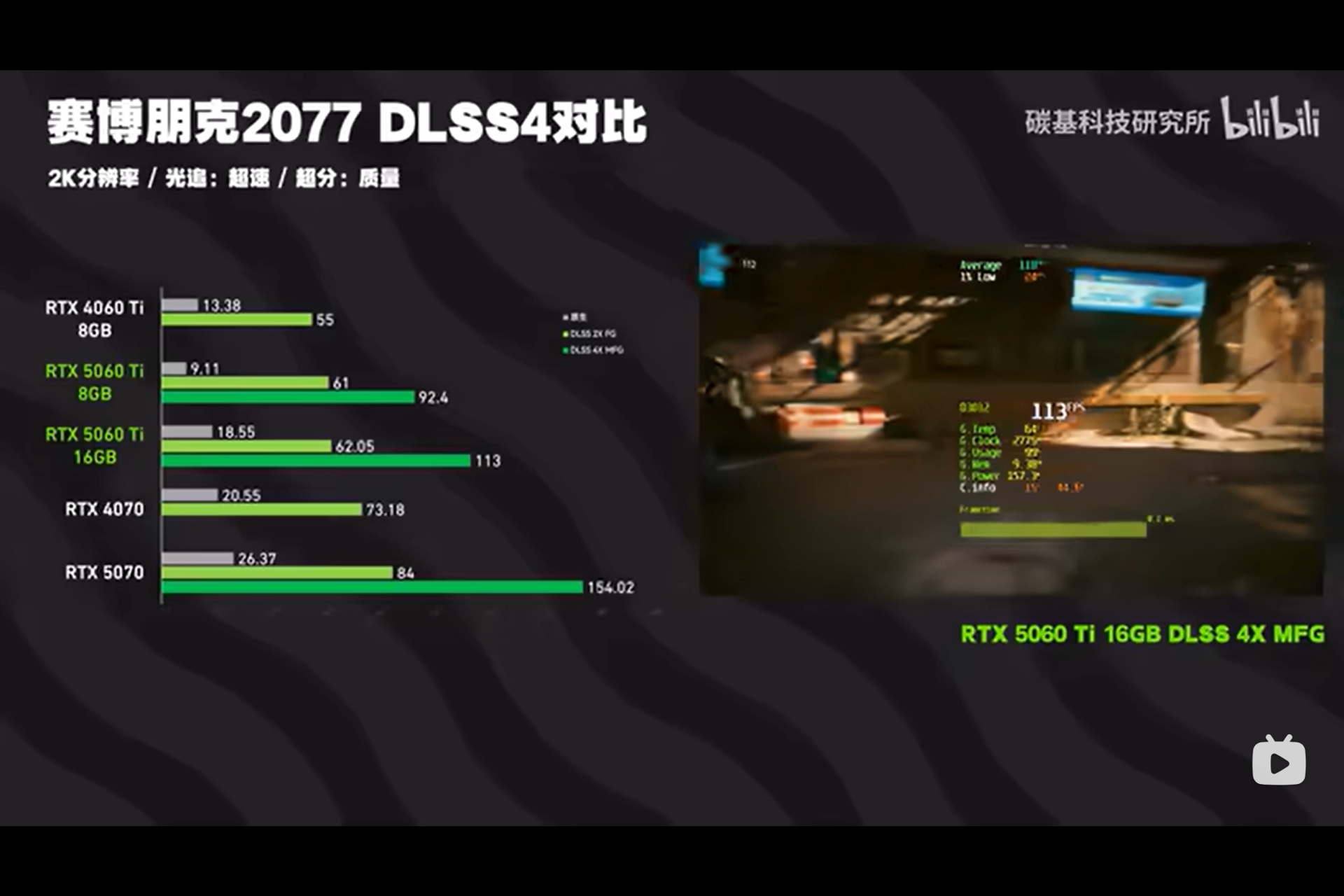

Can't they make up for the performance problems by using DLSS 4 and 4x MFG?

For the most part yes, but wouldn't you need less than 1 GB of RAM if you could have theoretically infinite bandwidth? I.e., the VRAM stores bulk texture data across several frames.

The cache only helps with memory bandwidth, not the lack of memory.

Yeah, the switch to GDDR7 has been a bummer since it never got a capacity boost while carrying over the 128-bit trash for 60 Ti class cards. 12 GB would have been perfect for this performance level, and I can't imagine a 192-bit bus of GDDR6 being much more expensive.

I don't think that will happen for the life of the 50 series, but I could see that until supply catches up.

I'm not sure what the bottleneck is making higher end cards as scarce as they are, but some people seem to think GDDR7 availability is part of the reason. If there is a shortage of GDDR7 it'll discourage the production of 16GB 5060Tis. Same chips as a 5070Ti, so why put them on a 16GB 5060Ti when you can make a 5070Ti or two 8GB 5060Tis? Or slap two sets on a 5090 and charge $3k for it?

It didn't happen with the 4060Ti for the life of the 40 series. I didn't pay much attention, but IIRC last summer the price gap between the 8GB and 16GB models had narrowed to about $50 from $100, mostly due to the 16GB version dropping in price below MSRP. They launched with an MSRP of $400 and $500, respectively, and IIRC the 16GB 4060Ti dropped to about $450. MSRP on the 5060Ti is lower than that at $429 for the 16GB and $379 for the 8GB.
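To make the price arithmetic in the post above concrete, here is a small sketch of the 16GB premium per card and per extra gigabyte. The figures are the ones quoted in the post. The 4060 Ti street prices are the poster's recollection ("IIRC"), and the $400 used for the 8GB 4060 Ti at that point is an assumption implied by the "about $50" gap, not a quoted price.

```python
# Prices in USD as quoted in the post. The "last summer" row is recalled by the
# poster; its 8GB value is an assumption inferred from the stated ~$50 gap.
PRICES = {
    "4060 Ti launch MSRP": {"8GB": 400, "16GB": 500},
    "4060 Ti last summer (recalled)": {"8GB": 400, "16GB": 450},
    "5060 Ti MSRP": {"8GB": 379, "16GB": 429},
}

EXTRA_GB = 16 - 8  # additional VRAM on the larger model


def premium(prices):
    """Return (price gap, price gap per extra GB) between the 16GB and 8GB models."""
    gap = prices["16GB"] - prices["8GB"]
    return gap, gap / EXTRA_GB


for name, prices in PRICES.items():
    gap, per_gb = premium(prices)
    print(f"{name}: ${gap} more for 16GB, about ${per_gb:.2f} per extra GB")
```

On these numbers the 5060 Ti launches with the same roughly $50 gap that the 4060 Ti only reached after the 16GB model fell below MSRP.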

Leaks say the 5050 will have 8GB of GDDR6 on a 128-bit bus.

Personally I think it would have been better if NVidia only used GDDR7 on the 5070Ti, 5080 and 5090, then given the 5070 16GB GDDR6 on a 256-bit bus, 5060Ti & 5060 12GB GDDR6 on 192-bit, and the 5050 gets the 8GB/128-bit setup. GDDR7 is apparently a good bit more expensive than GDDR6. It might also help with availability. Out of the 50 series cards that launched before the 5060Ti, the only one that's relatively easy to get without getting looted is the 12GB 5070.
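The bus-width and capacity pairings suggested above can be sanity-checked with a little arithmetic. This is only a sketch under two stated assumptions: each GDDR6/GDDR7 package occupies 32 bits of the bus, and a "clamshell" layout puts two packages on each 32-bit slice. The function names are made up for illustration.

```python
CHIP_INTERFACE_BITS = 32  # assumption: one memory package per 32 bits of bus width


def chip_count(bus_width_bits, clamshell=False):
    """Number of memory packages needed to populate a bus of the given width."""
    if bus_width_bits % CHIP_INTERFACE_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHIP_INTERFACE_BITS
    # Assumption: clamshell mode doubles the packages per 32-bit slice.
    return chips * 2 if clamshell else chips


def gb_per_chip(total_gb, bus_width_bits, clamshell=False):
    """Capacity each package must have to reach total_gb on this bus."""
    return total_gb / chip_count(bus_width_bits, clamshell)


# Configurations proposed in the thread: (label, total GB, bus width in bits)
proposals = [
    ("5070, GDDR6", 16, 256),
    ("5060 Ti / 5060, GDDR6", 12, 192),
    ("5050, GDDR6", 8, 128),
    ("5050 (Ti?), GDDR7", 12, 96),
]

for label, total_gb, bus in proposals:
    print(f"{label}: {chip_count(bus)} chips of {gb_per_chip(total_gb, bus):g} GB each")
```

Under these assumptions, the three GDDR6 proposals all work out to 2 GB packages, while 12GB on a 96-bit bus would need either 4 GB packages or a clamshell layout of six 2 GB packages. The same arithmetic gives eight packages for a 16GB card on a 128-bit clamshell layout versus four for an 8GB card, which is the "two 8GB 5060Tis" trade-off mentioned earlier.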

My 1080 can play most games at medium settings and 1080p... What's your point?

A bit disingenuous since I have yet to see a single game that has these issues when playing at 1080p at medium settings. You know, where these GPUs are really meant to compete.

Honestly, the performance reviews of lower-spec GPUs make me really miss [H] reviews, which would conclude with the resolution and settings gamers could expect to use for acceptable performance. All these tech tubers give me the vibes of the meme below. It's almost like they intentionally ignore the fact that a Prius-level GPU isn't designed to compete in a quarter-mile drag race.

View attachment 723997

DLSS and Frame Generation increase VRAM usage, so insufficient VRAM becomes an even bigger issue.

Can't they make up for the performance problems by using DLSS 4 and 4x MFG?

You inadvertently revealed to everyone that you didn't look at the review.

Absolutely no argument from me. The tests at both 1440p and 4K are indeed important, but let's stop bullshitting here. The tech tubers have all prioritized performance comparisons without a single thought about the type of people who actually buy XX60 class cards. They are like a dog with a bone, continuously making moronic comparisons that have little to nothing to do with actual performance at acceptable resolutions and game settings, and go on and on about, "The XX60 does terribly against the previous generation XX70 at 4K with highest in-game settings and path tracing turned on, as shown in our graphs". There is not a single game that runs into VRAM issues at 1080p with medium settings on even a mobile XX60 GPU with only 8GB of VRAM. NOT ONE. Every single bullshit video they've shown of the issue is at 1440p/4K with the highest settings possible to FORCE an issue that not a single user of a XX60 class card ever encounters. And by the time it does become an issue, the lifespan of said cards is well past any reasonable person's expectations.

Imagine crying about a VRAM issue you won't encounter on a 5060 until the year 2037 or some other bullshit. Didn't think I'd see the day when [H] members forgot that the most defining part of [H] reviews was the apples-to-apples comparison PLUS the best playable settings.

Frame gen does increase VRAM usage slightly, but from what I've seen, DLSS actually decreases VRAM usage, all things being equal, since it's rendering at a lower resolution.

DLSS and Frame Generation increase VRAM usage, so insufficient VRAM becomes an even bigger issue.
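The "rendering at a lower resolution" point can be put in rough numbers. The sketch below only estimates how many pixels are rendered internally before upscaling. The per-axis scale factors are commonly quoted values and are an assumption here, not something from the thread or the review, and it says nothing about textures or the upscaler's own buffers, which do not shrink with render resolution.

```python
# Assumed per-axis render scales for upscaler quality modes (illustrative only).
ASSUMED_SCALES = {
    "native": 1.0,
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
}


def internal_resolution(out_w, out_h, scale):
    """Internal render resolution for a given output resolution and per-axis scale."""
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(out_w, out_h, scale):
    """Fraction of the output pixel count that is actually rendered."""
    w, h = internal_resolution(out_w, out_h, scale)
    return (w * h) / (out_w * out_h)


for mode, scale in ASSUMED_SCALES.items():
    w, h = internal_resolution(1920, 1080, scale)
    share = pixel_fraction(1920, 1080, scale)
    print(f"{mode}: renders {w}x{h}, {share:.0%} of the 1080p pixel count")
```

Only resolution-dependent buffers scale with that pixel fraction, so this is consistent with both claims in the exchange: upscaling can trim some usage while frame generation adds buffers of its own.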

I didn't post a screenshot of it, but it's also shown in the video.

8GB *60 class cards don't do well.

Looks like it actually does well. Maybe games are better able to utilize the cache?

That said, 5060ti should be 16GB only with the 5060 getting the 2 vram size options.

I'm really hoping the 5050(ti?) gets 96 bit gddr7 with 12GB. Should make for a great budget card.

https://www.eurogamer.net/digitalfo...ce-but-care-is-needed-with-8gb-graphics-cards

For measuring the impact of differences in memory I'd be more interested in texture pop-in comparisons.

Many a modern game engine adapts its memory usage to the amount available to prevent its performance from tanking.

I watched the review. I saw the slides with 1080p, 1440p and 4K, and I also saw how in the 16-game average test they only show results for 1440p and 4K, and how every single tech tuber has still refused to test the card at best playable settings at optimal resolutions to see how it performs. XX60 class cards with 8GB of memory DO NOT suffer the made-up bullshit issue of running out of VRAM when played at 1080p and medium settings. Period, full stop, and replying to me as if you were some smartass doesn't change that.

You inadvertently revealed to everyone that you didn't look at the review.

"1920X1080" in large text doesn't need to be translated from Chinese.

My point is relevant to the thread while your post is irrelevant to the discussion.

My 1080 can play most games at medium settings and 1080p... What's your point?

It really isn't. Being "mediocre" at 1080p is trash. At 4 generations newer I would expect a 60 series to perform better than that.

My point is relevant to the thread while your post is irrelevant to the discussion.

A lot of that has to do with game bloat. There are better, less demanding game experiences out there than Wutang: Monkey game on Ultra.

It really isn't. Being "mediocre" at 1080p is trash. At 4 generations newer I would expect a 60 series to perform better than that.

It is probably fine if you are at 1080p, but at 1440p the 10GB 3080 needed tuning due to running out of VRAM in CP 2077: Phantom Liberty, Far Cry 6 and several other games. It struggled so much with texture dropouts and lower-quality textures in Indiana Jones that pretty much any 12GB card would be superior. Basically the 3080 has been borderline for a few years now at 1440p, with some manual tuning needed in quite a few games due to lack of VRAM. The framerate dives on the 3080 aren't constant, more like insane stutters while clearing VRAM/cache, which would show up quite well in 1% and 0.1% lows.

It's pretty remarkable how well the 10GB 3080 is holding up though. I haven't seen an example where that card takes a dive from lack of VRAM outside forced scenarios where the card would be at 14 fps anyhow.

IMHO all the 50 series cards below the 5070Ti should be using GDDR6 and have a wider bus and more VRAM. GDDR6 is cheaper, so use a wider bus and put more of it on the card. A few more PCB traces and GPU pins don't cost much. The GPU and the RAM are most of the cost of a card.

The 5060 and 5060 Ti should be 12GB. The 5050 should be 8GB.

Sounds like that game is an extremely poorly optimized game.

Reviewers really need to review more than popular AAA and eSports games. A game like Atelier Yumia takes 8GB+ VRAM at 1080p. Basically unplayable on my 2070.

It is (as are most modern console games apparently). There's a thread on Steam about this, and it seems like nobody cares to cover the issue because it's Gust and not an EA or Activision.

Sounds like that game is an extremely poorly optimized game.

If you admit it's poorly optimized, why would you ever suggest reviewers use this game in their benchmarks?

It is (as are most modern console games apparently). There's a thread on Steam about this, and it seems like nobody cares to cover the issue because it's Gust and not an EA or Activision.