cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,091

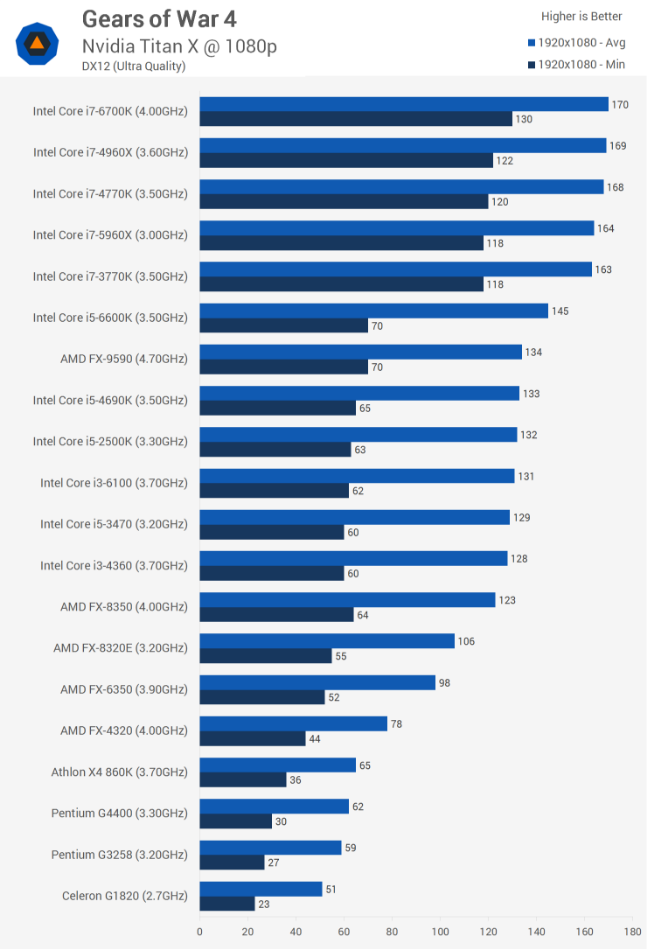

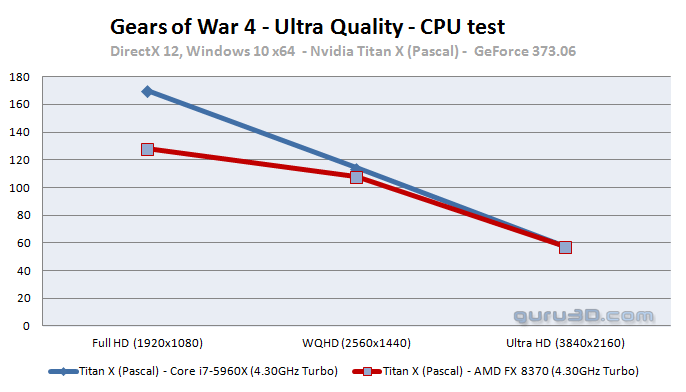

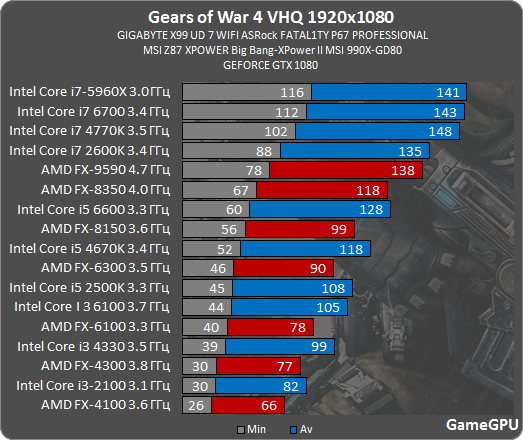

Since we haven't had a drama thread in a while, and there is a hurricane headed to flood my area, I figured why not post some i7-6700K vs FX-8370 benchmarks in Gears of War 4. It's the end of the world as we know it; might as well go out happy. Need a translator for these though. Well, I'm sure that y'all can read charts without a translator. 1080p, 1440p, and 4K. Go to their website for the chart.

Gears of War 4 mit DirectX 12 im Benchmark (Seite 3) [Gears of War 4 benchmarked with DirectX 12 (page 3)]

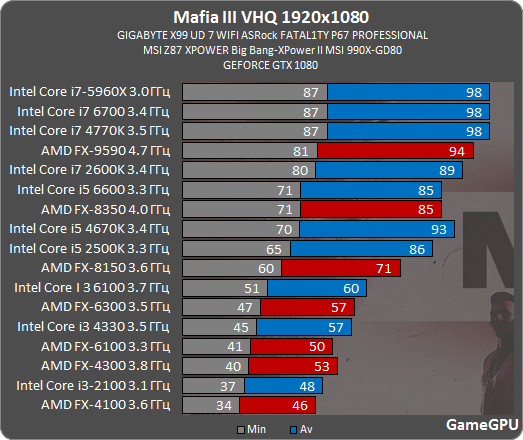

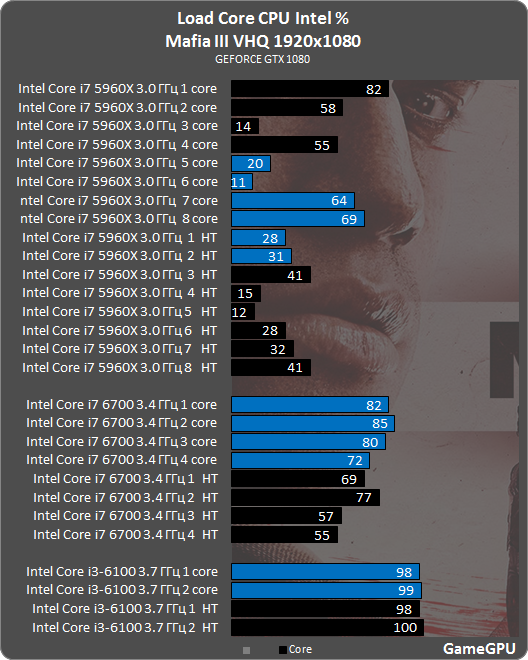

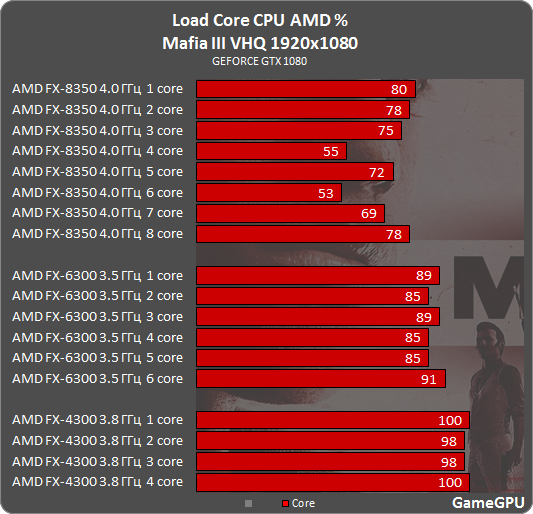

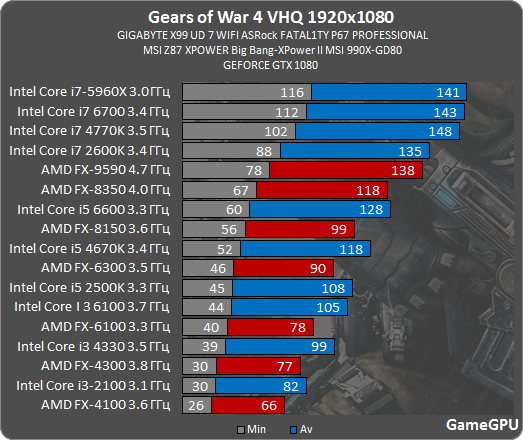

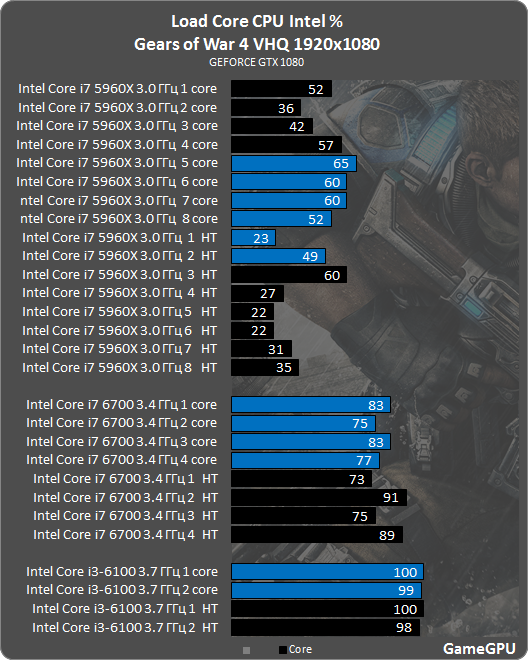

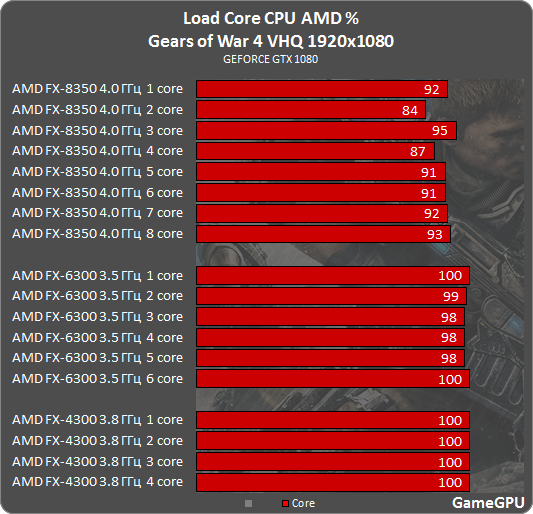

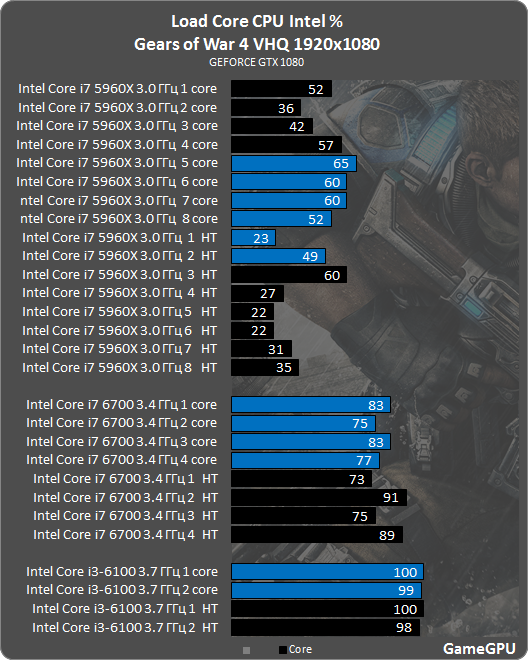

GameGPU did more testing and it seems that the game will use 16 threads! Those monster Intel processors finally get a workout. Not going to lie; that i7-5960X makes my heart all fluttery inside.

Gears of War 4 тест GPU | Action / FPS / TPS | Тест GPU [Gears of War 4 GPU test | Action / FPS / TPS | GPU test]

Anyway, have fun reading.

