Michaelius (Supreme [H]ardness; joined Sep 8, 2003; 4,684 messages)

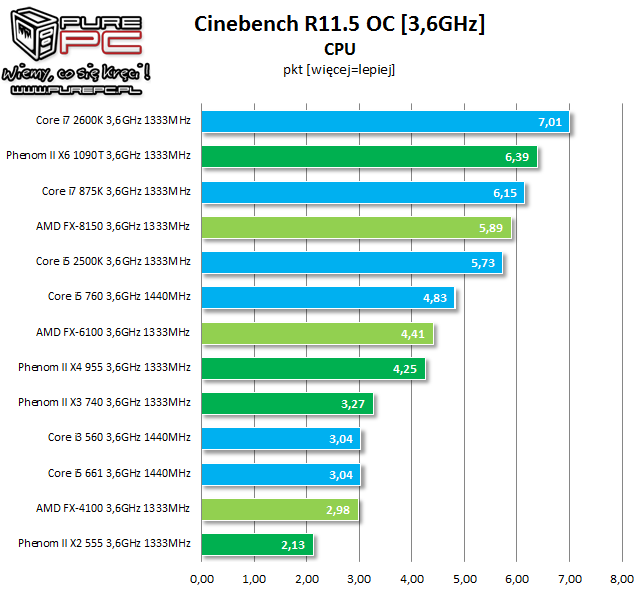

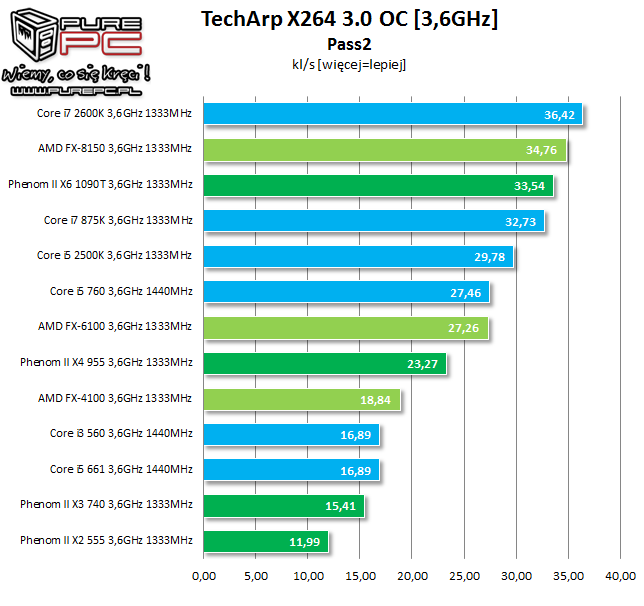

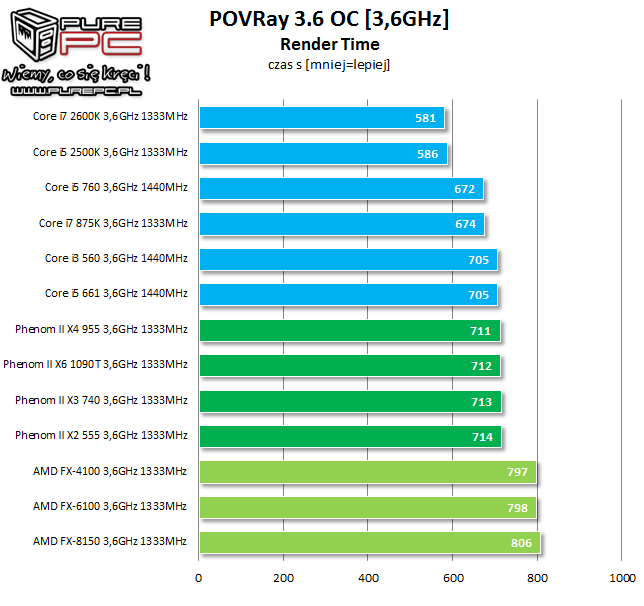

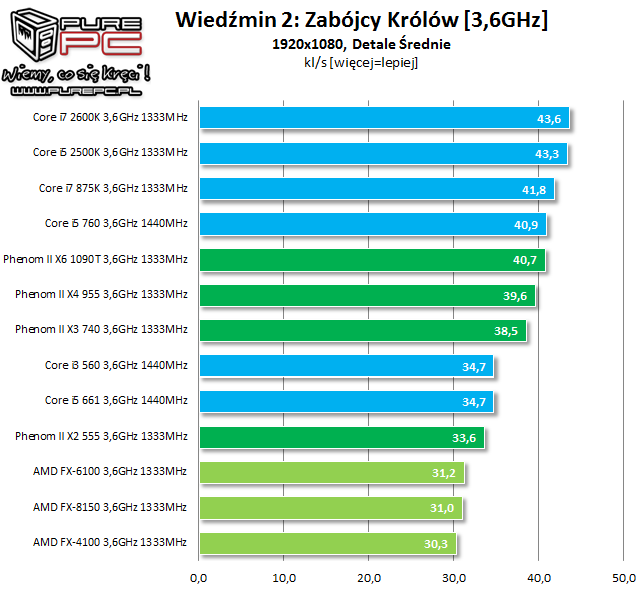

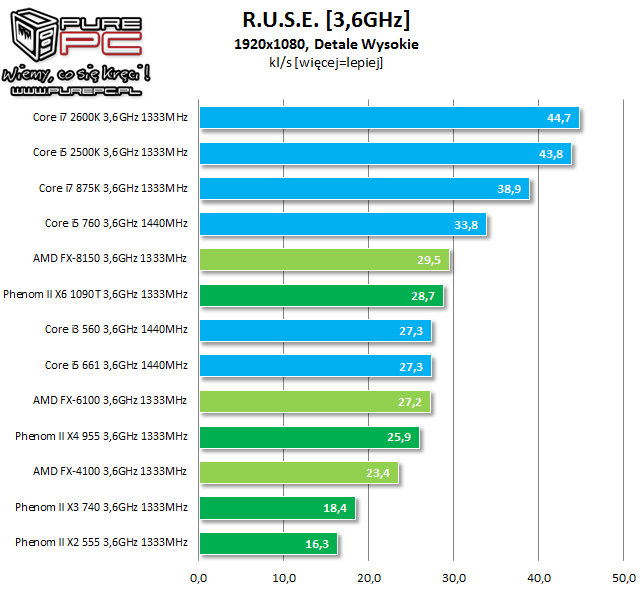

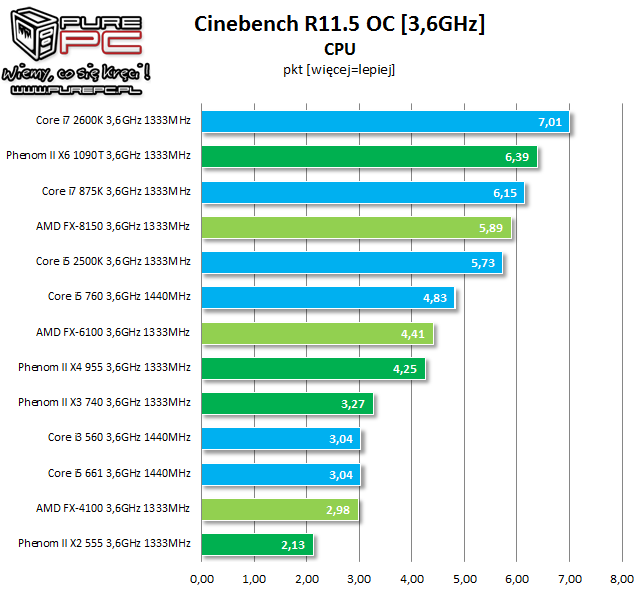

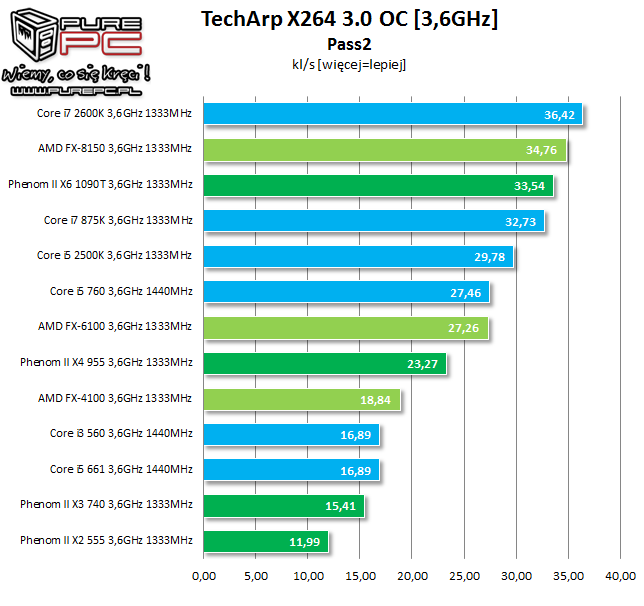

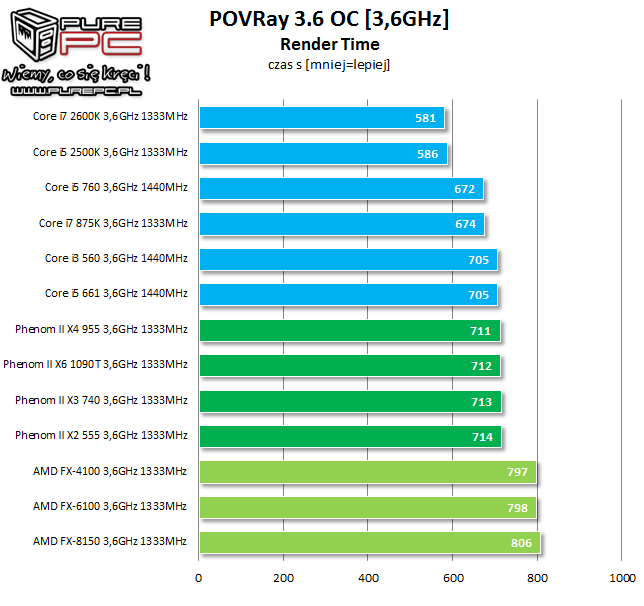

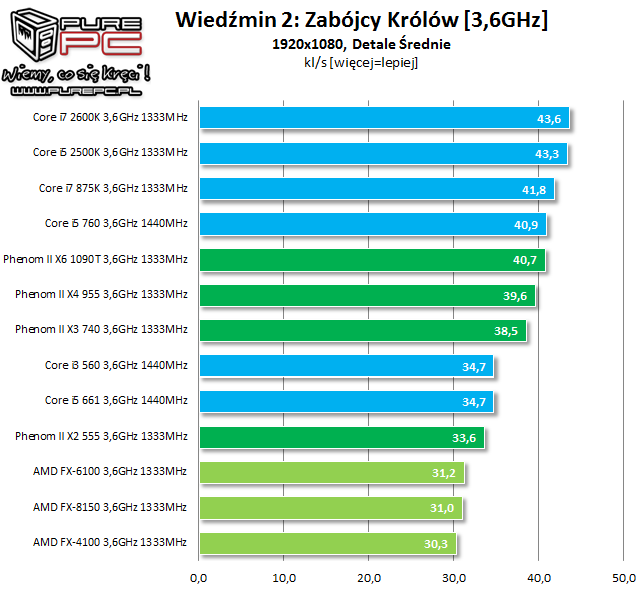

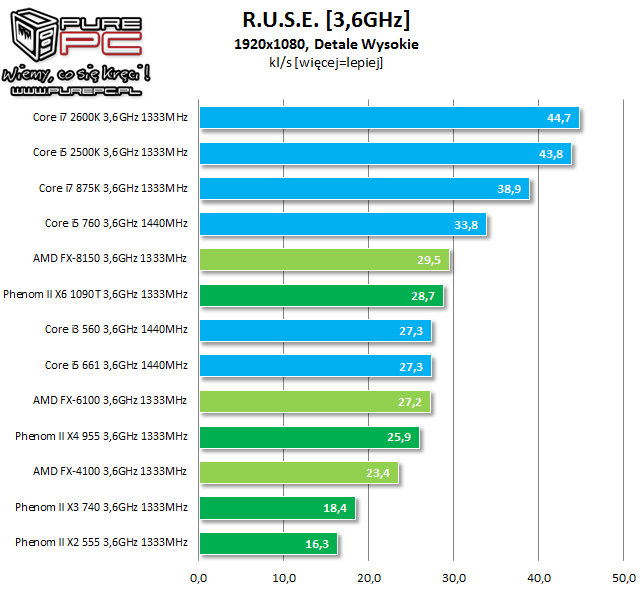

If anyone is interested, one of our Polish portals benchmarked the FX chips at the same clock speed to compare IPC:

http://www.purepc.pl/procesory/test_amd_fx8150_bulldozer_kontra_intel_sandy_bridge?page=0,11
