erek

[H]F Junkie

- Joined: Dec 19, 2005
- Messages: 10,987


Scope this out

Wow. Imagine crapping your pants because you actually won the auction and now you have to figure out where to put it... lol.

yea, more like the local Nukempowah plant, hehehe

Power Grid not included.

The 5.34-petaflops (Linpack) system was the 20th most powerful supercomputer in the world when it launched, but now would be considered slow and inefficient when compared to cutting edge systems.

The water-cooled supercomputer features SGI ICE XA modules, 28 racks, and 8,064 Intel E5-2697v4 CPUs (for a total of 145,152 cores). It uses DDR4-2400 ECC single-rank memory, 64 GB per node, with three High Memory E-Cells at 128 GB per node, for a total of 313,344 GB.

Two air-cooled management racks consist of 26 1U Servers (20 with 128 GB RAM, 6 with 256 GB RAM), 10 Extreme Switches, and 2 Extreme Switch power units. Each rack weighs 2500 lbs.

Fiber and CAT5/6 cabling are not included.
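The listing's numbers hang together, which is easy to check. This is a quick Python sketch, not anything from the auction page: it assumes 18 cores per E5-2697 v4 (Intel's published core count, and exactly what 145,152 / 8,064 works out to) and two CPUs per node, which the listing does not state. The count of 128 GB nodes is back-solved from the 313,344 GB total, not taken from the listing.

```python
# Sanity check of the Cheyenne listing figures.
# ASSUMPTIONS (not stated in the listing): 18 cores per E5-2697 v4, 2 CPUs per node.
CPUS = 8_064
CORES_PER_CPU = 18
CPUS_PER_NODE = 2
TOTAL_MEM_GB = 313_344
BASE_GB, HIGH_GB = 64, 128

cores = CPUS * CORES_PER_CPU       # 145,152, matches the listing
nodes = CPUS // CPUS_PER_NODE      # 4,032 under the 2-CPUs-per-node assumption

# If every node had 64 GB the total would be nodes * 64; whatever is left over
# must come from the extra 64 GB on each high-memory (128 GB) node.
extra_gb = TOTAL_MEM_GB - nodes * BASE_GB
high_mem_nodes = extra_gb // (HIGH_GB - BASE_GB)

# Management racks: 20 servers with 128 GB plus 6 servers with 256 GB.
mgmt_gb = 20 * 128 + 6 * 256

print(f"cores={cores:,} nodes={nodes:,} high_mem_nodes={high_mem_nodes} mgmt_gb={mgmt_gb:,}")
# prints: cores=145,152 nodes=4,032 high_mem_nodes=864 mgmt_gb=4,096
```

Under those assumptions, 864 of the 4,032 nodes would be high-memory nodes, or 288 per E-Cell if the three E-Cells are equally populated.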

"The system is currently experiencing maintenance limitations due to faulty quick disconnects causing water spray," the auction listing notes.

That's one heck of a homelab setup.

Honestly, I would take this and put it in Northern Canada where cooling is cheap, and then build out a solar array grid to power it. If the solar grid and the auction are cheap enough, it may be the biggest bang for the buck ever, considering there would be nearly zero OpEx to keep it running.

Oh yeah, and I would need to find a sunny and cold part of Canada, lol.

You're gonna need to find a good auction source for your solar array... 1.7 MW is a lot of power.

True, but how much extra do you think it would be? If cloud computing vendors can do it, why not a datacenter? Even if you have to build it out, I would think the ROI would be there.

Free cooling is nice, but extra latency to where the people are is kind of meh.

And access to major existing networking. (Which is self-reinforcing, but oh well.)

That would be great! I see 80 ms to go from CA to AL.

You're looking at probably 20-30 ms to go to Canada from the southern parts of the US. If it matters a lot or not, I dunno. Cloud vendors have stuff in Canada, but they've also got stuff all over the place. No big deal to have a couple datacenters in Canada when you have like 10 in the US. GCP has one in Las Vegas which tends to have worse latency to everywhere else; even people in Las Vegas are likely to get lower latency to Los Angeles or Salt Lake City. I've seen data!

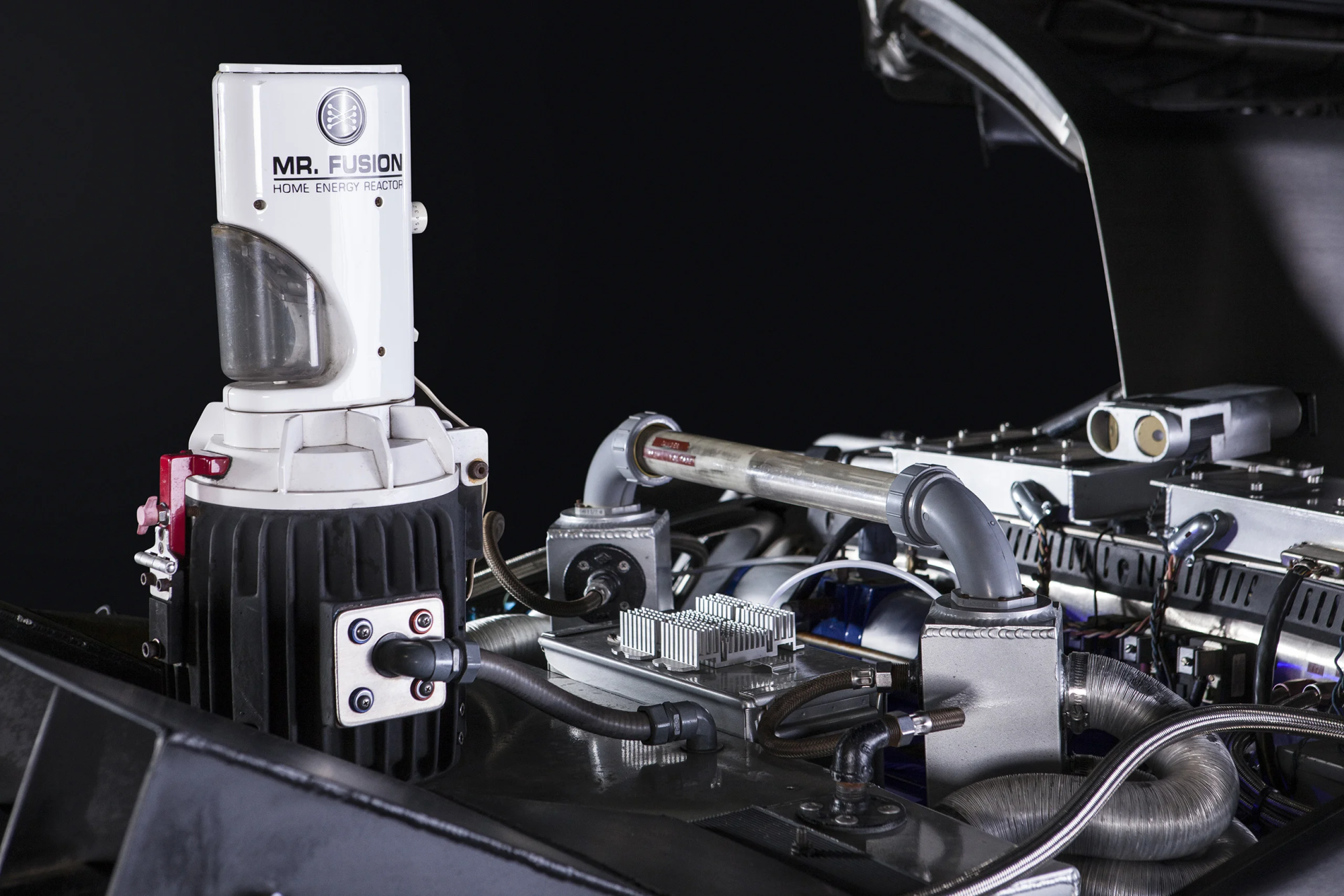

Awesome and highly underrated movie!

Why go big when you can just borrow a bit of plutonium and a science fair project?


There's a MacGyver episode with a similar plot. Equally awesome to watch.

Someone ran Crysis in software render mode with a Threadripper, so there's hope!

Does it play Crysis?

Walked away? More like trucked away. I can only imagine the cost to transport all of that. I am sure it will resemble something like what Elon did with Twitter's data center in 2022.

UCAR says that Cheyenne was originally slated to be replaced after five years, but the COVID-19 pandemic severely disrupted supply chains, and it clocked in two extra years in its tour of duty. The auction page says that Cheyenne recently experienced maintenance limitations due to faulty quick disconnects in its cooling system. As a result, approximately 1 percent of the compute nodes have failed, primarily due to ECC errors in the DIMMs. Given the expense and downtime associated with repairs, the decision was made to auction off the components.

The transportation costs are going to be absurdly high. I've bought display cabinets at auction where the transport was priced higher than the cabinet itself. This is specialty tech gear.

It'll tell you which nodes - if you bother to hook it all back up again.

I hope they tell the winner which ones those were, because that sounds like an absolute bloody nightmare to try to find that in this cluster.

As far as the price, $480k... well, I looked up these chips on eBay, and it looks like they're selling for about $40-50 apiece? They just got about 8,000 of them (8,064). 1% might be broken (?), so ~7,983 chips operational. 7,983 × $50 = $399,150. Then there are all of the cabinets, motherboards, and ECC RAM, so overall, a decent price. Crazy how much tech depreciates over time. I wonder how this thing would hold up against a cluster of Nvidia AI chips (with some random modern CPU) that cost the same amount today.
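The back-of-the-envelope resale math in that post, as a small Python sketch. The $40-50 eBay price and the 1% failure rate come from the thread; applying the 1% node failure rate directly to CPUs is a simplifying assumption, and `cpu_resale_estimate` is a hypothetical helper written for this illustration. It ignores eBay fees, shipping, and the price collapse from dumping ~8,000 chips on the market at once.

```python
import math


def cpu_resale_estimate(cpus: int, failure_rate: float, price_each: float) -> float:
    """Rough resale value of the working CPUs only.

    ASSUMPTION: the node failure rate applies 1:1 to CPUs.
    Ignores marketplace fees, shipping, and market saturation.
    """
    # Round down: you can't sell a fraction of a chip.
    working = math.floor(cpus * (1 - failure_rate))
    return working * price_each


CPUS = 8_064
for price in (40.0, 50.0):
    value = cpu_resale_estimate(CPUS, 0.01, price)
    print(f"${price:.0f}/chip -> ${value:,.0f}")
# prints:
# $40/chip -> $319,320
# $50/chip -> $399,150
```

Even the optimistic $50 case lands below the $480k price, so the cabinets, boards, and RAM have to make up the difference for a flip to pay off.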

The problem is that even if they intend to just flip it, how the hell do you get rid of 8k chips on eBay without the price for them just deflating to almost nothing? Hell, I'd be on board for grabbing some Xeons and ECC RAM on the (really) cheap if they do piece it out lol.


In that case, hard pass.

Power Grid not included.