Andrew_Carr

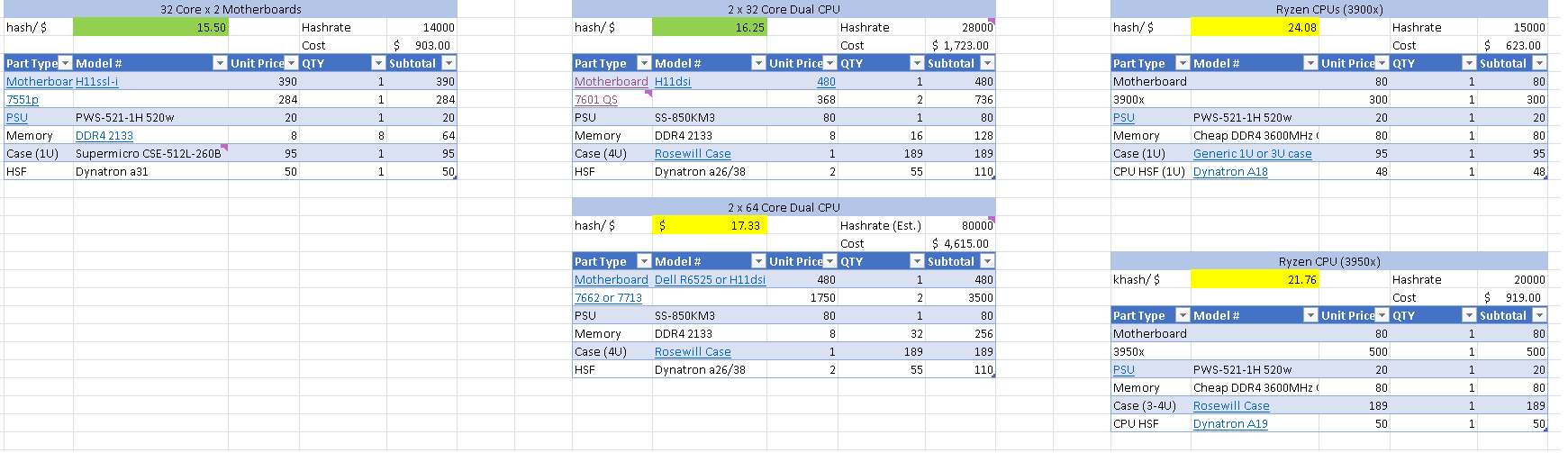

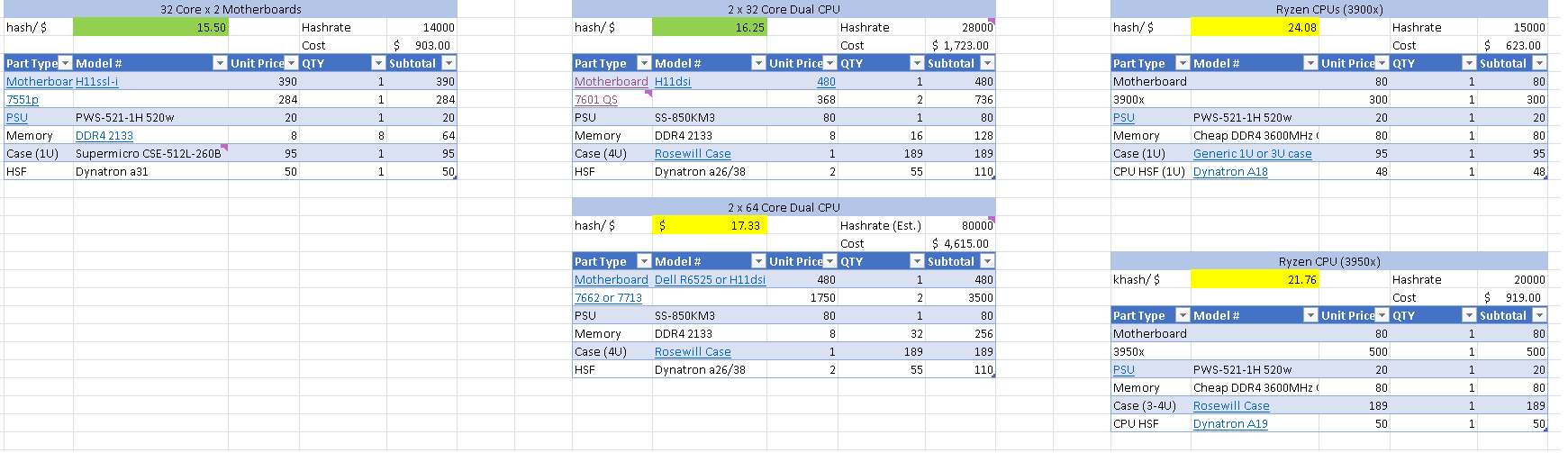

I thought I'd share my results from exploring sketchy eBay & Alibaba listings in search of higher CPU hashrates in RandomX at a low cost. (These results are basically the same for Raptoreum, except you can save money by using slower memory / fewer channels.) I narrowed things down to a few viable routes:

1.) Single CPU Consumer Builds (AMD Ryzen 3xxx series) - Generally 3900x CPUs are the best in this area. I buy cheap RAM between 3200MHz and 3600MHz in bulk whenever possible ($20-40 / stick).

3950x CPUs are usually too expensive to justify since it's a roughly 30% performance increase for a price increase of well over 50% ($300 vs $500ish). Additionally, you'll need to buy an aftermarket cooler for the 3950x instead of just using a stock Wraith Prism on the 3900x. Going lower than the 3900x, the costs of things like the motherboard, case, PSU, etc. eat up too much of the budget.
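To put that trade-off in numbers, here is a quick sketch using only the rough figures above: $300 for the 3900x, around $500 for the 3950x, and a roughly 30% performance gain. It looks at CPU price alone; the rest of the platform and the extra cooler are left out, so treat it as a rough comparison rather than a full build cost.

```python
# Relative performance per CPU dollar, 3900x vs 3950x.
# Prices and the ~30% uplift are the rough figures quoted in this post.
cpus = {
    "3900x": {"price_usd": 300, "relative_perf": 1.00},
    "3950x": {"price_usd": 500, "relative_perf": 1.30},
}

def perf_per_dollar(cpu):
    """Relative performance divided by CPU price."""
    return cpu["relative_perf"] / cpu["price_usd"]

baseline = perf_per_dollar(cpus["3900x"])
for name, cpu in cpus.items():
    ratio = perf_per_dollar(cpu) / baseline
    print(f"{name}: {ratio:.2f}x the 3900x's performance per CPU dollar")

price_increase = cpus["3950x"]["price_usd"] / cpus["3900x"]["price_usd"] - 1
print(f"Price increase: {price_increase:.0%}")
```

With these inputs the 3950x lands at about three quarters of the 3900x's performance per CPU dollar, before the aftermarket cooler is counted.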

On all of these consumer builds you'll need to tweak memory settings to lower your timings, and this can be time-consuming. Because of this, I would go with a single type of memory and motherboard so you can save time and (hopefully) reuse timings as much as possible.

----Server Builds----

2.) Single CPU Server Builds - The CPUs for these are generally the P-type Epyc 7001/7002 series CPUs, and because they can only be used in a single CPU configuration they're usually cheaper for the same # of cores vs a dual-socket capable CPU. I've had good luck with the 7551P CPUs. The lesson learned here, though, is that despite the cheaper motherboards and cheaper CPUs, you actually end up spending more for the same hashrate because you're buying double the number of PSUs, cases, motherboards, etc. It's not a massive difference, so if you find really good prices on P CPUs it can be worthwhile.
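A minimal sketch of why the per-box overhead matters. Every price here is a hypothetical placeholder picked only to show the shape of the calculation, not a quote from my builds; the 15 kH/s per CPU is the middle of the 14-16 kH/s per 32 core Epyc range I report further down. Plug in whatever prices you actually find.

```python
# Cost per kH/s: a single-socket box vs a dual-socket box.
# ALL prices below are hypothetical placeholders, not quotes from the post.
# Memory is left out because each socket needs the same number of DIMMs either way.
KHS_PER_CPU = 15  # middle of the 14-16 kH/s per 32-core Epyc reported in this post

def cost_per_khs(cpu_price, sockets, board_price, chassis_price):
    """Total cost of one box divided by its expected hashrate in kH/s."""
    total_cost = cpu_price * sockets + board_price + chassis_price
    return total_cost / (KHS_PER_CPU * sockets)

# Hypothetical: cheaper P-series CPU and board, but one PSU/case per CPU.
single = cost_per_khs(cpu_price=200, sockets=1, board_price=300, chassis_price=150)
# Hypothetical: pricier CPUs and board, but the PSU/case is shared by two CPUs.
dual = cost_per_khs(cpu_price=250, sockets=2, board_price=450, chassis_price=200)

print(f"single-socket: ${single:.2f} per kH/s")
print(f"dual-socket:   ${dual:.2f} per kH/s")
```

Under these made-up prices the dual-socket box comes out cheaper per kH/s even though each CPU and the board cost more, because the case and PSU are split across two CPUs.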

3.) Dual CPU Server Builds - These generally aren't much more complicated to set up than a single CPU build and are a better investment. I generally jump to 4U cases at this point, though, because of the larger coolers and motherboards, and sometimes put in an oversized ATX PSU so I have the option of running a few GPUs as well. I haven't had good luck running the 1U Dynatron coolers on my 7601 CPUs for whatever reason (could be user error), but temps with their larger A26 or A38 heatsinks are great.

Another odd thing I didn't expect is that 64 core options are also generally the most cost effective. Despite costing 4x as much per CPU, again you're saving a lot of money on fewer motherboards and DIMMs. Power efficiency should also be better here and space savings will also add up. I've had a hard time finding good deals on 64 core CPUs though: while the 32 core options are plentiful and keep dropping in price, the 64 core CPUs have remained steady for a while. So if the 64 core Epycs drop to around $1k each as I hope they will, I think these would be the best option by far. (This is for QS/ES samples that will require tweaking. Retail versions are much more expensive.)

General server notes:

While you don't have to mess with memory timings here, server parts are more finicky in my experience. As an example, I installed some of my CPUs with too little mounting pressure and had instability that took a while to troubleshoot. Memory requirements are also something to be more aware of, although I haven't had any issues yet with memory (even when mixing different speeds, sizes, etc., it all clocked to the lowest stick as expected). I have generally targeted older Epyc CPUs and QS/ES samples. With the ZenStates and Rome overclocker tools from the ServeTheHome forums you can overclock these. Make sure you buy CPUs that aren't vendor locked, or if you come across a cheap Dell motherboard, snatch it up and buy the cheap vendor locked CPUs. The newer Epyc CPUs blow fuses when installed in certain vendors' motherboards, so there's no reverting that.

I've tried 2133MHz through 2666MHz memory in my Epyc builds and the performance loss is minimal (2-3kh/s loss on a 31kh/s machine), unlike with the Ryzen machines. So you can probably save money and buy 2133MHz memory @ $8/stick instead of the $20 or so per stick for 2666MHz stuff. And when it comes to a lot of other algorithms, that high memory speed is sometimes even less important. Generally the number of memory channels seriously impacts performance though, so you want enough DIMMs to populate every channel; go for low density 4-8GB DIMMs to save money. While Ryzen CPUs are limited to 2 memory channels, Epyc CPUs have 8 per socket, and this is a major difference. Also, while I spend about 50% more on memory for Epyc builds, it's very easy to spend a ton more on memory if you buy higher density stuff, so be cautious of that. You also want to go for 32 core CPUs and higher for the most part. The high cost of motherboards and memory makes it cost prohibitive to use the lower end parts even though those CPU prices are dirt cheap.
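Here's the memory trade-off as a quick calculation for a dual 32 core Epyc box, using the stick prices above and the roughly 28 vs 31 kH/s I measured with slow and fast memory. The DIMM count is an assumption: one stick per channel, 8 channels per socket, 2 sockets.

```python
# Memory cost vs hashrate for a dual-socket 32-core Epyc build.
# DIMM prices and hashrates are the approximate figures reported in this post.
# Assumption: one DIMM per channel, 8 channels per socket, 2 sockets.
DIMMS = 8 * 2

options = {
    "2133MHz": {"usd_per_dimm": 8, "khs": 28},
    "2666MHz": {"usd_per_dimm": 20, "khs": 31},
}

def memory_cost(option):
    """Total memory cost in USD for a fully populated box."""
    return option["usd_per_dimm"] * DIMMS

slow, fast = options["2133MHz"], options["2666MHz"]
extra_cost = memory_cost(fast) - memory_cost(slow)
extra_khs = fast["khs"] - slow["khs"]

for name, option in options.items():
    print(f"{name}: ${memory_cost(option)} of memory for {option['khs']} kH/s")
print(f"Faster memory costs ${extra_cost} more for {extra_khs} kH/s extra, "
      f"or ${extra_cost / extra_khs:.0f} per additional kH/s")
```

Whether that extra cost per kH/s is worth it depends on what the rest of the build costs per kH/s, so compare it against your own total.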

Another major benefit to server motherboards is that you get IPMI for remote management. This lets you hard reboot your computer, view POST errors, etc. via a separate on-board processor. This reason alone makes me want to switch everything over to server motherboards and should also save you ~$20/computer versus buying smart plugs or something.
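As a rough illustration of the remote hard reboot, the sketch below builds an `ipmitool` command line from Python. It assumes `ipmitool` is installed and the board's BMC is reachable over the network; the host, user name, and password are hypothetical placeholders. This is just one common way to talk to IPMI, not necessarily what any particular board's web interface uses.

```python
import subprocess

def ipmi_command(host, user, password, *action):
    """Build an ipmitool argv for a BMC reachable over LAN (IPMI v2.0 'lanplus')."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password, *action]

def run_ipmi(host, user, password, *action):
    """Run the command and return its stdout; raises if ipmitool reports an error."""
    argv = ipmi_command(host, user, password, *action)
    return subprocess.run(argv, check=True, capture_output=True, text=True).stdout

if __name__ == "__main__":
    # Hypothetical BMC address and credentials; replace with your own.
    bmc = ("192.0.2.10", "admin", "changeme")
    # Only print the commands here; call run_ipmi(...) to actually execute them.
    print(" ".join(ipmi_command(*bmc, "chassis", "power", "status")))
    print(" ".join(ipmi_command(*bmc, "chassis", "power", "cycle")))  # hard reboot
```

Looping `run_ipmi` over a list of BMC addresses is an easy way to power cycle a hung machine without walking over to it.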

TLDR Numbers

The hashrate figures are actual hashrates for the 32 core builds (31khash on 2 x 32 core 7601 QS when using 2666MHz memory, about 28khash with slow memory). I get between 14-16khash/s per 32 core Epyc (7551P and 7601 ES perform very similarly). I still need to tweak my 3900x builds, but I put in 15khash for now as a conservative figure for an ideal build that doesn't require expensive memory. Once I start overclocking the Epycs I expect to get closer to 18-19khash per CPU (https://xmrig.com/benchmark?cpu=AMD+EPYC+7601+32-Core+Processor), which puts the dual CPU 32 core Epyc builds very close to Ryzens in terms of performance per dollar. On performance per watt, I don't think they'd pull ahead until I start using Zen 2 64 core CPUs. Also, I did end up with two duds in the 7601 QS samples that I bought. They're locked to 1.9GHz and only hash at half what the others do (the others are 1.4GHz QS samples and boost to 2.5GHz without any tweaking), so these will require overclocking to fix.

I find Naples much easier to overclock than Rome ES/QS (via the EDC bug), but Rome certainly is more efficient. I can rock 3.2 to 3.3GHz on all cores all day on DC, with a 32 core system pulling 220W to 240W off the wall depending on projects. Naples CPUs are so cheap nowadays, but the MB itself probably costs more than the CPU.
