Deleted member 88227

Guest

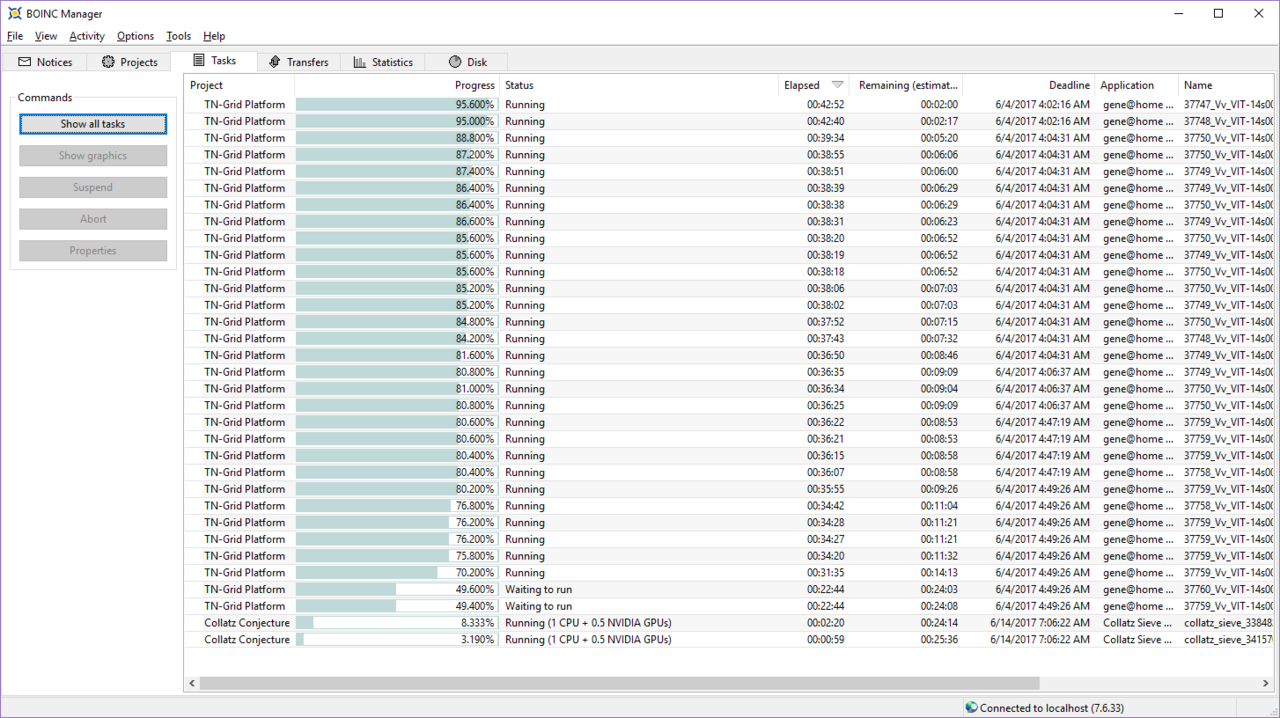

I already switched the main ones out. I might set up one of the single-GPU rigs with the #53 settings to see what happens.

How can I tell that they're all working? With so many different GPU types, my overall production might go up, but how can I tell whether one of the GPUs is still holding back further progress?