DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,956

> You could not pay me $10k to use that machine on a daily basis. It is absolutely embarrassing that a $1600 computer has a 1920x1200 screen with a garbage-tier touchpad and terrible battery life. And don't say "I'd just dock it" - what's the point in a laptop then? My MBP is excellent both on the go AND in a dock.

That display also does 144Hz, because it's a gaming laptop with an RTX 4060. Anyone who's seen a MacBook display in action knows that the response time is so bad it's like someone smeared petroleum jelly on it. You can nitpick that laptop all day long, but the reality is that the laptop's RTX 4060 has the same amount of RAM as a $1,600 MacBook. You want a better display? This Asus ROG has a 2560 × 1440 display that runs at 240Hz for $1,800. Half the RAM of the Lenovo with 1/4 of the storage, but also 4x the RAM of a MacBook and 8x the storage, while again not including the VRAM of the RTX 4070. It's just sad and pathetic the lengths you'll go to defend a company who charged you $7k for that 128GB MacBook you bought.

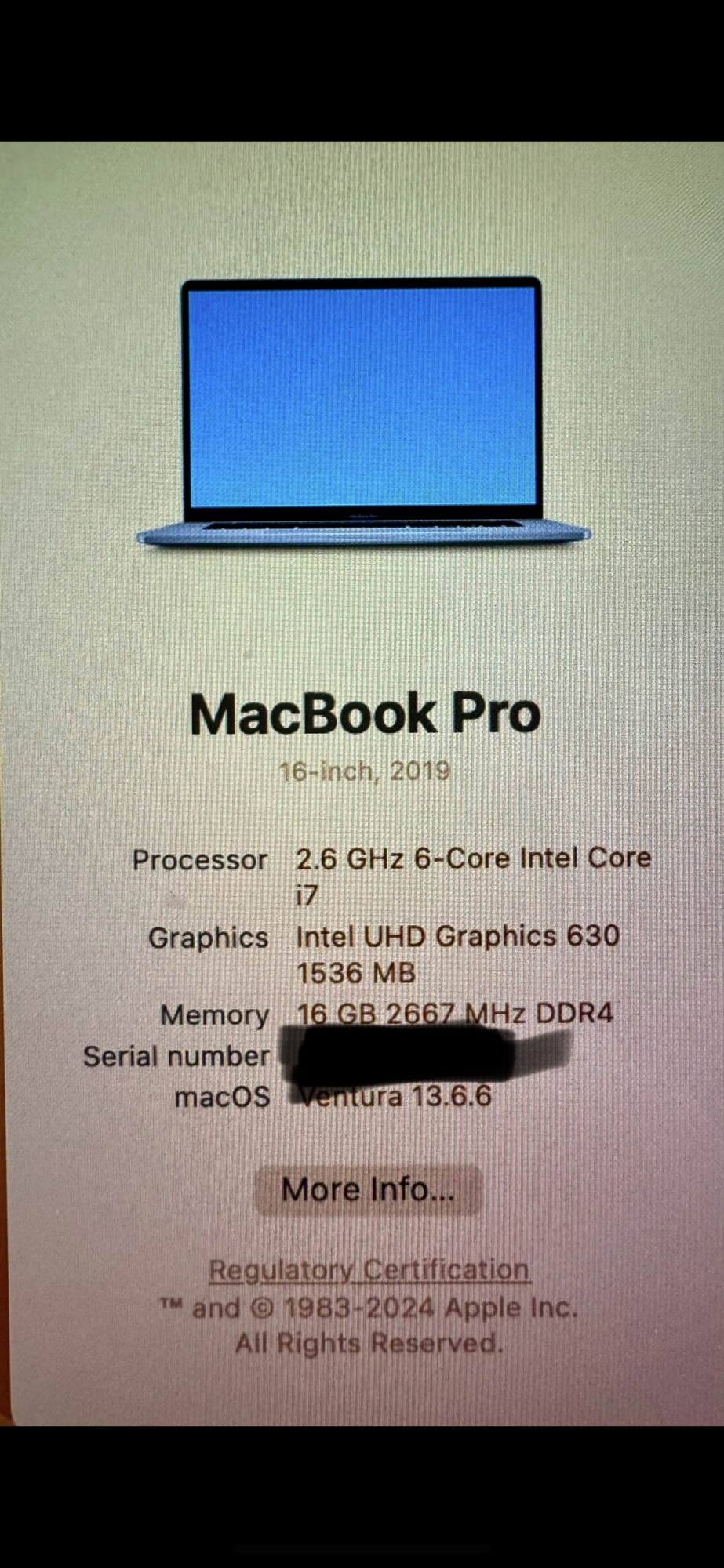

> That is garbage to me because I have a MacBook Pro, which I often run a mobile triple-screen setup off of on battery with an iPad Pro and an LG Gram.
>
> In this configuration, the resolution and screen quality available dwarf the PC, as does the battery life with two other devices sucking off battery. I was able to work the entire day off battery from a hotel bar with this configuration on my last road trip!

You carry 3 screens with you? Do they run off battery?

> The comparison with that Lenovo isn't straightforward. The LOQ has a lower resolution (if slightly higher refresh rate) and lower quality display, just one USB-C port (no surprises at the lack of Thunderbolt with AMD), build quality will undoubtedly be worse... you get the idea.

Laptops are never straightforward, and build quality from Apple is subjective when they had keyboardgate.

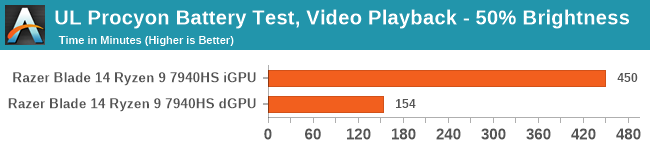

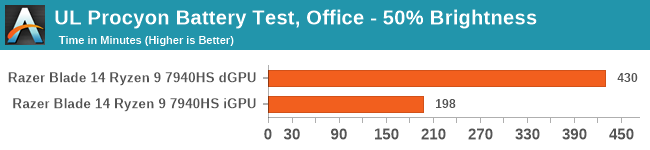

> Performance will be great for games and some pro apps while plugged in, of course, but it's going to tank while on battery and won't fare as well with some creative apps. I recently used an ASUS ROG Strix Scar 16 and that thing took a speed hit while unplugged (even with performance modes forced on). It also didn't last more than a few hours on battery when doing pedestrian tasks.

This is 2024, where even AMD laptops run several hours on battery. LukeTbk even linked you the info. Not sure what's in that Asus ROG Strix Scar you speak of, but as long as you're with AMD you don't have battery problems. This is like when AMD used to run hot while Intel didn't. After Ryzen the shoe is on the other foot, but it took a while before Intel fanboys agreed that AMD is now the cooler, less power-hungry CPU.

> That kind of RAM and SSD capacity is great for the money, but Lenovo is prioritizing different things than Apple. And while Apple is undoubtedly charging higher profit margins, I know I'd much rather have the MacBook Pro for audiovisual editing (provided I get a config with enough storage, at least) even with those memory and storage deficits.

How much work do you think you can do with 8GB of RAM? What exactly is Apple prioritizing with $1,600 laptops with 8GB of RAM? Other than the elephant in the room, which is that, as Darunion put it, the 8GB is meant to entice users to buy more expensive models. If Apple has to overhaul its entire Mac line, then that tells me things aren't going well for Apple's Mac lineup.
