erek

[H]F Junkie


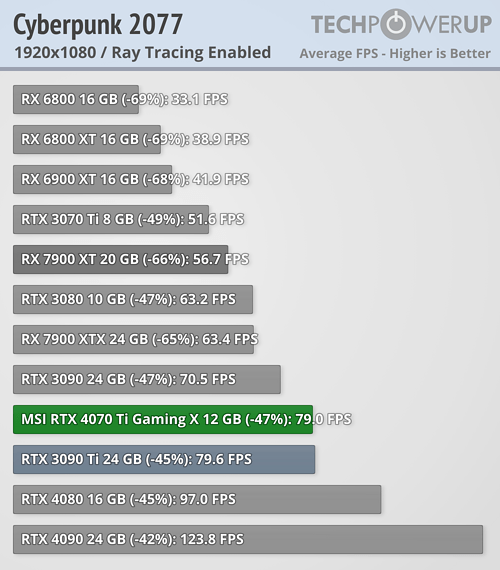

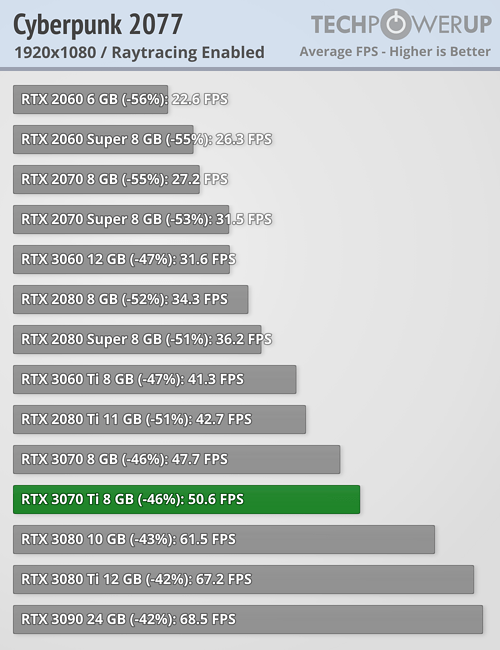

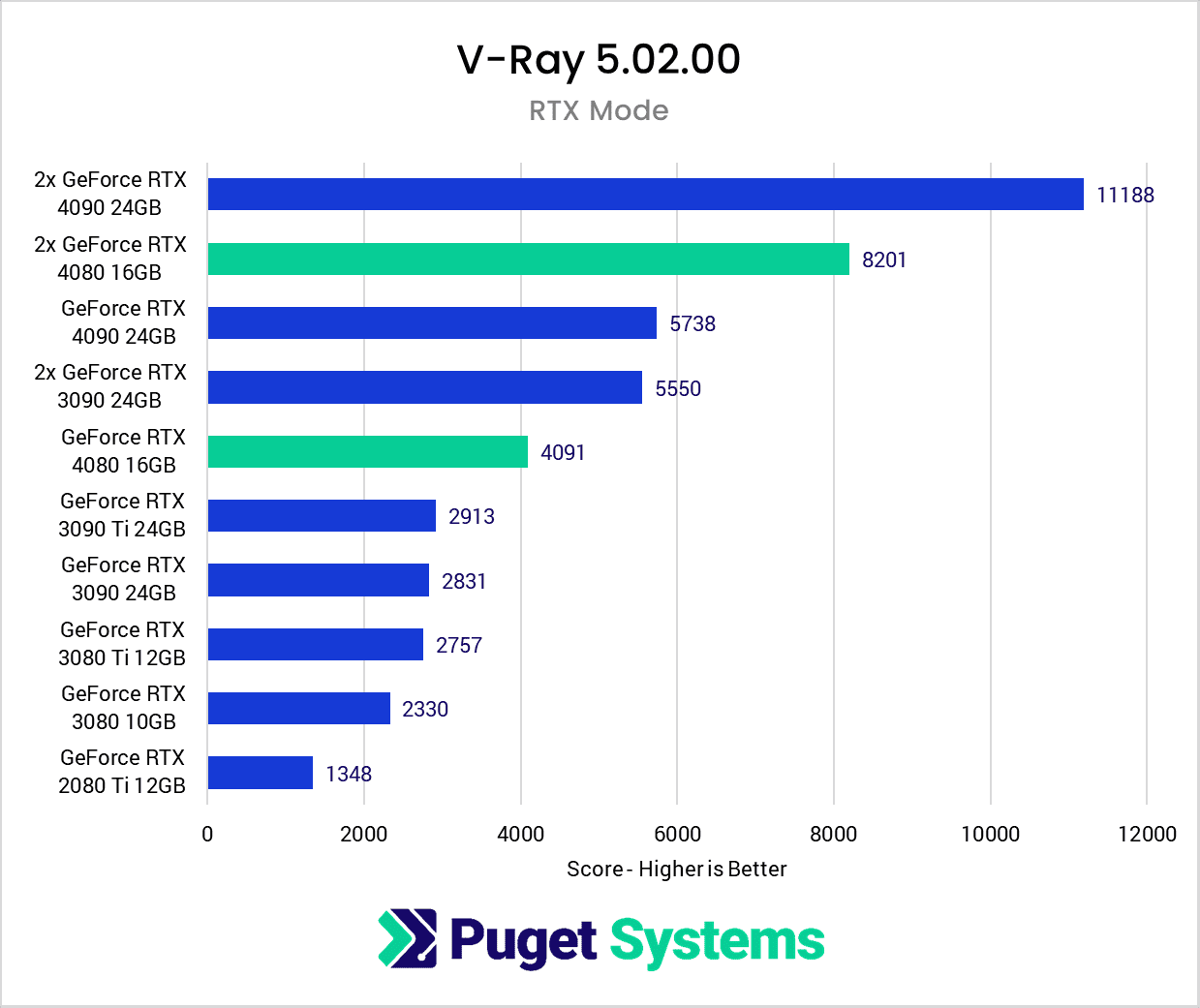

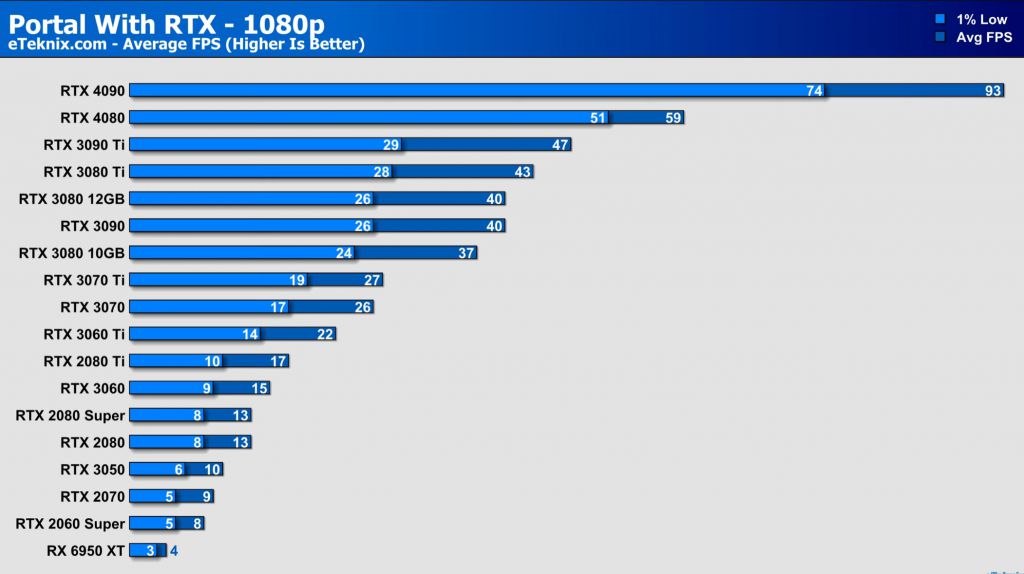

“What this could mean for a potential Radeon RX 8800 XT is that even though rasterized or standard rendering performance is not up to the level of the flagship Radeon RX 7900 XTX, ray-tracing performance could blow it out of the water. And that would be impressive.

It would still need to compete with NVIDIA's upcoming GeForce RTX 50 Series and Intel's next-gen Arc, codenamed Battlemage, both of which are expected to boost ray-tracing performance. Either way, it's great to see AMD getting serious about RT.”

Read more: https://www.tweaktown.com/news/9794...ure-brand-new-ray-tracing-hardware/index.html
