https://www.notebookcheck.net/AMD-i...spend-savings-on-other-PC-parts.700365.0.html

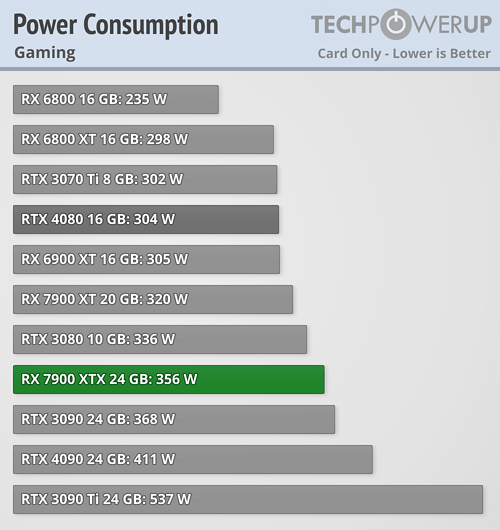

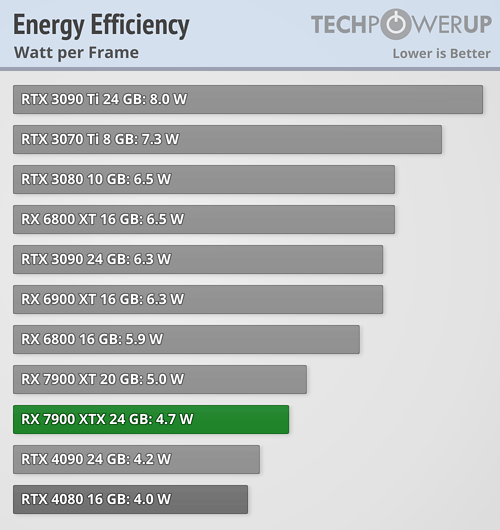

"AMD could have made an RTX 4090 competitor but chose not to in favor of a trade-off between price and competitive performance. AMD's rationale is that a 600 W card with a US$1,600 price tag is not a mainstream option for PC gamers and that the company always strived to price its flagship GPUs in the US$999 bracket. The company execs also explained why an MCM approach to the GPU die may not be feasible yet unlike what we've seen with CPUs."

They could have - but they didn't wanna.
