https://www.tomshardware.com/monito...resh-rate-needed-for-1080p-and-1440p-displays

Baby steps forward are still steps forward.

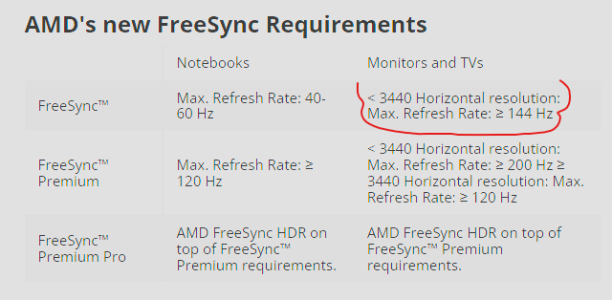

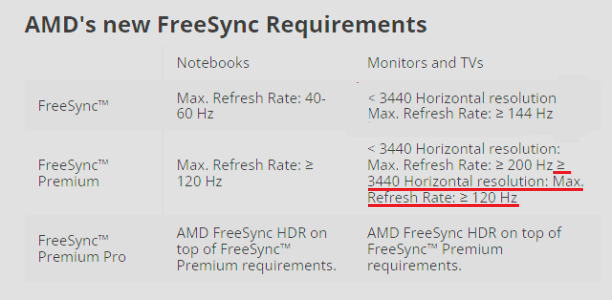

It’s good to see AMD addressing the low end of the display spectrum that has been a victim of the FreeSync labeling.
